George Vosselman

LDPoly: Latent Diffusion for Polygonal Road Outline Extraction in Large-Scale Topographic Mapping

Apr 29, 2025

Abstract:Polygonal road outline extraction from high-resolution aerial images is an important task in large-scale topographic mapping, where roads are represented as vectorized polygons, capturing essential geometric features with minimal vertex redundancy. Despite its importance, no existing method has been explicitly designed for this task. While polygonal building outline extraction has been extensively studied, the unique characteristics of roads, such as branching structures and topological connectivity, pose challenges to these methods. To address this gap, we introduce LDPoly, the first dedicated framework for extracting polygonal road outlines from high-resolution aerial images. Our method leverages a novel Dual-Latent Diffusion Model with a Channel-Embedded Fusion Module, enabling the model to simultaneously generate road masks and vertex heatmaps. A tailored polygonization method is then applied to obtain accurate vectorized road polygons with minimal vertex redundancy. We evaluate LDPoly on a new benchmark dataset, Map2ImLas, which contains detailed polygonal annotations for various topographic objects in several Dutch regions. Our experiments include both in-region and cross-region evaluations, with the latter designed to assess the model's generalization performance on unseen regions. Quantitative and qualitative results demonstrate that LDPoly outperforms state-of-the-art polygon extraction methods across various metrics, including pixel-level coverage, vertex efficiency, polygon regularity, and road connectivity. We also design two new metrics to assess polygon simplicity and boundary smoothness. Moreover, this work represents the first application of diffusion models for extracting precise vectorized object outlines without redundant vertices from remote-sensing imagery, paving the way for future advancements in this field.

Scale-wise Bidirectional Alignment Network for Referring Remote Sensing Image Segmentation

Jan 06, 2025

Abstract:The goal of referring remote sensing image segmentation (RRSIS) is to extract specific pixel-level regions within an aerial image via a natural language expression. Recent advancements, particularly Transformer-based fusion designs, have demonstrated remarkable progress in this domain. However, existing methods primarily focus on refining visual features using language-aware guidance during the cross-modal fusion stage, neglecting the complementary vision-to-language flow. This limitation often leads to irrelevant or suboptimal representations. In addition, the diverse spatial scales of ground objects in aerial images pose significant challenges to the visual perception capabilities of existing models when conditioned on textual inputs. In this paper, we propose an innovative framework called Scale-wise Bidirectional Alignment Network (SBANet) to address these challenges for RRSIS. Specifically, we design a Bidirectional Alignment Module (BAM) with learnable query tokens to selectively and effectively represent visual and linguistic features, emphasizing regions associated with key tokens. BAM is further enhanced with a dynamic feature selection block, designed to provide both macro- and micro-level visual features, preserving global context and local details to facilitate more effective cross-modal interaction. Furthermore, SBANet incorporates a text-conditioned channel and spatial aggregator to bridge the gap between the encoder and decoder, enhancing cross-scale information exchange in complex aerial scenarios. Extensive experiments demonstrate that our proposed method achieves superior performance in comparison to previous state-of-the-art methods on the RRSIS-D and RefSegRS datasets, both quantitatively and qualitatively. The code will be released after publication.

PolyR-CNN: R-CNN for end-to-end polygonal building outline extraction

Jul 20, 2024

Abstract:Polygonal building outline extraction has been a research focus in recent years. Most existing methods have addressed this challenging task by decomposing it into several subtasks and employing carefully designed architectures. Despite their accuracy, such pipelines often introduce inefficiencies during training and inference. This paper presents an end-to-end framework, denoted as PolyR-CNN, which offers an efficient and fully integrated approach to predict vectorized building polygons and bounding boxes directly from remotely sensed images. Notably, PolyR-CNN leverages solely the features of the Region of Interest (RoI) for the prediction, thereby mitigating the necessity for complex designs. Furthermore, we propose a novel scheme with PolyR-CNN to extract detailed outline information from polygon vertex coordinates, termed vertex proposal feature, to guide the RoI features to predict more regular buildings. PolyR-CNN demonstrates the capacity to deal with buildings with holes through a simple post-processing method on the Inria dataset. Comprehensive experiments conducted on the CrowdAI dataset show that PolyR-CNN achieves competitive accuracy compared to state-of-the-art methods while significantly improving computational efficiency, i.e., achieving 79.2 Average Precision (AP), exhibiting a 15.9 AP gain and operating 2.5 times faster and four times lighter than the well-established end-to-end method PolyWorld. Replacing the backbone with a simple ResNet-50, PolyR-CNN maintains a 71.1 AP while running four times faster than PolyWorld.

RoIPoly: Vectorized Building Outline Extraction Using Vertex and Logit Embeddings

Jul 20, 2024

Abstract:Polygonal building outlines are crucial for geographic and cartographic applications. The existing approaches for outline extraction from aerial or satellite imagery are typically decomposed into subtasks, e.g., building masking and vectorization, or treat this task as a sequence-to-sequence prediction of ordered vertices. The former lacks efficiency, and the latter often generates redundant vertices, both resulting in suboptimal performance. To handle these issues, we propose a novel Region-of-Interest (RoI) query-based approach called RoIPoly. Specifically, we formulate each vertex as a query and constrain the query attention on the most relevant regions of a potential building, yielding reduced computational overhead and more efficient vertex level interaction. Moreover, we introduce a novel learnable logit embedding to facilitate vertex classification on the attention map; thus, no post-processing is needed for redundant vertex removal. We evaluated our method on the vectorized building outline extraction dataset CrowdAI and the 2D floorplan reconstruction dataset Structured3D. On the CrowdAI dataset, RoIPoly with a ResNet50 backbone outperforms existing methods with the same or better backbones on most MS-COCO metrics, especially on small buildings, and achieves competitive results in polygon quality and vertex redundancy without any post-processing. On the Structured3D dataset, our method achieves the second-best performance on most metrics among existing methods dedicated to 2D floorplan reconstruction, demonstrating our cross-domain generalization capability. The code will be released upon acceptance of this paper.

Learning from Exemplars for Interactive Image Segmentation

Jun 17, 2024

Abstract:Interactive image segmentation enables users to interact minimally with a machine, facilitating the gradual refinement of the segmentation mask for a target of interest. Previous studies have demonstrated impressive performance in extracting a single target mask through interactive segmentation. However, the information cues of previously interacted objects have been overlooked in the existing methods, which can be further explored to speed up interactive segmentation for multiple targets in the same category. To this end, we introduce novel interactive segmentation frameworks for both a single object and multiple objects in the same category. Specifically, our model leverages transformer backbones to extract interaction-focused visual features from the image and the interactions to obtain a satisfactory mask of a target as an exemplar. For multiple objects, we propose an exemplar-informed module to enhance the learning of similarities among the objects of the target category. To combine attended features from different modules, we incorporate cross-attention blocks followed by a feature fusion module. Experiments conducted on mainstream benchmarks demonstrate that our models achieve superior performance compared to previous methods. Particularly, our model reduces users' labor by around 15%, requiring two fewer clicks to achieve target IoUs of 85% and 90%. The results highlight our models' potential as a flexible and practical annotation tool. The source code will be released after publication.

Compositional 3D Scene Synthesis with Scene Graph Guided Layout-Shape Generation

Mar 19, 2024

Abstract:Compositional 3D scene synthesis has diverse applications across a spectrum of industries such as robotics, films, and video games, as it closely mirrors the complexity of real-world multi-object environments. Early works typically employ shape-retrieval-based frameworks, which naturally suffer from limited shape diversity. Recent progress has been made in shape generation with powerful generative models, such as diffusion models, which increase the shape fidelity. However, these approaches treat 3D shape generation and layout generation separately. The synthesized scenes are usually hampered by layout collision, which implies that the scene-level fidelity is still under-explored. In this paper, we aim at generating realistic and reasonable 3D scenes from scene graphs. To enrich the representation capability of the given scene graph inputs, a large language model is utilized to explicitly aggregate the global graph features with local relationship features. With a unified graph convolution network (GCN), graph features are extracted from scene graphs updated via joint layout-shape distribution. During scene generation, an IoU-based regularization loss is introduced to constrain the predicted 3D layouts. Benchmarked on the SG-FRONT dataset, our method achieves better 3D scene synthesis, especially in terms of scene-level fidelity. The source code will be released after publication.

Convincing Rationales for Visual Question Answering Reasoning

Feb 06, 2024

Abstract:Visual Question Answering (VQA) is a challenging task of predicting the answer to a question about the content of an image. It requires deep understanding of both the textual question and visual image. Prior works directly evaluate the answering models by simply calculating the accuracy of the predicted answers. However, the inner reasoning behind the prediction is disregarded in such a "black box" system, and we do not even know if one can trust the predictions. In some cases, the models still get the correct answers even when they focus on irrelevant visual regions or textual tokens, which makes the models unreliable and illogical. To generate both visual and textual rationales next to the predicted answer to the given image/question pair, we propose Convincing Rationales for VQA, CRVQA. Considering the extra annotations brought by the new outputs, CRVQA is trained and evaluated by samples converted from some existing VQA datasets and their visual labels. The extensive experiments demonstrate that the visual and textual rationales support the prediction of the answers, and further improve the accuracy. Furthermore, CRVQA achieves competitive performance on generic VQA datasets in the zero-shot evaluation setting. The dataset and source code will be released under https://github.com/lik1996/CRVQA2024.

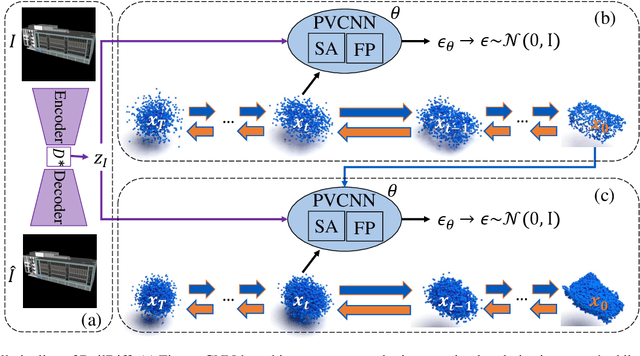

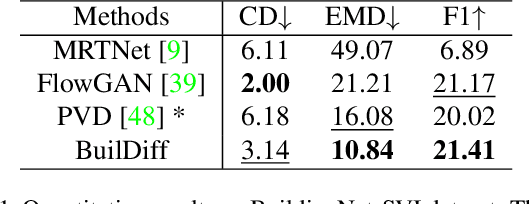

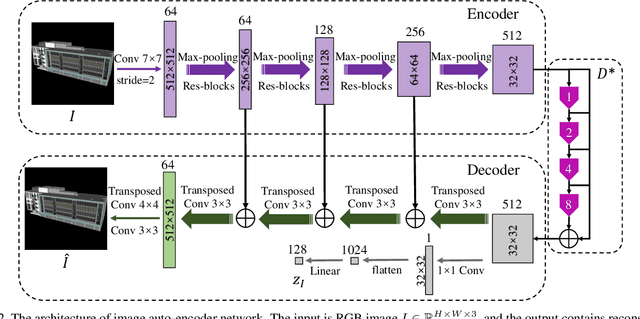

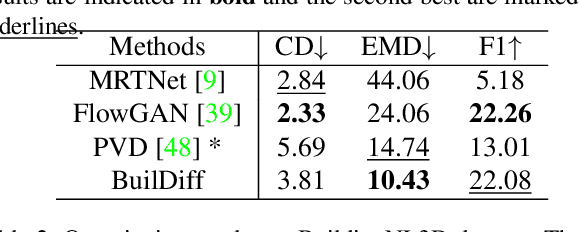

BuilDiff: 3D Building Shape Generation using Single-Image Conditional Point Cloud Diffusion Models

Aug 31, 2023

Abstract:3D building generation with low data acquisition costs, such as single image-to-3D, is becoming increasingly important. However, most of the existing single image-to-3D building creation works are restricted to images with specific viewing angles; hence they are difficult to scale to the general-view images that commonly appear in practical cases. To fill this gap, we propose a novel 3D building shape generation method exploiting point cloud diffusion models with image conditioning schemes, which demonstrates flexibility to the input images. By making two conditional diffusion models cooperate and introducing a regularization strategy during the denoising process, our method is able to synthesize building roofs while maintaining the overall structures. We validate our framework on two newly built datasets, and extensive experiments show that our method outperforms previous works in terms of building generation quality.

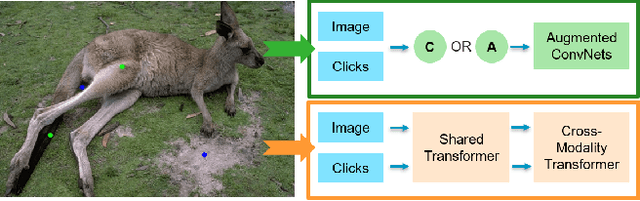

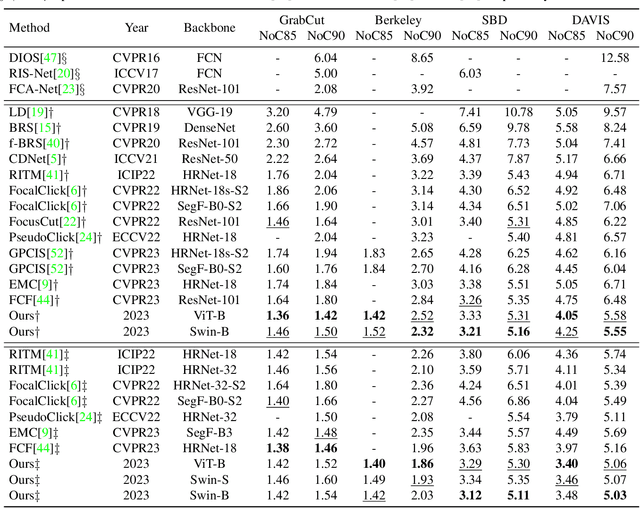

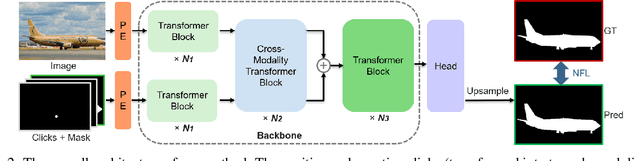

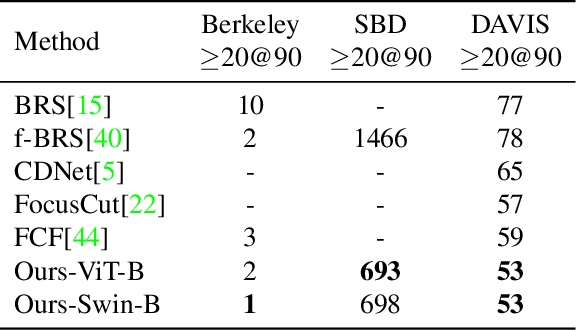

Interactive Image Segmentation with Cross-Modality Vision Transformers

Jul 05, 2023

Abstract:Interactive image segmentation aims to segment the target from the background with manual guidance, taking as input multimodal data such as images, clicks, scribbles, and bounding boxes. Recently, vision transformers have achieved great success in several downstream visual tasks, and a few efforts have been made to bring this powerful architecture to the interactive segmentation task. However, the previous works neglect the relations between the two modalities and directly mimic the way purely visual information is processed with self-attention. In this paper, we propose a simple yet effective network for click-based interactive segmentation with cross-modality vision transformers. Cross-modality transformers exploit mutual information to better guide the learning process. The experiments on several benchmarks show that the proposed method achieves superior performance in comparison to the previous state-of-the-art models. The stability of our method in terms of avoiding failure cases shows its potential to be a practical annotation tool. The code and pretrained models will be released under https://github.com/lik1996/iCMFormer.

Channel-Aware Distillation Transformer for Depth Estimation on Nano Drones

Mar 18, 2023

Abstract:Autonomous navigation of drones using computer vision has achieved promising performance. Nano-sized drones based on edge computing platforms are lightweight, flexible, and cheap, thus suitable for exploring narrow spaces. However, due to their extremely limited computing power and storage, vision algorithms designed for high-performance GPU platforms cannot be used for nano drones. To address this issue, this paper presents a lightweight CNN depth estimation network deployed on nano drones for obstacle avoidance. Inspired by Knowledge Distillation (KD), a Channel-Aware Distillation Transformer (CADiT) is proposed to help the small network learn knowledge from a larger network. The proposed method is validated on the KITTI dataset and tested on a Crazyflie nano drone with an ultra-low-power GAP8 microprocessor.
