Gabor Fodor

Hierarchical Federated Learning with SignSGD: A Highly Communication-Efficient Approach

Feb 02, 2026

Abstract: Hierarchical federated learning (HFL) has emerged as a key architecture for large-scale wireless and Internet of Things systems, where devices communicate with nearby edge servers before reaching the cloud. In these environments, uplink bandwidth and latency impose strict communication limits, thereby making aggressive gradient compression essential. One-bit methods such as sign-based stochastic gradient descent (SignSGD) offer an attractive solution in flat federated settings, but existing theory and algorithms do not naturally extend to hierarchical settings. In particular, the interaction between majority-vote aggregation at the edge layer and model aggregation at the cloud layer, and its impact on end-to-end performance, remains unknown. To bridge this gap, we propose a highly communication-efficient sign-based HFL framework and develop its corresponding formulation for nonconvex learning, where devices send only signed stochastic gradients, edge servers combine them through majority vote, and the cloud periodically averages the obtained edge models, while utilizing downlink quantization to broadcast the global model. We introduce the resulting scalable HFL algorithm, HierSignSGD, and provide the convergence analysis for SignSGD in a hierarchical setting. Our core technical contribution is a characterization of how biased sign compression, two-level aggregation intervals, and inter-cluster heterogeneity collectively affect convergence. Numerical experiments under homogeneous and heterogeneous data splits show that HierSignSGD, despite employing extreme compression, achieves accuracy comparable to or better than full-precision stochastic gradient descent while reducing communication cost in the process, and remains robust under aggressive downlink sparsification.
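The two aggregation levels described in this abstract can be illustrated with a minimal sketch. It is not the paper's HierSignSGD implementation: the function names (`majority_vote`, `edge_round`, `cloud_average`), the plain-list model representation, and the fixed learning rate are assumptions made for illustration, and downlink quantization is left out.

```python
def sign(x):
    """Return -1, 0, or +1 according to the sign of x."""
    return (x > 0) - (x < 0)


def majority_vote(sign_vectors):
    """Coordinate-wise majority vote over device sign vectors."""
    return [sign(sum(column)) for column in zip(*sign_vectors)]


def edge_round(model, device_grads, lr):
    """One edge step (hypothetical helper): devices send only gradient signs,
    and the edge server moves its model along the majority-vote direction."""
    signs = [[sign(g) for g in grad] for grad in device_grads]
    vote = majority_vote(signs)
    return [w - lr * v for w, v in zip(model, vote)]


def cloud_average(edge_models):
    """Periodic cloud step (hypothetical helper): average the edge models."""
    count = len(edge_models)
    return [sum(weights) / count for weights in zip(*edge_models)]


# Illustrative values only: three devices under one edge server.
edge_model = edge_round([0.0, 0.0], [[0.5, -2.0], [0.1, -0.3], [-0.2, 0.4]], lr=0.1)
global_model = cloud_average([edge_model, [0.3, 0.1]])
```

Each device uploads one bit per coordinate in this sketch, which is where the communication saving comes from; how many edge rounds to run between cloud averages is a separate tuning choice that the sketch does not address.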

On the Impact of Channel Aging and Doppler-Affected Clutter on OFDM ISAC Systems

Jan 08, 2026

Abstract: The temporal evolution of the propagation environment plays a central role in integrated sensing and communication (ISAC) systems. A slow-time evolution manifests as channel aging in communication links, while a fast-time one is associated with structured clutter with non-zero Doppler. Nevertheless, the joint impact of these two phenomena on ISAC performance has been largely overlooked. This paper addresses this research gap in a network utilizing orthogonal frequency division multiplexing waveforms. Here, a base station simultaneously serves multiple user equipment (UE) devices and performs monostatic sensing. Channel aging is captured through an autoregressive model with exponential correlation decay. In contrast, clutter is modeled as a collection of uncorrelated, coherent patches with non-zero Doppler, resulting in a Kronecker-separable covariance structure. We propose an aging-aware channel estimator that uses prior pilot observations to estimate the time-varying UE channels, characterized by a non-isotropic multipath fading structure. The clutter's structure enables a novel low-complexity sensing pipeline: clutter statistics are estimated from raw data and subsequently used to suppress the clutter's action, after which target parameters are extracted through range-angle and range-velocity maps. We evaluate the influence of frame length and pilot history on channel estimation accuracy and demonstrate substantial performance gains over block fading in low-to-moderate mobility regimes. The sensing pipeline is implemented in a clutter-dominated environment, demonstrating that effective clutter suppression can be achieved under practical configurations. Furthermore, our results show that dedicated sensing streams are required, as communication beams provide insufficient range resolution.
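To make the channel-aging model concrete, the following sketch simulates a scalar channel coefficient with a first-order autoregressive recursion, whose autocorrelation decays as `rho ** lag`. The abstract does not state the model order or any parameter values, so the first-order choice, the function names, and the value `rho = 0.9` are assumptions made for illustration.

```python
import random


def simulate_ar1_channel(rho, num_samples, seed=0):
    """Simulate h[t+1] = rho * h[t] + sqrt(1 - rho^2) * w[t] with unit-variance
    complex Gaussian innovations (assumed first-order model)."""
    rng = random.Random(seed)

    def complex_gaussian():
        # Unit-variance circularly symmetric complex Gaussian sample.
        return complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / (2 ** 0.5)

    channel = [complex_gaussian()]
    innovation_scale = (1.0 - rho ** 2) ** 0.5
    for _ in range(num_samples - 1):
        channel.append(rho * channel[-1] + innovation_scale * complex_gaussian())
    return channel


def empirical_correlation(channel, lag):
    """Estimate the normalized autocorrelation of the channel at a given lag."""
    usable = len(channel) - lag
    numerator = sum(channel[t + lag] * channel[t].conjugate() for t in range(usable))
    denominator = sum(abs(value) ** 2 for value in channel[:usable])
    return (numerator / denominator).real


samples = simulate_ar1_channel(rho=0.9, num_samples=20000)
lag1 = empirical_correlation(samples, 1)  # close to 0.9
lag3 = empirical_correlation(samples, 3)  # close to 0.9 ** 3
```

The estimated correlation shrinks geometrically with the lag, which is why older pilot observations contribute less to an aging-aware estimate than recent ones.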

Pilot-to-Data Power Ratio in RIS-Assisted Multiantenna Communication

Jul 29, 2025

Abstract: The optimization of the pilot-to-data power ratio (PDPR) is a recourse that helps wireless systems to acquire channel state information while minimizing the pilot overhead. While the optimization of the PDPR in cellular networks has been studied extensively, the effect of the PDPR in reconfigurable intelligent surface (RIS)-assisted networks has hardly been examined. This paper tackles this optimization when the communication is assisted by a RIS whose phase shifts are adjusted on the basis of the statistics of the channels. For a setting representative of a macrocellular deployment, the benefits of optimizing the PDPR are seen to be significant over a broad range of operating conditions. These benefits, demonstrated through the ergodic minimum mean squared error, for which a closed-form solution is derived, become more pronounced as the number of RIS elements and/or the channel coherence grow large.

When Near Becomes Far: From Rayleigh to Optimal Near-Field and Far-Field Boundaries

May 12, 2025

Abstract: The transition toward 6G is pushing wireless communication into a regime where the classical plane-wave assumption no longer holds. Millimeter-wave and sub-THz frequencies shrink wavelengths to millimeters, while meter-scale arrays featuring hundreds of antenna elements dramatically enlarge the aperture. Together, these trends collapse the classical Rayleigh far-field boundary from kilometers to mere single-digit meters. Consequently, most practical 6G indoor, vehicular, and industrial deployments will inherently operate within the radiating near-field, where reliance on the plane-wave approximation leads to severe array-gain losses, degraded localization accuracy, and excessive pilot overhead. This paper re-examines the fundamental question: Where does the far-field truly begin? Rather than adopting purely geometric definitions, we introduce an application-oriented approach based on user-defined error budgets and a rigorous Fresnel-zone analysis that fully accounts for both amplitude and phase curvature. We propose three practical mismatch metrics: worst-case element mismatch, worst-case normalized mean square error, and spectral efficiency loss. For each metric, we derive a provably optimal transition distance (the minimal range beyond which mismatch permanently remains below a given tolerance) and provide closed-form solutions. Extensive numerical evaluations across diverse frequencies and antenna-array dimensions show that our proposed thresholds can exceed the Rayleigh distance by more than an order of magnitude. By transforming the near-field from a design nuisance into a precise, quantifiable tool, our results provide a clear roadmap for enabling reliable and resource-efficient near-field communications and sensing in emerging 6G systems.

One Target, Many Views: Multi-User Fusion for Collaborative Uplink ISAC

May 02, 2025

Abstract: We propose a novel pilot-free multi-user uplink framework for integrated sensing and communication (ISAC) in mm-wave networks, where single-antenna users transmit orthogonal frequency division multiplexing signals without dedicated pilots. The base station exploits the spatial and velocity diversities of users to simultaneously decode messages and detect targets, transforming user transmissions into a powerful sensing tool. Each user's signal, structured by a known codebook, propagates through a sparse multi-path channel with shared moving targets and user-specific scatterers. Notably, common targets induce distinct delay-Doppler-angle signatures, while stationary scatterers cluster in parameter space. We formulate the joint multi-path parameter estimation and data decoding as a 3D super-resolution problem, extracting delays, Doppler shifts, and angles-of-arrival via atomic norm minimization, efficiently solved using semidefinite programming. A core innovation is multi-user fusion, where diverse user observations are collaboratively combined to enhance sensing and decoding. This approach improves robustness and integrates multi-user perspectives into a unified estimation framework, enabling high-resolution sensing and reliable communication. Numerical results show that the proposed framework significantly enhances both target estimation and communication performance, highlighting its potential for next-generation ISAC systems.

Mobility Management in Integrated Sensing and Communications Networks

Jan 14, 2025

Abstract: The performance of integrated sensing and communication (ISAC) networks is considerably affected by the mobility of the transceiver nodes, user equipment devices (UEs), and the passive objects that are sensed. For instance, the sensing efficiency is considerably affected by the presence or absence of a line-of-sight connection between the sensing transceivers and the object, a condition that may change quickly due to mobility. Moreover, the mobility of the UEs and objects may result in dynamically varying communication-to-sensing and sensing-to-communication interference, deteriorating the network performance. In such cases, there may be a need to hand over the sensing process to neighbor nodes. In this article, we develop the concept of mobility management in ISAC networks. Here, depending on the mobility of objects and/or the transceiver nodes, the data traffic, the sensing or communication coverage area of the transceivers, and the network interference, the transmission and/or the reception of the sensing signals may be handed over to neighbor nodes. Also, the ISAC configuration and modality - that is, using monostatic or bistatic sensing - are updated accordingly, such that the sensed objects can be continuously sensed with low overhead. We show that mobility management reduces the sensing interruption and boosts the communication and sensing efficiency of ISAC networks.

RIS-Assisted Sensing: A Nested Tensor Decomposition-Based Approach

Dec 03, 2024

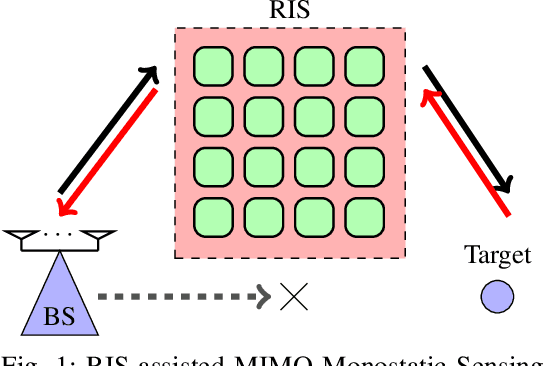

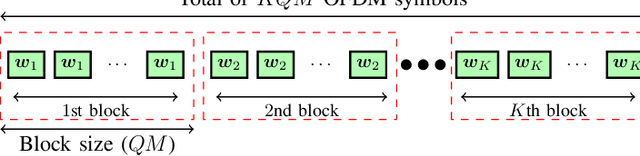

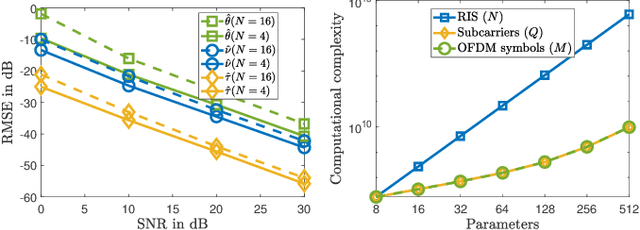

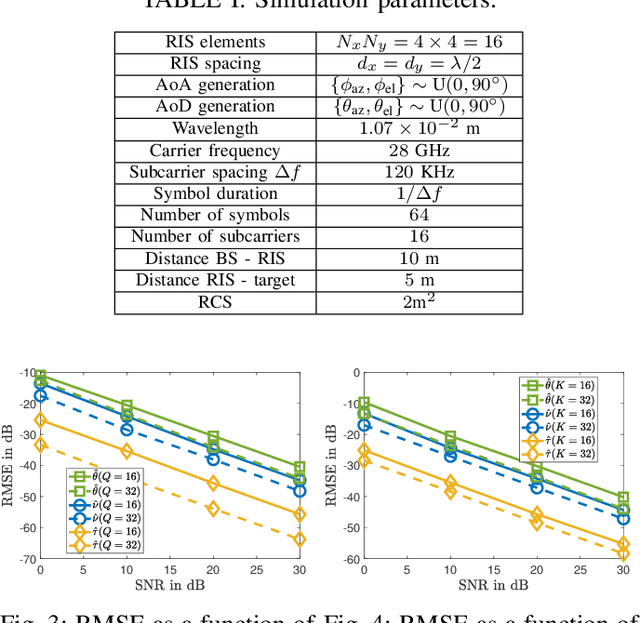

Abstract: We study a monostatic multiple-input multiple-output sensing scenario assisted by a reconfigurable intelligent surface using tensor signal modeling. We propose a method that exploits the intrinsic multidimensional structure of the received echo signal, allowing us to recast the target sensing problem as a nested tensor-based decomposition problem to jointly estimate the delay, Doppler, and angular information of the target. We derive a two-stage approach based on the alternating least squares algorithm followed by the estimation of the signal parameters via rotational invariance techniques to extract the target parameters. Simulation results show that the proposed tensor-based algorithm yields accurate estimates of the sensing parameters with low complexity.

Machine Learning for Spectrum Sharing: A Survey

Nov 28, 2024

Abstract: The 5th generation (5G) of wireless systems is being deployed with the aim of providing many sets of wireless communication services, such as low data rates for a massive number of devices, broadband, low latency, and industrial wireless access. Such an aim is even more complex in the next generation of wireless systems (6G), where wireless connectivity is expected to serve any connected intelligent unit, such as software robots and humans interacting in the metaverse, autonomous vehicles, drones, trains, or smart sensors monitoring cities, buildings, and the environment. Because wireless devices will be orders of magnitude denser than in 5G cellular systems, and because of their complex quality of service requirements, access to the wireless spectrum will have to be appropriately shared to avoid congestion, poor quality of service, or unsatisfactory communication delays. Spectrum sharing methods have been the subject of intense study through model-based approaches, such as optimization or game theory. However, these methods may fail when facing the complexity of the communication environments in 5G, 6G, and beyond. Recently, there has been significant interest in the application and development of data-driven methods, namely machine learning methods, to handle the complex operation of spectrum sharing. In this survey, we provide a complete overview of the state of the art of machine learning for spectrum sharing. First, we map the most prominent methods that we encounter in spectrum sharing. Then, we show how these machine learning methods are applied to the numerous dimensions and sub-problems of spectrum sharing, such as spectrum sensing, spectrum allocation, spectrum access, and spectrum handoff. We also highlight several open questions and future trends.

* Published at NOW Foundations and Trends in Networking

Offline Reinforcement Learning and Sequence Modeling for Downlink Link Adaptation

Oct 30, 2024

Abstract: Contemporary radio access networks employ link adaptation (LA) algorithms to optimize the modulation and coding schemes to adapt to the prevailing propagation conditions and are near-optimal in terms of the achieved spectral efficiency. LA is a challenging task in the presence of mobility, fast fading, imperfect channel quality information, and limited knowledge of the receiver characteristics at the transmitter, which render model-based LA algorithms complex and suboptimal. Model-based LA is especially difficult as connected user equipment devices become increasingly heterogeneous in terms of receiver capabilities, antenna configurations, and hardware characteristics. Recognizing these difficulties, previous works have proposed reinforcement learning (RL) for LA, which faces deployment difficulties due to its potential negative impact on live performance. To address this challenge, this paper considers offline RL to learn LA policies from data acquired in live networks with minimal or no intrusive effects on the network operation. We propose three LA designs based on batch-constrained deep Q-learning, conservative Q-learning, and decision transformers, showing that offline RL algorithms can achieve the performance of state-of-the-art online RL methods when data is collected with a proper behavioral policy.

Near-Field ISAC in 6G: Addressing Phase Nonlinearity via Lifted Super-Resolution

Oct 07, 2024

Abstract: Integrated sensing and communications (ISAC) is a promising component of 6G networks, fusing communication and radar technologies to facilitate new services. Additionally, the use of extremely large-scale antenna arrays (ELLA) at the ISAC common receiver not only facilitates terahertz-rate communication links but also significantly enhances the accuracy of target detection in radar applications. In practical scenarios, communication scatterers and radar targets often reside in close proximity to the ISAC receiver. This, combined with the use of ELLA, fundamentally alters the electromagnetic characteristics of wireless and radar channels, shifting from far-field planar-wave propagation to near-field spherical wave propagation. Under the far-field planar-wave model, the phase of the array response vector varies linearly with the antenna index. In contrast, in the near-field spherical wave model, this phase relationship becomes nonlinear. This shift presents a fundamental challenge: the widely-used Fourier analysis can no longer be directly applied for target detection and communication channel estimation at the ISAC common receiver. In this work, we propose a feasible solution to address this fundamental issue. Specifically, we demonstrate that there exists a high-dimensional space in which the phase nonlinearity can be expressed as linear. Leveraging this insight, we develop a lifted super-resolution framework that simultaneously performs communication channel estimation and extracts target parameters with high precision.
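The linear versus nonlinear phase behavior mentioned in this abstract can be checked numerically. The sketch below assumes a uniform linear array with element 0 as the phase reference and the angle measured from broadside; the geometry, function names, and numeric values are illustrative assumptions and are not taken from the paper. It covers only the phase models, not the lifted super-resolution method itself.

```python
import math


def far_field_phases(num_antennas, spacing, wavelength, angle):
    """Plane-wave model: the phase grows linearly with the antenna index."""
    wavenumber = 2.0 * math.pi / wavelength
    return [wavenumber * n * spacing * math.sin(angle) for n in range(num_antennas)]


def near_field_phases(num_antennas, spacing, wavelength, angle, distance):
    """Spherical-wave model: the phase follows the exact source-to-element
    distance, measured relative to reference element 0."""
    wavenumber = 2.0 * math.pi / wavelength
    phases = []
    for n in range(num_antennas):
        x = n * spacing
        element_distance = math.sqrt(
            distance ** 2 + x ** 2 - 2.0 * distance * x * math.sin(angle)
        )
        phases.append(-wavenumber * (element_distance - distance))
    return phases


def second_differences(values):
    """Discrete second derivative; it vanishes for a linear sequence."""
    return [values[i + 2] - 2.0 * values[i + 1] + values[i]
            for i in range(len(values) - 2)]


# Illustrative values: 8 elements, half-wavelength spacing, source 1 m away.
far = far_field_phases(8, 0.005, 0.01, 0.3)
near = near_field_phases(8, 0.005, 0.01, 0.3, 1.0)
far_curvature = second_differences(far)    # numerically zero
near_curvature = second_differences(near)  # clearly nonzero
```

The zero second differences in the far-field case are what a Fourier transform across the array exploits; the nonzero curvature in the near-field case is the nonlinearity that the abstract says prevents direct use of Fourier analysis.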
