Xinyang Shao

DS4RS: Community-Driven and Explainable Dataset Search Engine for Recommender System Research

Aug 13, 2025

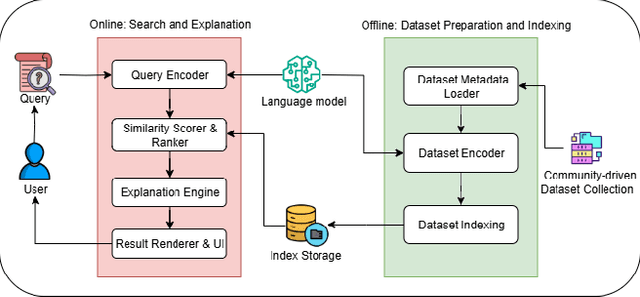

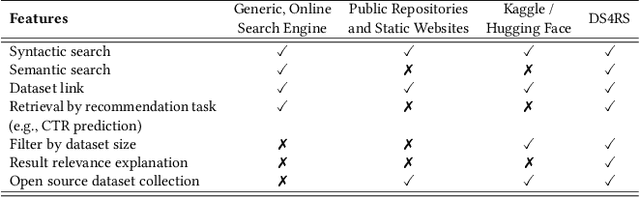

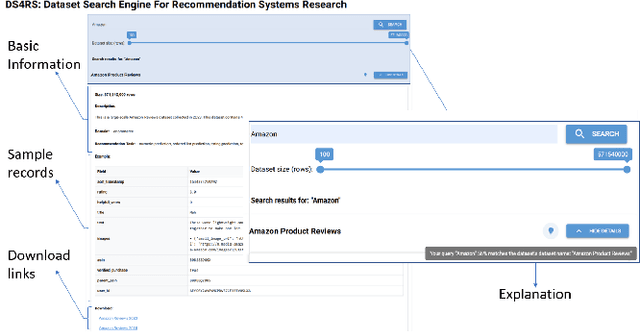

Abstract: Accessing suitable datasets is critical for research and development in recommender systems. However, finding datasets that match specific recommendation tasks or domains remains a challenge due to scattered sources and inconsistent metadata. To address this gap, we propose a community-driven and explainable dataset search engine tailored for recommender system research. Our system supports semantic search across multiple dataset attributes, such as dataset names, descriptions, and recommendation domains, and provides explanations of search relevance to enhance transparency. The system encourages community participation by allowing users to contribute standardized dataset metadata in a public repository. By improving dataset discoverability and search interpretability, the system facilitates more efficient research reproduction. The platform is publicly available at: https://ds4rs.com.

Dataset-Agnostic Recommender Systems

Jan 13, 2025

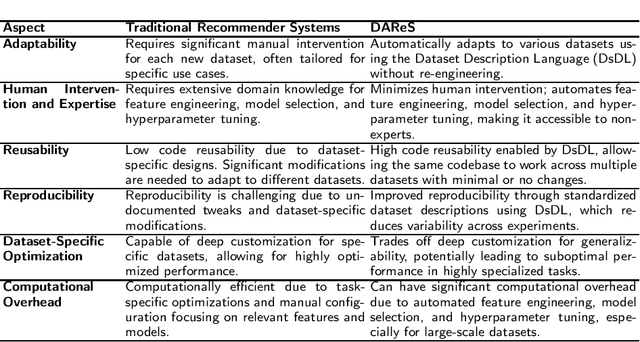

Abstract: [This is a position paper and does not contain any empirical or theoretical results] Recommender systems have become a cornerstone of personalized user experiences, yet their development typically involves significant manual intervention, including dataset-specific feature engineering, hyperparameter tuning, and configuration. To reduce this burden, we introduce a novel paradigm: Dataset-Agnostic Recommender Systems (DAReS), which aims to enable a single codebase to autonomously adapt to various datasets without the need for fine-tuning, for a given recommender system task. Central to this approach is the Dataset Description Language (DsDL), a structured format that provides metadata about the dataset's features and labels and allows the system to understand the dataset's characteristics, so that it can autonomously manage processes such as feature selection, missing-value imputation, noise removal, and hyperparameter optimization. By reducing the need for domain-specific expertise and manual adjustments, DAReS offers a more efficient and scalable solution for building recommender systems across diverse application domains. It addresses critical challenges in the field, such as reusability, reproducibility, and accessibility for non-expert users or entry-level researchers.
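The abstract does not give the DsDL schema, so the following is only an illustrative sketch of the general idea: a machine-readable description of each feature's type lets generic code choose a preprocessing step without dataset-specific logic. The `FeatureSpec` class, the `kind` vocabulary ("numeric", "categorical"), and the `impute` helper are all hypothetical names introduced here, not part of DsDL or DAReS.

```python
from dataclasses import dataclass
from statistics import mean, mode


@dataclass
class FeatureSpec:
    """Hypothetical per-feature metadata; not the actual DsDL format."""
    name: str
    kind: str  # assumed vocabulary: "numeric" or "categorical"


def impute(rows, specs):
    """Fill None values per column, choosing the strategy from the declared kind."""
    filled = [dict(r) for r in rows]  # copy so the input is left untouched
    for spec in specs:
        observed = [r[spec.name] for r in rows if r[spec.name] is not None]
        if not observed:
            continue  # nothing to learn a fill value from
        # Numeric columns use the mean; categorical columns use the most common value.
        fill = mean(observed) if spec.kind == "numeric" else mode(observed)
        for r in filled:
            if r[spec.name] is None:
                r[spec.name] = fill
    return filled


# Toy data, invented for illustration only.
specs = [FeatureSpec("age", "numeric"), FeatureSpec("genre", "categorical")]
rows = [
    {"age": 20, "genre": "rock"},
    {"age": None, "genre": "rock"},
    {"age": 40, "genre": None},
]
cleaned = impute(rows, specs)
```

The same `impute` function would run unchanged on a different dataset as long as a matching list of feature descriptions is supplied, which is the kind of reuse the abstract argues for.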

RBoard: A Unified Platform for Reproducible and Reusable Recommender System Benchmarks

Sep 10, 2024

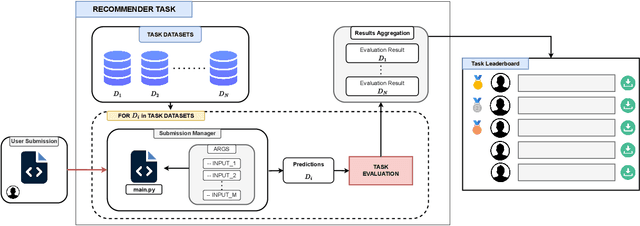

Abstract: Recommender systems research lacks standardized benchmarks for reproducibility and algorithm comparisons. We introduce RBoard, a novel framework addressing these challenges by providing a comprehensive platform for benchmarking diverse recommendation tasks, including CTR prediction, Top-N recommendation, and others. RBoard's primary objective is to enable fully reproducible and reusable experiments across these scenarios. The framework evaluates algorithms across multiple datasets within each task, aggregating results for a holistic performance assessment. It implements standardized evaluation protocols, ensuring consistency and comparability. To facilitate reproducibility, all user-provided code can be easily downloaded and executed, allowing researchers to reliably replicate studies and build upon previous work. By offering a unified platform for rigorous, reproducible evaluation across various recommendation scenarios, RBoard aims to accelerate progress in the field and establish a new standard for recommender systems benchmarking in both academia and industry. The platform is available at https://rboard.org and the demo video can be found at https://bit.ly/rboard-demo.
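The abstract does not state how RBoard aggregates results across datasets. As one possible illustration, the sketch below averages each algorithm's rank over the datasets in a task (mean rank, lower is better). The `mean_ranks` function, the dataset and algorithm names, and the scores are all hypothetical and chosen for illustration; this is not RBoard's actual procedure.

```python
def mean_ranks(scores):
    """Average each algorithm's rank over datasets.

    scores: {dataset: {algorithm: metric}}, where a higher metric is better.
    Returns {algorithm: mean rank}; rank 1 is best. Ties are not handled specially.
    """
    ranks = {}
    for per_algo in scores.values():
        # Order the algorithms on this dataset from best to worst metric.
        ordered = sorted(per_algo, key=per_algo.get, reverse=True)
        for rank, algo in enumerate(ordered, start=1):
            ranks.setdefault(algo, []).append(rank)
    return {algo: sum(r) / len(r) for algo, r in ranks.items()}


# Invented scores for two placeholder algorithms on three placeholder datasets.
scores = {
    "dataset_a": {"algo_x": 0.30, "algo_y": 0.25},
    "dataset_b": {"algo_x": 0.10, "algo_y": 0.20},
    "dataset_c": {"algo_x": 0.50, "algo_y": 0.40},
}
ranks = mean_ranks(scores)
```

Rank-based aggregation is used here because raw metric values are often not comparable across datasets; averaging the metrics directly would be another reasonable choice.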
