Carlo Fischione

Hierarchical Federated Learning with SignSGD: A Highly Communication-Efficient Approach

Feb 02, 2026

Abstract:Hierarchical federated learning (HFL) has emerged as a key architecture for large-scale wireless and Internet of Things systems, where devices communicate with nearby edge servers before reaching the cloud. In these environments, uplink bandwidth and latency impose strict communication limits, thereby making aggressive gradient compression essential. One-bit methods such as sign-based stochastic gradient descent (SignSGD) offer an attractive solution in flat federated settings, but existing theory and algorithms do not naturally extend to hierarchical settings. In particular, the interaction between majority-vote aggregation at the edge layer and model aggregation at the cloud layer, and its impact on end-to-end performance, remains unknown. To bridge this gap, we propose a highly communication-efficient sign-based HFL framework and develop its corresponding formulation for nonconvex learning, where devices send only signed stochastic gradients, edge servers combine them through majority-vote, and the cloud periodically averages the obtained edge models, while utilizing downlink quantization to broadcast the global model. We introduce the resulting scalable HFL algorithm, HierSignSGD, and provide the convergence analysis for SignSGD in a hierarchical setting. Our core technical contribution is a characterization of how biased sign compression, two-level aggregation intervals, and inter-cluster heterogeneity collectively affect convergence. Numerical experiments under homogeneous and heterogeneous data splits show that HierSignSGD, despite employing extreme compression, achieves accuracy comparable to or better than full-precision stochastic gradient descent while reducing communication cost in the process, and remains robust under aggressive downlink sparsification.
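A minimal sketch of the two-level loop described above (sign uplink, majority vote at each edge server, periodic cloud averaging), assuming a toy quadratic objective with noisy gradients, odd-sized clusters, ties in the vote broken toward +1, and no downlink quantization. The names `hier_signsgd`, `majority_vote`, and `grad_fn`, and all hyperparameters, are hypothetical; this is an illustration, not the authors' HierSignSGD implementation.

```python
import numpy as np


def majority_vote(signs):
    """Coordinate-wise majority vote over a (num_devices, dim) array of +/-1 entries."""
    vote = np.sign(signs.sum(axis=0))
    vote[vote == 0] = 1.0  # tie-breaking toward +1 is an assumption of this sketch
    return vote


def hier_signsgd(grad_fn, x0, clusters, lr, edge_steps, rounds, rng):
    """Two-level sign-based training loop (hypothetical helper).

    grad_fn(device, x, rng) returns one device's stochastic gradient;
    clusters is a list of device-index lists, one list per edge server.
    """
    x_global = np.array(x0, dtype=float)
    for _ in range(rounds):
        edge_models = []
        for devices in clusters:
            x_edge = x_global.copy()  # edge server starts from the broadcast global model
            for _ in range(edge_steps):
                # uplink: each device sends only the sign of its stochastic gradient
                signs = np.stack([np.sign(grad_fn(d, x_edge, rng)) for d in devices])
                x_edge -= lr * majority_vote(signs)
            edge_models.append(x_edge)
        # cloud: periodic averaging of the edge models (downlink quantization omitted)
        x_global = np.mean(edge_models, axis=0)
    return x_global


# Toy homogeneous problem: every device minimizes 0.5 * ||x - c||^2 with noisy gradients.
c = np.array([1.0, -2.0, 3.0])


def grad_fn(device, x, rng):
    return x - c + 0.05 * rng.standard_normal(x.shape)


rng = np.random.default_rng(0)
x_final = hier_signsgd(grad_fn, np.zeros(3), clusters=[[0, 1, 2], [3, 4, 5]],
                       lr=0.01, edge_steps=5, rounds=200, rng=rng)
print(x_final)
```

With a constant step size, sign-based updates do not settle exactly at the optimum; they hover within a few multiples of `lr` around it, which is why the toy run uses a small step.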

Computing on Dirty Paper: Interference-Free Integrated Communication and Computing

Oct 02, 2025

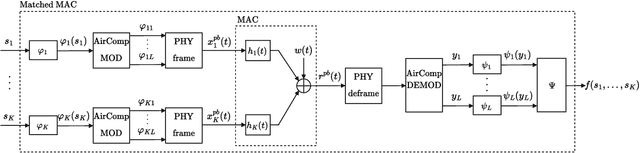

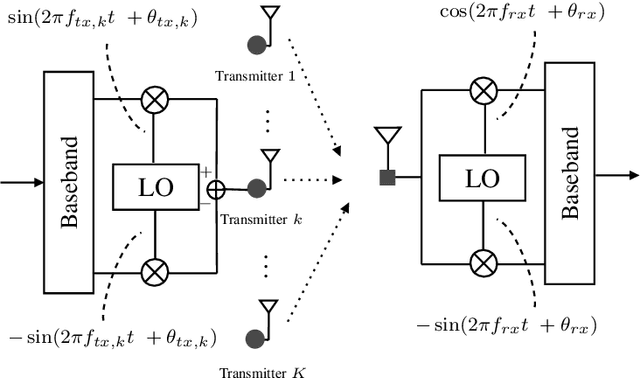

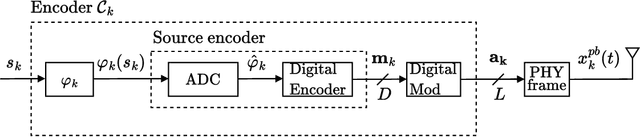

Abstract:Inspired by Costa's pioneering work on dirty paper coding (DPC), this paper proposes a novel scheme for integrated communication and computing (ICC), named Computing on Dirty Paper, whereby the transmission of discrete data symbols for communication and the over-the-air computation (AirComp) of nomographic functions can be achieved simultaneously over common multiple-access channels. In particular, the proposed scheme integrates communication and computation in an asymptotically interference-free manner by precanceling the computing symbols at the transmitters (TXs) using DPC principles. A simulation-based assessment of the proposed ICC scheme under a single-input multiple-output (SIMO) setup is also offered, including the evaluation of data-detection performance and of the mean-squared-error (MSE) performance of function computation over a block of symbols. The results validate the proposed method and demonstrate its ability to significantly outperform state-of-the-art (SotA) ICC schemes in terms of both bit error rate (BER) and MSE.
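To illustrate the DPC principle of precanceling interference that is known at the transmitter, the sketch below uses Tomlinson-Harashima precoding (THP), a classical practical approximation of DPC, on a scalar real channel. This is a swapped-in textbook technique, not the paper's scheme: the "interference" here is a random signal standing in for the superposed computing symbols, and the 4-PAM alphabet, modulo width `tau`, and helper names are assumptions for illustration.

```python
import numpy as np


def mod_tau(v, tau):
    """Symmetric modulo: folds v into the interval [-tau/2, tau/2)."""
    return v - tau * np.floor(v / tau + 0.5)


def thp_precode(data, interference, tau):
    """Transmitter: pre-subtract the known interference, then fold to bound power."""
    return mod_tau(data - interference, tau)


def thp_detect(received, tau):
    """Receiver: the same modulo removes the interference (up to noise)."""
    return mod_tau(received, tau)


rng = np.random.default_rng(1)
tau = 8.0
data = rng.choice([-3.0, -1.0, 1.0, 3.0], size=1000)  # 4-PAM communication symbols
interference = 20.0 * rng.standard_normal(1000)       # stands in for the computing signal
x = thp_precode(data, interference, tau)              # transmitted signal stays in [-4, 4)
noise = 0.1 * rng.standard_normal(1000)
y = x + interference + noise                          # channel adds the interference back
d_hat = thp_detect(y, tau)
symbol_errors = int(np.sum(np.abs(d_hat - data) > 1.0))
print(np.max(np.abs(x)), symbol_errors)
```

The modulo operation is what keeps the transmit amplitude bounded no matter how strong the known interference is; without it, simply transmitting `data - interference` would require unbounded power.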

Multi-Layer Hierarchical Federated Learning with Quantization

May 13, 2025

Abstract:Almost all existing hierarchical federated learning (FL) models are limited to two aggregation layers, restricting scalability and flexibility in complex, large-scale networks. In this work, we propose a Multi-Layer Hierarchical Federated Learning framework (QMLHFL), which appears to be the first study that generalizes hierarchical FL to arbitrary numbers of layers and network architectures through nested aggregation, while employing a layer-specific quantization scheme to meet communication constraints. We develop a comprehensive convergence analysis for QMLHFL and derive a general convergence condition and rate that reveal the effects of key factors, including quantization parameters, hierarchical architecture, and intra-layer iteration counts. Furthermore, we determine the optimal number of intra-layer iterations to maximize the convergence rate while meeting a deadline constraint that accounts for both communication and computation times. Our results show that QMLHFL consistently achieves high learning accuracy, even under high data heterogeneity, and delivers notably improved performance when optimized, compared to using randomly selected values.
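Nested aggregation over an arbitrary number of layers can be sketched as a recursion over a tree, with a different quantizer resolution on each layer's uplink. This is a hedged illustration only: the unbiased stochastic uniform quantizer, the equal-weight averaging, and the helper names are assumptions standing in for the paper's layer-specific scheme, and local training and intra-layer iterations are omitted.

```python
import numpy as np


def stochastic_quantize(v, levels, rng):
    """Unbiased stochastic uniform quantizer with `levels` intervals on [-max|v|, max|v|]."""
    scale = np.max(np.abs(v))
    if scale == 0:
        return v.copy()
    u = (v / scale + 1.0) / 2.0 * levels         # position on the grid 0..levels
    low = np.floor(u)
    q = low + (rng.random(v.shape) < (u - low))  # round up with probability u - low
    return (q / levels * 2.0 - 1.0) * scale


def nested_aggregate(node, levels_per_layer, rng, depth=0):
    """Recursively aggregate a tree of models (hypothetical helper).

    A leaf is a model vector (np.ndarray); an inner node is a list of children.
    Each child quantizes its model with the level count of the link into `depth`,
    then the parent takes an unweighted average (equal weights are an assumption).
    """
    if isinstance(node, np.ndarray):
        return node
    children = [nested_aggregate(c, levels_per_layer, rng, depth + 1) for c in node]
    uploads = [stochastic_quantize(c, levels_per_layer[depth], rng) for c in children]
    return np.mean(uploads, axis=0)


rng = np.random.default_rng(2)
leaves = [rng.standard_normal(4) for _ in range(6)]
# three aggregation layers: cloud -> 2 regional servers -> edge servers -> devices
tree = [[[leaves[0], leaves[1]], [leaves[2]]], [[leaves[3], leaves[4], leaves[5]]]]
global_model = nested_aggregate(tree, levels_per_layer=[256, 64, 16], rng=rng)
print(global_model)
```

Because the recursion only assumes "a node is a list of children", the same function handles any depth or fan-out; the list `levels_per_layer` needs one entry per aggregation layer.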

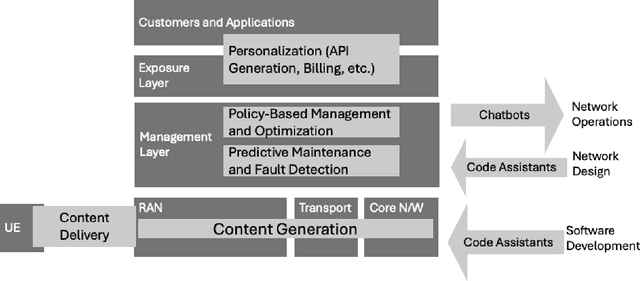

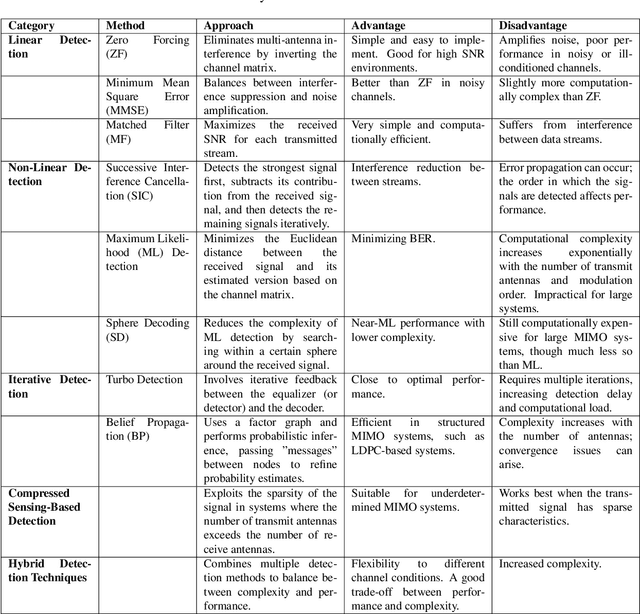

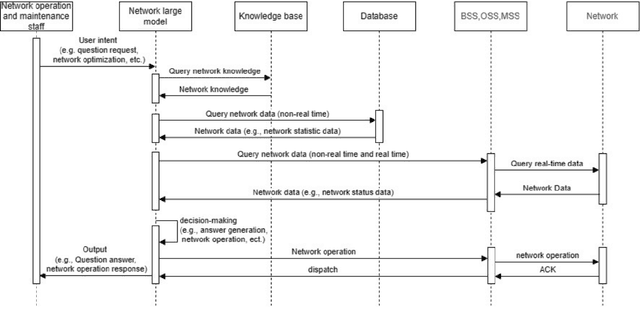

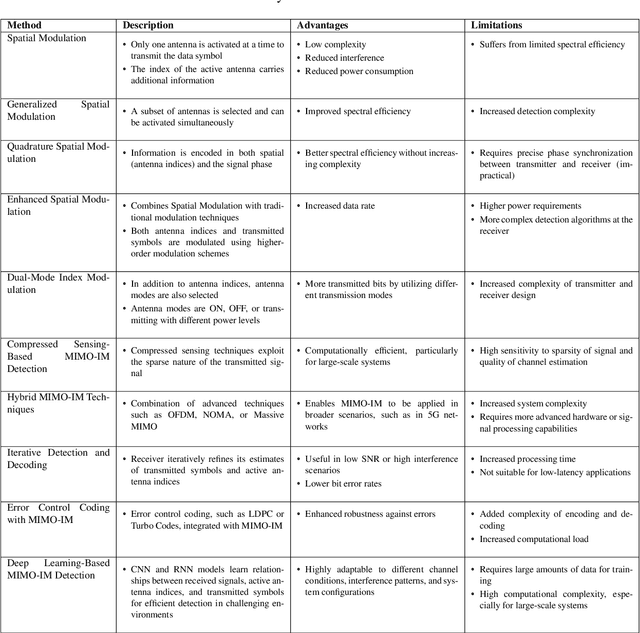

Large-Scale AI in Telecom: Charting the Roadmap for Innovation, Scalability, and Enhanced Digital Experiences

Mar 06, 2025

Abstract:This white paper discusses the role of large-scale AI in the telecommunications industry, with a specific focus on the potential of generative AI to revolutionize network functions and user experiences, especially in the context of 6G systems. It highlights the development and deployment of Large Telecom Models (LTMs), which are tailored AI models designed to address the complex challenges faced by modern telecom networks. The paper covers a wide range of topics, from the architecture and deployment strategies of LTMs to their applications in network management, resource allocation, and optimization. It also explores the regulatory, ethical, and standardization considerations for LTMs, offering insights into their future integration into telecom infrastructure. The goal is to provide a comprehensive roadmap for the adoption of LTMs to enhance scalability, performance, and user-centric innovation in telecom networks.

ReMAC: Digital Multiple Access Computing by Repeated Transmission

Feb 12, 2025

Abstract:In this paper, we consider the ChannelComp framework, where multiple transmitters aim to compute a function of their values at a common receiver while using digital modulations over a multiple access channel. ChannelComp provides a general framework for computation by designing digital constellations for over-the-air computation. Currently, ChannelComp uses symbol-level encoding. However, encoding repeated transmissions of the same symbol and performing the function computation using the corresponding received sequence may significantly improve the computation performance and reduce the encoding complexity. In this paper, we propose a new scheme where each transmitter repeats the transmission of the same symbol over multiple time slots while encoding such repetitions and designing constellation diagrams to minimize computational errors. We formally model such a scheme as an optimization problem, whose solution jointly identifies the constellation diagram and the repetition code. We call the proposed scheme ReMAC. To manage the computational complexity of the optimization, we divide it into two tractable subproblems. We verify the performance of ReMAC by numerical experiments. The simulation results reveal that ReMAC can reduce the computation error in noisy and fading channels by up to approximately 7.5 dB compared to standard ChannelComp, particularly for product functions.
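Why can encoding repeated transmissions help for product functions? In the toy sketch below, two transmitters with inputs in {0, 1, 2} share a unit-gain noiseless MAC. With a single slot and an identity constellation, the receiver only sees the sum, and inputs (0, 2) and (1, 1) collide despite different products. Sending a second, differently encoded copy of the same symbol (here the hypothetical map x to x squared) makes the product recoverable by nearest-sequence decoding. The alphabet, the slot maps, and the helper names are illustrative assumptions, not ReMAC's optimized constellations or repetition code.

```python
import itertools
import math

import numpy as np

VALUES = (0, 1, 2)  # each transmitter's input alphabet (illustrative)


def codebook(slot_maps, k=2):
    """Noiseless received sequence for every input tuple over a unit-gain MAC."""
    return {xs: np.array([sum(m(x) for x in xs) for m in slot_maps], dtype=float)
            for xs in itertools.product(VALUES, repeat=k)}


def is_decodable(slot_maps, f):
    """True if no two input tuples share a received sequence but differ in f."""
    seen = {}
    for xs, y in codebook(slot_maps).items():
        key = tuple(y)
        if key in seen and seen[key] != f(xs):
            return False
        seen[key] = f(xs)
    return True


def decode(y, slot_maps, f):
    """Nearest-sequence decoding of f from a (noisy) received sequence y."""
    book = codebook(slot_maps)
    best = min(book, key=lambda xs: np.linalg.norm(y - book[xs]))
    return f(best)


single_slot = [lambda x: x]                  # one slot, identity constellation
two_slots = [lambda x: x, lambda x: x ** 2]  # hypothetical encoding of a repeated symbol
product = math.prod

print(is_decodable(single_slot, product))    # (0, 2) and (1, 1) both give sum 2
print(is_decodable(two_slots, product))
print(decode(np.array([3.05, 4.97]), two_slots, product))
```

The second slot works because the sum and the sum of squares together determine x1 * x2 = ((x1 + x2)^2 - (x1^2 + x2^2)) / 2.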

Channel-Aware Constellation Design for Digital OTA Computation

Jan 24, 2025

Abstract:Over-the-air (OTA) computation has emerged as a promising technique for efficiently aggregating data from massive numbers of wireless devices. OTA computations can be performed by analog or digital communications. Analog OTA systems are often constrained by limited function adaptability and their reliance on analog amplitude modulation. On the other hand, digital OTA systems may face limitations such as high computational complexity and limited adaptability to varying network configurations. To address these challenges, this paper proposes a novel digital OTA computation system with a channel-aware constellation design for demodulation mappers. The proposed system dynamically adjusts the constellation based on the channel conditions of participating nodes, enabling reliable computation of various functions. By incorporating channel randomness into the constellation design, the system prevents overlap of constellation points, reduces computational complexity, and mitigates excessive transmit power consumption under poor channel conditions. Numerical results demonstrate that the system achieves reliable NMSE performance across a range of scenarios, offering valuable insights into the choice of signal processing methods and weighting strategies under varying computation point configurations, node counts, and quantization levels. This work advances the state of digital OTA computation by addressing critical challenges in scalability, transmit power consumption, and function adaptability.
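The overlap problem can be made concrete with a small sketch. Two nodes with inputs in {0, 1, 2} compute a sum over real channel gains h = (1, 2). With a fixed, channel-unaware constellation, inputs (2, 0) and (0, 1) arrive at the same received point despite different sums. A channel-aware choice of per-node amplitude scales, found here by a brute-force grid search under an amplitude cap, maximizes the minimum distance between received points with different function values. The grid search, the cap `a_max`, and the helper names are assumptions chosen for illustration; they are not the paper's design procedure.

```python
import itertools

import numpy as np

VALUES = (0, 1, 2)  # input alphabet of each node (illustrative)


def min_distance(h, a, f):
    """Smallest gap between noiseless received points whose function values differ."""
    points = [(sum(ak * hk * x for ak, hk, x in zip(a, h, xs)), f(xs))
              for xs in itertools.product(VALUES, repeat=len(h))]
    return min(abs(p - q) for (p, fp), (q, fq) in itertools.combinations(points, 2)
               if fp != fq)


def design_scales(h, f, a_max=1.0, grid=20):
    """Grid-search per-node amplitude scales (capped at a_max) that maximize min_distance."""
    candidates = np.linspace(a_max / grid, a_max, grid)
    best, best_d = None, -1.0
    for a in itertools.product(candidates, repeat=len(h)):
        d = min_distance(h, a, f)
        if d > best_d:
            best, best_d = a, d
    return best, best_d


h = (1.0, 2.0)                           # real channel gains of two nodes (assumed known)
print(min_distance(h, (1.0, 1.0), sum))  # channel-unaware: (2, 0) and (0, 1) collide
a_star, d_star = design_scales(h, sum)
print(a_star, d_star)
```

In this toy case the search scales down the node with the stronger channel rather than boosting the weaker one, which is one way a channel-aware design can avoid spending extra power under poor channel conditions.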

Machine Learning for Spectrum Sharing: A Survey

Nov 28, 2024

Abstract:The 5th generation (5G) of wireless systems is being deployed with the aim of providing a diverse set of wireless communication services, such as low data rates for a massive number of devices, broadband, low latency, and industrial wireless access. Such an aim is even more complex in the next generation of wireless systems (6G), where wireless connectivity is expected to serve any connected intelligent unit, such as software robots and humans interacting in the metaverse, autonomous vehicles, drones, trains, or smart sensors monitoring cities, buildings, and the environment. Because wireless devices will be orders of magnitude denser than in 5G cellular systems, and because of their complex quality of service requirements, access to the wireless spectrum will have to be appropriately shared to avoid congestion, poor quality of service, or unsatisfactory communication delays. Spectrum sharing methods have been the subject of intense study through model-based approaches, such as optimization or game theory. However, these methods may fail when facing the complexity of the communication environments in 5G, 6G, and beyond. Recently, there has been significant interest in the application and development of data-driven methods, namely machine learning methods, to handle the complex operation of spectrum sharing. In this survey, we provide a complete overview of the state of the art of machine learning for spectrum sharing. First, we map the most prominent methods that we encounter in spectrum sharing. Then, we show how these machine learning methods are applied to the numerous dimensions and sub-problems of spectrum sharing, such as spectrum sensing, spectrum allocation, spectrum access, and spectrum handoff. We also highlight several open questions and future trends.

* Published in NOW Foundations and Trends in Networking

Over-the-Air Computation in Cell-Free Massive MIMO Systems

Aug 31, 2024

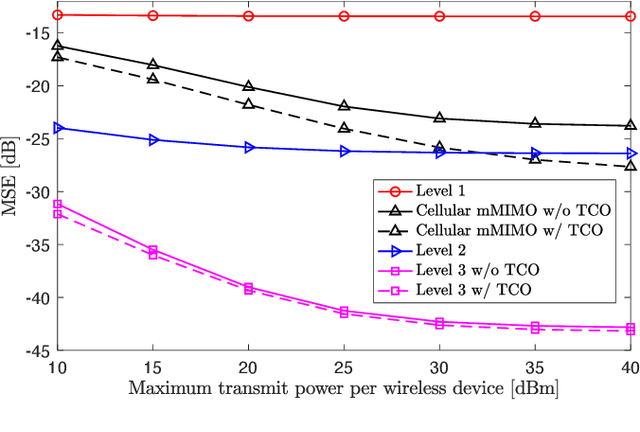

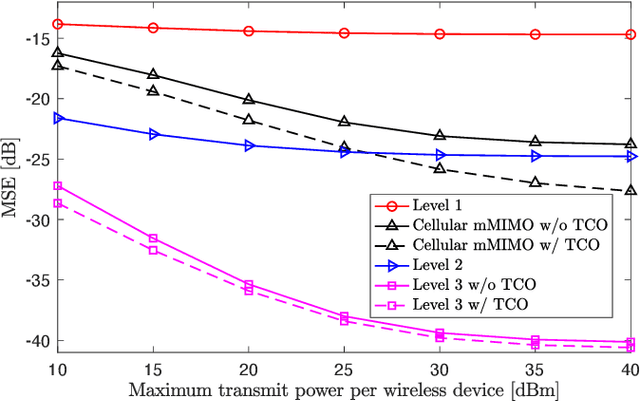

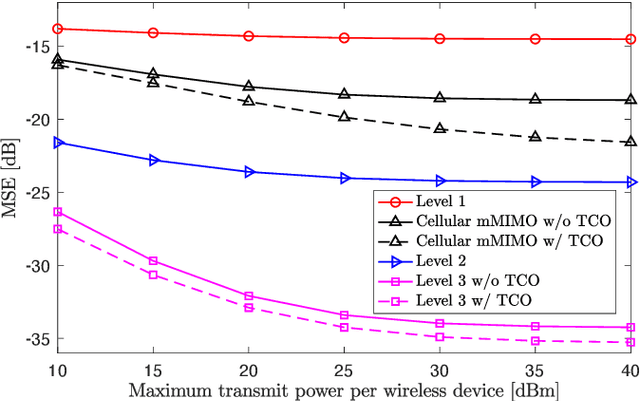

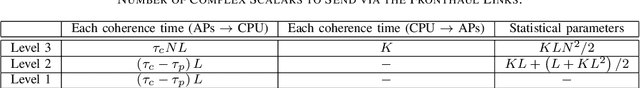

Abstract:Over-the-air computation (AirComp) is considered a communication-efficient solution for data aggregation and distributed learning by exploiting the superposition properties of wireless multi-access channels. However, AirComp is significantly affected by the uneven signal attenuation experienced by different wireless devices. Recently, Cell-free Massive MIMO (mMIMO) has emerged as a promising technology to provide uniform coverage and high rates by joint coherent transmission. In this paper, we investigate AirComp in Cell-free mMIMO systems, taking into account spatially correlated fading and channel estimation errors. In particular, we propose optimal designs of transmit coefficients and receive combining at different levels of cooperation among access points. Numerical results demonstrate that Cell-free mMIMO using fully centralized processing significantly outperforms conventional Cellular mMIMO with regard to the mean squared error (MSE). Moreover, we show that Cell-free mMIMO using local processing and large-scale fading decoding can achieve a lower MSE than Cellular mMIMO when the wireless devices have limited power budgets.
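The interplay between transmit coefficients, receive combining, and power budgets can be seen in the standard AirComp MSE expression. For y = sum_k h_k b_k x_k + n with unit-power, uncorrelated inputs and noise variance sigma^2, the estimate a^H y of sum_k x_k has MSE sum_k |a^H h_k b_k - 1|^2 + sigma^2 ||a||^2. The sketch below evaluates this for a fixed combiner and uses truncated channel inversion for b_k under a per-device power cap. It assumes perfect channel knowledge and ignores the spatial correlation and estimation errors treated in the paper; the combiner and helper names are illustrative, not the paper's optimal design.

```python
import numpy as np


def transmit_coeffs(H, a, p_max):
    """Per-device coefficients for a fixed combiner a (truncated channel inversion).

    H has shape (M, K): channels from K devices to M receive antennas (e.g., APs).
    Each device aligns its phase and inverts its effective gain when its power allows.
    """
    eff = a.conj() @ H                           # effective scalar channel of each device
    mag = np.abs(eff)
    amp = np.minimum(np.sqrt(p_max), 1.0 / mag)  # enforces |b_k|^2 <= p_max
    return amp * np.conj(eff) / mag


def aircomp_mse(H, a, b, noise_var):
    """MSE of a^H y as an estimate of sum_k x_k, for unit-power, uncorrelated x_k."""
    g = (a.conj() @ H) * b                       # per-device end-to-end gain
    return float(np.sum(np.abs(g - 1.0) ** 2) + noise_var * np.linalg.norm(a) ** 2)


rng = np.random.default_rng(3)
M, K = 8, 4
H = (rng.standard_normal((M, K)) + 1j * rng.standard_normal((M, K))) / np.sqrt(2)
a = H.sum(axis=1) / np.linalg.norm(H.sum(axis=1))  # a simple, non-optimized combiner
for p_max in (0.01, 1.0, 100.0):
    b = transmit_coeffs(H, a, p_max)
    print(p_max, aircomp_mse(H, a, b, noise_var=0.1))
```

Devices whose effective channel is too weak to invert within `p_max` contribute a misalignment term |g_k - 1|^2, which is the mechanism behind the uneven-attenuation problem that the abstract describes.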

Waveforms for Computing Over the Air

May 27, 2024

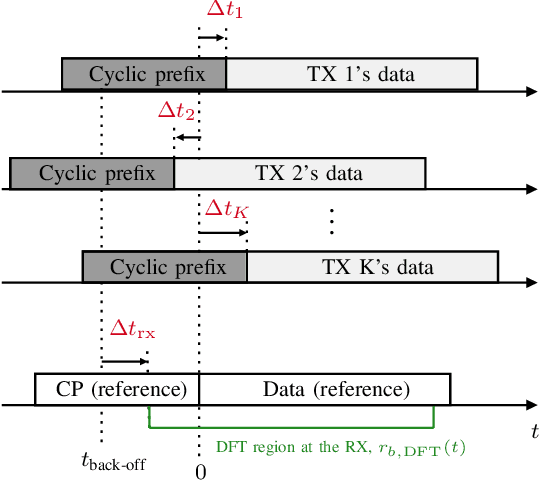

Abstract:Over-the-air computation (AirComp) leverages the signal-superposition characteristic of wireless multiple access channels to perform mathematical computations. Initially introduced to enhance communication reliability in interference channels and wireless sensor networks, AirComp has more recently found applications in task-oriented communications, namely, for wireless distributed learning and in wireless control systems. Its adoption aims to address latency challenges arising from an increased number of edge devices or IoT devices accessing the constrained wireless spectrum. This paper focuses on the physical layer of these systems, specifically on the waveform and the signal processing aspects at the transmitter and receiver to meet the challenges that AirComp presents within the different contexts and use cases.
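At the waveform level, AirComp of a nomographic function f(x) = psi(sum_k phi(x_k)) reduces to pre-processing at each transmitter, superposition by the channel, and post-processing at the receiver. The sketch below computes a geometric mean this way, with phi = log(.)/K and psi = exp. It assumes real, positive, perfectly known channel gains, perfect synchronization, and unlimited transmit power for channel inversion; practical systems would need truncated inversion and other safeguards, and the helper name is hypothetical.

```python
import numpy as np


def aircomp_nomographic(x, h, phi, psi, noise_std, rng):
    """Compute psi(sum_k phi(x_k)) in one channel use over a real-valued MAC.

    Devices pre-process with phi and invert their (known, positive) channel gain h_k,
    the channel adds the waveforms, and the receiver post-processes with psi.
    """
    tx = phi(x) / h                                # per-device transmit amplitudes
    y = np.sum(h * tx) + noise_std * rng.standard_normal()
    return psi(y)


x = np.array([1.0, 4.0, 16.0])
h = np.array([0.9, 0.4, 1.3])
rng = np.random.default_rng(4)
# geometric mean as a nomographic function: phi = log(.)/K, psi = exp
geo = aircomp_nomographic(x, h, lambda v: np.log(v) / len(v), np.exp, 0.0, rng)
print(geo)  # noiseless case: equals (1 * 4 * 16) ** (1/3) up to rounding
```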

A Novel Channel Coding Scheme for Digital Multiple Access Computing

Apr 25, 2024

Abstract:In this paper, we consider the ChannelComp framework, which facilitates the computation of desired functions by multiple transmitters at a common receiver using digital modulations over a multiple access channel. While ChannelComp currently offers a broad framework for computation by designing digital constellations for over-the-air computation and employing symbol-level encoding, encoding the repeated transmissions of the same symbol and using the corresponding received sequence may significantly improve the computation performance and reduce the encoding complexity. In this paper, we propose an enhancement involving the encoding of the repetitive transmission of the same symbol at each transmitter over multiple time slots and the design of constellation diagrams, with the aim of minimizing computational errors. We frame this enhancement, which we call ReChCompCode, as an optimization problem that jointly identifies the constellation diagram and the channel code for repetition. To manage the computational complexity of the optimization, we divide it into two tractable subproblems. Through numerical experiments, we evaluate the performance of ReChCompCode. The simulation results reveal that ReChCompCode can reduce the computation error by up to approximately 30 dB compared to standard ChannelComp, particularly for product functions.
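As a point of reference for "using the corresponding received sequence", the sketch below measures the simplest, uncoded form of repetition: the same superposed value is received in T slots with independent Gaussian noise and the receiver averages them, which lowers the MSE by a factor of T (about 10 log10 T dB). ReChCompCode goes further by jointly designing a repetition code and the constellation, so this baseline does not reproduce the paper's reported gain. The target value, noise level, and helper name are illustrative assumptions.

```python
import numpy as np


def repeated_sum_mse(target, repetitions, noise_std, trials, rng):
    """Monte Carlo MSE when the same superposed value is received `repetitions` times
    with independent Gaussian noise and the receiver averages the slots."""
    y = target + noise_std * rng.standard_normal((trials, repetitions))
    estimate = y.mean(axis=1)
    return float(np.mean((estimate - target) ** 2))


rng = np.random.default_rng(5)
target = 3.0  # noiseless over-the-air superposition of the transmitted values (illustrative)
mse_1 = repeated_sum_mse(target, 1, 0.5, 200_000, rng)
mse_8 = repeated_sum_mse(target, 8, 0.5, 200_000, rng)
gain_db = 10 * np.log10(mse_1 / mse_8)
print(mse_1, mse_8, gain_db)  # theory: noise_std**2 / T per setting, gain near 9 dB for T = 8
```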
