Francesco Ricca

University of Calabria

Direct Encoding of Declare Constraints in ASP

Dec 13, 2024Abstract:Answer Set Programming (ASP), a well-known declarative logic programming paradigm, has recently found practical application in Process Mining. In particular, ASP has been used to model tasks involving declarative specifications of business processes. In this area, Declare stands out as the most widely adopted declarative process modeling language, offering a means to model processes through sets of constraints valid traces must satisfy, that can be expressed in Linear Temporal Logic over Finite Traces (LTLf). Existing ASP-based solutions encode Declare constraints by modeling the corresponding LTLf formula or its equivalent automaton which can be obtained using established techniques. In this paper, we introduce a novel encoding for Declare constraints that directly models their semantics as ASP rules, eliminating the need for intermediate representations. We assess the effectiveness of this novel approach on two Process Mining tasks by comparing it with alternative ASP encodings and a Python library for Declare. Under consideration in Theory and Practice of Logic Programming (TPLP).

Enumerating Minimal Unsatisfiable Cores of LTLf formulas

Sep 14, 2024

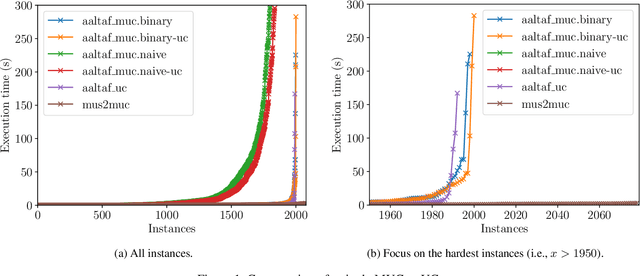

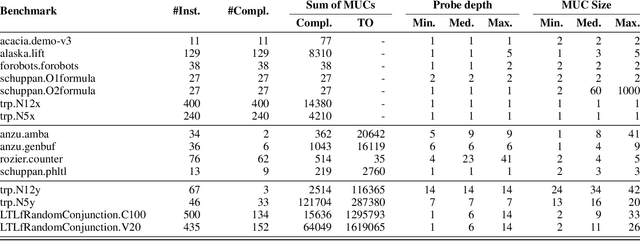

Abstract:Linear Temporal Logic over finite traces ($\text{LTL}_f$) is a widely used formalism with applications in AI, process mining, model checking, and more. The primary reasoning task for $\text{LTL}_f$ is satisfiability checking; yet, the recent focus on explainable AI has increased interest in analyzing inconsistent formulas, making the enumeration of minimal explanations for infeasibility a relevant task also for $\text{LTL}_f$. This paper introduces a novel technique for enumerating minimal unsatisfiable cores (MUCs) of an $\text{LTL}_f$ specification. The main idea is to encode a $\text{LTL}_f$ formula into an Answer Set Programming (ASP) specification, such that the minimal unsatisfiable subsets (MUSes) of the ASP program directly correspond to the MUCs of the original $\text{LTL}_f$ specification. Leveraging recent advancements in ASP solving yields a MUC enumerator achieving good performance in experiments conducted on established benchmarks from the literature.

Quantifying over Optimum Answer Sets

Aug 14, 2024Abstract:Answer Set Programming with Quantifiers (ASP(Q)) has been introduced to provide a natural extension of ASP modeling to problems in the polynomial hierarchy (PH). However, ASP(Q) lacks a method for encoding in an elegant and compact way problems requiring a polynomial number of calls to an oracle in $\Sigma_n^p$ (that is, problems in $\Delta_{n+1}^p$). Such problems include, in particular, optimization problems. In this paper we propose an extension of ASP(Q), in which component programs may contain weak constraints. Weak constraints can be used both for expressing local optimization within quantified component programs and for modeling global optimization criteria. We showcase the modeling capabilities of the new formalism through various application scenarios. Further, we study its computational properties obtaining complexity results and unveiling non-obvious characteristics of ASP(Q) programs with weak constraints.

LLASP: Fine-tuning Large Language Models for Answer Set Programming

Jul 26, 2024Abstract:Recently, Large Language Models (LLMs) have showcased their potential in various natural language processing tasks, including code generation. However, while significant progress has been made in adapting LLMs to generate code for several imperative programming languages and tasks, there remains a notable gap in their application to declarative formalisms, such as Answer Set Programming (ASP). In this paper, we move a step towards exploring the capabilities of LLMs for ASP code generation. First, we perform a systematic evaluation of several state-of-the-art LLMs. Despite their power in terms of number of parameters, training data and computational resources, empirical results demonstrate inadequate performances in generating correct ASP programs. Therefore, we propose LLASP, a fine-tuned lightweight model specifically trained to encode fundamental ASP program patterns. To this aim, we create an ad-hoc dataset covering a wide variety of fundamental problem specifications that can be encoded in ASP. Our experiments demonstrate that the quality of ASP programs generated by LLASP is remarkable. This holds true not only when compared to the non-fine-tuned counterpart but also when compared to the majority of eager LLM candidates, particularly from a semantic perspective. All the code and data used to perform the experiments are publicly available at https://anonymous.4open.science/r/LLASP-D86C/.

Towards Automatic Composition of ASP Programs from Natural Language Specifications

Mar 07, 2024Abstract:This paper moves the first step towards automating the composition of Answer Set Programming (ASP) specifications. In particular, the following contributions are provided: (i) A dataset focused on graph-related problem specifications, designed to develop and assess tools for ASP automatic coding; (ii) A two-step architecture, implemented in the NL2ASP tool, for generating ASP programs from natural language specifications. NL2ASP uses neural machine translation to transform natural language into Controlled Natural Language (CNL) statements. Subsequently, CNL statements are converted into ASP code using the CNL2ASP tool. An experiment confirms the viability of the approach.

Unit Testing in ASP Revisited: Language and Test-Driven Development Environment

Jan 04, 2024Abstract:Unit testing frameworks are nowadays considered a best practice, included in almost all modern software development processes, to achieve rapid development of correct specifications. Knowledge representation and reasoning paradigms such as Answer Set Programming (ASP), that have been used in industry-level applications, are not an exception. Indeed, the first unit testing specification language for ASP was proposed in 2011 as a feature of the ASPIDE development environment. Later, a more portable unit testing language was included in the LANA annotation language. In this paper we revisit both languages and tools for unit testing in ASP. We propose a new unit test specification language that allows one to inline tests within ASP programs, and we identify the computational complexity of the tasks associated with checking the various program-correctness assertions. Test-case specifications are transparent to the traditional evaluation, but can be interpreted by a specific testing tool. Thus, we present a novel environment supporting test driven development of ASP programs.

An efficient solver for ASP(Q)

May 17, 2023Abstract:Answer Set Programming with Quantifiers ASP(Q) extends Answer Set Programming (ASP) to allow for declarative and modular modeling of problems from the entire polynomial hierarchy. The first implementation of ASP(Q), called qasp, was based on a translation to Quantified Boolean Formulae (QBF) with the aim of exploiting the well-developed and mature QBF-solving technology. However, the implementation of the QBF encoding employed in qasp is very general and might produce formulas that are hard to evaluate for existing QBF solvers because of the large number of symbols and sub-clauses. In this paper, we present a new implementation that builds on the ideas of qasp and features both a more efficient encoding procedure and new optimized encodings of ASP(Q) programs in QBF. The new encodings produce smaller formulas (in terms of the number of quantifiers, variables, and clauses) and result in a more efficient evaluation process. An algorithm selection strategy automatically combines several QBF-solving back-ends to further increase performance. An experimental analysis, conducted on known benchmarks, shows that the new system outperforms qasp.

Neuro-Symbolic AI for Compliance Checking of Electrical Control Panels

May 17, 2023

Abstract:Artificial Intelligence plays a main role in supporting and improving smart manufacturing and Industry 4.0, by enabling the automation of different types of tasks manually performed by domain experts. In particular, assessing the compliance of a product with the relative schematic is a time-consuming and prone-to-error process. In this paper, we address this problem in a specific industrial scenario. In particular, we define a Neuro-Symbolic approach for automating the compliance verification of the electrical control panels. Our approach is based on the combination of Deep Learning techniques with Answer Set Programming (ASP), and allows for identifying possible anomalies and errors in the final product even when a very limited amount of training data is available. The experiments conducted on a real test case provided by an Italian Company operating in electrical control panel production demonstrate the effectiveness of the proposed approach.

Reducing the impact of out of vocabulary words in the translation of natural language questions into SPARQL queries

Nov 04, 2021

Abstract:Accessing the large volumes of information available in public knowledge bases might be complicated for those users unfamiliar with the SPARQL query language. Automatic translation of questions posed in natural language in SPARQL has the potential of overcoming this problem. Existing systems based on neural-machine translation are very effective but easily fail in recognizing words that are Out Of the Vocabulary (OOV) of the training set. This is a serious issue while querying large ontologies. In this paper, we combine Named Entity Linking, Named Entity Recognition, and Neural Machine Translation to perform automatic translation of natural language questions into SPARQL queries. We demonstrate empirically that our approach is more effective and resilient to OOV words than existing approaches by running the experiments on Monument, QALD-9, and LC-QuAD v1, which are well-known datasets for Question Answering over DBpedia.

Proceedings 36th International Conference on Logic Programming (Technical Communications)

Sep 19, 2020Abstract:Since the first conference held in Marseille in 1982, ICLP has been the premier international event for presenting research in logic programming. Contributions are solicited in all areas of logic programming and related areas, including but not restricted to: - Foundations: Semantics, Formalisms, Answer-Set Programming, Non-monotonic Reasoning, Knowledge Representation. - Declarative Programming: Inference engines, Analysis, Type and mode inference, Partial evaluation, Abstract interpretation, Transformation, Validation, Verification, Debugging, Profiling, Testing, Logic-based domain-specific languages, constraint handling rules. - Related Paradigms and Synergies: Inductive and Co-inductive Logic Programming, Constraint Logic Programming, Interaction with SAT, SMT and CSP solvers, Logic programming techniques for type inference and theorem proving, Argumentation, Probabilistic Logic Programming, Relations to object-oriented and Functional programming, Description logics, Neural-Symbolic Machine Learning, Hybrid Deep Learning and Symbolic Reasoning. - Implementation: Concurrency and distribution, Objects, Coordination, Mobility, Virtual machines, Compilation, Higher Order, Type systems, Modules, Constraint handling rules, Meta-programming, Foreign interfaces, User interfaces. - Applications: Databases, Big Data, Data Integration and Federation, Software Engineering, Natural Language Processing, Web and Semantic Web, Agents, Artificial Intelligence, Bioinformatics, Education, Computational life sciences, Education, Cybersecurity, and Robotics.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge