Francesco Calimeri

Department of Mathematics and Computer Science, University of Calabria, Italy

Looking Beyond Accuracy: A Holistic Benchmark of ECG Foundation Models

Jan 29, 2026

Abstract: The electrocardiogram (ECG) is a cost-effective, highly accessible and widely employed diagnostic tool. With the advent of Foundation Models (FMs), the field of AI-assisted ECG interpretation has begun to evolve, as FMs enable model reuse across different tasks by relying on embeddings. However, to employ FMs responsibly, it is crucial to rigorously assess to what extent the embeddings they produce are generalizable, particularly in error-sensitive domains such as healthcare. Although prior works have already addressed the problem of benchmarking ECG-expert FMs, they focus predominantly on the evaluation of downstream performance. To fill this gap, this study aims to provide an in-depth, comprehensive benchmarking framework for FMs, with a specific focus on ECG-expert ones. To this end, we introduce a benchmark methodology that complements performance-based evaluation with representation-level analysis, leveraging SHAP and UMAP techniques. Furthermore, we rely on the methodology to carry out an extensive evaluation of several ECG-expert FMs pretrained via state-of-the-art techniques over different cross-continental datasets and data availability settings; these include settings featuring data scarcity, a fairly common situation in real-world medical scenarios. Experimental results show that our benchmarking protocol provides rich insight into the patterns embedded by ECG-expert FMs, enabling a deeper understanding of their representational structure and generalizability.

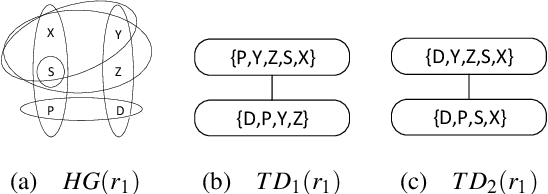

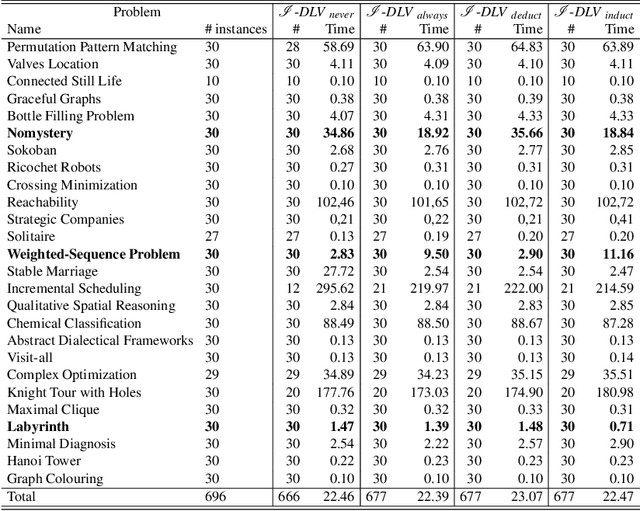

ASP-based Multi-shot Reasoning via DLV2 with Incremental Grounding

Dec 24, 2024

Abstract: DLV2 is an AI tool for Knowledge Representation and Reasoning which supports Answer Set Programming (ASP) - a logic-based declarative formalism, successfully used in both academic and industrial applications. Given a logic program modelling a computational problem, an execution of DLV2 produces the so-called answer sets that correspond one-to-one to the solutions to the problem at hand. The computational process of DLV2 relies on the typical Ground & Solve approach where the grounding step transforms the input program into a new, equivalent ground program, and the subsequent solving step applies propositional algorithms to search for the answer sets. Recently, emerging applications in contexts such as stream reasoning and event processing created a demand for multi-shot reasoning: here, the system is expected to be reactive while repeatedly executed over rapidly changing data. In this work, we present a new incremental reasoner obtained from the evolution of DLV2 towards iterated reasoning. Rather than restarting the computation from scratch, the system remains alive across repeated shots, and it incrementally handles the internal grounding process. At each shot, the system reuses previous computations for building and maintaining a large, more general ground program, from which a smaller yet equivalent portion is determined and used for computing answer sets. Notably, the incremental process is performed in a completely transparent fashion for the user. We describe the system, its usage, its applicability and performance in some practically relevant domains. Under consideration in Theory and Practice of Logic Programming (TPLP).

LLASP: Fine-tuning Large Language Models for Answer Set Programming

Jul 26, 2024

Abstract: Recently, Large Language Models (LLMs) have showcased their potential in various natural language processing tasks, including code generation. However, while significant progress has been made in adapting LLMs to generate code for several imperative programming languages and tasks, there remains a notable gap in their application to declarative formalisms, such as Answer Set Programming (ASP). In this paper, we move a step towards exploring the capabilities of LLMs for ASP code generation. First, we perform a systematic evaluation of several state-of-the-art LLMs. Despite their power in terms of number of parameters, training data and computational resources, empirical results demonstrate inadequate performance in generating correct ASP programs. Therefore, we propose LLASP, a fine-tuned lightweight model specifically trained to encode fundamental ASP program patterns. To this end, we create an ad-hoc dataset covering a wide variety of fundamental problem specifications that can be encoded in ASP. Our experiments demonstrate that the quality of ASP programs generated by LLASP is remarkable. This holds true not only when compared to the non-fine-tuned counterpart but also when compared to the majority of eager LLM candidates, particularly from a semantic perspective. All the code and data used to perform the experiments are publicly available at https://anonymous.4open.science/r/LLASP-D86C/.

μ-Net: A Deep Learning-Based Architecture for μ-CT Segmentation

Jun 24, 2024

Abstract: X-ray computed microtomography (μ-CT) is a non-destructive technique that can generate high-resolution 3D images of the internal anatomy of medical and biological samples. These images enable clinicians to examine internal anatomy and gain insights into the disease or anatomical morphology. However, extracting relevant information from 3D images requires semantic segmentation of the regions of interest, which is usually done manually and is time-consuming and tedious. In this work, we propose a novel framework that uses a convolutional neural network (CNN) to automatically segment the full morphology of the heart of Carassius auratus. The framework employs an optimized 2D CNN architecture that can infer a 3D segmentation of the sample, avoiding the high computational cost of a 3D CNN architecture. We tackle the challenges of handling large, high-resolution image data (over a thousand pixels in each dimension) and a small training database (only three samples) by proposing a standard protocol for data normalization and processing. Moreover, we investigate how the noise, contrast, and spatial resolution of the sample and the training of the architecture are affected by the reconstruction technique, which depends on the number of input images. Experiments show that our framework significantly reduces the time required to segment new samples, allowing a faster microtomography analysis of the Carassius auratus heart shape. Furthermore, our framework can work with any high-resolution bio-image (biological or medical) from μ-CT, even when the dataset is small.

Data Augmentation: a Combined Inductive-Deductive Approach featuring Answer Set Programming

Oct 22, 2023

Abstract: Although the availability of a large amount of data is usually taken for granted, there are relevant scenarios where this is not the case; for instance, in the biomedical/healthcare domain, some applications require building huge datasets of suitable images, but the acquisition of such images is often hard for different reasons (e.g., accessibility, costs, pathology-related variability), thus resulting in limited and usually imbalanced datasets. Hence, synthesizing photo-realistic images via advanced Data Augmentation techniques is crucial. In this paper we propose a hybrid inductive-deductive approach to the problem; in particular, starting from a limited set of real labeled images, the proposed framework makes use of logic programs for declaratively specifying the structure of new images, which is guaranteed to comply with both a set of constraints coming from the domain knowledge and some specific desiderata. The resulting labeled images undergo a dedicated process based on Deep Learning in charge of creating photo-realistic images that comply with the generated label.

I-DLV-sr: A Stream Reasoning System based on I-DLV

Aug 05, 2021

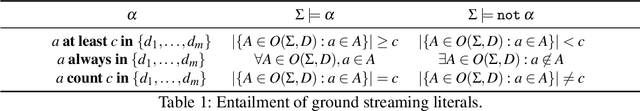

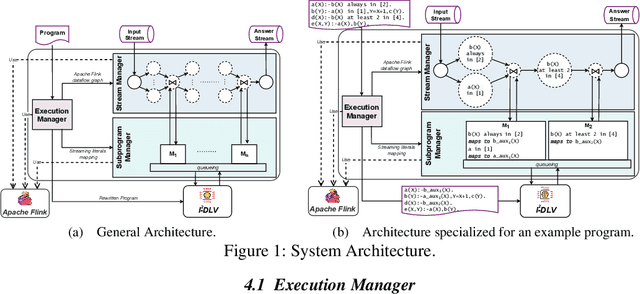

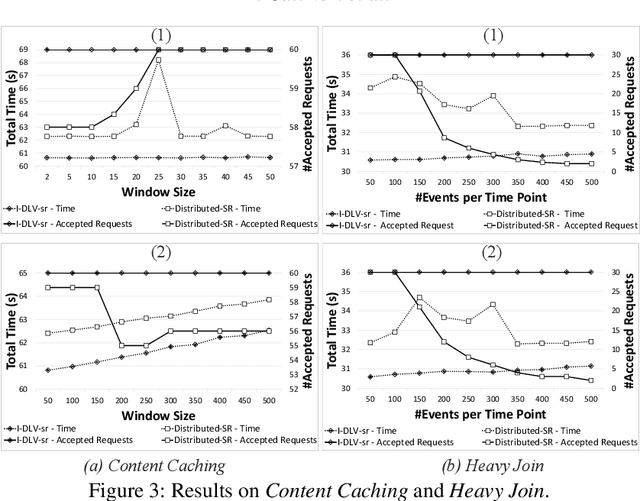

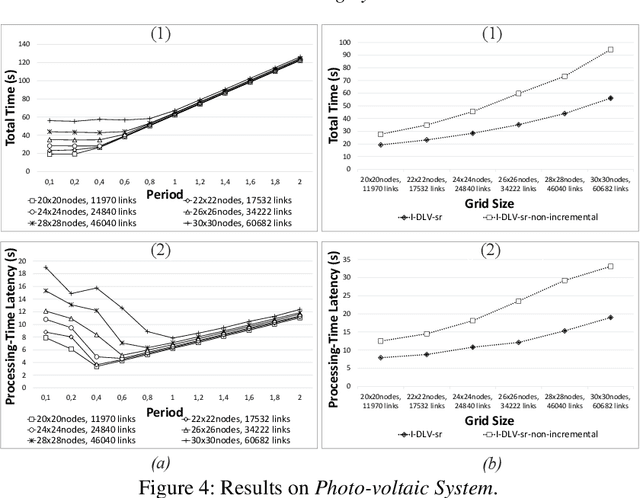

Abstract: We introduce a novel logic-based system for reasoning over data streams, which relies on a framework enabling a tight, fine-tuned interaction between Apache Flink and the I^2-DLV system. The architecture makes it possible to take advantage of both the powerful distributed stream processing capabilities of Flink and the incremental reasoning capabilities of I^2-DLV based on overgrounding techniques. Besides the system architecture, we illustrate the supported input language and its modeling capabilities, and discuss the results of an experimental activity aimed at assessing the viability of the approach. This paper is under consideration in Theory and Practice of Logic Programming (TPLP).

How can we learn from challenges? A statistical approach to driving future algorithm development

Jun 17, 2021

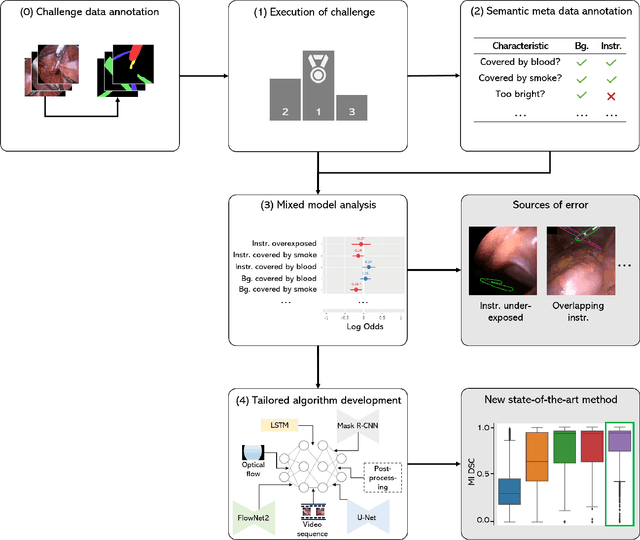

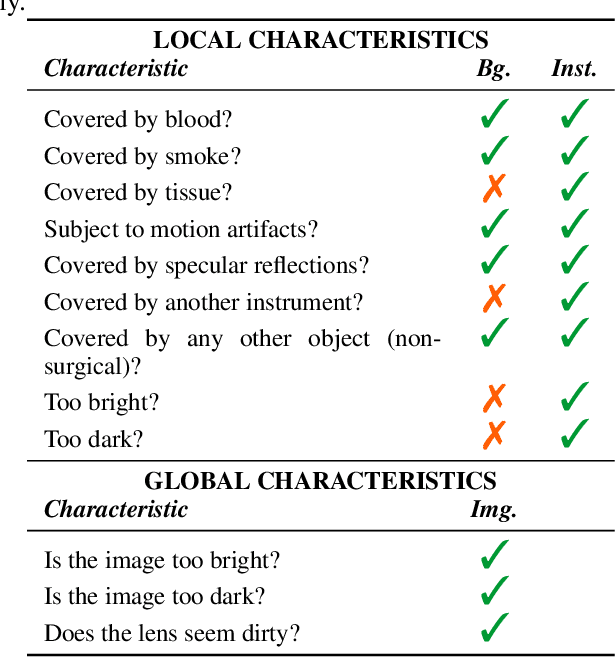

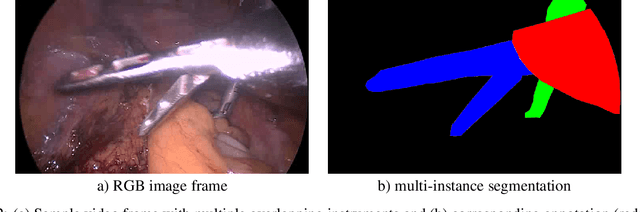

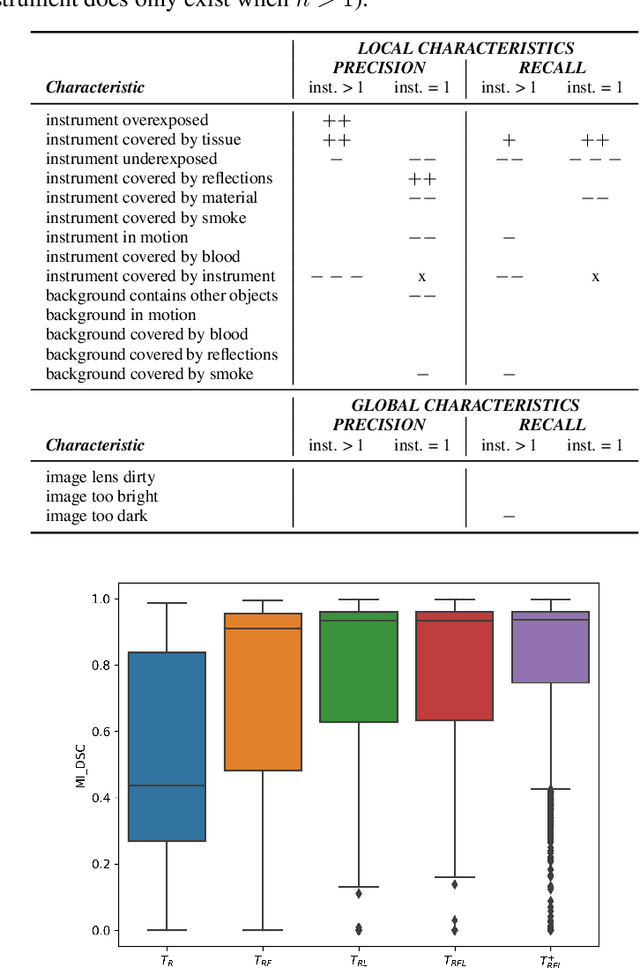

Abstract: Challenges have become the state-of-the-art approach to benchmark image analysis algorithms in a comparative manner. While the validation on identical data sets was a great step forward, results analysis is often restricted to pure ranking tables, leaving relevant questions unanswered. Specifically, little effort has been put into the systematic investigation of what characterizes images in which state-of-the-art algorithms fail. To address this gap in the literature, we (1) present a statistical framework for learning from challenges and (2) instantiate it for the specific task of instrument instance segmentation in laparoscopic videos. Our framework relies on the semantic meta data annotation of images, which serves as the foundation for a General Linear Mixed Models (GLMM) analysis. Based on 51,542 meta data annotations performed on 2,728 images, we applied our approach to the results of the Robust Medical Instrument Segmentation (ROBUST-MIS) Challenge 2019 and revealed underexposure, motion and occlusion of instruments as well as the presence of smoke or other objects in the background as major sources of algorithm failure. Our subsequent method development, tailored to the specific remaining issues, yielded a deep learning model with state-of-the-art overall performance and specific strengths in the processing of images in which previous methods tended to fail. Due to the objectivity and generic applicability of our approach, it could become a valuable tool for validation in the field of medical image analysis and beyond.

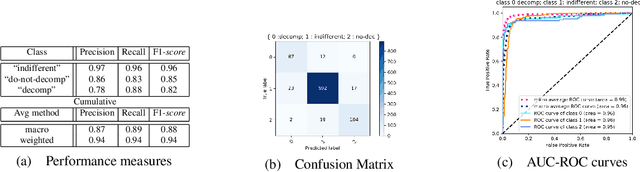

A Machine Learning guided Rewriting Approach for ASP Logic Programs

Sep 22, 2020

Abstract: Answer Set Programming (ASP) is a declarative logic formalism that allows computational problems to be encoded via logic programs. Despite the declarative nature of the formalism, some advanced expertise is generally required for designing an ASP encoding that can be efficiently evaluated by an actual ASP system. A common way of reducing the burden of manually tweaking an ASP program consists in automatically rewriting the input encoding according to suitable techniques, producing alternative, yet semantically equivalent, ASP programs. However, rewriting does not always yield benefits in terms of performance; hence, proper means are needed for predicting its effects in this respect. In this paper we describe an approach based on Machine Learning (ML) to automatically decide whether to rewrite. In particular, given an ASP program and a set of input facts, our approach chooses whether and how to rewrite input rules based on a set of features measuring their structural properties and domain information. To this end, a Multilayer Perceptron model has been trained to guide the ASP grounder I-DLV on rewriting input rules. We report and discuss the results of an experimental evaluation over a prototypical implementation.

* In Proceedings ICLP 2020, arXiv:2009.09158

ASP-Core-2 Input Language Format

Nov 11, 2019

Abstract: Standardization of solver input languages has been a main driver for the growth of several areas within knowledge representation and reasoning, fostering the exploitation in actual applications. In this document we present the ASP-Core-2 standard input language for Answer Set Programming, which has been adopted in ASP Competition events since 2013.

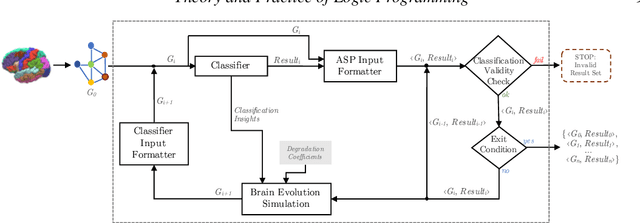

A Logic-Based Framework Leveraging Neural Networks for Studying the Evolution of Neurological Disorders

Oct 21, 2019

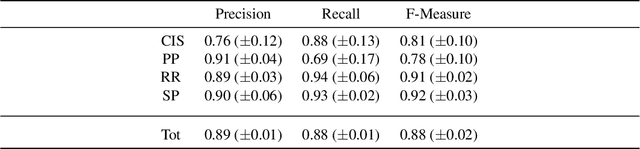

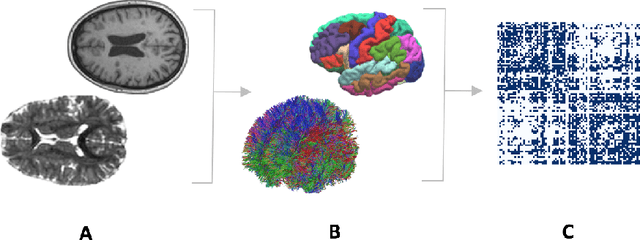

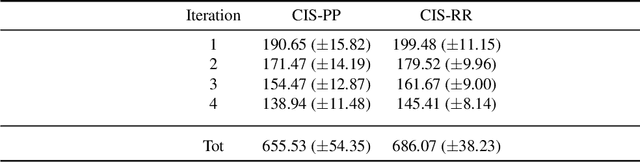

Abstract: Deductive formalisms have been strongly developed in recent years; among them, Answer Set Programming (ASP) has gained some momentum, and has lately been fruitfully employed in many real-world scenarios. Nonetheless, in spite of a large number of success stories in relevant application areas, and even in industrial contexts, deductive reasoning cannot be considered the ultimate, comprehensive solution to AI; indeed, in several contexts, other approaches prove more useful. Typical Bioinformatics tasks, for instance classification, are currently carried out mostly by Machine Learning (ML) based solutions. In this paper, we focus on the relatively new problem of analyzing the evolution of neurological disorders. In this context, ML approaches have already proved to be a viable solution for classification tasks; here, we show how ASP can play a relevant role in the brain evolution simulation task. In particular, we propose a general and extensible framework to support physicians and researchers in understanding the complex mechanisms underlying neurological disorders. The framework relies on a combined use of ML and ASP, and is general enough to be applied in several other application scenarios, which are outlined in the paper.
