Angelika Kimmig

Cardiff University

smProbLog: Stable Model Semantics in ProbLog for Probabilistic Argumentation

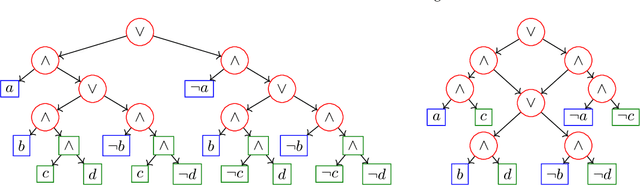

Apr 17, 2023

Abstract:Argumentation problems are concerned with determining the acceptability of a set of arguments from their relational structure. When the available information is uncertain, probabilistic argumentation frameworks provide modelling tools to account for it. The first contribution of this paper is a novel interpretation of probabilistic argumentation frameworks as probabilistic logic programs. Probabilistic logic programs are logic programs in which some of the facts are annotated with probabilities. We show that the programs representing probabilistic argumentation frameworks do not satisfy a common assumption in probabilistic logic programming (PLP) semantics, namely that probabilistic facts fully capture the uncertainty in the domain under investigation. The second contribution of this paper is then a novel PLP semantics for programs where a choice of probabilistic facts does not uniquely determine the truth assignment of the logical atoms. The third contribution of this paper is the implementation of a PLP system supporting this semantics: smProbLog. smProbLog is a novel PLP framework based on the probabilistic logic programming language ProbLog. smProbLog supports many inference and learning tasks typical of PLP, which, together with our first contribution, provide novel reasoning tools for probabilistic argumentation. We evaluate our approach with experiments analyzing the computational cost of the proposed algorithms and their application to a dataset of argumentation problems.
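The situation described in this abstract, where one choice of probabilistic facts admits several truth assignments, can be illustrated with a small brute-force sketch. It is not the smProbLog implementation. The program (fact `e`, mutually attacking atoms `a` and `b`) is hypothetical, and the abstract does not say how a world's probability is divided among its stable models; the sketch assumes an even split purely for illustration.

```python
from itertools import combinations, product

# Hypothetical program, written in ProbLog-like notation:
#   0.6::e.
#   a :- not b.
#   b :- e, not a.
FACTS = {"e": 0.6}                                  # probabilistic facts
RULES = [("a", [], ["b"]), ("b", ["e"], ["a"])]     # (head, positive body, negative body)
ATOMS = ["a", "b"]                                  # atoms defined by rules

def least_model(facts, definite_rules):
    """Least model of a negation-free program by forward chaining."""
    model = set(facts)
    changed = True
    while changed:
        changed = False
        for head, pos in definite_rules:
            if head not in model and all(p in model for p in pos):
                model.add(head)
                changed = True
    return model

def stable_models(chosen_facts):
    """Enumerate stable models of one world via the Gelfond-Lifschitz reduct."""
    models = []
    for size in range(len(ATOMS) + 1):
        for subset in combinations(ATOMS, size):
            candidate = set(chosen_facts) | set(subset)
            # Drop rules blocked by the candidate, then drop negative literals.
            reduct = [(h, pos) for h, pos, neg in RULES
                      if not any(n in candidate for n in neg)]
            if least_model(chosen_facts, reduct) == candidate:
                models.append(candidate)
    return models

def query_probability(query):
    names = list(FACTS)
    total = 0.0
    for choice in product([True, False], repeat=len(names)):
        weight = 1.0
        for name, included in zip(names, choice):
            weight *= FACTS[name] if included else 1.0 - FACTS[name]
        chosen = [n for n, inc in zip(names, choice) if inc]
        models = stable_models(chosen)
        if not models:
            continue  # worlds without stable models are skipped in this sketch
        # ASSUMPTION: the world's probability is split evenly over its models.
        share = weight / len(models)
        total += share * sum(1 for m in models if query in m)
    return total

print(round(query_probability("a"), 4), round(query_probability("b"), 4))
```

When `e` is true the program has two stable models, one containing `a` and one containing `b`, so the choice of probabilistic facts alone does not fix the truth values; when `e` is false only `a` holds.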

Neural Probabilistic Logic Programming in Discrete-Continuous Domains

Mar 14, 2023

Abstract:Neural-symbolic AI (NeSy) allows neural networks to exploit symbolic background knowledge in the form of logic. It has been shown to aid learning in the limited data regime and to facilitate inference on out-of-distribution data. Probabilistic NeSy focuses on integrating neural networks with both logic and probability theory, which additionally allows learning under uncertainty. A major limitation of current probabilistic NeSy systems, such as DeepProbLog, is their restriction to finite probability distributions, i.e., discrete random variables. In contrast, deep probabilistic programming (DPP) excels in modelling and optimising continuous probability distributions. Hence, we introduce DeepSeaProbLog, a neural probabilistic logic programming language that incorporates DPP techniques into NeSy. Doing so results in the support of inference and learning of both discrete and continuous probability distributions under logical constraints. Our main contributions are 1) the semantics of DeepSeaProbLog and its corresponding inference algorithm, 2) a proven asymptotically unbiased learning algorithm, and 3) a series of experiments that illustrate the versatility of our approach.

Declarative Probabilistic Logic Programming in Discrete-Continuous Domains

Feb 21, 2023

Abstract:Over the past three decades, the logic programming paradigm has been successfully expanded to support probabilistic modeling, inference and learning. The resulting paradigm of probabilistic logic programming (PLP) and its programming languages owes much of its success to a declarative semantics, the so-called distribution semantics. However, the distribution semantics is limited to discrete random variables only. While PLP has been extended in various ways for supporting hybrid, that is, mixed discrete and continuous random variables, we are still lacking a declarative semantics for hybrid PLP that not only generalizes the distribution semantics and the modeling language but also the standard inference algorithm that is based on knowledge compilation. We contribute the hybrid distribution semantics together with the hybrid PLP language DC-ProbLog and its inference engine infinitesimal algebraic likelihood weighting (IALW). These have the original distribution semantics, standard PLP languages such as ProbLog, and standard inference engines for PLP based on knowledge compilation as special cases. Thus, we generalize the state-of-the-art of PLP towards hybrid PLP in three different aspects: semantics, language and inference. Furthermore, IALW is the first inference algorithm for hybrid probabilistic programming based on knowledge compilation.

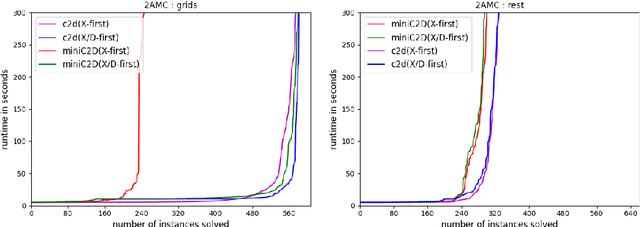

Efficient Knowledge Compilation Beyond Weighted Model Counting

May 16, 2022

Abstract:Quantitative extensions of logic programming often require the solution of so-called second level inference tasks, i.e., problems that involve a third operation, such as maximization or normalization, on top of addition and multiplication, and thus go beyond the well-known weighted or algebraic model counting setting of probabilistic logic programming under the distribution semantics. We introduce Second Level Algebraic Model Counting (2AMC) as a generic framework for these kinds of problems. As 2AMC is to (algebraic) model counting what forall-exists-SAT is to propositional satisfiability, it is notoriously hard to solve. First level techniques based on Knowledge Compilation (KC) have been adapted for specific 2AMC instances by imposing variable order constraints on the resulting circuit. However, those constraints can severely increase the circuit size and thus decrease the efficiency of such approaches. We show that we can exploit the logical structure of a 2AMC problem to omit parts of these constraints, thus limiting the negative effect. Furthermore, we introduce and implement a strategy to generate a sufficient set of constraints statically, with a priori guarantees for the performance of KC. Our empirical evaluation on several benchmarks and tasks confirms that our theoretical results can translate into more efficient solving in practice. Under consideration for acceptance in TPLP.
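The layering of a third operation on top of addition and multiplication can be made concrete with a naive enumeration. The sketch below is only meant to show the shape of such a task (maximization over outer variables of a weighted model count over inner variables); it is not the knowledge-compilation approach of the paper, and the variables, weights and formula are all made up for illustration.

```python
from itertools import product

# Hypothetical toy instance: one outer (maximised) variable and two inner
# (summed-out) variables with made-up weights for being true.
OUTER = ["d"]
INNER = {"x": 0.3, "y": 0.5}

def formula(v):
    # Hypothetical propositional theory: (d and x) or (not d and y)
    return (v["d"] and v["x"]) or (not v["d"] and v["y"])

def inner_sum(outer_assignment):
    """First level: sum over inner assignments of the product of weights."""
    names = list(INNER)
    total = 0.0
    for values in product([True, False], repeat=len(names)):
        v = dict(outer_assignment)
        v.update(zip(names, values))
        if formula(v):
            weight = 1.0
            for name in names:
                weight *= INNER[name] if v[name] else 1.0 - INNER[name]
            total += weight
    return total

def max_over_outer():
    """Second level: maximise the inner sum over all outer assignments."""
    best = None
    for values in product([True, False], repeat=len(OUTER)):
        assignment = dict(zip(OUTER, values))
        score = inner_sum(assignment)
        if best is None or score > best[0]:
            best = (score, assignment)
    return best

score, assignment = max_over_outer()
print(round(score, 4), assignment)
```

The enumeration is exponential in both variable sets, which is the cost that circuit-based techniques such as those discussed in the abstract aim to avoid.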

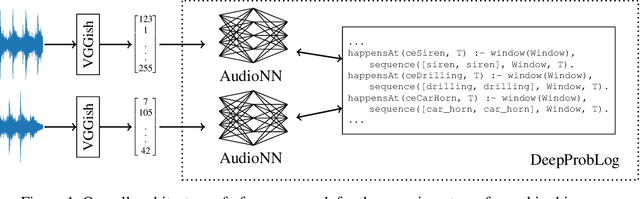

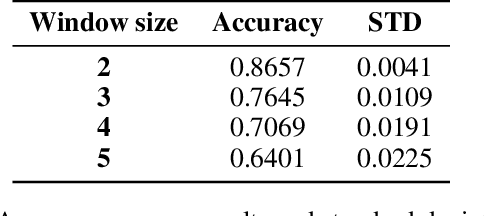

Using DeepProbLog to perform Complex Event Processing on an Audio Stream

Oct 15, 2021

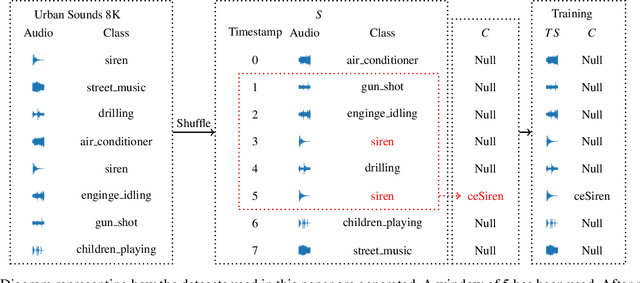

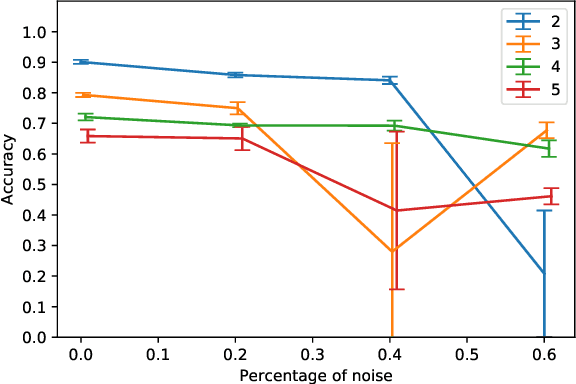

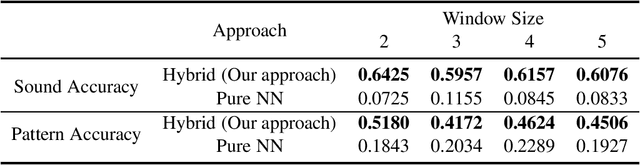

Abstract:In this paper, we present an approach to Complex Event Processing (CEP) that is based on DeepProbLog. This approach has the following objectives: (i) allowing the use of subsymbolic data as an input, (ii) retaining flexibility and modularity in the definition of complex event rules, (iii) allowing the system to be trained in an end-to-end manner and (iv) being robust against noisily labelled data. Our approach uses DeepProbLog to create a neuro-symbolic architecture that combines a neural network, which processes the subsymbolic data, with a probabilistic logic layer in which the user defines the rules for the complex events. We demonstrate that our approach is capable of detecting complex events from an audio stream. We also demonstrate that it can be trained even on a dataset that has a moderate proportion of noisy data.

SMProbLog: Stable Model Semantics in ProbLog and its Applications in Argumentation

Oct 07, 2021

Abstract:We introduce SMProbLog, a generalization of the probabilistic logic programming language ProbLog. A ProbLog program defines a distribution over logic programs by specifying for each clause the probability that it belongs to a randomly sampled program, and these probabilities are mutually independent. The semantics of ProbLog is given by the success probability of a query, which corresponds to the probability that the query succeeds in a randomly sampled program. It is well-defined when each random sample uniquely determines the truth values of all logical atoms. Argumentation problems, however, represent an interesting practical application where this is not always the case. SMProbLog generalizes the semantics of ProbLog to the setting where multiple truth assignments are possible for a randomly sampled program, and implements the corresponding algorithms for both inference and learning tasks. We then show how this novel framework can be used to reason about probabilistic argumentation problems. The key contributions of this paper are therefore: a more general semantics for ProbLog programs, its implementation in a probabilistic programming framework for both inference and parameter learning, and a novel approach to probabilistic argumentation problems based on this framework.
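The success probability described in this abstract can be computed by brute force for a tiny program, as in the following sketch. The facts, rules and probabilities are hypothetical, and the enumeration stands in for the actual ProbLog inference machinery, which is not shown here. It covers only the well-defined case where each sampled program has exactly one model.

```python
from itertools import product

# Hypothetical program, written in ProbLog-like notation:
#   0.3::burglary.   0.2::earthquake.
#   alarm :- burglary.
#   alarm :- earthquake.
FACTS = {"burglary": 0.3, "earthquake": 0.2}
RULES = [("alarm", ["burglary"]), ("alarm", ["earthquake"])]  # (head, body)

def least_model(true_facts):
    """Forward chaining: the unique model of a sampled definite program."""
    model = set(true_facts)
    changed = True
    while changed:
        changed = False
        for head, body in RULES:
            if head not in model and all(b in model for b in body):
                model.add(head)
                changed = True
    return model

def success_probability(query):
    """Sum the probabilities of all sampled programs in which the query holds."""
    names = list(FACTS)
    total = 0.0
    for choice in product([True, False], repeat=len(names)):
        # Facts are independent, so the sample's probability is a product.
        weight = 1.0
        for name, included in zip(names, choice):
            weight *= FACTS[name] if included else 1.0 - FACTS[name]
        chosen = [n for n, inc in zip(names, choice) if inc]
        if query in least_model(chosen):
            total += weight
    return total

print(round(success_probability("alarm"), 4))  # 0.44, i.e. 1 - 0.7 * 0.8
```

Because the rules contain no negation, every sample has a single least model, which is exactly the condition under which the abstract calls the semantics well-defined.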

Handling Epistemic and Aleatory Uncertainties in Probabilistic Circuits

Feb 22, 2021

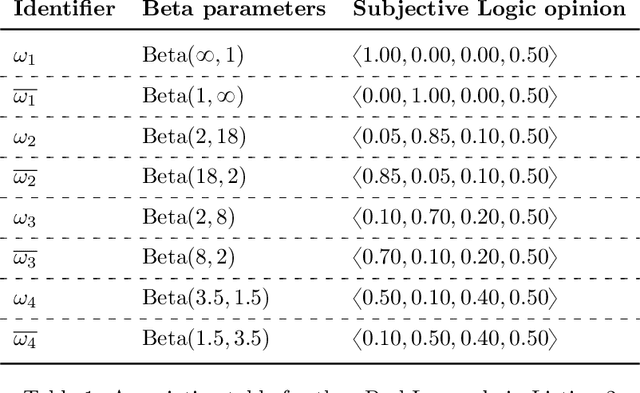

Abstract:When collaborating with an AI system, we need to assess when to trust its recommendations. If we mistakenly trust it in regions where it is likely to err, catastrophic failures may occur, hence the need for Bayesian approaches for probabilistic reasoning in order to determine the confidence (or epistemic uncertainty) in the probabilities in light of the training data. We propose an approach to overcome the independence assumption behind most of the approaches dealing with a large class of probabilistic reasoning that includes Bayesian networks as well as several instances of probabilistic logic. We provide an algorithm for Bayesian learning from sparse, albeit complete, observations, and for deriving inferences and their confidences keeping track of the dependencies between variables when they are manipulated within the unifying computational formalism provided by probabilistic circuits. Each leaf of such circuits is labelled with a beta-distributed random variable that provides us with an elegant framework for representing uncertain probabilities. We achieve better estimation of epistemic uncertainty than state-of-the-art approaches, including highly engineered ones, while being able to handle general circuits and with just a modest increase in the computational effort compared to using point probabilities.

Proceedings 36th International Conference on Logic Programming (Technical Communications)

Sep 19, 2020

Abstract:Since the first conference held in Marseille in 1982, ICLP has been the premier international event for presenting research in logic programming. Contributions are solicited in all areas of logic programming and related areas, including but not restricted to:

- Foundations: Semantics, Formalisms, Answer-Set Programming, Non-monotonic Reasoning, Knowledge Representation.
- Declarative Programming: Inference engines, Analysis, Type and mode inference, Partial evaluation, Abstract interpretation, Transformation, Validation, Verification, Debugging, Profiling, Testing, Logic-based domain-specific languages, Constraint handling rules.
- Related Paradigms and Synergies: Inductive and Co-inductive Logic Programming, Constraint Logic Programming, Interaction with SAT, SMT and CSP solvers, Logic programming techniques for type inference and theorem proving, Argumentation, Probabilistic Logic Programming, Relations to object-oriented and functional programming, Description logics, Neural-Symbolic Machine Learning, Hybrid Deep Learning and Symbolic Reasoning.
- Implementation: Concurrency and distribution, Objects, Coordination, Mobility, Virtual machines, Compilation, Higher Order, Type systems, Modules, Constraint handling rules, Meta-programming, Foreign interfaces, User interfaces.
- Applications: Databases, Big Data, Data Integration and Federation, Software Engineering, Natural Language Processing, Web and Semantic Web, Agents, Artificial Intelligence, Bioinformatics, Education, Computational life sciences, Cybersecurity, and Robotics.

A Hybrid Neuro-Symbolic Approach for Complex Event Processing

Sep 18, 2020

Abstract:Training a model to detect patterns of interrelated events that form situations of interest can be a complex problem: such situations tend to be uncommon, and only sparse data is available. We propose a hybrid neuro-symbolic architecture based on Event Calculus that can perform Complex Event Processing (CEP). It leverages both a neural network to interpret inputs and logical rules that express the pattern of the complex event. Our approach can be trained with far less labelled data than a pure neural network approach, and it learns to classify individual events even when trained in an end-to-end manner. We demonstrate this by comparing our approach against a pure neural network approach on a dataset based on Urban Sounds 8K.

The current state of automated negotiation theory: a literature review

Mar 30, 2020

Abstract:Automated negotiation can be an efficient method for resolving conflict and redistributing resources in a coalition setting. Automated negotiation has already seen increased usage in fields such as e-commerce and power distribution in smart grids, and recent advancements in opponent modelling have proven to deliver better outcomes. However, significant barriers to more widespread adoption remain, such as the lack of predictable outcomes over time and of user trust. Additionally, there have been many recent advancements in the field of reasoning about uncertainty, which could help alleviate both of those problems. As there is no recent survey on these two fields, and specifically none on their possible intersection, we aim to provide such a survey here.
