Marc Roig Vilamala

Analysing Explanation-Related Interactions in Collaborative Perception-Cognition-Communication-Action

Nov 19, 2024

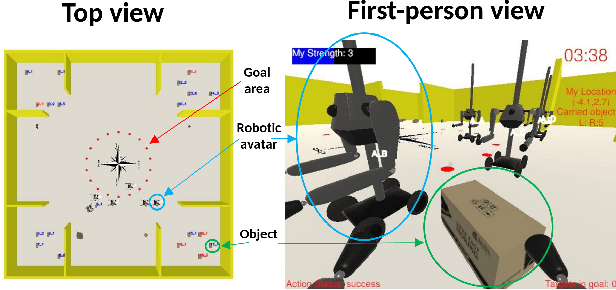

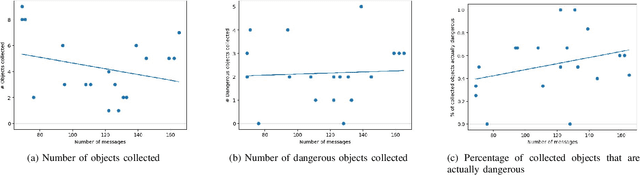

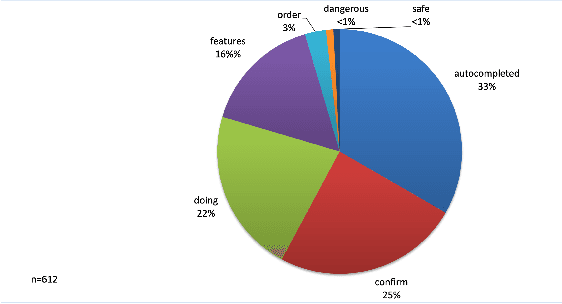

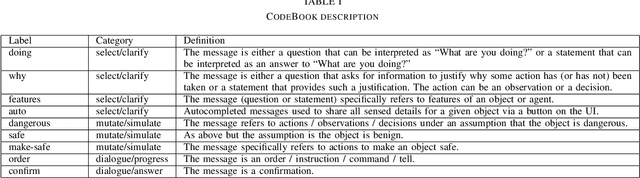

Abstract: Effective communication is essential in collaborative tasks, so AI-equipped robots working alongside humans need to be able to explain their behaviour in order to cooperate effectively and earn trust. We analyse and classify communications among human participants collaborating to complete a simulated emergency response task. The analysis identifies messages that relate to various kinds of interactive explanations identified in the explainable AI literature. This allows us to understand what types of explanation humans expect from their teammates in such settings, and thus where AI-equipped robots most need explanation capabilities. We find that most explanation-related messages seek clarification of the decisions or actions taken. We also confirm that messages have an impact on performance in our simulated task.

* 4 pages, 3 figures, published as a Late Breaking Report in RO-MAN 2024

Knowledge from Uncertainty in Evidential Deep Learning

Oct 19, 2023

Abstract: This work reveals an evidential signal that emerges from the uncertainty value in Evidential Deep Learning (EDL). EDL is one example of a class of uncertainty-aware deep learning approaches designed to provide confidence (or epistemic uncertainty) about the current test sample. In particular for computer vision and bidirectional encoder large language models, the 'evidential signal' arising from the Dirichlet strength in EDL can, in some cases, discriminate between classes, an effect that is particularly strong when using large language models. We hypothesise that the KL regularisation term causes EDL to couple aleatoric and epistemic uncertainty. In this paper, we empirically investigate the correlations between misclassification and evaluated uncertainty, and show that EDL's 'evidential signal' is due to misclassification bias. We critically evaluate EDL with other Dirichlet-based approaches, namely Generative Evidential Neural Networks (EDL-GEN) and Prior Networks, and show theoretically and empirically the differences between these loss functions. We conclude that EDL's coupling of uncertainty arises from these differences due to the use (or lack) of out-of-distribution samples during training.
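For readers unfamiliar with the quantities named in this abstract, the sketch below shows how a Dirichlet strength and an uncertainty value are commonly derived from per-class evidence in the subjective-logic formulation of EDL. It is an illustration under stated assumptions, not code from the paper: the function name `edl_uncertainty` is hypothetical, and the mapping `alpha = evidence + 1` with uncertainty `K / S` is the usual textbook form, which may differ from the exact setup the authors evaluate.

```python
def edl_uncertainty(evidence):
    """Return (expected class probabilities, uncertainty) for one sample.

    `evidence` is a list of non-negative per-class evidence values, such as
    the output of a network's final non-negative activation (assumption).
    """
    if any(e < 0 for e in evidence):
        raise ValueError("evidence must be non-negative")
    num_classes = len(evidence)
    # Dirichlet concentration parameters: one pseudo-count per class plus evidence.
    alpha = [e + 1.0 for e in evidence]
    # Dirichlet strength S is the sum of the concentration parameters.
    strength = sum(alpha)
    # Expected class probabilities under the Dirichlet.
    probs = [a / strength for a in alpha]
    # Uncertainty shrinks from 1 towards 0 as total evidence grows.
    uncertainty = num_classes / strength
    return probs, uncertainty


if __name__ == "__main__":
    print(edl_uncertainty([0.0, 0.0, 0.0]))  # no evidence: maximal uncertainty
    print(edl_uncertainty([9.0, 0.0, 0.0]))  # strong evidence for class 0
```

Because the uncertainty depends only on the Dirichlet strength, any systematic difference in total evidence between classes will show up in the uncertainty value, which is the kind of signal the abstract discusses.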

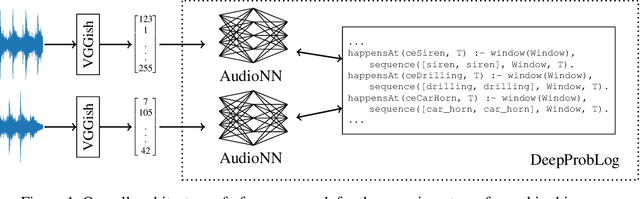

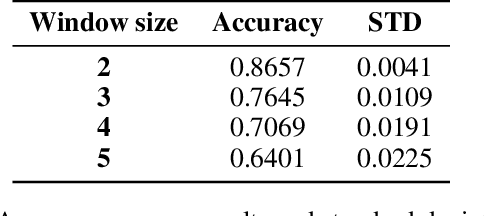

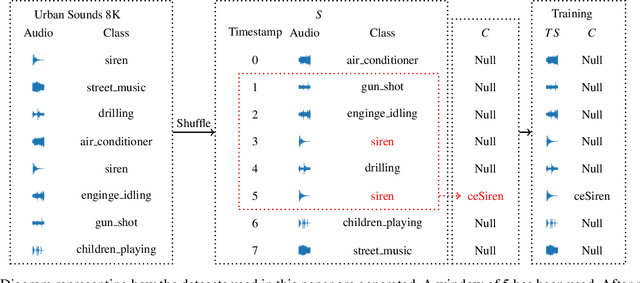

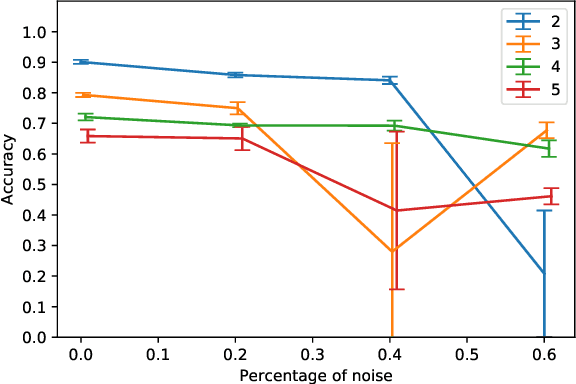

Using DeepProbLog to perform Complex Event Processing on an Audio Stream

Oct 15, 2021

Abstract: In this paper, we present an approach to Complex Event Processing (CEP) that is based on DeepProbLog. This approach has the following objectives: (i) allowing the use of subsymbolic data as an input, (ii) retaining flexibility and modularity in the definition of complex event rules, (iii) allowing the system to be trained in an end-to-end manner and (iv) being robust against noisily labelled data. Our approach makes use of DeepProbLog to create a neuro-symbolic architecture that combines a neural network to process the subsymbolic data with a probabilistic logic layer to allow the user to define the rules for the complex events. We demonstrate that our approach is capable of detecting complex events from an audio stream. We also demonstrate that our approach can be trained even on a dataset that has a moderate proportion of noisy data.

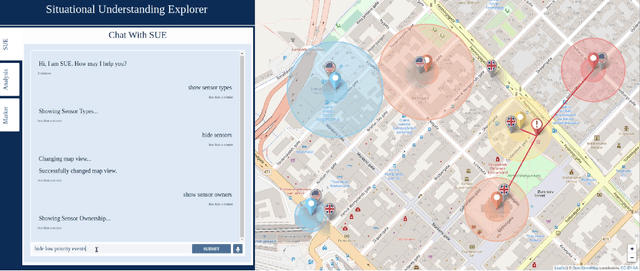

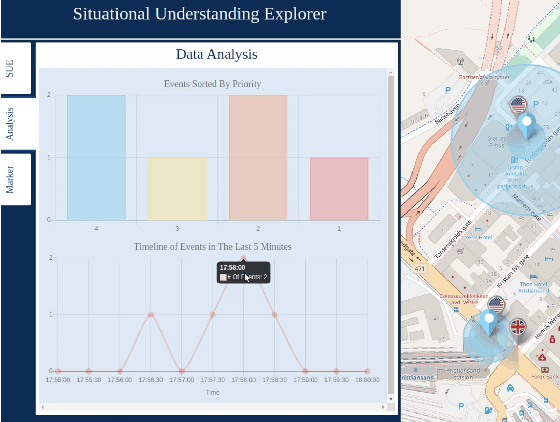

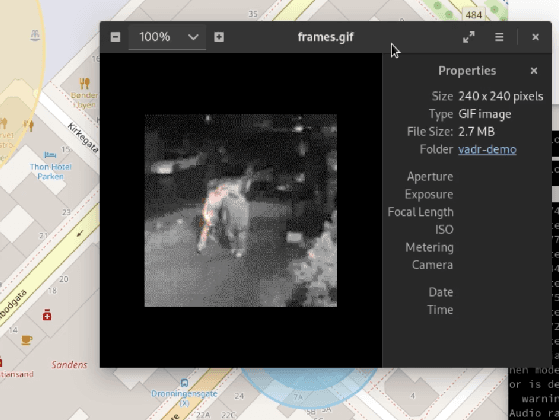

An Experimentation Platform for Explainable Coalition Situational Understanding

Nov 09, 2020

Abstract: We present an experimentation platform for coalition situational understanding research that highlights capabilities in explainable artificial intelligence/machine learning (AI/ML) and integration of symbolic and subsymbolic AI/ML approaches for event processing. The Situational Understanding Explorer (SUE) platform is designed to be lightweight and open, and to make experiments and demonstrations easy to run. We discuss our requirements to support coalition multi-domain operations with emphasis on asset interoperability and ad hoc human-machine teaming in a dense urban terrain setting. We describe the interface functionality and give examples of SUE applied to coalition situational understanding tasks.

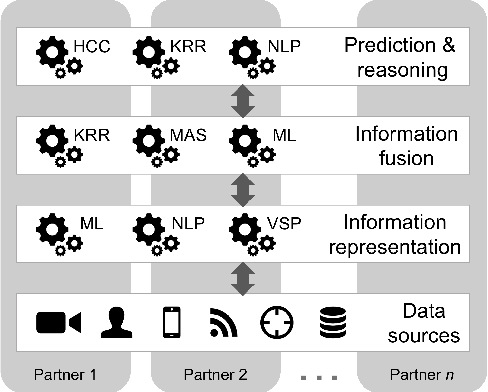

Towards human-agent knowledge fusion (HAKF) in support of distributed coalition teams

Oct 23, 2020

Abstract: Future coalition operations can be substantially augmented through agile teaming between human and machine agents, but in a coalition context these agents may be unfamiliar to the human users and expected to operate in a broad set of scenarios rather than being narrowly defined for particular purposes. In such a setting it is essential that the human agents can rapidly build trust in the machine agents through appropriate transparency of their behaviour, e.g., through explanations. The human agents are also able to bring their local knowledge to the team, observing the situation unfolding and deciding which key information should be communicated to the machine agents to enable them to better account for the particular environment. In this paper we describe the initial steps towards this human-agent knowledge fusion (HAKF) environment through a recap of the key requirements, and an explanation of how these can be fulfilled for an example situation. We show how HAKF has the potential to bring value to both human and machine agents working as part of a distributed coalition team in a complex event processing setting with uncertain sources.

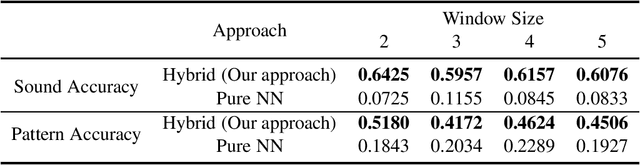

A Hybrid Neuro-Symbolic Approach for Complex Event Processing

Sep 18, 2020

Abstract: Training a model to detect patterns of interrelated events that form situations of interest can be a complex problem: such situations tend to be uncommon, and only sparse data is available. We propose a hybrid neuro-symbolic architecture based on Event Calculus that can perform Complex Event Processing (CEP). It leverages both a neural network to interpret inputs and logical rules that express the pattern of the complex event. Our approach is capable of training with far less labelled data than a pure neural network approach, and of learning to classify individual events even when trained in an end-to-end manner. We demonstrate this by comparing our approach against a pure neural network approach on a dataset based on Urban Sounds 8K.
