Filip Ginter

Creating a Historical Migration Dataset from Finnish Church Records, 1800-1920

Jun 09, 2025

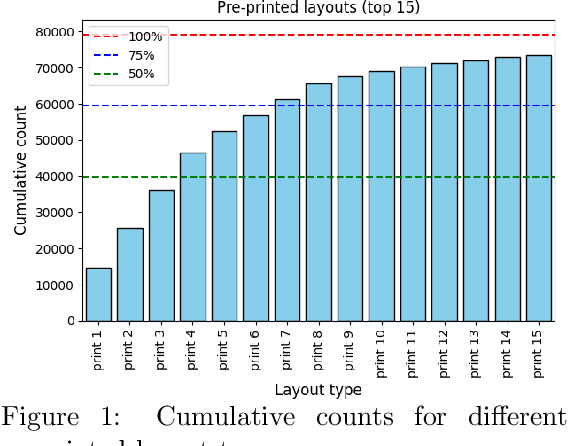

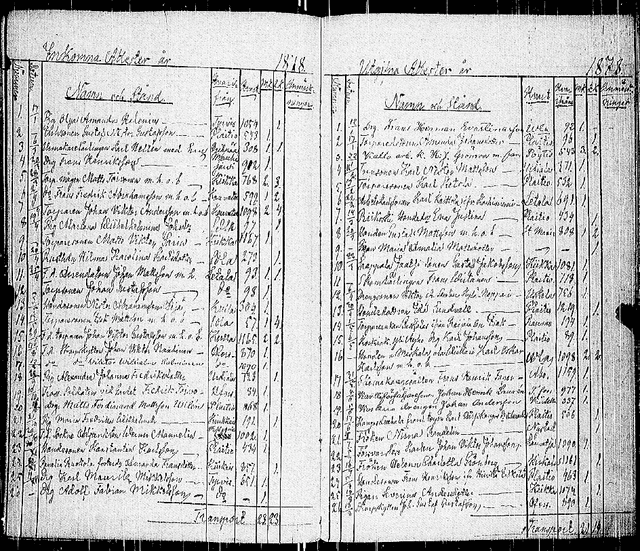

Abstract: This article presents a large-scale effort to create a structured dataset of internal migration in Finland between 1800 and 1920 using digitized church moving records. These records, maintained by Evangelical-Lutheran parishes, document the migration of individuals and families and offer a valuable source for studying historical demographic patterns. The dataset includes over six million entries extracted from approximately 200,000 images of handwritten migration records. The data extraction process was automated using a deep learning pipeline that included layout analysis, table detection, cell classification, and handwriting recognition. The complete pipeline was applied to all images, resulting in a structured dataset suitable for research. The dataset can be used to study internal migration, urbanization, family migration, and the spread of disease in preindustrial Finland. A case study from the Elimäki parish shows how local migration histories can be reconstructed. The work demonstrates how large volumes of handwritten archival material can be transformed into structured data to support historical and demographic research.

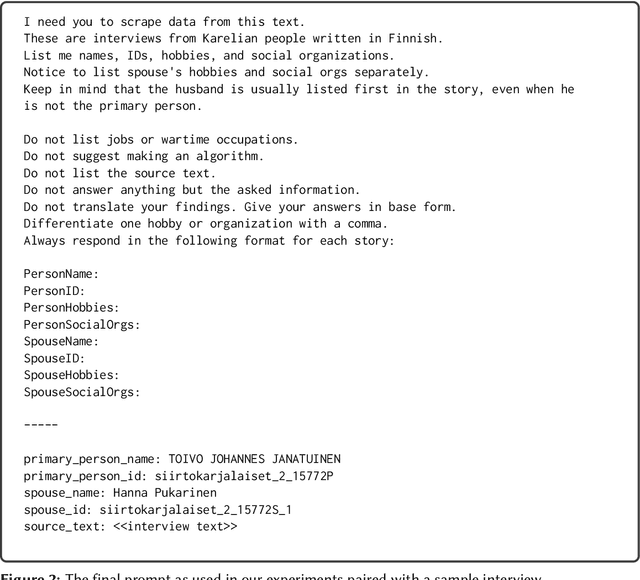

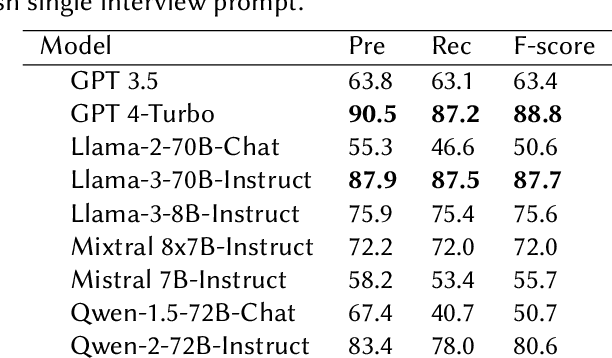

Extracting Social Connections from Finnish Karelian Refugee Interviews Using LLMs

Feb 19, 2025

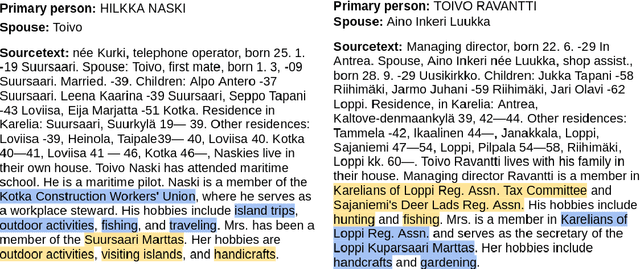

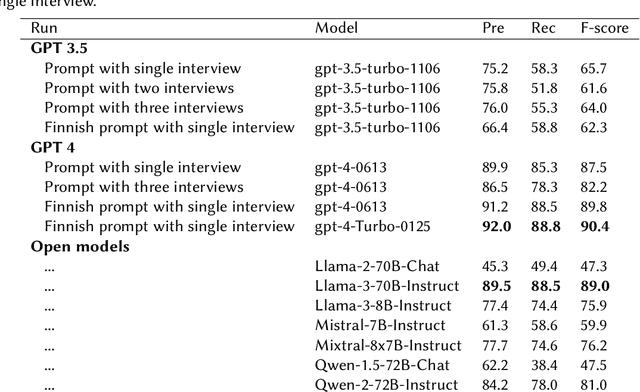

Abstract: We performed a zero-shot information extraction study on a historical collection of 89,339 brief Finnish-language interviews of refugee families relocated post-WWII from Finnish Eastern Karelia. Our research objective is two-fold. First, we aim to extract social organizations and hobbies from the free text of the interviews, separately for each family member. These can act as a proxy variable indicating the degree of social integration of refugees in their new environment. Second, we aim to evaluate several alternative ways to approach this task, comparing a number of generative models and a supervised learning approach, to gain a broader insight into the relative merits of these different approaches and their applicability in similar studies. We find that the best generative model (GPT-4) is roughly on par with human performance, at an F-score of 88.8%. Interestingly, the best open generative model (Llama-3-70B-Instruct) reaches almost the same performance, at 87.7% F-score, demonstrating that open models are becoming a viable alternative for some practical tasks even on non-English data. Additionally, we test a supervised learning alternative, where we fine-tune a Finnish BERT model (FinBERT) using GPT-4 generated training data. With this method, we achieve an F-score of 84.1% with as few as 6K interviews, rising to 86.3% with 30K interviews. Such an approach would be particularly appealing in cases where computational resources are limited or there is a substantial mass of data to process.
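To make the F-score figures above concrete, the following is a minimal sketch of a micro-averaged F-score over sets of extracted items. The abstract does not say how the score is computed, so the set-based, exact-match, micro-averaged formulation here is an assumption, and the function name `micro_f_score` and the example data are hypothetical.

```python
from typing import List, Set


def micro_f_score(gold: List[Set[str]], pred: List[Set[str]]) -> float:
    """Micro-averaged F-score over per-person sets of extracted items.

    gold[i] and pred[i] hold the reference and predicted items (for
    example organizations and hobbies) for the same person. Exact string
    match is assumed; this is an illustrative choice, not the paper's.
    """
    true_positives = sum(len(g & p) for g, p in zip(gold, pred))
    n_pred = sum(len(p) for p in pred)
    n_gold = sum(len(g) for g in gold)
    precision = true_positives / n_pred if n_pred else 0.0
    recall = true_positives / n_gold if n_gold else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical example: two family members, three gold items in total.
gold_items = [{"choir", "scouts"}, {"football"}]
pred_items = [{"choir"}, {"football", "chess"}]
score = micro_f_score(gold_items, pred_items)
```

Here two of the three predicted items are correct and two of the three gold items are found, so precision and recall are both 2/3.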

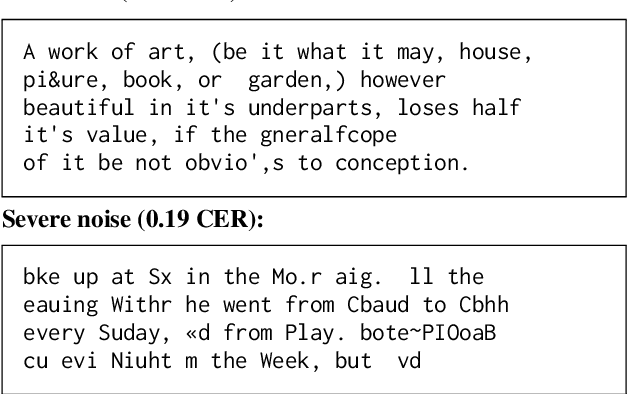

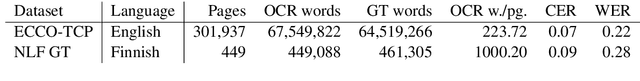

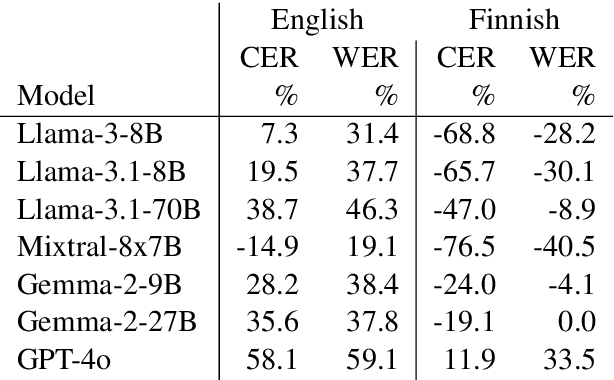

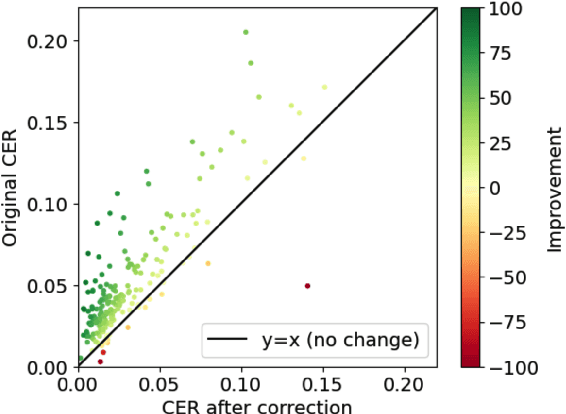

OCR Error Post-Correction with LLMs in Historical Documents: No Free Lunches

Feb 03, 2025

Abstract: Optical Character Recognition (OCR) systems often introduce errors when transcribing historical documents, leaving room for post-correction to improve text quality. This study evaluates the use of open-weight LLMs for OCR error correction in historical English and Finnish datasets. We explore various strategies, including parameter optimization, quantization, segment length effects, and text continuation methods. Our results demonstrate that while modern LLMs show promise in reducing character error rates (CER) in English, a practically useful performance for Finnish was not reached. Our findings highlight the potential and limitations of LLMs in scaling OCR post-correction for large historical corpora.
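The character error rate mentioned above is commonly defined as the character-level edit distance between the OCR output and a reference transcription, divided by the reference length. The sketch below follows that common definition; whether the study applies any normalization (case, whitespace) before scoring is not stated in the abstract, so none is applied here. The function names are illustrative.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance counting insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate of a hypothesis against a reference."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return levenshtein(reference, hypothesis) / len(reference)


# One substituted character out of four gives a CER of 0.25.
example_cer = cer("abcd", "abxd")
```

Post-correction is useful when the CER of the corrected text against the reference is lower than the CER of the raw OCR output against the same reference.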

FinerWeb-10BT: Refining Web Data with LLM-Based Line-Level Filtering

Jan 13, 2025

Abstract: Data quality is crucial for training Large Language Models (LLMs). Traditional heuristic filters often miss low-quality text or mistakenly remove valuable content. In this paper, we introduce an LLM-based line-level filtering method to enhance training data quality. We use GPT-4o mini to label a 20,000-document sample from FineWeb at the line level, allowing the model to create descriptive labels for low-quality lines. These labels are grouped into nine main categories, and we train a DeBERTa-v3 classifier to scale the filtering to a 10B-token subset of FineWeb. To test the impact of our filtering, we train GPT-2 models on both the original and the filtered datasets. The results show that models trained on the filtered data achieve higher accuracy on the HellaSwag benchmark and reach their performance targets faster, even with up to 25% less data. This demonstrates that LLM-based line-level filtering can significantly improve data quality and training efficiency for LLMs. We release our quality-annotated dataset, FinerWeb-10BT, and the codebase to support further work in this area.

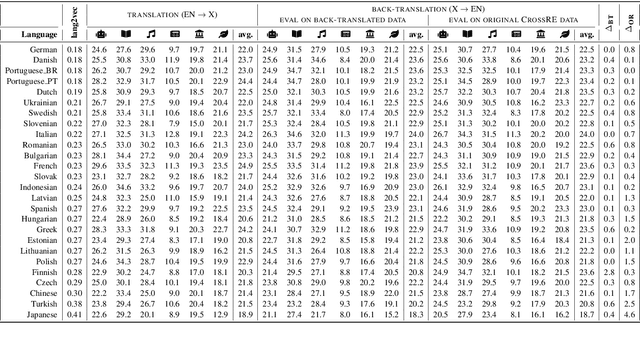

Finnish SQuAD: A Simple Approach to Machine Translation of Span Annotations

Jan 10, 2025

Abstract: We apply a simple method to machine translate datasets with span-level annotation using the DeepL MT service and its ability to translate formatted documents. Using this method, we produce a Finnish version of the SQuAD2.0 question answering dataset and train QA retriever models on this new dataset. We evaluate the quality of the dataset and more generally the MT method through direct evaluation, indirect comparison to other similar datasets, a backtranslation experiment, as well as through the performance of downstream trained QA models. In all these evaluations, we find that the method of transfer is not only simple to use but produces consistently better translated data. Given its good performance on the SQuAD dataset, it is likely the method can be used to translate other similar span-annotated datasets for other tasks and languages as well. All code and data are available under an open license: data at HuggingFace TurkuNLP/squad_v2_fi, code on GitHub TurkuNLP/squad2-fi, and model at HuggingFace TurkuNLP/bert-base-finnish-cased-squad2.
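One way to carry span annotations through a translator that preserves formatting is to wrap the annotated span in markup before translation and read its offsets back from the translated text. The sketch below illustrates that general idea only. The choice of a `<b>` tag, the helper names `mark_span` and `recover_span`, and the hand-written "translated" string are all assumptions; no translation service is called, and the paper's actual document format is not described in the abstract.

```python
import re
from typing import Optional, Tuple


def mark_span(context: str, start: int, end: int, tag: str = "b") -> str:
    """Wrap context[start:end] in <tag>...</tag> so formatting survives MT."""
    return f"{context[:start]}<{tag}>{context[start:end]}</{tag}>{context[end:]}"


def recover_span(marked: str, tag: str = "b") -> Optional[Tuple[str, int, int]]:
    """Strip the first <tag>...</tag> pair and return (text, start, end).

    Returns None when the tag pair did not survive translation.
    """
    t = re.escape(tag)
    match = re.search(f"<{t}>(.*?)</{t}>", marked, flags=re.DOTALL)
    if match is None:
        return None
    answer = match.group(1)
    plain = marked[:match.start()] + answer + marked[match.end():]
    start = match.start()
    return plain, start, start + len(answer)


# English context with the answer span "Turku" at characters 0..5.
marked_en = mark_span("Turku is a city in Finland.", 0, 5)

# Hand-written stand-in for the translator's output (hypothetical).
marked_fi = "Kaupunki <b>Turku</b> on Suomessa."
text_fi, start_fi, end_fi = recover_span(marked_fi)
```

After recovery, `text_fi[start_fi:end_fi]` is the answer span in the translated context. Examples where the tag pair is lost or duplicated would need to be detected and discarded or handled separately.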

FinGPT: Large Generative Models for a Small Language

Nov 03, 2023

Abstract: Large language models (LLMs) excel in many tasks in NLP and beyond, but most open models have very limited coverage of smaller languages and LLM work tends to focus on languages where nearly unlimited data is available for pretraining. In this work, we study the challenges of creating LLMs for Finnish, a language spoken by less than 0.1% of the world population. We compile an extensive dataset of Finnish combining web crawls, news, social media and eBooks. We pursue two approaches to pretrain models: 1) we train seven monolingual models from scratch (186M to 13B parameters) dubbed FinGPT, 2) we continue the pretraining of the multilingual BLOOM model on a mix of its original training data and Finnish, resulting in a 176 billion parameter model we call BLUUMI. For model evaluation, we introduce FIN-bench, a version of BIG-bench with Finnish tasks. We also assess other model qualities such as toxicity and bias. Our models and tools are openly available at https://turkunlp.org/gpt3-finnish.

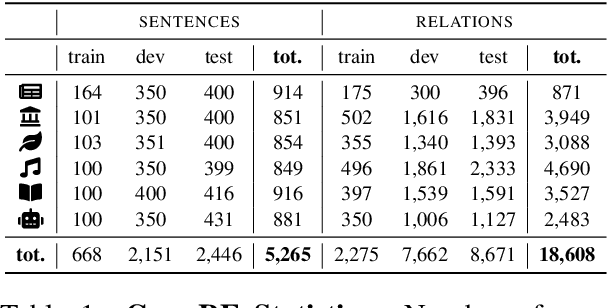

Multi-CrossRE: A Multi-Lingual Multi-Domain Dataset for Relation Extraction

May 18, 2023

Abstract: Most research in Relation Extraction (RE) involves the English language, mainly due to the lack of multi-lingual resources. We propose Multi-CrossRE, the broadest multi-lingual dataset for RE, including 26 languages in addition to English, and covering six text domains. Multi-CrossRE is a machine translated version of CrossRE (Bassignana and Plank, 2022), with a sub-portion including more than 200 sentences in seven diverse languages checked by native speakers. We run a baseline model over the 26 new datasets and, as a sanity check, over the 26 back-translations to English. Results on the back-translated data are consistent with the ones on the original English CrossRE, indicating high quality of the translation and the resulting dataset.

Silver Syntax Pre-training for Cross-Domain Relation Extraction

May 18, 2023

Abstract: Relation Extraction (RE) remains a challenging task, especially when considering realistic out-of-domain evaluations. One of the main reasons for this is the limited training size of current RE datasets: obtaining high-quality (manually annotated) data is extremely expensive and cannot realistically be repeated for each new domain. An intermediate training step on data from related tasks has been shown to be beneficial across many NLP tasks. However, this setup still requires supplementary annotated data, which is often not available. In this paper, we investigate intermediate pre-training specifically for RE. We exploit the affinity between syntactic structure and semantic RE, and identify the syntactic relations which are closely related to RE by being on the shortest dependency path between two entities. We then take advantage of the high accuracy of current syntactic parsers in order to automatically obtain large amounts of low-cost pre-training data. By pre-training our RE model on the relevant syntactic relations, we are able to outperform the baseline in five out of six cross-domain setups, without any additional annotated data.
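The shortest dependency path between two entity tokens can be found with a breadth-first search over the dependency tree treated as an undirected graph. The sketch below assumes a parse given as a list of head indices (with -1 marking the root); this input format, the function name, and the example parse are illustrative assumptions, not the paper's implementation.

```python
from collections import deque
from typing import Dict, List, Optional, Set


def shortest_dependency_path(heads: List[int], src: int, dst: int) -> List[int]:
    """Return token indices on the shortest path from src to dst.

    heads[i] is the index of token i's syntactic head, or -1 for the root.
    Edges are treated as undirected. Returns [] if no path exists.
    """
    adjacency: Dict[int, Set[int]] = {i: set() for i in range(len(heads))}
    for child, head in enumerate(heads):
        if head >= 0:
            adjacency[child].add(head)
            adjacency[head].add(child)

    parent: Dict[int, Optional[int]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for neighbour in sorted(adjacency[node]):
            if neighbour not in parent:
                parent[neighbour] = node
                queue.append(neighbour)

    if dst not in parent:
        return []
    path: List[int] = []
    current: Optional[int] = dst
    while current is not None:  # walk back from dst to src
        path.append(current)
        current = parent[current]
    return path[::-1]


# Hypothetical parse of "Marie Curie worked in Paris":
# Marie -> worked, Curie -> Marie, worked = root, in -> Paris, Paris -> worked
tokens = ["Marie", "Curie", "worked", "in", "Paris"]
heads = [2, 0, -1, 4, 2]
path = shortest_dependency_path(heads, 0, 4)
```

The dependency relations labelling the edges along such a path are the ones the abstract describes as closely related to RE; collecting those labels would require the parser's relation for each token in addition to the head indices used here.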

Identifying gender bias in blockbuster movies through the lens of machine learning

Nov 21, 2022

Abstract: The problem of gender bias is highly prevalent and well known. In this paper, we have analysed the portrayal of gender roles in English movies, a medium that effectively influences society in shaping people's beliefs and opinions. First, we gathered scripts of films from different genres and derived sentiments and emotions using natural language processing techniques. Afterwards, we converted the scripts into embeddings, i.e. a way of representing text in the form of vectors. With a thorough investigation, we found specific patterns in male and female characters' personality traits in movies that align with societal stereotypes. Furthermore, we used mathematical and machine learning techniques and found some biases wherein men are shown to be more dominant and envious than women, whereas women have more joyful roles in movies. In our work, we introduce, to the best of our knowledge, a novel technique to convert dialogues into an array of emotions by combining it with Plutchik's wheel of emotions. Our study aims to encourage reflections on gender equality in the domain of film and facilitate other researchers in analysing movies automatically instead of using manual approaches.

GEMv2: Multilingual NLG Benchmarking in a Single Line of Code

Jun 24, 2022

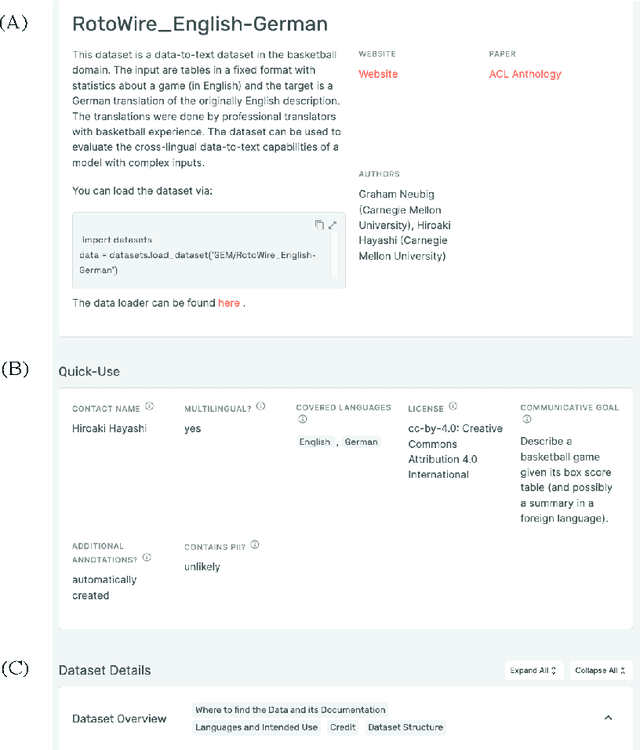

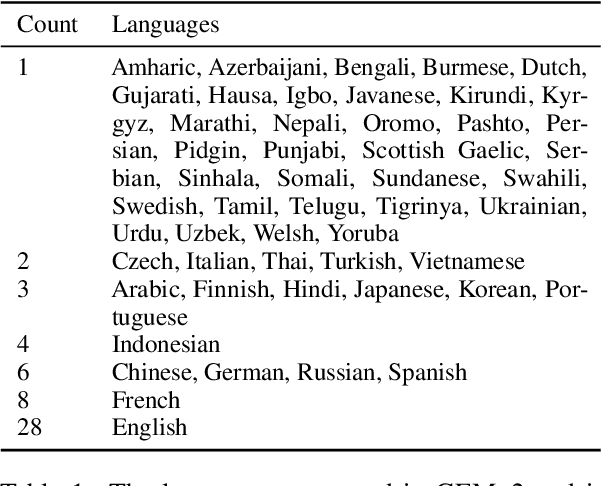

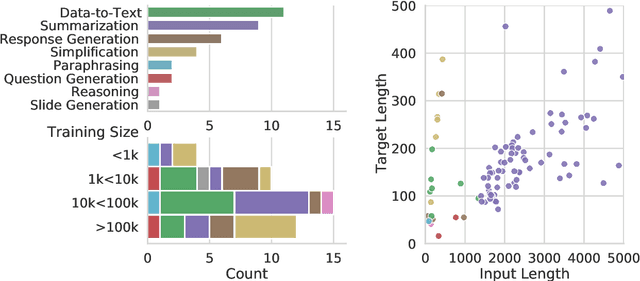

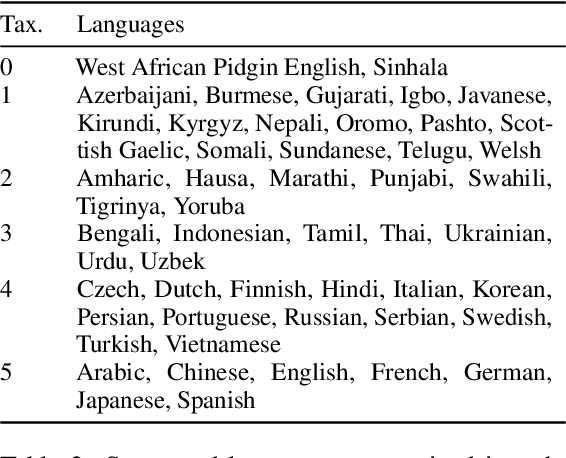

Abstract: Evaluation in machine learning is usually informed by past choices, for example which datasets or metrics to use. This standardization enables comparison on an equal footing using leaderboards, but the evaluation choices become sub-optimal as better alternatives arise. This problem is especially pertinent in natural language generation, which requires ever-improving suites of datasets, metrics, and human evaluation to make definitive claims. To make following best model evaluation practices easier, we introduce GEMv2. The new version of the Generation, Evaluation, and Metrics Benchmark introduces a modular infrastructure for dataset, model, and metric developers to benefit from each other's work. GEMv2 supports 40 documented datasets in 51 languages. Models for all datasets can be evaluated online and our interactive data card creation and rendering tools make it easier to add new datasets to the living benchmark.
