Eric Marcus

Towards Robust Foundation Models for Digital Pathology

Jul 22, 2025

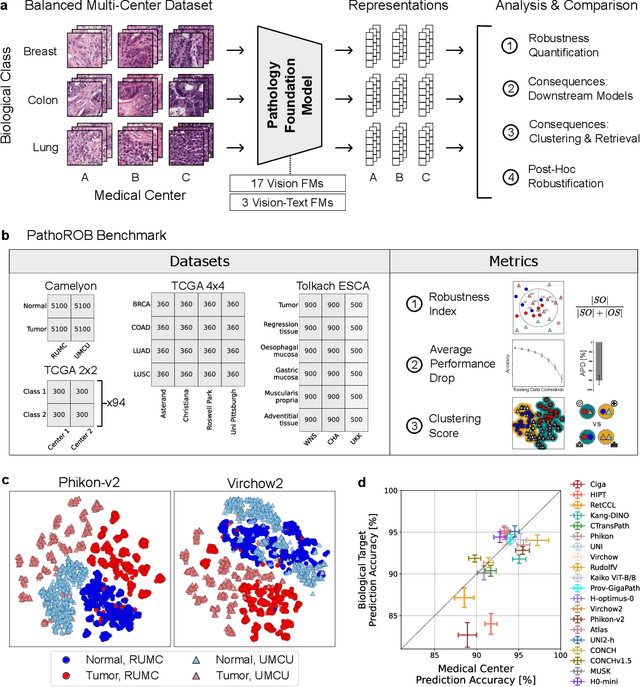

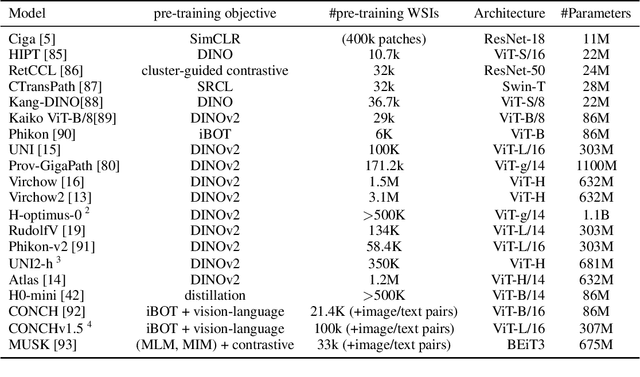

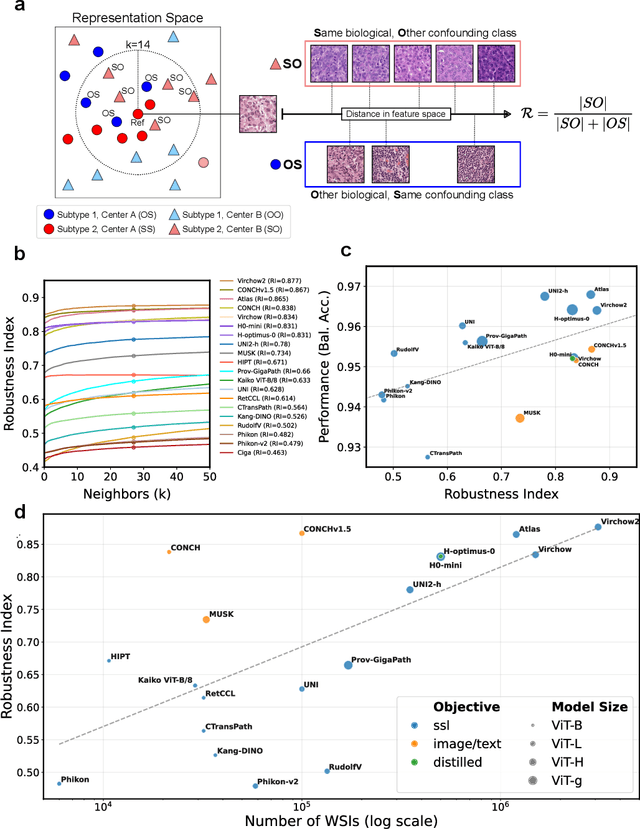

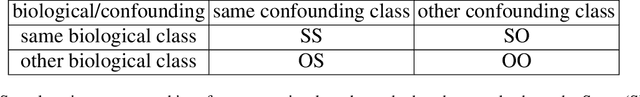

Abstract: Biomedical Foundation Models (FMs) are rapidly transforming AI-enabled healthcare research and entering clinical validation. However, their susceptibility to learning non-biological technical features -- including variations in surgical/endoscopic techniques, laboratory procedures, and scanner hardware -- poses risks for clinical deployment. We present the first systematic investigation of pathology FM robustness to non-biological features. Our work (i) introduces measures to quantify FM robustness, (ii) demonstrates the consequences of limited robustness, and (iii) proposes a framework for FM robustification to mitigate these issues. Specifically, we developed PathoROB, a robustness benchmark with three novel metrics, including the robustness index, and four datasets covering 28 biological classes from 34 medical centers. Our experiments reveal robustness deficits across all 20 evaluated FMs, and substantial robustness differences between them. We found that non-robust FM representations can cause major diagnostic downstream errors and clinical blunders that prevent safe clinical adoption. Using more robust FMs and post-hoc robustification considerably reduced (but did not yet eliminate) the risk of such errors. This work establishes that robustness evaluation is essential for validating pathology FMs before clinical adoption and demonstrates that future FM development must integrate robustness as a core design principle. PathoROB provides a blueprint for assessing robustness across biomedical domains, guiding FM improvement efforts towards more robust, representative, and clinically deployable AI systems that prioritize biological information over technical artifacts.

Foundation Models in Medical Imaging -- A Review and Outlook

Jun 10, 2025

Abstract: Foundation models (FMs) are changing the way medical images are analyzed by learning from large collections of unlabeled data. Instead of relying on manually annotated examples, FMs are pre-trained to learn general-purpose visual features that can later be adapted to specific clinical tasks with little additional supervision. In this review, we examine how FMs are being developed and applied in pathology, radiology, and ophthalmology, drawing on evidence from over 150 studies. We explain the core components of FM pipelines, including model architectures, self-supervised learning methods, and strategies for downstream adaptation. We also review how FMs are being used in each imaging domain and compare design choices across applications. Finally, we discuss key challenges and open questions to guide future research.

Current Pathology Foundation Models are unrobust to Medical Center Differences

Jan 29, 2025

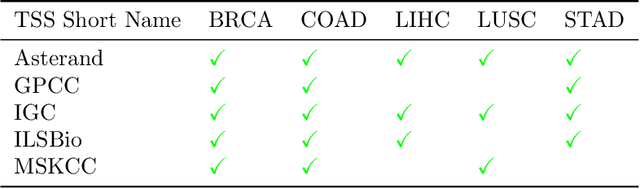

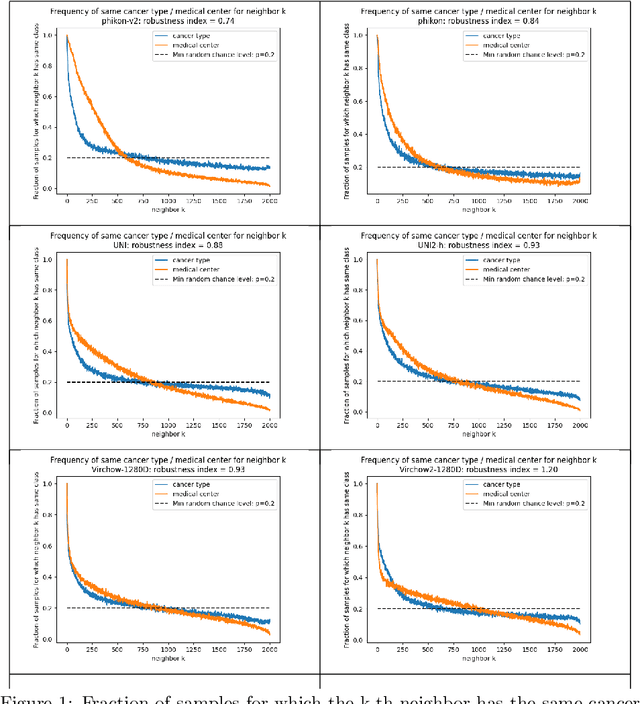

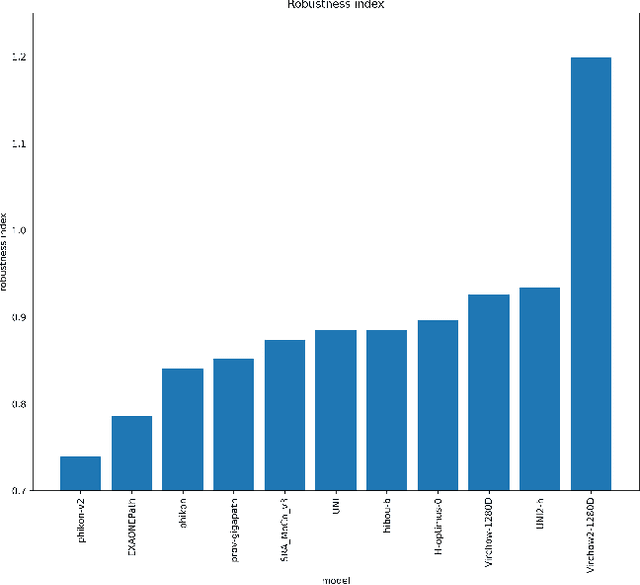

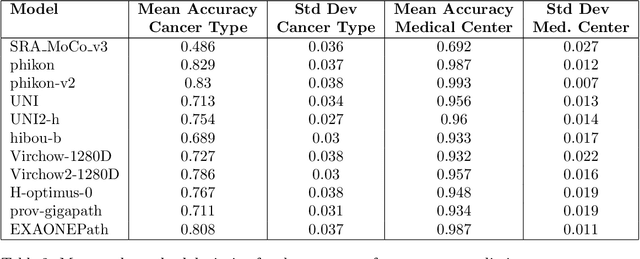

Abstract: Pathology Foundation Models (FMs) hold great promise for healthcare. Before they can be used in clinical practice, it is essential to ensure they are robust to variations between medical centers. We measure whether pathology FMs focus on biological features like tissue and cancer type, or on the well-known confounding medical center signatures introduced by staining procedure and other differences. We introduce the Robustness Index. This novel robustness metric reflects to what degree biological features dominate confounding features. Ten current publicly available pathology FMs are evaluated. We find that all current pathology foundation models evaluated represent the medical center to a strong degree. Significant differences in the robustness index are observed. Only one model so far has a robustness index greater than one, meaning biological features dominate confounding features, but only slightly. A quantitative approach to measure the influence of medical center differences on FM-based prediction performance is described. We analyze the impact of unrobustness on classification performance of downstream models, and find that cancer-type classification errors are not random, but specifically attributable to same-center confounders: images of other classes from the same medical center. We visualize FM embedding spaces, and find these are more strongly organized by medical centers than by biological factors. As a consequence, the medical center of origin is predicted more accurately than the tissue source and cancer type. The robustness index introduced here is provided with the aim of advancing progress towards clinical adoption of robust and reliable pathology FMs.
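The abstract above does not spell out how the Robustness Index is computed, so the following is only an illustrative sketch of a ratio-style score with the stated property that values above one mean biological features dominate. It assumes a nearest-neighbor formulation: for each embedding, count neighbors that share the biological class but come from another center, and divide by the count of neighbors that share the center but differ in biological class. The function name `robustness_ratio`, the Euclidean distance, and the toy data are assumptions made for this example, not the paper's definition.

```python
import math

def robustness_ratio(embeddings, bio_labels, center_labels, k):
    """Illustrative ratio (assumed formulation, not the paper's definition).

    Numerator: neighbors with the same biological class but another center.
    Denominator: neighbors with the same center but another biological class.
    """
    same_bio_other_center = 0
    other_bio_same_center = 0
    for i, query in enumerate(embeddings):
        # Rank all other samples by Euclidean distance to the query.
        others = [j for j in range(len(embeddings)) if j != i]
        others.sort(key=lambda j: math.dist(query, embeddings[j]))
        for j in others[:k]:
            same_bio = bio_labels[j] == bio_labels[i]
            same_center = center_labels[j] == center_labels[i]
            if same_bio and not same_center:
                same_bio_other_center += 1
            elif same_center and not same_bio:
                other_bio_same_center += 1
    if other_bio_same_center == 0:
        return math.inf  # no confounded neighbors were found at all
    return same_bio_other_center / other_bio_same_center

# Toy embedding spaces (hypothetical): two tissue types, two centers.
bio = ["A", "A", "B", "B"]
center = ["X", "Y", "X", "Y"]
organized_by_biology = [(0, 0), (0, 1), (10, 0), (10, 1)]
organized_by_center = [(0, 0), (0, 10), (1, 0), (1, 10)]

ratio_bio = robustness_ratio(organized_by_biology, bio, center, k=1)
ratio_center = robustness_ratio(organized_by_center, bio, center, k=1)
```

In the first toy space each sample's nearest neighbor shares its tissue type, so the ratio is unbounded; in the second, the nearest neighbor always comes from the same center with a different tissue type, so the ratio drops to zero.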

Ordinal Learning: Longitudinal Attention Alignment Model for Predicting Time to Future Breast Cancer Events from Mammograms

Sep 10, 2024

Abstract: Precision breast cancer (BC) risk assessment is crucial for developing individualized screening and prevention. Despite the promising potential of recent mammogram (MG) based deep learning models in predicting BC risk, they mostly overlook the 'time-to-future-event' ordering among patients and exhibit limited explorations into how they track history changes in breast tissue, thereby limiting their clinical application. In this work, we propose a novel method, named OA-BreaCR, to precisely model the ordinal relationship of the time to and between BC events while incorporating longitudinal breast tissue changes in a more explainable manner. We validate our method on public EMBED and in-house datasets, comparing with existing BC risk prediction and time prediction methods. Our ordinal learning method OA-BreaCR outperforms existing methods in both BC risk and time-to-future-event prediction tasks. Additionally, ordinal heatmap visualizations show the model's attention over time. Our findings underscore the importance of interpretable and precise risk assessment for enhancing BC screening and prevention efforts. The code will be accessible to the public.

Problems in AI, their roots in philosophy, and implications for science and society

Jul 22, 2024

Abstract: Artificial Intelligence (AI) is one of today's most relevant emergent technologies. In view thereof, this paper proposes that more attention should be paid to the philosophical aspects of AI technology and its use. It is argued that this deficit is generally combined with philosophical misconceptions about the growth of knowledge. To identify these misconceptions, reference is made to the ideas of the philosopher of science Karl Popper and the physicist David Deutsch. The works of both thinkers aim against mistaken theories of knowledge, such as inductivism, empiricism, and instrumentalism. This paper shows that these theories bear similarities to how current AI technology operates. It also shows that these theories are very much alive in the (public) discourse on AI, often called Bayesianism. In line with Popper and Deutsch, it is proposed that all these theories are based on mistaken philosophies of knowledge. This includes an analysis of the implications of these mistaken philosophies for the use of AI in science and society, including some of the likely problem situations that will arise. This paper finally provides a realistic outlook on Artificial General Intelligence (AGI) and three propositions on A(G)I and philosophy (i.e., epistemology).

Nodule detection and generation on chest X-rays: NODE21 Challenge

Jan 04, 2024

Abstract: Pulmonary nodules may be an early manifestation of lung cancer, the leading cause of cancer-related deaths among both men and women. Numerous studies have established that deep learning methods can yield high-performance levels in the detection of lung nodules in chest X-rays. However, the lack of gold-standard public datasets slows down the progression of the research and prevents benchmarking of methods for this task. To address this, we organized a public research challenge, NODE21, aimed at the detection and generation of lung nodules in chest X-rays. While the detection track assesses state-of-the-art nodule detection systems, the generation track determines the utility of nodule generation algorithms to augment training data and hence improve the performance of the detection systems. This paper summarizes the results of the NODE21 challenge and performs extensive additional experiments to examine the impact of the synthetically generated nodule training images on the detection algorithm performance.

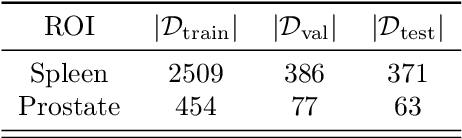

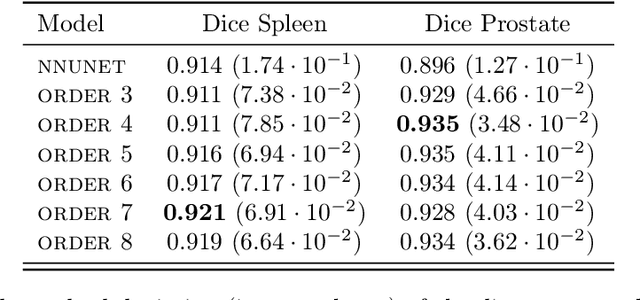

Kandinsky Conformal Prediction: Efficient Calibration of Image Segmentation Algorithms

Nov 20, 2023

Abstract: Image segmentation algorithms can be understood as a collection of pixel classifiers, for which the outcomes of nearby pixels are correlated. Classifier models can be calibrated using Inductive Conformal Prediction, but this requires holding back a sufficiently large calibration dataset for computing the distribution of non-conformity scores of the model's predictions. If one requires only marginal calibration on the image level, this calibration set consists of all individual pixels in the images available for calibration. However, if the goal is to attain proper calibration for each individual pixel classifier, the calibration set consists of individual images. In a scenario where data are scarce (such as the medical domain), it may not always be possible to set aside sufficiently many images for this pixel-level calibration. The method we propose, dubbed "Kandinsky calibration", makes use of the spatial structure present in the distribution of natural images to simultaneously calibrate the classifiers of "similar" pixels. This can be seen as an intermediate approach between marginal (imagewise) and conditional (pixelwise) calibration, where non-conformity scores are aggregated over similar image regions, thereby making more efficient use of the images available for calibration. We run experiments on segmentation algorithms trained and calibrated on subsets of the public MS-COCO and Medical Decathlon datasets, demonstrating that the Kandinsky calibration method can significantly improve the coverage. When compared to both pixelwise and imagewise calibration on little data, the Kandinsky method achieves much lower coverage errors, indicating the data efficiency of the Kandinsky calibration.
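The trade-off described in this abstract can be made concrete with a minimal sketch of split (inductive) conformal calibration. It assumes each calibration image is given as a flat list of per-pixel non-conformity scores, and it uses the standard finite-sample quantile rank ceil((n + 1)(1 - alpha)). The function names and the `groups` mapping from pixel index to region are hypothetical; how similar pixels are actually grouped is the paper's contribution and is not reproduced here.

```python
import math

def conformal_threshold(scores, alpha):
    """Split-conformal threshold: the ceil((n + 1)(1 - alpha))-th smallest score."""
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        return math.inf  # too few calibration scores for this alpha
    return sorted(scores)[rank - 1]

def imagewise_threshold(cal_scores, alpha):
    """Marginal calibration: pool the scores of every pixel of every image."""
    pooled = [score for image in cal_scores for score in image]
    return conformal_threshold(pooled, alpha)

def pixelwise_thresholds(cal_scores, alpha):
    """Per-pixel calibration: each pixel only sees one score per image."""
    n_pixels = len(cal_scores[0])
    return [
        conformal_threshold([image[p] for image in cal_scores], alpha)
        for p in range(n_pixels)
    ]

def grouped_thresholds(cal_scores, groups, alpha):
    """Intermediate option: pool scores over pixels assigned to the same group."""
    by_group = {}
    for image in cal_scores:
        for pixel, score in enumerate(image):
            by_group.setdefault(groups[pixel], []).append(score)
    return {g: conformal_threshold(s, alpha) for g, s in by_group.items()}

# Toy calibration set: 5 images with 4 pixels each (made-up scores).
cal_scores = [[0.1 * i + 0.01 * p for p in range(4)] for i in range(5)]
pooled = imagewise_threshold(cal_scores, alpha=0.1)
per_pixel = pixelwise_thresholds(cal_scores, alpha=0.1)
per_group = grouped_thresholds(cal_scores, groups=[0, 0, 1, 1], alpha=0.1)
```

With only five calibration images, the per-pixel thresholds are all infinite at alpha = 0.1 because five scores cannot support a 90% quantile, while the pooled and grouped variants have enough scores to return finite thresholds. This is the data-efficiency argument the abstract makes for aggregating over similar regions.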

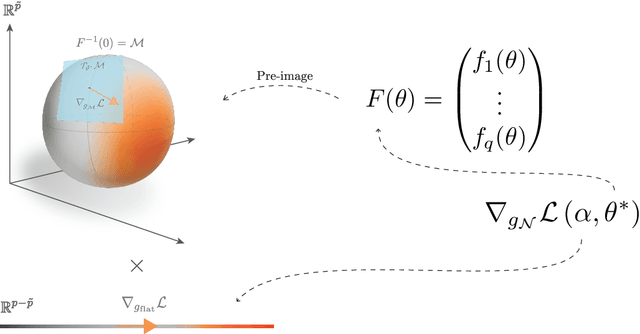

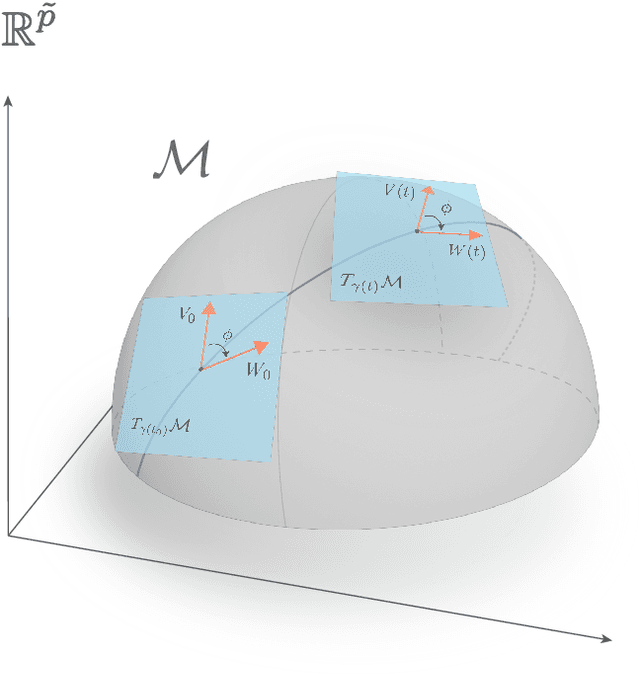

Constrained Empirical Risk Minimization: Theory and Practice

Feb 09, 2023

Abstract: Deep Neural Networks (DNNs) are widely used for their ability to effectively approximate large classes of functions. This flexibility, however, makes the strict enforcement of constraints on DNNs an open problem. Here we present a framework that, under mild assumptions, allows the exact enforcement of constraints on parameterized sets of functions such as DNNs. Instead of imposing "soft" constraints via additional terms in the loss, we restrict (a subset of) the DNN parameters to a submanifold on which the constraints are satisfied exactly throughout the entire training procedure. We focus on constraints that are outside the scope of equivariant networks used in Geometric Deep Learning. As a major example of the framework, we restrict filters of a Convolutional Neural Network (CNN) to be wavelets, and apply these wavelet networks to the task of contour prediction in the medical domain.
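To illustrate the difference between a soft penalty and a constraint that holds after every update, here is a minimal sketch using a plain projected gradient step. This is a simpler, swapped-in technique and not the framework from the paper, whose mechanism the abstract does not detail. The constraint set (zero-mean, unit-norm filter taps) is an assumption chosen for the example and is far weaker than the full wavelet conditions; the names `project` and `constrained_step` are hypothetical.

```python
import math

def project(filter_taps):
    """Map filter taps onto the set {sum = 0, Euclidean norm = 1}."""
    mean = sum(filter_taps) / len(filter_taps)
    centered = [t - mean for t in filter_taps]
    norm = math.sqrt(sum(c * c for c in centered))
    if norm == 0.0:
        raise ValueError("constant filter cannot be projected onto the constraint set")
    return [c / norm for c in centered]

def constrained_step(filter_taps, grad, lr):
    """One gradient step followed by projection, so the constraints hold after each update."""
    updated = [t - lr * g for t, g in zip(filter_taps, grad)]
    return project(updated)

# Start from a feasible filter and apply a few updates with made-up gradients.
taps = project([1.0, 2.0, 4.0, 3.0])
for grad in ([0.3, -0.1, 0.2, 0.5], [-0.4, 0.2, 0.1, 0.0]):
    taps = constrained_step(taps, grad, lr=0.1)
```

Unlike a penalty term, which only discourages violations, the projection keeps every iterate on the constraint set up to floating-point error.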
