Ecem Sogancioglu

Nodule detection and generation on chest X-rays: NODE21 Challenge

Jan 04, 2024

Abstract: Pulmonary nodules may be an early manifestation of lung cancer, the leading cause of cancer-related deaths among both men and women. Numerous studies have established that deep learning methods can achieve high performance in the detection of lung nodules in chest X-rays. However, the lack of gold-standard public datasets slows research progress and prevents benchmarking of methods for this task. To address this, we organized a public research challenge, NODE21, aimed at the detection and generation of lung nodules in chest X-rays. While the detection track assesses state-of-the-art nodule detection systems, the generation track determines the utility of nodule generation algorithms to augment training data and hence improve the performance of the detection systems. This paper summarizes the results of the NODE21 challenge and performs extensive additional experiments to examine the impact of the synthetically generated nodule training images on the detection algorithm performance.

Automated Estimation of Total Lung Volume using Chest Radiographs and Deep Learning

May 03, 2021

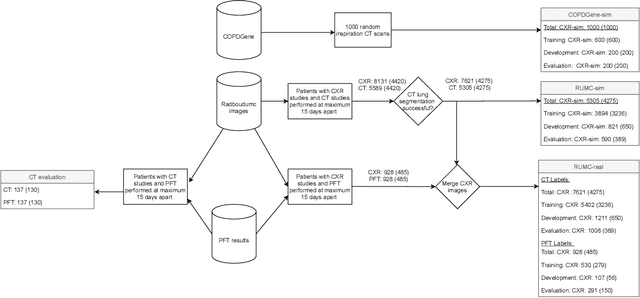

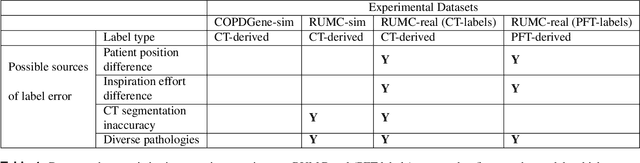

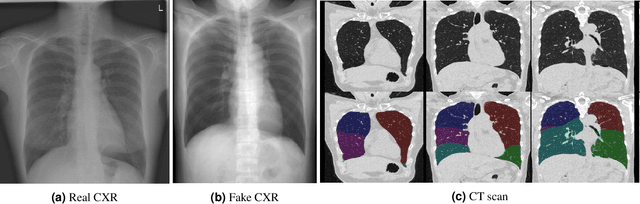

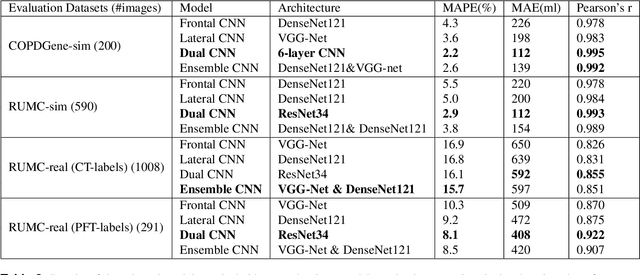

Abstract: Total lung volume is an important quantitative biomarker and is used for the assessment of restrictive lung diseases. In this study, we investigate the performance of several deep-learning approaches for automated measurement of total lung volume from chest radiographs. A total of 7621 posteroanterior and lateral view chest radiographs (CXR) were collected from patients with chest CT available. In addition, 928 CXR studies were chosen from patients with pulmonary function test (PFT) results. The reference total lung volume was calculated from lung segmentation on CT or from PFT data, respectively. This dataset was used to train deep-learning architectures to predict total lung volume from chest radiographs. The experiments were constructed in a step-wise fashion with increasing complexity to demonstrate the effect of training with CT-derived labels only and the sources of error. The optimal models were tested on 291 CXR studies with reference lung volume obtained from PFT. The optimal deep-learning regression model showed an MAE of 408 ml, a MAPE of 8.1%, and a Pearson's r of 0.92 using both frontal and lateral chest radiographs as input. CT-derived labels were useful for pre-training, but the optimal performance was obtained by fine-tuning the network with PFT-derived labels. We demonstrate, for the first time, that state-of-the-art deep learning solutions can accurately measure total lung volume from plain chest radiographs. The proposed model can be used to obtain total lung volume from routinely acquired chest radiographs at no additional cost and could be a useful tool to identify trends over time in patients referred regularly for chest x-rays.
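The three reported figures (MAE, MAPE, Pearson's r) are standard regression metrics. The sketch below shows one common way to compute them with NumPy; the function name `regression_metrics` and the sample volumes are hypothetical illustrations, not the authors' evaluation code or data.

```python
import numpy as np

def regression_metrics(reference_ml, predicted_ml):
    """Return (MAE in ml, MAPE in percent, Pearson's r) for paired volumes."""
    reference = np.asarray(reference_ml, dtype=float)
    predicted = np.asarray(predicted_ml, dtype=float)
    abs_err = np.abs(predicted - reference)
    mae = abs_err.mean()                          # mean absolute error
    mape = 100.0 * (abs_err / reference).mean()   # mean absolute percentage error
    r = np.corrcoef(reference, predicted)[0, 1]   # Pearson correlation coefficient
    return mae, mape, r

# Made-up lung volumes in ml, for illustration only.
reference = [4000.0, 5000.0, 6000.0]
predicted = [4200.0, 4900.0, 6300.0]
mae, mape, r = regression_metrics(reference, predicted)
print(f"MAE = {mae:.1f} ml, MAPE = {mape:.1f}%, r = {r:.3f}")
```

MAPE divides each error by the reference value, so it assumes all reference volumes are non-zero, which holds for lung volumes.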

Deep Learning for Chest X-ray Analysis: A Survey

Mar 15, 2021

Abstract: Recent advances in deep learning have led to promising performance in many medical image analysis tasks. As the most commonly performed radiological exam, chest radiographs are a particularly important modality for which a variety of applications have been researched. The release of multiple, large, publicly available chest X-ray datasets in recent years has encouraged research interest and boosted the number of publications. In this paper, we review all studies using deep learning on chest radiographs, categorizing works by task: image-level prediction (classification and regression), segmentation, localization, image generation and domain adaptation. Commercially available applications are detailed, and a comprehensive discussion of the current state of the art and potential future directions is provided.

FRODO: Free rejection of out-of-distribution samples: application to chest x-ray analysis

Jul 02, 2019

Abstract: In this work, we propose a method to reject out-of-distribution samples which can be adapted to any network architecture and requires no additional training data. Publicly available chest x-ray data (38,353 images) is used to train a standard ResNet-50 model to detect emphysema. Feature activations of intermediate layers are used as descriptors defining the training data distribution. A novel metric, FRODO, is measured by using the Mahalanobis distance of a new test sample to the training data distribution. The method is tested using a held-out test dataset of 21,176 chest x-rays (in-distribution) and a set of 14,821 out-of-distribution x-ray images of incorrect orientation or anatomy. In classifying test samples as in- or out-of-distribution, our method achieves an AUC score of 0.99.
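The core ingredient named in the abstract, the Mahalanobis distance of a test sample to the training feature distribution, can be sketched as follows. This is only an illustration under stated assumptions: random vectors stand in for intermediate-layer activations, the regularization constant is arbitrary, and the way FRODO selects and combines layers is not described here and is not reproduced. The helper names `fit_gaussian` and `mahalanobis` are hypothetical.

```python
import numpy as np

def fit_gaussian(train_features):
    """Estimate the mean and inverse covariance of training feature vectors."""
    mean = train_features.mean(axis=0)
    cov = np.cov(train_features, rowvar=False)
    # Small ridge term (an assumption) keeps the covariance invertible.
    cov += 1e-6 * np.eye(cov.shape[0])
    return mean, np.linalg.inv(cov)

def mahalanobis(x, mean, cov_inv):
    """Mahalanobis distance of one feature vector to the fitted Gaussian."""
    d = x - mean
    return float(np.sqrt(d @ cov_inv @ d))

# Synthetic stand-in for intermediate-layer activations (not real data).
rng = np.random.default_rng(0)
train = rng.normal(size=(500, 8))
mean, cov_inv = fit_gaussian(train)

near = mahalanobis(np.zeros(8), mean, cov_inv)      # close to the training mean
far = mahalanobis(np.full(8, 6.0), mean, cov_inv)   # far from the training data
print(near, far)
```

A sample whose distance exceeds a chosen threshold would be rejected as out-of-distribution; the threshold itself has to be set on validation data.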

Chest X-Rays Image Inpainting with Context Encoders

Dec 03, 2018

Abstract: Chest X-rays are one of the most commonly used technologies for medical diagnosis. Many deep learning models have been proposed to improve and automate the abnormality detection task on this type of data. In this paper, we propose a different approach based on image inpainting with adversarial training, first introduced by Goodfellow et al. We configure the context encoder model for this task and train it on 1.1M 128x128 images from healthy X-rays. The goal of our model is to reconstruct the missing central 64x64 patch. Once the model has learned how to inpaint healthy tissue, we test its performance on images with and without abnormalities. We discuss and motivate our results considering PSNR, MSE and SSIM scores as evaluation metrics. In addition, we conduct a 2AFC observer study showing that half of the time an expert is unable to distinguish real images from the ones reconstructed using our model. By computing and visualizing the pixel-wise difference between source and reconstructed images, we can highlight abnormalities to simplify further detection and classification tasks.
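Two of the evaluation metrics (MSE and PSNR) and the pixel-wise difference map can be sketched in a few lines of NumPy. This is a generic illustration assuming 8-bit images with a peak value of 255; the function names and the toy patch are hypothetical, and SSIM is omitted because it needs a windowed implementation.

```python
import numpy as np

def mse(source, reconstructed):
    """Mean squared error between two images of equal shape."""
    diff = source.astype(float) - reconstructed.astype(float)
    return float(np.mean(diff ** 2))

def psnr(source, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(source, reconstructed)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / err))

def difference_map(source, reconstructed):
    """Pixel-wise absolute difference; large values mark poorly inpainted pixels."""
    return np.abs(source.astype(float) - reconstructed.astype(float))

# Toy 64x64 patch: the "reconstruction" is off by 10 gray levels everywhere.
source = np.zeros((64, 64), dtype=np.uint8)
reconstructed = np.full((64, 64), 10, dtype=np.uint8)
patch_mse = mse(source, reconstructed)
patch_psnr = psnr(source, reconstructed)
diff = difference_map(source, reconstructed)
print(patch_mse, patch_psnr, diff.max())
```

Casting to float before subtracting avoids the wrap-around that unsigned 8-bit arithmetic would otherwise introduce.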

Chest X-ray Inpainting with Deep Generative Models

Aug 29, 2018

Abstract: Generative adversarial networks have been successfully applied to inpainting in natural images. However, the current state-of-the-art models have not yet been widely adopted in the medical imaging domain. In this paper, we investigate the performance of three recently published deep-learning-based inpainting models: context encoders, semantic image inpainting, and the contextual attention model, applied to chest x-rays, as the chest exam is the most commonly performed radiological procedure. We train these generative models on 1.2M 128 × 128 patches from 60K healthy x-rays, and learn to predict the center 64 × 64 region in each patch. We test the models on both healthy and abnormal radiographs. We evaluate the results by visual inspection and by comparing the PSNR scores. The outputs of the models are in most cases highly realistic. We show that the methods have potential to enhance and detect abnormalities. In addition, we perform a 2AFC observer study and show that an experienced human observer performs poorly in detecting inpainted regions, particularly those generated by the contextual attention model.
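The training setup, predicting the center 64 × 64 region of a 128 × 128 patch, implies a simple masking step when preparing inputs. A minimal sketch, assuming single-channel patches and a zero fill value (the fill value and the name `mask_center` are assumptions, not taken from the paper):

```python
import numpy as np

def mask_center(patch, hole=64):
    """Split a square patch into (masked input, center target).

    The center hole x hole region is copied out as the prediction target
    and zeroed in the returned input.
    """
    height, width = patch.shape
    top, left = (height - hole) // 2, (width - hole) // 2
    target = patch[top:top + hole, left:left + hole].copy()
    masked = patch.copy()
    masked[top:top + hole, left:left + hole] = 0
    return masked, target

# Random stand-in for a 128x128 x-ray patch (not real data).
rng = np.random.default_rng(0)
patch = rng.integers(1, 256, size=(128, 128)).astype(np.uint8)
masked, target = mask_center(patch)
print(masked.shape, target.shape)
```

The inpainting model would receive `masked` as input and be trained to reproduce `target`.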
