David J. Yoon

EgoDex: Learning Dexterous Manipulation from Large-Scale Egocentric Video

May 16, 2025

Abstract:Imitation learning for manipulation has a well-known data scarcity problem. Unlike natural language and 2D computer vision, there is no Internet-scale corpus of data for dexterous manipulation. One appealing option is egocentric human video, a passively scalable data source. However, existing large-scale datasets such as Ego4D do not have native hand pose annotations and do not focus on object manipulation. To this end, we use Apple Vision Pro to collect EgoDex: the largest and most diverse dataset of dexterous human manipulation to date. EgoDex has 829 hours of egocentric video with paired 3D hand and finger tracking data collected at the time of recording, where multiple calibrated cameras and on-device SLAM can be used to precisely track the pose of every joint of each hand. The dataset covers a wide range of diverse manipulation behaviors with everyday household objects in 194 different tabletop tasks ranging from tying shoelaces to folding laundry. Furthermore, we train and systematically evaluate imitation learning policies for hand trajectory prediction on the dataset, introducing metrics and benchmarks for measuring progress in this increasingly important area. By releasing this large-scale dataset, we hope to push the frontier of robotics, computer vision, and foundation models.

Balancing Act: Trading Off Doppler Odometry and Map Registration for Efficient Lidar Localization

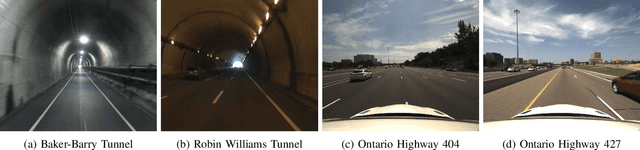

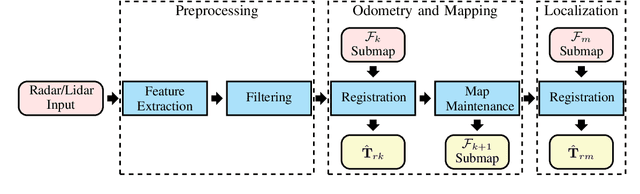

Mar 03, 2025

Abstract:Most autonomous vehicles rely on accurate and efficient localization, which is achieved by comparing live sensor data to a preexisting map, to navigate their environment. Balancing the accuracy of localization with computational efficiency remains a significant challenge, as high-accuracy methods often come with higher computational costs. In this paper, we present two ways of improving lidar localization efficiency and study their impact on performance. First, we integrate a lightweight Doppler-based odometry method into a topometric localization pipeline and compare its performance against an iterative closest point (ICP)-based method. We highlight the trade-offs between these approaches: the Doppler estimator offers faster, lightweight updates, while ICP provides higher accuracy at the cost of increased computational load. Second, by controlling the frequency of localization updates and leveraging odometry estimates between them, we demonstrate that accurate localization can be maintained while optimizing for computational efficiency using either odometry method. Our experimental results show that localizing every 10 lidar frames strikes a favourable balance, achieving a localization accuracy below 0.05 meters in translation and below 0.1 degrees in orientation while reducing computational effort by over 30% in an ICP-based pipeline. We quantify the trade-off of accuracy to computational effort using over 100 kilometers of real-world driving data in different on-road environments.
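The update-scheduling idea in this abstract (localize against the map only every few frames and rely on odometry in between) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the planar SE(2) pose representation, the function names `compose` and `run_pipeline`, and the `localize` callback standing in for map registration. It is not the authors' pipeline.

```python
import math


def compose(pose, delta):
    """Compose a planar pose (x, y, yaw) with a body-frame increment (dx, dy, dyaw)."""
    x, y, yaw = pose
    dx, dy, dyaw = delta
    c, s = math.cos(yaw), math.sin(yaw)
    return (x + c * dx - s * dy, y + s * dx + c * dy, yaw + dyaw)


def run_pipeline(odometry_increments, localize, initial_pose, every_n=10):
    """Dead-reckon with odometry and call the map localizer every `every_n` frames.

    `localize(frame_index, predicted_pose)` is a hypothetical callback that
    returns a corrected pose; it stands in for the expensive registration step.
    """
    pose = initial_pose
    poses = []
    map_calls = 0
    for k, delta in enumerate(odometry_increments):
        # Cheap step: propagate the pose with the odometry increment.
        pose = compose(pose, delta)
        # Expensive step: correct against the map only on every N-th frame.
        if (k + 1) % every_n == 0:
            pose = localize(k, pose)
            map_calls += 1
        poses.append(pose)
    return poses, map_calls


if __name__ == "__main__":
    increments = [(1.0, 0.0, 0.0)] * 20
    # A placeholder localizer that accepts the odometry prediction unchanged.
    poses, calls = run_pipeline(increments, lambda k, p: p, (0.0, 0.0, 0.0))
    print(poses[-1], calls)
```

In this sketch the cost saving comes purely from calling `localize` less often; how much accuracy is lost depends on the drift of the odometry between corrections, which is the trade-off the abstract quantifies.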

Are Doppler Velocity Measurements Useful for Spinning Radar Odometry?

Apr 02, 2024

Abstract:Spinning, frequency-modulated continuous-wave (FMCW) radar has been gaining popularity for autonomous vehicle navigation. The spinning radar is chosen over the more classic automotive 'fixed' radar as it is able to capture the full 360-degree field of view without requiring multiple sensors and extensive calibration. However, commercially available spinning radar systems have not previously had the ability to extract radial velocities due to the lack of repeated measurements in the same direction and fundamental hardware setup. A new firmware upgrade now makes it possible to alternate the modulation of the radar signal between azimuths. In this paper, we first present a way to use this alternating modulation to extract radial Doppler velocity measurements from single raw radar intensity scans. We then incorporate these measurements in two different modern odometry pipelines and evaluate them in progressively challenging autonomous driving environments. We show that using Doppler velocity measurements enables our odometry to continue functioning at a state-of-the-art level even in severely geometrically degenerate environments.

Need for Speed: Fast Correspondence-Free Lidar Odometry Using Doppler Velocity

Mar 11, 2023

Abstract:In this paper, we present a fast, lightweight odometry method that uses the Doppler velocity measurements from a Frequency-Modulated Continuous-Wave (FMCW) lidar without data association. FMCW lidar is a recently emerging technology that enables per-return relative radial velocity measurements via the Doppler effect. Since the Doppler measurement model is linear with respect to the 6-degrees-of-freedom (DOF) vehicle velocity, we can formulate a linear continuous-time estimation problem for the velocity and numerically integrate for the 6-DOF pose estimate afterward. The caveat is that angular velocity is not observable with a single FMCW lidar. We address this limitation by also incorporating the angular velocity measurements from a gyroscope. This results in an extremely efficient odometry method that processes lidar frames at an average wall-clock time of 5.8 ms on a single thread, well within the 100 ms per-frame budget of the 10 Hz lidar we tested. We show experimental results on real-world driving sequences and compare against state-of-the-art Iterative Closest Point (ICP)-based odometry methods, presenting a compelling trade-off between accuracy and computation. We also present an algebraic observability study, where we demonstrate in theory that the Doppler measurements from multiple FMCW lidars are capable of observing all 6 degrees of freedom (translational and angular velocity).
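To make the linearity claim concrete, here is a minimal single-frame sketch rather than the continuous-time estimator the abstract describes. It assumes a static scene, a sensor located at the vehicle origin, and the sign convention r_i = -d_i · v, where d_i is the unit ray direction of return i and v is the translational velocity. The function names `solve3` and `estimate_velocity` are hypothetical. Angular velocity is left out, in line with the abstract's remark that a gyroscope supplies it.

```python
def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, 3))
        x[i] = (M[i][3] - tail) / M[i][i]
    return x


def estimate_velocity(directions, radial_velocities):
    """Least-squares translational velocity from per-return Doppler measurements.

    Assumed model: r_i = -d_i . v, so the normal equations are
    (sum_i d_i d_i^T) v = -sum_i d_i r_i. No data association is needed
    because each return contributes one independent linear constraint.
    """
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for d, r in zip(directions, radial_velocities):
        for i in range(3):
            b[i] -= d[i] * r
            for j in range(3):
                A[i][j] += d[i] * d[j]
    return solve3(A, b)


if __name__ == "__main__":
    v_true = (10.0, 0.5, -0.2)
    dirs = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0),
            (0.6, 0.8, 0.0), (0.0, 0.6, 0.8)]
    rs = [-sum(di * vi for di, vi in zip(d, v_true)) for d in dirs]
    print(estimate_velocity(dirs, rs))
```

Because the problem reduces to accumulating a 3x3 system over the returns and solving it once, the per-frame cost grows only linearly with the number of returns, which is consistent with the low per-frame runtime reported in the abstract.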

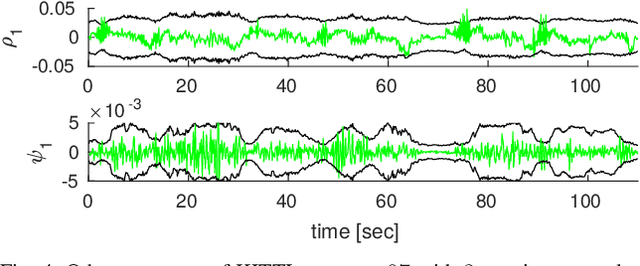

Towards Consistent Batch State Estimation Using a Time-Correlated Measurement Noise Model

Mar 11, 2023

Abstract:In this paper, we present an algorithm for learning time-correlated measurement covariances for application in batch state estimation. We parameterize the inverse measurement covariance matrix to be block-banded, which conveniently factorizes and results in a computationally efficient approach for correlating measurements across the entire trajectory. We train our covariance model through supervised learning using the groundtruth trajectory. In applications where the measurements are time-correlated, we demonstrate improved performance in both the mean posterior estimate and the covariance (i.e., improved estimator consistency). We use an experimental dataset collected using a mobile robot equipped with a laser rangefinder to demonstrate the improvement in performance. We also verify estimator consistency in a controlled simulation using a statistical test over several trials.
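The computational benefit of a banded, factorized inverse covariance can be sketched in the simplest possible setting. This sketch assumes scalar measurements and an inverse covariance W = L Lᵀ with L lower-bidiagonal, so that W is tridiagonal. The parameter names `diag` and `sub` and both function names are hypothetical, and the paper's actual block parameterization may differ.

```python
def whitened_cost(errors, diag, sub):
    """Evaluate e^T W e in O(N) using W = L L^T with L lower-bidiagonal.

    diag[k] is L[k][k] and sub[k] is L[k+1][k]. Row k of L^T e is
    diag[k] * e[k] + sub[k] * e[k+1], so each whitened residual couples
    a measurement error only with its successor in time.
    """
    n = len(errors)
    cost = 0.0
    for k in range(n):
        w = diag[k] * errors[k]
        if k + 1 < n:
            w += sub[k] * errors[k + 1]
        cost += w * w
    return cost


def dense_information(diag, sub):
    """Build the dense W = L L^T, used here only to check the fast evaluation."""
    n = len(diag)
    L = [[0.0] * n for _ in range(n)]
    for k in range(n):
        L[k][k] = diag[k]
        if k + 1 < n:
            L[k + 1][k] = sub[k]
    return [[sum(L[i][m] * L[j][m] for m in range(n)) for j in range(n)]
            for i in range(n)]


if __name__ == "__main__":
    errors = [1.0, -2.0, 0.5, 3.0]
    diag = [1.0, 2.0, 1.5, 0.5]
    sub = [0.3, -0.4, 0.2]
    W = dense_information(diag, sub)
    dense = sum(errors[i] * W[i][j] * errors[j]
                for i in range(4) for j in range(4))
    print(whitened_cost(errors, diag, sub), dense)
```

Parameterizing the factor L rather than W itself keeps W symmetric positive semidefinite by construction, and the band structure means the correlated cost can be evaluated without ever forming the dense matrix.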

Picking Up Speed: Continuous-Time Lidar-Only Odometry using Doppler Velocity Measurements

Sep 07, 2022

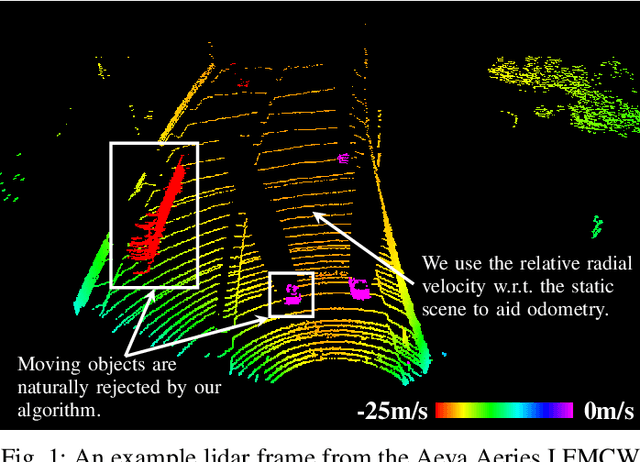

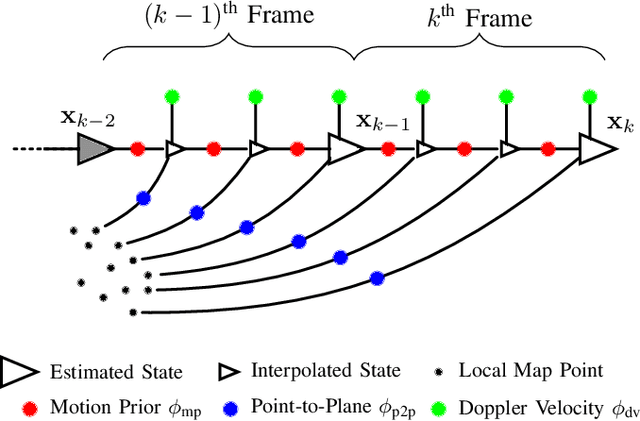

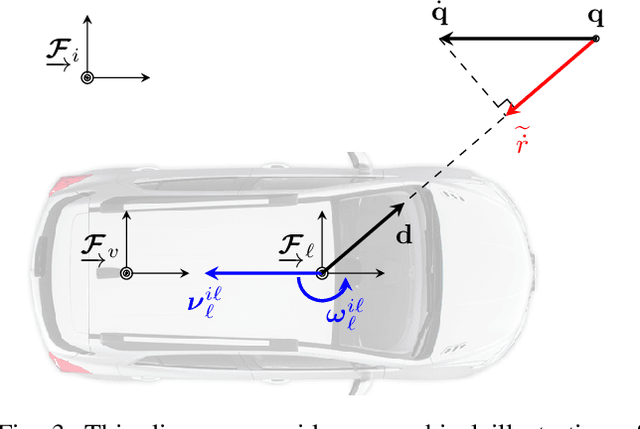

Abstract:Frequency-Modulated Continuous-Wave (FMCW) lidar is a recently emerging technology that additionally enables per-return instantaneous relative radial velocity measurements via the Doppler effect. In this letter, we present the first continuous-time lidar-only odometry algorithm using these Doppler velocity measurements from an FMCW lidar to aid odometry in geometrically degenerate environments. We apply an existing continuous-time framework that efficiently estimates the vehicle trajectory using Gaussian process regression to compensate for motion distortion due to the scanning-while-moving nature of any mechanically actuated lidar (FMCW and non-FMCW). We evaluate our proposed algorithm on several real-world datasets, including publicly available ones and datasets we collected. Our algorithm outperforms the only existing method that also uses Doppler velocity measurements, and we study difficult conditions where including this extra information greatly improves performance. We additionally demonstrate state-of-the-art performance of lidar-only odometry with and without using Doppler velocity measurements in nominal conditions. Code for this project can be found at: https://github.com/utiasASRL/steam_icp.

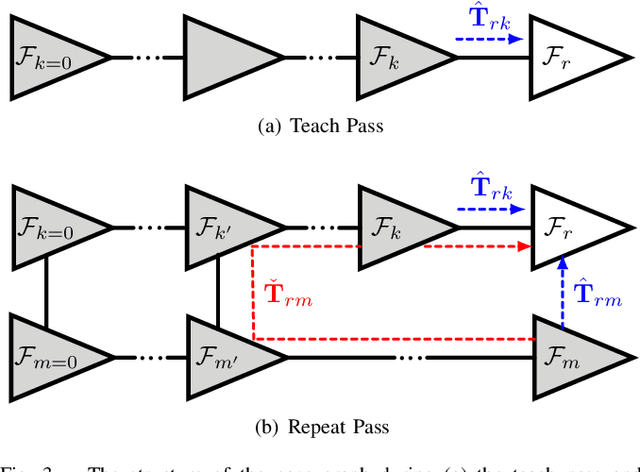

Should Radar Replace Lidar in All-Weather Mapping and Localization?

Mar 18, 2022

Abstract:Radar is a rich sensing modality that is a compelling alternative to lidar for its inherent robustness to precipitation, dust, and fog. In this paper, we investigate radar-based mapping and localization as an alternative to lidar by demonstrating the performance of our own radar-based pipeline. We present extensive comparisons against a lidar-based pipeline across varying seasonal and weather conditions using our own publicly available dataset collected on a repeated route over the course of one year. Our experiments show that while lidar achieves better overall localization accuracy, our radar-based pipeline achieves comparable SE(2) results using a fraction of the computational and memory resources required by the lidar-based pipeline. We additionally demonstrate our radar-based pipeline localizing against lidar maps with better accuracy than the current state of the art for radar-to-lidar localization. Code for this project can be found at: https://github.com/utiasASRL/vtr3

Boreas: A Multi-Season Autonomous Driving Dataset

Mar 18, 2022

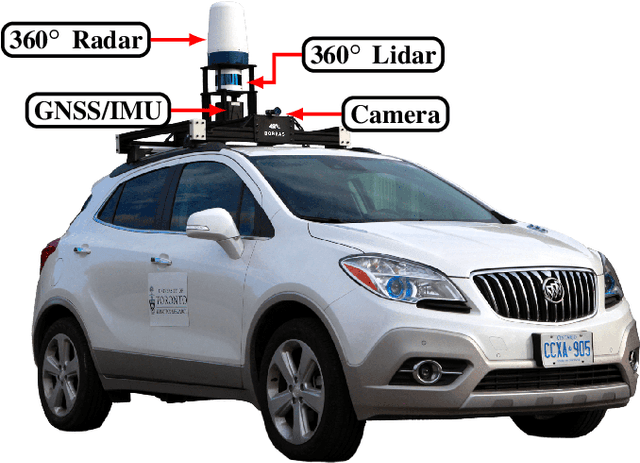

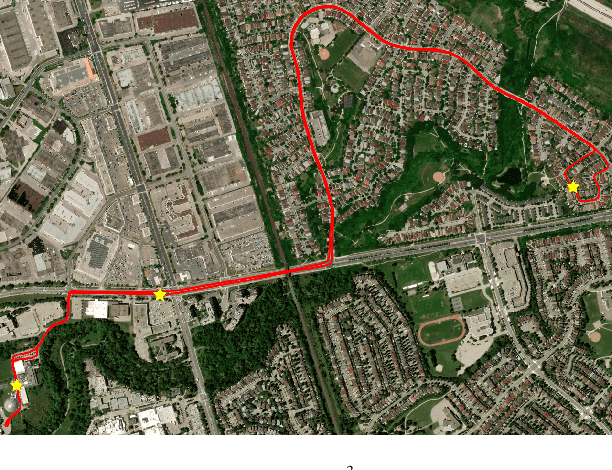

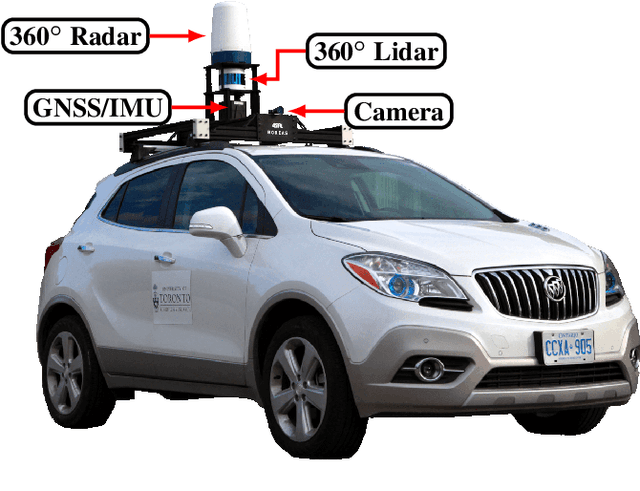

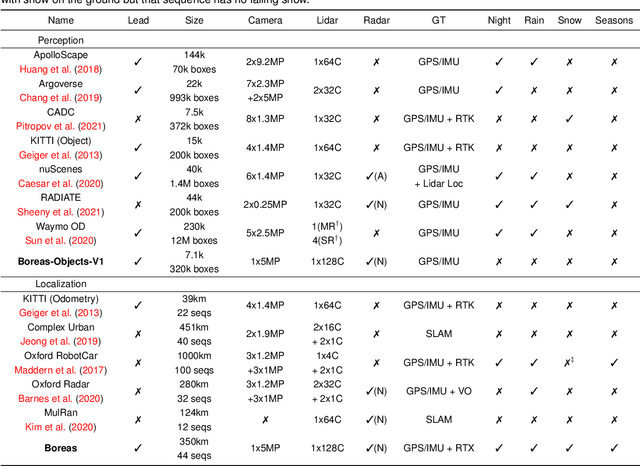

Abstract:The Boreas dataset was collected by driving a repeated route over the course of one year, resulting in stark seasonal variations and adverse weather conditions such as rain and falling snow. In total, the Boreas dataset contains over 350 km of driving data featuring a 128-channel Velodyne Alpha-Prime lidar, a 360-degree Navtech CIR304-H scanning radar, a 5 MP FLIR Blackfly S camera, and centimetre-accurate post-processed ground truth poses. At launch, our dataset will support live leaderboards for odometry, metric localization, and 3D object detection. The dataset and development kit are available at: https://www.boreas.utias.utoronto.ca

Radar Odometry Combining Probabilistic Estimation and Unsupervised Feature Learning

Jun 12, 2021

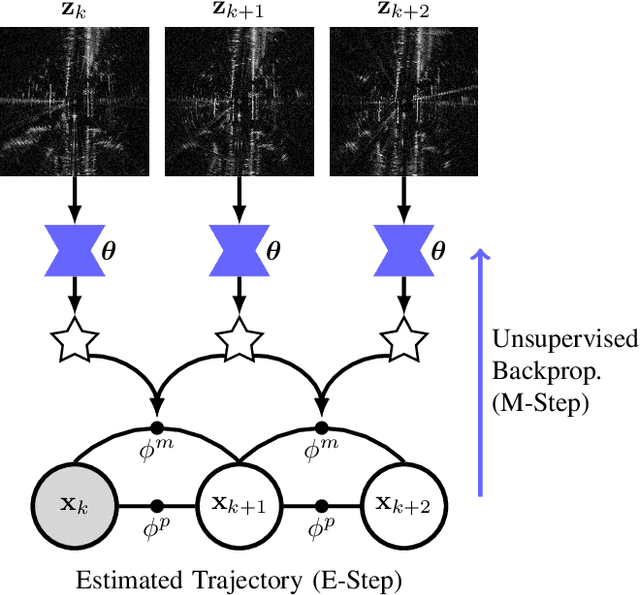

Abstract:This paper presents a radar odometry method that combines probabilistic trajectory estimation and deep learned features without needing groundtruth pose information. The feature network is trained unsupervised, using only the on-board radar data. With its theoretical foundation based on a data likelihood objective, our method leverages a deep network for processing rich radar data, and a non-differentiable classic estimator for probabilistic inference. We provide extensive experimental results on both the publicly available Oxford Radar RobotCar Dataset and an additional 100 km of driving collected in an urban setting. Our sliding-window implementation of radar odometry outperforms most hand-crafted methods and approaches the current state of the art without requiring a groundtruth trajectory for training. We also demonstrate the effectiveness of radar odometry under adverse weather conditions. Code for this project can be found at: https://github.com/utiasASRL/hero_radar_odometry

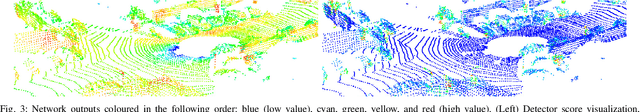

Unsupervised Learning of Lidar Features for Use in a Probabilistic Trajectory Estimator

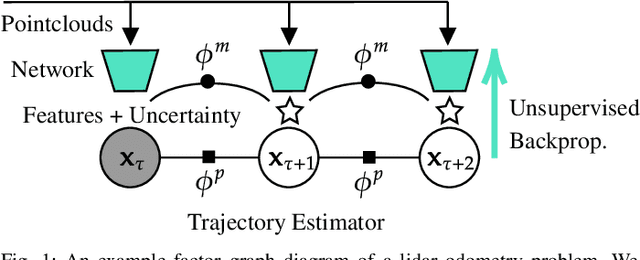

Feb 22, 2021

Abstract:We present unsupervised parameter learning in a Gaussian variational inference setting that combines classic trajectory estimation for mobile robots with deep learning for rich sensor data, all under a single learning objective. The framework is an extension of an existing system identification method that optimizes for the observed data likelihood, which we improve with modern advances in batch trajectory estimation and deep learning. Though the framework is general to any form of parameter learning and sensor modality, we demonstrate application to feature and uncertainty learning with a deep network for 3D lidar odometry. Our framework learns from only the on-board lidar data, and does not require any form of groundtruth supervision. We demonstrate that our lidar odometry performs better than existing methods that learn the full estimator with a deep network, and comparable to state-of-the-art ICP-based methods on the KITTI odometry dataset. We additionally show results on lidar data from the Oxford RobotCar dataset.
