Wei-Kang Tseng

Boreas: A Multi-Season Autonomous Driving Dataset

Mar 18, 2022

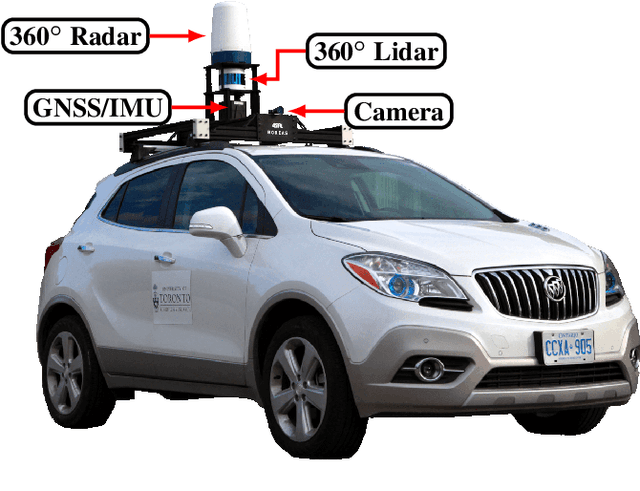

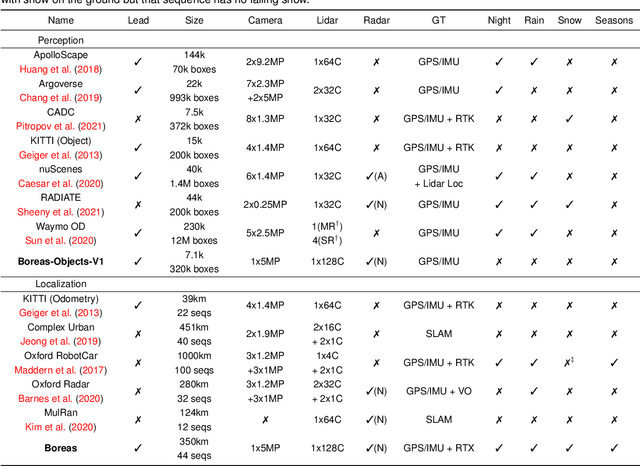

Abstract: The Boreas dataset was collected by driving a repeated route over the course of one year, resulting in stark seasonal variations and adverse weather conditions such as rain and falling snow. In total, the Boreas dataset contains over 350 km of driving data featuring a 128-channel Velodyne Alpha-Prime lidar, a 360-degree Navtech CIR304-H scanning radar, a 5 MP FLIR Blackfly S camera, and centimetre-accurate post-processed ground truth poses. At launch, our dataset will support live leaderboards for odometry, metric localization, and 3D object detection. The dataset and development kit are available at: https://www.boreas.utias.utoronto.ca

Self-Calibration of the Offset Between GPS and Semantic Map Frames for Robust Localization

May 25, 2021

Abstract: In self-driving, standalone GPS is generally considered to have insufficient positioning accuracy to stay in lane. Instead, many turn to LIDAR localization, but this comes at the expense of building LIDAR maps that can be costly to maintain. Another possibility is to use semantic cues such as lane lines and traffic lights to achieve localization, but these are usually not continuously visible. This issue can be remedied by combining semantic cues with GPS to fill in the gaps. However, due to elapsed time between mapping and localization, the live GPS frame can be offset from the semantic map frame, requiring calibration. In this paper, we propose a robust semantic localization algorithm that self-calibrates for the offset between the live GPS and semantic map frames by exploiting common semantic cues, including traffic lights and lane markings. We formulate the problem using a modified Iterated Extended Kalman Filter, which incorporates GPS and camera images for semantic cue detection via Convolutional Neural Networks. Experimental results show that our proposed algorithm achieves decimetre-level accuracy comparable to typical LIDAR localization performance and is robust against sparse semantic features and frequent GPS dropouts.
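To make the self-calibration idea concrete, the sketch below estimates a slowly drifting 2D offset between the GPS frame and the map frame with a plain linear Kalman filter. It is not the paper's modified Iterated Extended Kalman Filter: the class `OffsetFilter`, its method names, the noise values, and the assumption that a detected cue directly yields the vehicle-to-landmark vector in map-aligned axes are all hypothetical simplifications chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class OffsetFilter:
    """Illustrative per-axis Kalman filter for a GPS-to-map frame offset.

    Assumed model (not from the paper): gps = map_position + offset + noise,
    with the offset following a slow random walk.
    """
    x: float = 0.0    # estimated offset along the first map axis (m)
    y: float = 0.0    # estimated offset along the second map axis (m)
    var: float = 4.0  # variance of each offset component (m^2), assumed value
    q: float = 1e-4   # random-walk process noise per step (m^2), assumed value
    r: float = 0.25   # measurement noise variance (m^2), assumed value

    def predict(self):
        # Random-walk model: the estimate is unchanged, uncertainty grows.
        self.var += self.q

    def update(self, gps_xy, landmark_map_xy, vehicle_to_landmark_xy):
        # Vehicle position in the map frame implied by the semantic cue.
        px = landmark_map_xy[0] - vehicle_to_landmark_xy[0]
        py = landmark_map_xy[1] - vehicle_to_landmark_xy[1]
        # The observed offset is the gap between GPS and that map position.
        zx = gps_xy[0] - px
        zy = gps_xy[1] - py
        k = self.var / (self.var + self.r)  # Kalman gain
        self.x += k * (zx - self.x)
        self.y += k * (zy - self.y)
        self.var *= 1.0 - k

    def correct_gps(self, gps_xy):
        # Map-frame position from GPS alone, used when no cue is visible.
        return (gps_xy[0] - self.x, gps_xy[1] - self.y)
```

When a cue such as a traffic light is visible, `predict()` followed by `update(...)` refines the offset. When no cue is visible, only `predict()` runs and `correct_gps(...)` applies the last calibrated offset to the raw GPS fix, which is one simple way to bridge gaps between sparse semantic detections.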
