Andrew Zou Li

Stepping Forward on the Last Mile

Nov 06, 2024

Abstract: Continuously adapting pre-trained models to local data on resource constrained edge devices is the *last mile* for model deployment. However, as models increase in size and depth, backpropagation requires a large amount of memory, which becomes prohibitive for edge devices. In addition, most existing low power neural processing engines (e.g., NPUs, DSPs, MCUs, etc.) are designed as fixed-point inference accelerators, without training capabilities. Forward gradients, solely based on directional derivatives computed from two forward calls, have been recently used for model training, with substantial savings in computation and memory. However, the performance of quantized training with fixed-point forward gradients remains unclear. In this paper, we investigate the feasibility of on-device training using fixed-point forward gradients, by conducting comprehensive experiments across a variety of deep learning benchmark tasks in both vision and audio domains. We propose a series of algorithm enhancements that further reduce the memory footprint, and the accuracy gap compared to backpropagation. An empirical study on how training with forward gradients navigates in the loss landscape is further explored. Our results demonstrate that on the last mile of model customization on edge devices, training with fixed-point forward gradients is a feasible and practical approach.
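To make the phrase "directional derivatives computed from two forward calls" concrete, here is a minimal sketch of one common way such an estimate can be formed. It is not taken from the paper: the central-difference form, the Gaussian direction, the step size `eps`, and the helper names `forward_gradient`, `quadratic_loss`, and `train` are all assumptions made for illustration, and the sketch uses ordinary floating point rather than the fixed-point arithmetic the paper studies.

```python
import random


def forward_gradient(loss, w, eps=1e-3, rng=random):
    """Estimate the gradient of `loss` at `w` using two forward calls.

    Hypothetical helper: a central-difference sketch, not the paper's code.
    """
    # Sample a random perturbation direction v ~ N(0, I).
    v = [rng.gauss(0.0, 1.0) for _ in w]
    w_plus = [wi + eps * vi for wi, vi in zip(w, v)]
    w_minus = [wi - eps * vi for wi, vi in zip(w, v)]
    # Two forward calls approximate the directional derivative along v.
    d = (loss(w_plus) - loss(w_minus)) / (2.0 * eps)
    # Scaling v by the directional derivative gives the gradient estimate.
    return [d * vi for vi in v]


def quadratic_loss(w):
    # Toy objective (assumption) with its minimum at w = [1, 1, ..., 1].
    return sum((wi - 1.0) ** 2 for wi in w)


def train(steps=2000, lr=0.01, seed=0):
    # Plain SGD driven only by forward-gradient estimates; no backward pass.
    rng = random.Random(seed)
    w = [0.0, 0.0, 0.0, 0.0]
    for _ in range(steps):
        g = forward_gradient(quadratic_loss, w, rng=rng)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w
```

Calling `train()` drives the toy loss toward zero without storing any activations for a backward pass, which is the memory saving the abstract refers to. Each individual estimate is noisy, so a small learning rate is used here.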

An Adaptive Robotics Framework for Chemistry Lab Automation

Dec 19, 2022

Abstract: In the process of materials discovery, chemists currently need to perform many laborious, time-consuming, and often dangerous lab experiments. To accelerate this process, we propose a framework for robots to assist chemists by performing lab experiments autonomously. The solution allows a general-purpose robot to perform diverse chemistry experiments and efficiently make use of available lab tools. Our system can load high-level descriptions of chemistry experiments, perceive a dynamic workspace, and autonomously plan the required actions and motions to perform the given chemistry experiments with common tools found in the existing lab environment. Our architecture uses a modified PDDLStream solver for integrated task and constrained motion planning, which generates plans and motions that are guaranteed to be safe by preventing collisions and spillage. We present a modular framework that can scale to many different experiments, actions, and lab tools. In this work, we demonstrate the utility of our framework on three pouring skills and two foundational chemical experiments for materials synthesis: solubility and recrystallization. More experiments and updated evaluations can be found at https://ac-rad.github.io/arc-icra2023.

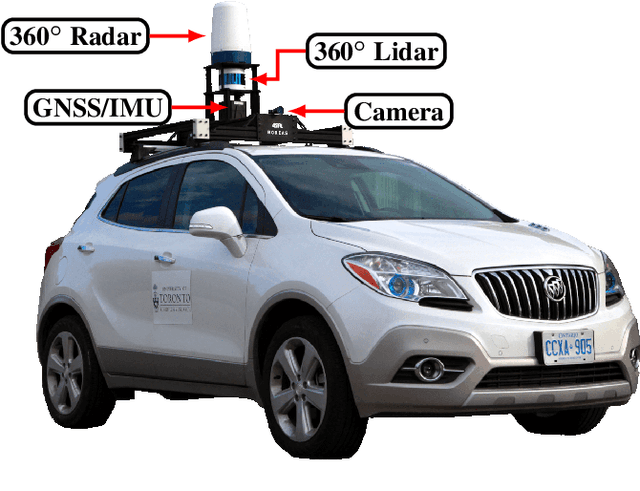

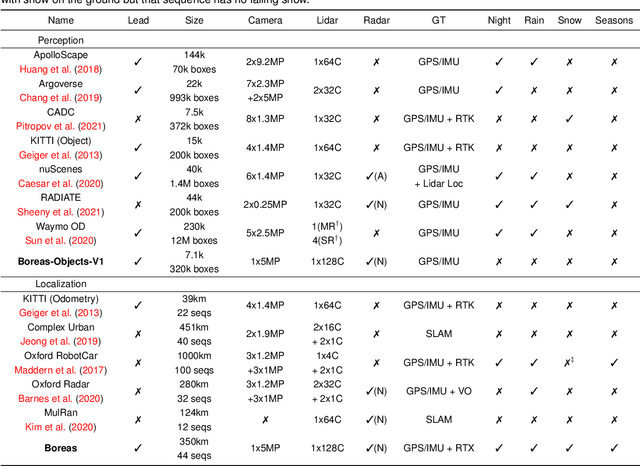

Boreas: A Multi-Season Autonomous Driving Dataset

Mar 18, 2022

Abstract: The Boreas dataset was collected by driving a repeated route over the course of one year, resulting in stark seasonal variations and adverse weather conditions such as rain and falling snow. In total, the Boreas dataset contains over 350 km of driving data featuring a 128-channel Velodyne Alpha-Prime lidar, a 360-degree Navtech CIR304-H scanning radar, a 5MP FLIR Blackfly S camera, and centimetre-accurate post-processed ground truth poses. At launch, our dataset will support live leaderboards for odometry, metric localization, and 3D object detection. The dataset and development kit are available at: https://www.boreas.utias.utoronto.ca
