Daniel Schleich

Aerial Assistance System for Automated Firefighting during Turntable Ladder Operations

Nov 18, 2025

Abstract:Fires in industrial facilities pose special challenges to firefighters, e.g., due to the sheer size and scale of the buildings. The resulting visual obstructions impair firefighting accuracy, a problem further compounded by inaccurate assessments of the fire's location. Such imprecision both increases the overall damage and unnecessarily prolongs the fire brigade's operation. We propose an automated assistance system for firefighting that uses a motorized fire monitor on a turntable ladder with aerial support from an unmanned aerial vehicle (UAV). The UAV flies autonomously within an obstacle-free flight funnel derived from geodata, detecting and localizing heat sources. An operator supervises the operation on a handheld controller and selects a fire target within reach. After the selection, the UAV automatically plans a path between two triangulation poses and traverses it for continued fire localization. Simultaneously, our system steers the fire monitor so that the water jet reaches the detected heat source. In preliminary tests, our assistance system successfully localized multiple heat sources and directed a water jet towards the fires.
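The abstract localizes the fire by moving the UAV between two triangulation poses. A common, generic way to triangulate a point from two viewing rays (for example, a camera position plus a bearing toward a thermal hotspot) is the midpoint method: find the closest points on the two rays and average them. The minimal sketch below assumes both rays are given in a shared world frame; the function and helper names are illustrative and are not taken from the paper.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3, eps: float = 1e-9) -> Vec3:
    """Estimate a 3-D point from two viewing rays p1 + t*d1 and p2 + s*d2.

    The rays are treated as infinite lines; the result is the midpoint of the
    shortest segment connecting them. Directions need not be normalized.
    """
    w0 = _sub(p1, p2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        # Nearly parallel rays: the baseline gives no usable depth information.
        raise ValueError("rays are (nearly) parallel; move the poses further apart")
    t = (b * e - c * d) / denom  # parameter of the closest point on line 1
    s = (a * e - b * d) / denom  # parameter of the closest point on line 2
    q1 = _add(p1, _scale(d1, t))
    q2 = _add(p2, _scale(d2, s))
    return _scale(_add(q1, q2), 0.5)
```

For two poses at (0, 0, 0) and (10, 0, 0) that both see a hotspot at (5, 5, 0), `triangulate_midpoint((0, 0, 0), (1, 1, 0), (10, 0, 0), (-1, 1, 0))` returns (5.0, 5.0, 0.0). Widely separated poses keep `denom` away from zero, which is one reason the baseline between the two triangulation poses matters.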

Perception-aware Exploration for Consumer-grade UAVs

Nov 18, 2025

Abstract:In our work, we extend the current state-of-the-art approach for autonomous multi-UAV exploration to consumer-grade UAVs, such as the DJI Mini 3 Pro. We propose a pipeline that selects viewpoint pairs from which depth can be estimated and plans a trajectory that satisfies the motion constraints necessary for odometry estimation. For multi-UAV exploration, we propose a semi-distributed communication scheme that distributes the workload in a balanced manner. We evaluate the performance of our approach in simulation for different numbers of UAVs and demonstrate its ability to safely explore the environment and reconstruct the map despite the hardware limitations of consumer-grade UAVs.
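One generic way to decide whether a pair of viewpoints supports depth estimation is to check the parallax (triangulation) angle the two camera centres subtend at a point of interest: a very small angle makes depth ill-conditioned, while a very large one makes matching between the views harder. The sketch below assumes known camera centres and a target point; the thresholds `min_deg`/`max_deg` and the function names are hypothetical, and this is not claimed to be the selection criterion used in the paper.

```python
import math
from itertools import combinations


def parallax_deg(c1, c2, x):
    """Angle (degrees) at point x between the viewing rays from camera centres c1 and c2."""
    v1 = [xi - ci for xi, ci in zip(x, c1)]
    v2 = [xi - ci for xi, ci in zip(x, c2)]
    n1 = math.sqrt(sum(v * v for v in v1))
    n2 = math.sqrt(sum(v * v for v in v2))
    if n1 == 0.0 or n2 == 0.0:
        return 0.0  # a camera located at the target gives no usable geometry
    cos_a = sum(a * b for a, b in zip(v1, v2)) / (n1 * n2)
    # Clamp to [-1, 1] to guard against rounding before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def select_pairs(viewpoints, target, min_deg=5.0, max_deg=40.0):
    """Return index pairs of viewpoints whose parallax on target lies in [min_deg, max_deg]."""
    pairs = []
    for i, j in combinations(range(len(viewpoints)), 2):
        angle = parallax_deg(viewpoints[i], viewpoints[j], target)
        if min_deg <= angle <= max_deg:
            pairs.append((i, j))
    return pairs
```

With camera centres at (0, 0, 0), (1, 0, 0), and (20, 0, 0) observing a point at (0, 0, 10), only the first two form an acceptable pair (about 5.7 degrees of parallax); the pairs involving the distant camera exceed `max_deg`.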

Quadrupedal Footstep Planning using Learned Motion Models of a Black-Box Controller

Jul 23, 2023

Abstract:Legged robots are increasingly entering new domains and applications, including search and rescue, inspection, and logistics. However, for such systems to be valuable in real-world scenarios, they must be able to navigate irregular terrain autonomously and robustly. In many cases, commercially available robots do not provide such abilities and can only perform blind locomotion. Furthermore, their controller cannot easily be modified by the end user, and changing it would require a new, time-consuming control synthesis. In this work, we present a fast local motion planning pipeline that extends the capabilities of a black-box walking controller that can only track high-level reference velocities. More precisely, we learn a set of motion models for such a controller that map high-level velocity commands to Center of Mass (CoM) and footstep motions. We then integrate these models with a variant of the A* algorithm to plan the CoM trajectory, footstep sequences, and corresponding high-level velocity commands based on visual information, allowing the quadruped to safely traverse irregular terrain on demand.
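The abstract combines learned motion models with a variant of A*. The minimal sketch below shows only plain A* over discretized CoM positions, where a motion model predicts the successor state for each high-level velocity command and the planner returns the command sequence. The command set, the grid, and the lookup table standing in for the learned model are all hypothetical, and footstep placement and terrain costs from visual data are omitted.

```python
import heapq
import math

# Hypothetical stand-in for a learned motion model: each discrete high-level
# velocity command maps to the CoM displacement (in grid cells) it produces
# over one control interval. A real system would regress this from data.
COMMANDS = {
    "forward": (1, 0),
    "back": (-1, 0),
    "left": (0, 1),
    "right": (0, -1),
    "diag_left": (1, 1),
    "diag_right": (1, -1),
}


def motion_model(state, cmd):
    dx, dy = COMMANDS[cmd]
    return (state[0] + dx, state[1] + dy)


def plan(start, goal, blocked, width, height):
    """A* over CoM grid positions; returns a list of commands, or None if unreachable."""
    def h(s):  # Euclidean distance: admissible because step cost is Euclidean length
        return math.hypot(goal[0] - s[0], goal[1] - s[1])

    open_heap = [(h(start), 0.0, start)]
    g = {start: 0.0}
    parent = {start: None}  # state -> (previous state, command that led here)
    closed = set()
    while open_heap:
        _, cost, s = heapq.heappop(open_heap)
        if s in closed:
            continue
        closed.add(s)
        if s == goal:
            cmds = []
            while parent[s] is not None:
                s, cmd = parent[s]
                cmds.append(cmd)
            return cmds[::-1]
        for cmd in COMMANDS:
            n = motion_model(s, cmd)
            if not (0 <= n[0] < width and 0 <= n[1] < height) or n in blocked:
                continue
            new_cost = cost + math.hypot(n[0] - s[0], n[1] - s[1])
            if new_cost < g.get(n, math.inf):
                g[n] = new_cost
                parent[n] = (s, cmd)
                heapq.heappush(open_heap, (new_cost + h(n), new_cost, n))
    return None
```

For example, with the cell (1, 0) blocked on a 3 x 2 grid, `plan((0, 0), (2, 0), {(1, 0)}, 3, 2)` steps around the obstacle with `["diag_left", "diag_right"]`. Planning over commands rather than raw positions is what lets the output be sent directly to a controller that only accepts velocity references.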

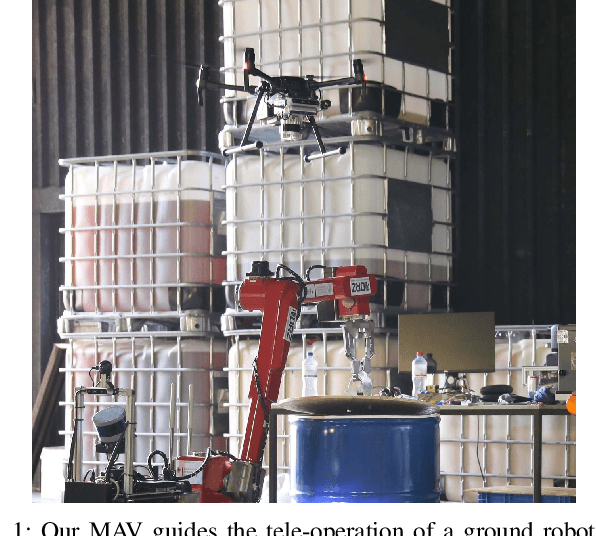

Lessons from Robot-Assisted Disaster Response Deployments by the German Rescue Robotics Center Task Force

Dec 19, 2022

Abstract:Earthquakes, fires, and floods often cause buildings to collapse. However, inspecting damaged buildings poses a high risk for emergency forces or is even impossible. We present three selected recent missions of the Robotics Task Force of the German Rescue Robotics Center, in which both ground and aerial robots were used to explore destroyed buildings. We describe and reflect on the missions as well as the lessons learned from them. To make robots from research laboratories fit for real operations, realistic test environments were set up for outdoor and indoor use and tested in regular exercises by researchers and emergency forces. Based on this experience, the robots and their control software were significantly improved. Furthermore, top teams of researchers and first responders were formed, each with realistic assessments of the operational and practical suitability of robotic systems.

Predictive Angular Potential Field-based Obstacle Avoidance for Dynamic UAV Flights

Aug 11, 2022

Abstract:In recent years, unmanned aerial vehicles (UAVs) have been used for numerous inspection and video capture tasks. Manually controlling UAVs in the vicinity of obstacles is challenging, however, and poses a high risk of collisions. Even for autonomous flight, global navigation planning might be too slow to react to newly perceived obstacles. Disturbances such as wind might also cause deviations from the planned trajectories. In this work, we present a fast predictive obstacle avoidance method that does not depend on higher-level localization or mapping and maintains the dynamic flight capabilities of UAVs. It operates directly on LiDAR range images in real time and adjusts the current flight direction by computing angular potential fields within the range image. The velocity magnitude is subsequently determined based on a trajectory prediction and time-to-contact estimation. Our method is evaluated using Hardware-in-the-Loop simulations. It keeps the UAV at a safe distance from obstacles while allowing higher flight velocities than previous reactive obstacle avoidance methods that operate directly on sensor data.
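The two steps named above, choosing a direction from an angular potential field and then limiting speed by time-to-contact, can be illustrated with a toy example. This sketch is a simplification under stated assumptions: it uses a 1-D ring of azimuth bins instead of a full 2-D LiDAR range image, a constant-velocity time-to-contact instead of the paper's trajectory prediction, and hypothetical potential terms, gains, and function names.

```python
import math


def choose_direction(ranges, goal_bin, influence=5.0, k_att=1.0, k_rep=5.0, sigma_bins=1.0):
    """Pick the azimuth bin with minimal potential in a 1-D ring of range readings.

    ranges: range in metres per azimuth bin (math.inf for no return), wrapping around.
    goal_bin: index of the bin pointing toward the desired flight direction.
    """
    n = len(ranges)

    def ang_dist(i, j):  # angular distance in bins on the ring
        d = abs(i - j) % n
        return min(d, n - d)

    best_bin, best_pot = None, math.inf
    for i in range(n):
        pot = k_att * ang_dist(i, goal_bin)  # attraction toward the goal direction
        for j, r in enumerate(ranges):
            if r < influence:
                # Repulsion grows as an obstacle gets closer and decays with angular distance.
                closeness = (influence - r) / influence
                pot += k_rep * closeness * math.exp(-(ang_dist(i, j) / sigma_bins) ** 2)
        if pot < best_pot:  # strict comparison: ties keep the lower bin index
            best_bin, best_pot = i, pot
    return best_bin


def safe_speed(range_along_dir, v_max=5.0, min_ttc=2.0):
    """Largest speed whose constant-velocity time-to-contact is at least min_ttc seconds."""
    return min(v_max, range_along_dir / min_ttc)
```

With eight bins and an obstacle 1 m away in the goal bin, the chosen direction is deflected away from the goal; `safe_speed(4.0)` then caps the velocity at 2.0 m/s so that contact would take at least 2 s. Separating direction and speed selection mirrors the structure described in the abstract.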

Two-step Planning of Dynamic UAV Trajectories using Iterative $\delta$-Spaces

May 04, 2022

Abstract:UAV trajectory planning is often done in a two-step approach, where a low-dimensional path is refined into a dynamic trajectory. The resulting trajectories are, however, only locally optimal. On the other hand, direct planning in higher-dimensional state spaces generates globally optimal solutions but is time-consuming and thus infeasible for time-constrained applications. To address this issue, we propose $\delta$-Spaces, a pruned high-dimensional state space representation for trajectory refinement. It contains not only the area around a single lower-dimensional path but the union of multiple near-optimal paths, which makes it less prone to local minima. Furthermore, we propose an anytime algorithm using $\delta$-Spaces of increasing sizes. We compare our method against state-of-the-art search-based trajectory planning methods and evaluate it in 2D and 3D environments to generate second-order and third-order UAV trajectories.
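One way to compute a union of near-optimal low-dimensional paths on a grid is with two Dijkstra searches: a cell lies on some start-to-goal path of cost at most c* + δ exactly when its cost from the start plus its cost to the goal does not exceed that bound. The sketch below illustrates this general idea on a 4-connected, unit-cost occupancy grid; it is an assumption about how such a region could be built, the paper's exact construction may differ, and the subsequent high-dimensional trajectory refinement is not shown.

```python
import heapq


def dijkstra(grid, src):
    """Cost-to-come from src on a 4-connected grid (0 = free, 1 = blocked)."""
    rows, cols = len(grid), len(grid[0])
    dist = {src: 0}
    heap = [(0, src)]
    while heap:
        d, (r, c) = heapq.heappop(heap)
        if d > dist[(r, c)]:
            continue  # stale heap entry
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                nd = d + 1
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    heapq.heappush(heap, (nd, (nr, nc)))
    return dist


def delta_region(grid, start, goal, delta):
    """Cells lying on some start-goal path whose cost is at most optimal + delta."""
    from_start = dijkstra(grid, start)
    if goal not in from_start:
        return set()
    # The grid is undirected, so cost-to-goal equals cost-from-goal.
    from_goal = dijkstra(grid, goal)
    best = from_start[goal]
    return {cell for cell, g in from_start.items()
            if cell in from_goal and g + from_goal[cell] <= best + delta}
```

On an empty 3 x 3 grid from (0, 0) to (0, 2), δ = 0 keeps only the three cells of the straight path, while δ = 2 also admits the middle row. Because the regions for increasing δ are nested, an anytime scheme can call `delta_region` with growing δ and refine within progressively larger regions.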

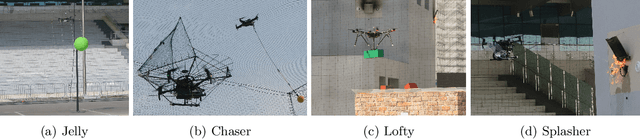

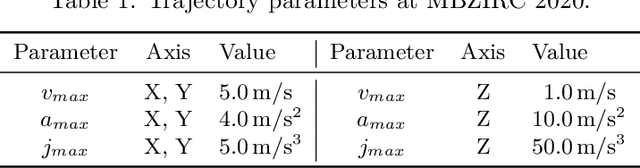

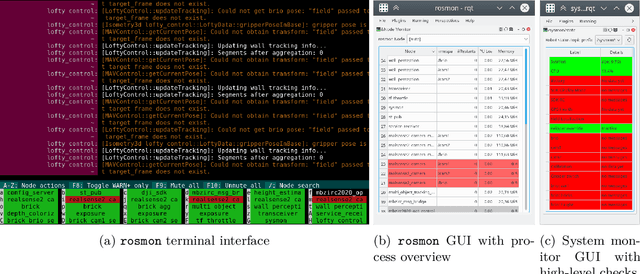

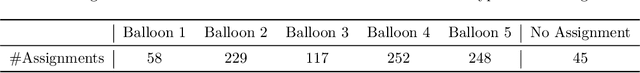

Target Chase, Wall Building, and Fire Fighting: Autonomous UAVs of Team NimbRo at MBZIRC 2020

Jan 11, 2022

Abstract:The Mohamed Bin Zayed International Robotics Challenge (MBZIRC) 2020 posed diverse challenges for unmanned aerial vehicles (UAVs). We present our four tailored UAVs, specifically developed for individual aerial-robot tasks of MBZIRC, including custom hardware and software components. In Challenge 1, a target UAV is pursued using an efficient onboard object detection pipeline to capture a ball from the target UAV. A second UAV uses a similar detection method to find and pop balloons scattered throughout the arena. For Challenge 2, we demonstrate a larger UAV capable of autonomous aerial manipulation: bricks are found and tracked in camera images and are subsequently approached, picked, transported, and placed on a wall. Finally, in Challenge 3, our UAV autonomously finds fires using LiDAR and thermal cameras and extinguishes them with an onboard fire extinguisher. While every robot features task-specific subsystems, all UAVs rely on a standard software stack developed for this and future competitions. We present our mostly open-source software solutions, including tools for system configuration, monitoring, robust wireless communication, high-level control, and agile trajectory generation. To solve the MBZIRC 2020 tasks, we advanced the state of the art in multiple research areas, such as machine vision and trajectory generation. We present the scientific contributions that form the foundation of our algorithms and systems and analyze the results of the MBZIRC 2020 competition in Abu Dhabi, where our systems reached second place in the Grand Challenge. Furthermore, we discuss lessons learned from our participation in this complex robotic challenge.

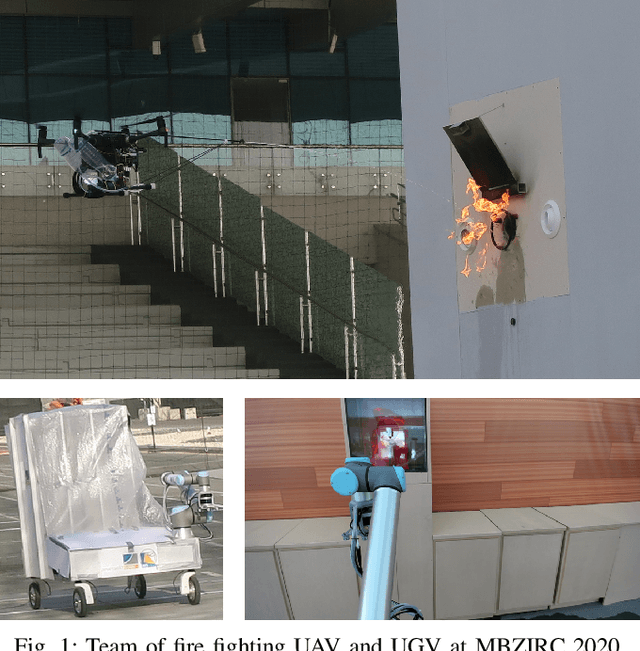

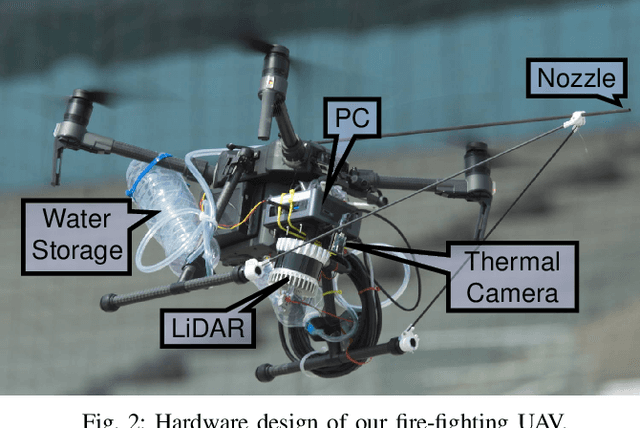

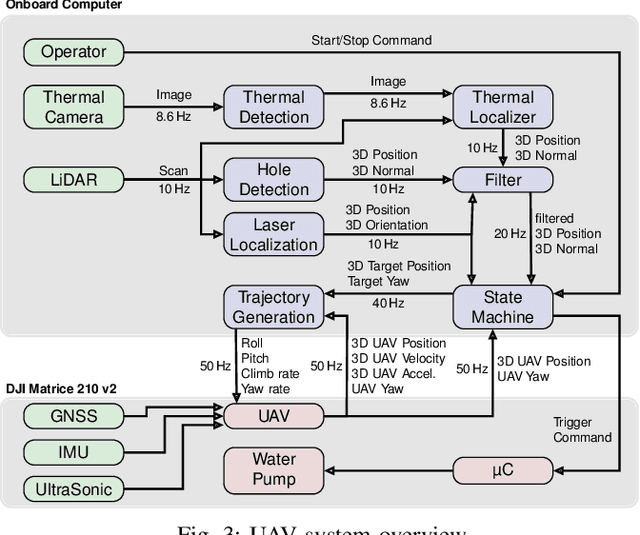

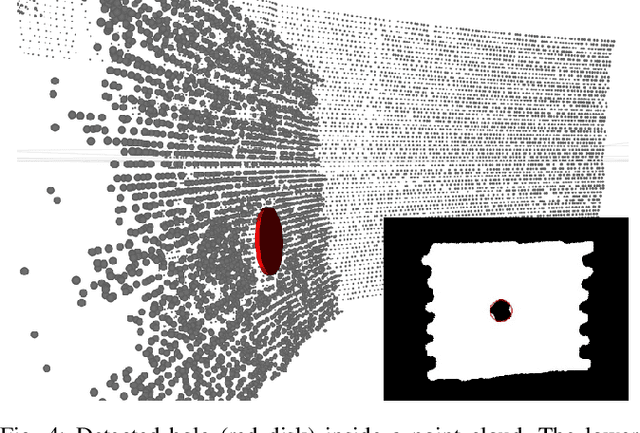

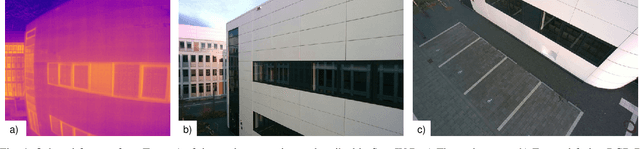

Autonomous Fire Fighting with a UAV-UGV Team at MBZIRC 2020

Jun 11, 2021

Abstract:Every day, burning buildings threaten the lives of occupants and of the first responders trying to save them. Quick action is of the essence, but some areas might be inaccessible or too dangerous to enter. Robotic systems have become a promising addition to firefighting, but at this stage they are mostly manually controlled, which is error-prone and requires specially trained personnel. We present two systems for autonomous firefighting from the air and on the ground that we developed for the Mohamed Bin Zayed International Robotics Challenge (MBZIRC) 2020. The systems use LiDAR for reliable localization within narrow, potentially GNSS-restricted environments while maneuvering close to obstacles. Measurements from LiDAR and thermal cameras are fused to track fires, while relative navigation ensures successful extinguishing. We analyze and discuss our successful participation in MBZIRC 2020, present further experiments, and provide insights into the lessons we learned from the competition.

Search-based Planning of Dynamic MAV Trajectories Using Local Multiresolution State Lattices

Mar 26, 2021

Abstract:Search-based methods that use motion primitives can incorporate the system's dynamics into the planning and thus generate dynamically feasible MAV trajectories that are globally optimal. However, searching high-dimensional state lattices is computationally expensive. Local multiresolution is a commonly used method to accelerate spatial path planning. While paths within the vicinity of the robot are represented at high resolution, the representation gets coarser for more distant parts. In this work, we apply the concept of local multiresolution to high-dimensional state lattices that include velocities and accelerations. Experiments show that our proposed approach significantly reduces planning times. Thus, it increases the applicability to large dynamic environments, where frequent replanning is necessary.
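The local multiresolution idea described above can be illustrated with a distance-dependent cell size: full resolution near the robot and a cell size that doubles each time the distance doubles. This is a generic spatial sketch with hypothetical parameters (`base`, `inner_radius`, `max_level`) and function names; the paper applies the concept to state lattices that also include velocities and accelerations, which is not shown here.

```python
import math


def level_at(distance, inner_radius, max_level):
    """Resolution level: 0 inside inner_radius, then +1 each time the distance doubles."""
    if distance < inner_radius:
        return 0
    return min(max_level, 1 + int(math.floor(math.log2(distance / inner_radius))))


def cell_size(distance, base=0.25, inner_radius=2.0, max_level=4):
    """Lattice cell size (m) that applies at a given distance from the robot."""
    return base * 2 ** level_at(distance, inner_radius, max_level)


def snap(point, robot, base=0.25, inner_radius=2.0, max_level=4):
    """Snap a position to the lattice resolution that applies at its distance from the robot."""
    s = cell_size(math.dist(point, robot), base, inner_radius, max_level)
    return tuple(round(p / s) * s for p in point)
```

With these defaults, a point 1 m from the robot is represented on a 0.25 m lattice, whereas a point at (10.3, 0.0) falls into level 3 and snaps to (10.0, 0.0) on a 2 m lattice. Far fewer distinct states exist in the distant rings, which is the source of the planning-time savings the abstract reports.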

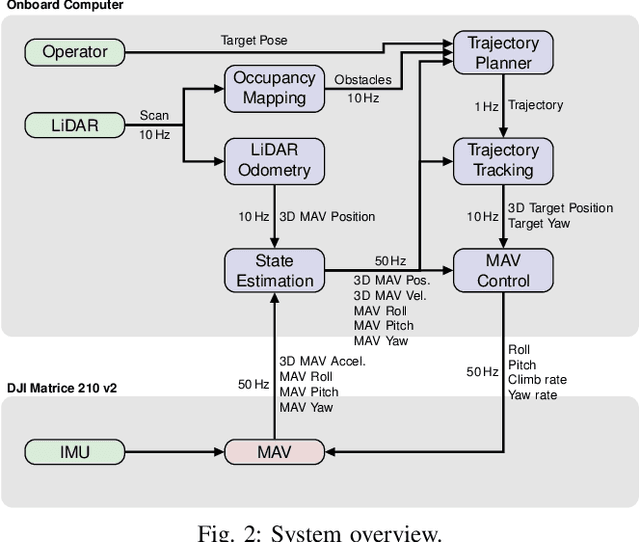

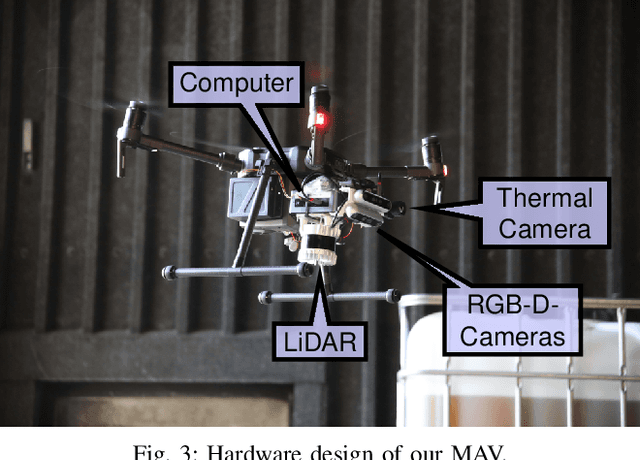

Autonomous Flight in Unknown GNSS-denied Environments for Disaster Examination

Mar 22, 2021

Abstract:Micro aerial vehicles (MAVs) exhibit high potential for information extraction tasks in search and rescue scenarios. Manually controlling MAVs in such scenarios requires experienced pilots and is error-prone, especially in the stressful situations of real emergencies. The conditions of disaster scenarios are also challenging for autonomous MAV systems: the environment is usually not known in advance, and GNSS might not always be available. We present a system for autonomous MAV flights in unknown environments that does not rely on global positioning systems. The method is evaluated in multiple search and rescue scenarios and allows for safe autonomous flights, even when transitioning between indoor and outdoor areas.