Hartmut Surmann

UAVs and Neural Networks for search and rescue missions

Oct 09, 2023

Abstract: In this paper, we present a method for detecting objects of interest, including cars, humans, and fire, in aerial images captured by unmanned aerial vehicles (UAVs), typically during vegetation fires. To achieve this, we use artificial neural networks and create a dataset for supervised learning. We support the labeling of the dataset with an object detection pipeline that combines classic image processing techniques with pretrained neural networks. In addition, we develop a data augmentation pipeline that extends the dataset with automatically labeled images. Finally, we evaluate the performance of different neural networks.

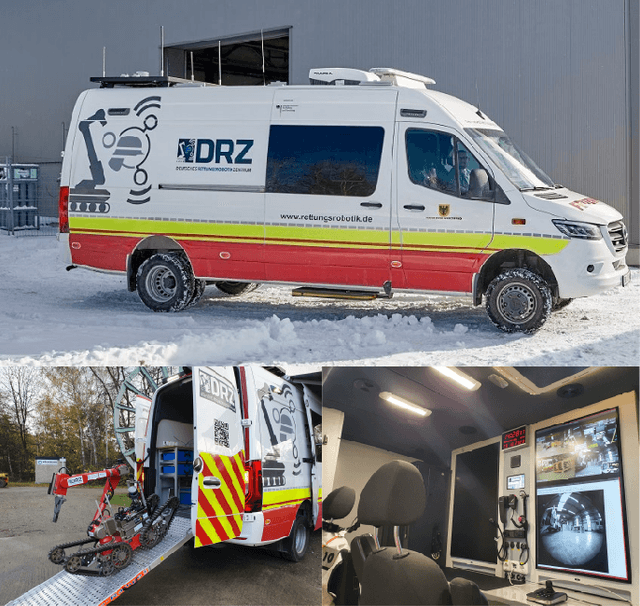

Lessons from Robot-Assisted Disaster Response Deployments by the German Rescue Robotics Center Task Force

Dec 19, 2022

Abstract: Earthquakes, fires, and floods often cause structural collapses of buildings. Inspecting damaged buildings poses a high risk for emergency forces or is even impossible. We present three selected recent missions of the Robotics Task Force of the German Rescue Robotics Center in which both ground and aerial robots were used to explore destroyed buildings. We describe and reflect on the missions and on the lessons learned from them. To make robots from research laboratories fit for real operations, realistic test environments for outdoor and indoor use were set up and used in regular exercises by researchers and emergency forces. Based on this experience, the robots and their control software were significantly improved. Furthermore, top teams of researchers and first responders were formed, each with a realistic assessment of the operational and practical suitability of robotic systems.

PatchMatch-Stereo-Panorama, a fast dense reconstruction from 360° video images

Nov 29, 2022

Abstract: This work proposes a new method for real-time dense 3D reconstruction for common 360° action cams, which can be mounted on small scouting UAVs during USAR missions. The proposed method extends a feature-based monocular visual SLAM system (OpenVSLAM, based on the popular ORB-SLAM) for robust long-term localization on equirectangular video input by adding a densification thread that computes dense correspondences for any given keyframe with respect to a local keyframe neighborhood using a PatchMatch-Stereo approach. While PatchMatch-Stereo algorithms are considered state of the art for large-scale Multi-View Stereo, they had not so far been adapted for real-time dense 3D reconstruction tasks. This work describes a new massively parallel variant of the PatchMatch-Stereo algorithm that differs from current approaches in two ways. First, it supports the equirectangular camera model, while other solutions are limited to the pinhole camera model. Second, it is optimized for low latency while keeping a high level of completeness and accuracy. To achieve this, it operates only on small sequences of keyframes but employs techniques to compensate for the potential loss of accuracy due to the limited number of frames. Results demonstrate that dense 3D reconstruction is possible on a consumer-grade laptop with a recent mobile GPU, with improved accuracy and completeness over common offline MVS solutions at comparable quality settings.
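
As an illustration of the equirectangular camera model mentioned in the abstract, the following is a minimal sketch of how a pixel in a 360° panorama can be mapped to a viewing direction and back. The function names and the axis convention (x right, y down, z forward, image center looking along +z) are assumptions made for this example and are not taken from the paper or from OpenVSLAM.

```python
import math


def pixel_to_bearing(u, v, width, height):
    """Map an equirectangular pixel (u, v) to a unit bearing vector.

    Assumed convention (illustrative only): x right, y down, z forward.
    """
    lon = (u / width - 0.5) * 2.0 * math.pi   # longitude in [-pi, pi]
    lat = (0.5 - v / height) * math.pi        # latitude in [-pi/2, pi/2]
    x = math.cos(lat) * math.sin(lon)
    y = -math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return (x, y, z)


def bearing_to_pixel(x, y, z, width, height):
    """Project a 3D direction (any non-zero length) to equirectangular pixels."""
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("direction must be non-zero")
    lon = math.atan2(x, z)
    # Clamp to guard against rounding slightly outside [-1, 1].
    lat = math.asin(max(-1.0, min(1.0, -y / norm)))
    u = (lon / (2.0 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return (u, v)
```

Unlike the pinhole model, this mapping is defined for every direction around the camera, which is why a stereo matcher written for pinhole images cannot be reused unchanged on 360° input.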

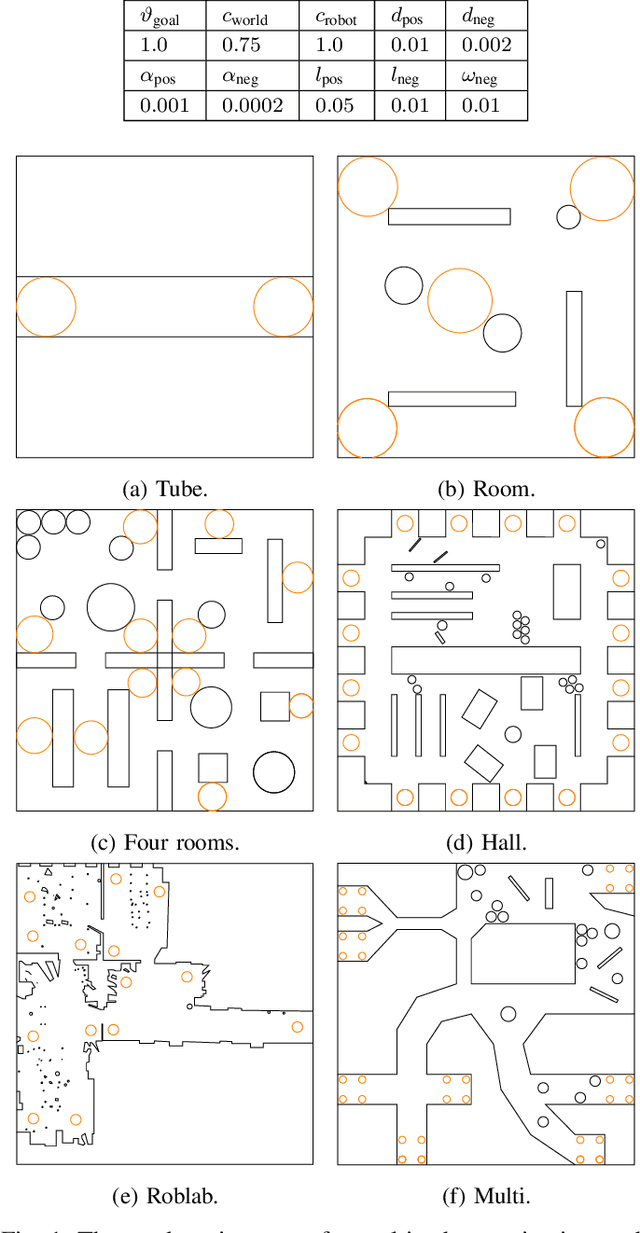

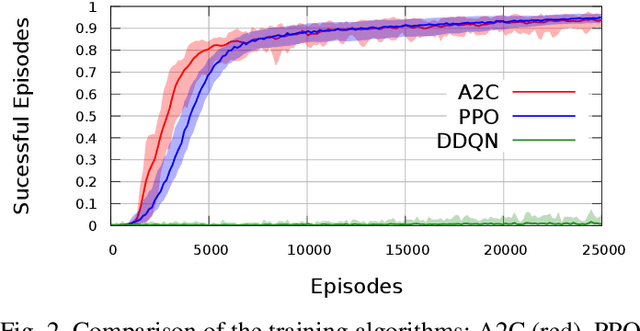

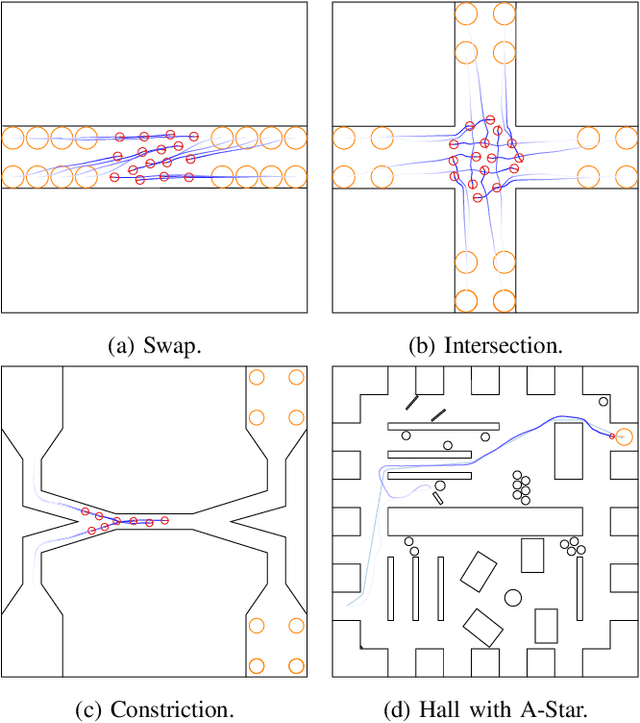

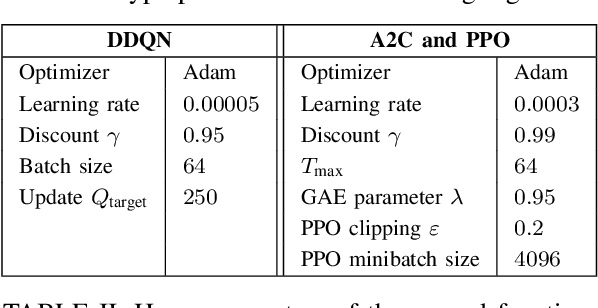

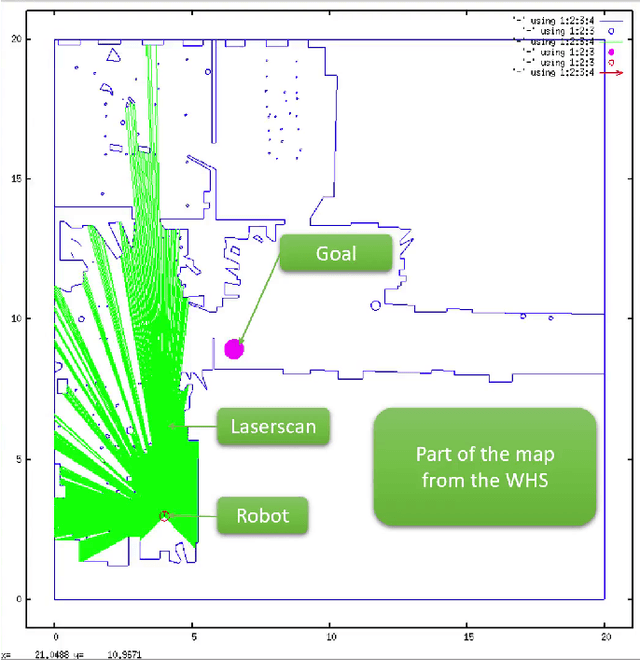

Obtaining Robust Control and Navigation Policies for Multi-Robot Navigation via Deep Reinforcement Learning

Sep 07, 2022

Abstract: Multi-robot navigation is a challenging task in which multiple robots must be coordinated simultaneously within dynamic environments. We apply deep reinforcement learning (DRL) to learn a decentralized end-to-end policy that maps raw sensor data to the command velocities of the agent. To enable the policy to generalize, training is performed in different environments and scenarios. The learned policy is tested and evaluated in common multi-robot scenarios such as swapping places, an intersection, and a bottleneck situation. This policy allows the agent to recover from dead ends and to navigate through complex environments.

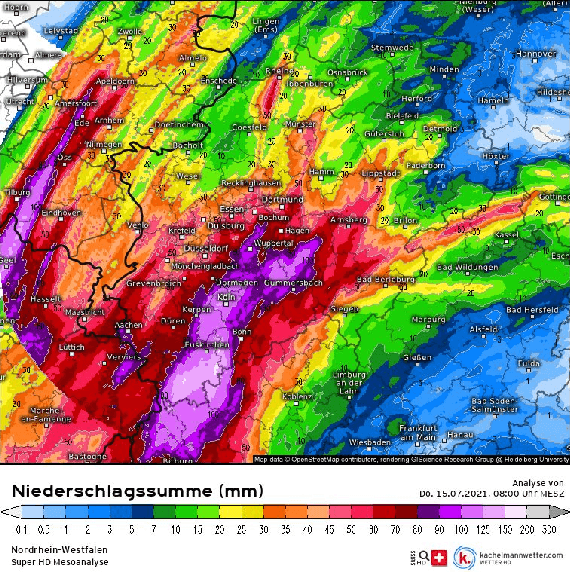

Deployment of Aerial Robots during the Flood Disaster in Erftstadt / Blessem in July 2021

Sep 07, 2022

Abstract: Climate change is leading to more and more extreme weather events such as heavy rainfall and flooding. This technical report deals with the question of how rescue commanders can be provided with current information better and faster during flood disasters using Unmanned Aerial Vehicles (UAVs), specifically during the flood in July 2021 in Central Europe, in Erftstadt / Blessem. The UAVs were used on the one hand for live observation and regular inspections of the flood edge, and on the other hand for systematic data acquisition in order to calculate 3D models using Structure from Motion and Multi-View Stereo. The 3D models, embedded in a GIS application, serve as a planning basis for systematic exploration and as decision support for the deployment of additional smaller UAVs as well as rescue forces. The systematic data acquisition by means of autonomous meander flights provides high-resolution images, which are processed into a georeferenced 3D model of the surrounding area within 15 minutes in a specially equipped robotic command vehicle (RobLW). Comparing high-resolution elevation profiles extracted from the 3D models on successive days makes changes in the water level visible. This information enables the emergency management to plan further inspections of the buildings and to search for missing persons on site.
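
The comparison of elevation profiles from successive days can be pictured with a small sketch. It assumes, purely for illustration, that both profiles were sampled at the same positions along the same line and are given in meters; the function names and the use of the median as a summary are choices made for this example, not the procedure described in the report.

```python
from statistics import median


def profile_change(day_one, day_two):
    """Per-sample elevation difference (day two minus day one), in meters.

    Assumes both profiles are sampled at identical positions (hypothetical setup).
    """
    if len(day_one) != len(day_two):
        raise ValueError("profiles must have the same number of samples")
    return [b - a for a, b in zip(day_one, day_two)]


def median_change(day_one, day_two):
    """Single robust summary value of the change along the profile."""
    return median(profile_change(day_one, day_two))
```

A negative median indicates that the surface along the profile, for example the water level, has dropped between the two flights.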

Deployment of Aerial Robots after a major fire of an industrial hall with hazardous substances, a report

Nov 29, 2021

Abstract: This technical report describes the mission and the experience gained during the reconnaissance of an industrial hall with hazardous substances after a major fire in Berlin. During this operation, only UAVs and cameras were used to obtain information about the site and the building. First, a georeferenced 3D model of the building was created in order to plan the entry into the hall. Subsequently, the UAVs were flown in the heavily damaged interior to take pictures from inside the hall. A 360° camera mounted under the UAV was used to collect images of the surrounding area, especially of sections that were difficult to fly into. Since the collected data set contained similar images as well as blurred images, it was cleaned of non-optimal images using visual SLAM, bundle adjustment, and blur detection so that a 3D model and overviews could be calculated. It turned out that the emergency services were not able to extract the necessary information from the 3D model. Therefore, an interactive panorama viewer with links to other 360° images was implemented, in which the links to the other images depend on the semi-dense point cloud and the camera positions located by the visual SLAM algorithm, so that the emergency forces could view the surroundings.
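
The report does not say which blur detection method was used. As one common possibility, the sketch below scores sharpness by the variance of a 4-neighbor Laplacian over a grayscale image given as a list of rows; the function names and the threshold value are assumptions for illustration and would need tuning on real data.

```python
def laplacian_variance(image):
    """Variance of the 4-neighbor Laplacian over the interior pixels.

    `image` is a grayscale image as a list of equally long rows of numbers.
    Low values suggest few sharp edges, i.e. a possibly blurred image.
    """
    height, width = len(image), len(image[0])
    if height < 3 or width < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                image[y - 1][x] + image[y + 1][x]
                + image[y][x - 1] + image[y][x + 1]
                - 4 * image[y][x]
            )
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def is_blurry(image, threshold=100.0):
    """Flag an image as blurry; the threshold is a hypothetical default."""
    return laplacian_variance(image) < threshold
```

In a cleaning step like the one described, images flagged this way would be dropped before reconstruction, while near-duplicate views would be handled separately.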

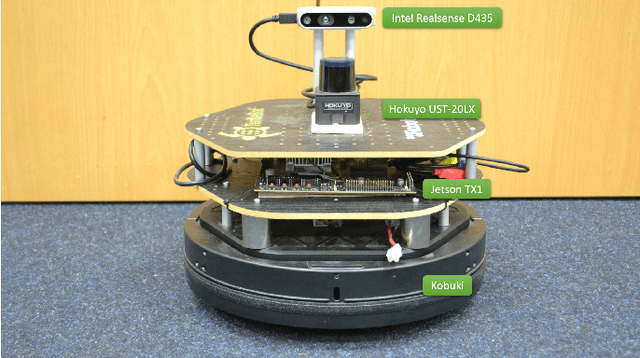

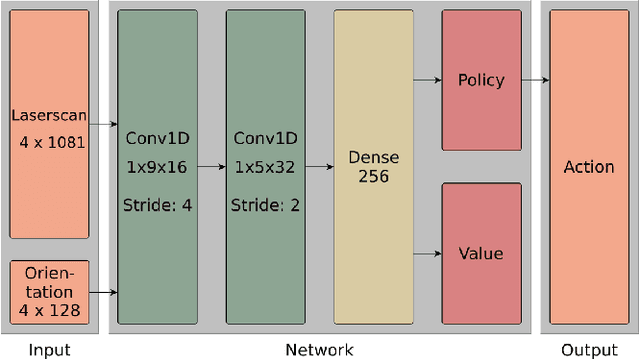

Deep Reinforcement learning for real autonomous mobile robot navigation in indoor environments

May 28, 2020

Abstract: Deep Reinforcement Learning has been successfully applied in various computer games [8]. However, it is still rarely used in real-world applications, especially for the navigation and continuous control of real mobile robots [13]. Previous approaches lack safety and robustness and/or need a structured environment. In this paper we present our proof of concept for autonomous self-learning robot navigation in an unknown environment for a real robot without a map or planner. The input for the robot is only the fused data from a 2D laser scanner and an RGB-D camera as well as the orientation to the goal. The map of the environment is unknown. The output actions of an Asynchronous Advantage Actor-Critic network (GA3C) are the linear and angular velocities for the robot. The navigator/controller network is pretrained in a high-speed, parallel, self-implemented simulation environment to speed up the learning process and is then deployed to the real robot. To avoid overfitting, we train relatively small networks and add random Gaussian noise to the input laser data. The sensor data fusion with the RGB-D camera allows the robot to navigate in real environments with real 3D obstacle avoidance and without the need to fit the environment to the sensory capabilities of the robot. To further increase the robustness, we train on environments of varying difficulty and run 32 training instances simultaneously. Video: supplementary File / YouTube, Code: GitHub
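
Adding Gaussian noise to the laser input, as mentioned in the abstract, can be sketched as follows. The noise level `sigma`, the clipping range `max_range`, and the function name are assumptions chosen for illustration; the abstract does not state the values used in the paper.

```python
import random


def add_gaussian_noise(ranges, sigma=0.02, max_range=10.0, rng=None):
    """Return a noisy copy of a laser scan (list of distances in meters).

    sigma and max_range are hypothetical defaults, not values from the paper.
    Results are clipped to [0, max_range] so they stay physically plausible.
    """
    rng = rng or random.Random()
    noisy = []
    for r in ranges:
        value = r + rng.gauss(0.0, sigma)
        noisy.append(min(max(value, 0.0), max_range))
    return noisy
```

Applied freshly to every simulated scan during training, such noise keeps the network from memorizing exact range values that a real sensor would never reproduce.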

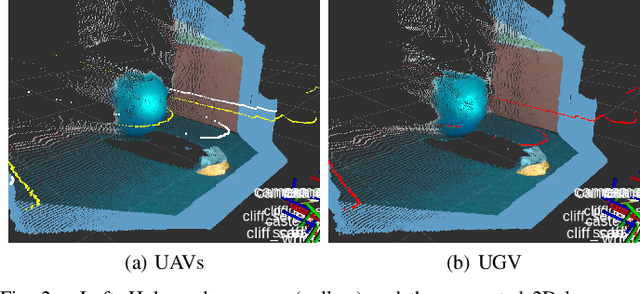

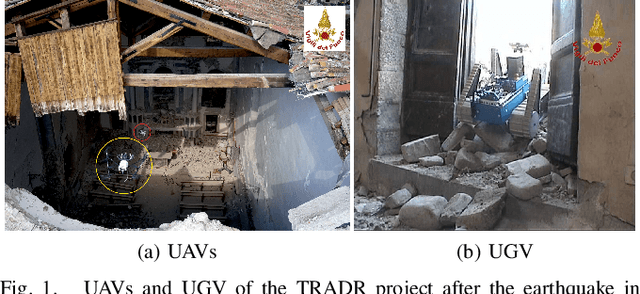

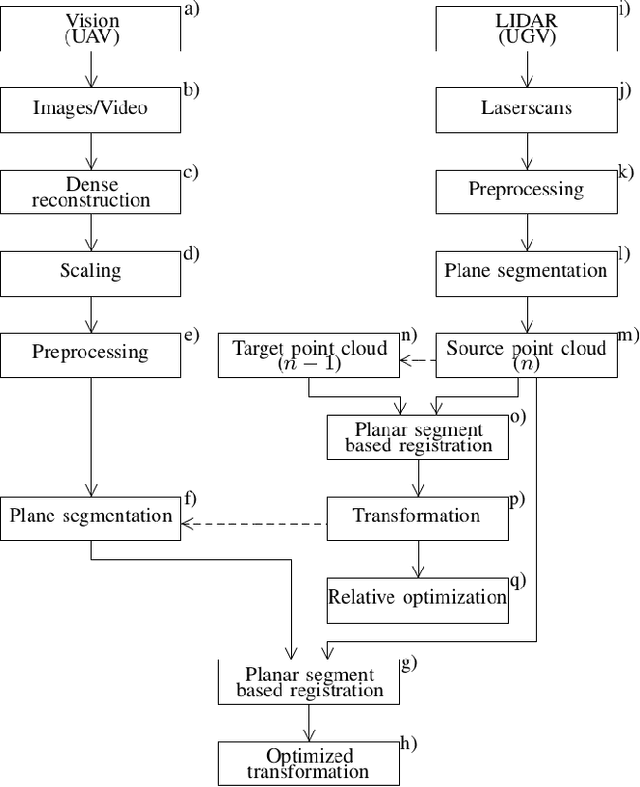

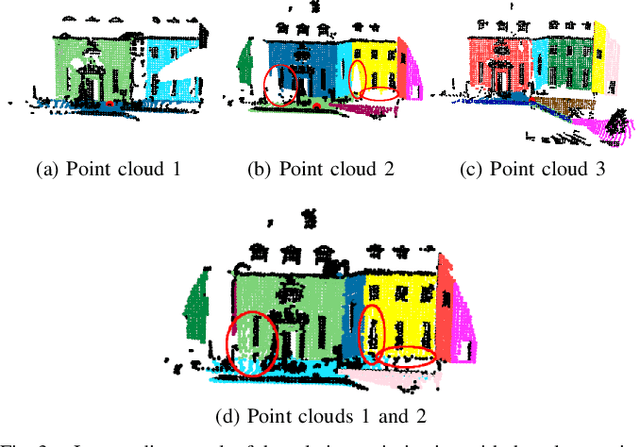

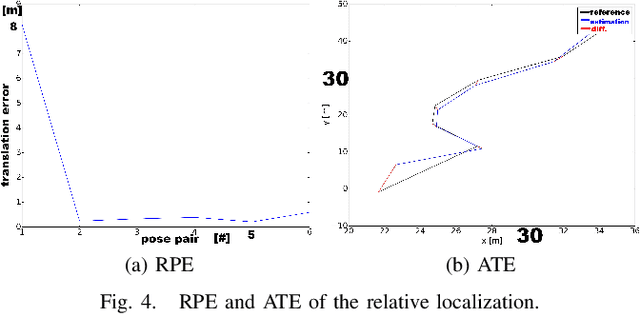

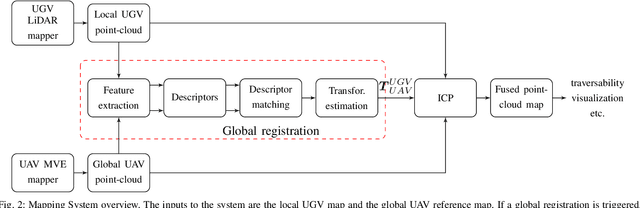

3D mapping for multi hybrid robot cooperation

Apr 08, 2019

Abstract: This paper presents a novel approach to building consistent 3D maps for multi-robot cooperation in USAR environments. The sensor streams from unmanned aerial vehicles (UAVs) and unmanned ground vehicles (UGVs) are fused into one consistent map. The UAV camera data are used to generate 3D point clouds that are fused with the 3D point clouds generated by a rolling 2D laser scanner on the UGV. The registration method is based on matching corresponding planar segments extracted from the point clouds. Based on the registration, an approach for globally optimized localization is presented. Notably, no information beyond the structure of the point clouds is required for the localization. Two examples show the performance of the overall registration.

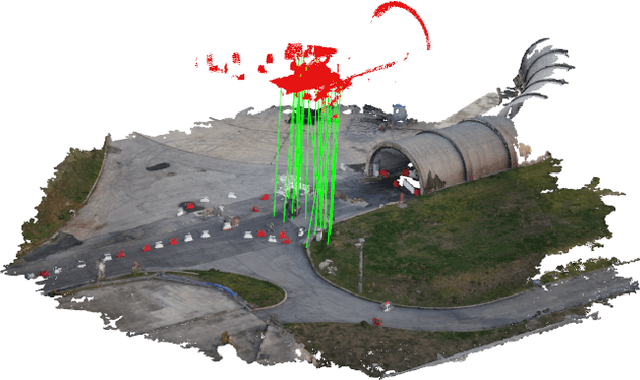

3D Registration of Aerial and Ground Robots for Disaster Response: An Evaluation of Features, Descriptors, and Transformation Estimation

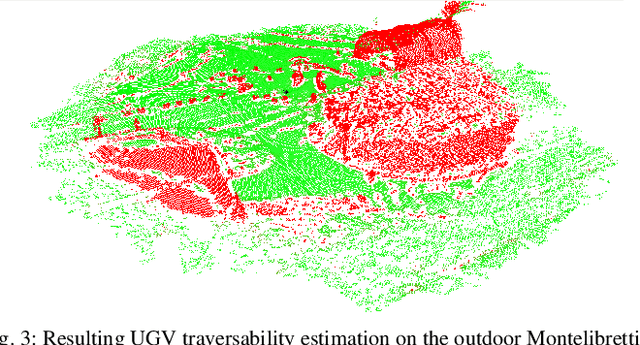

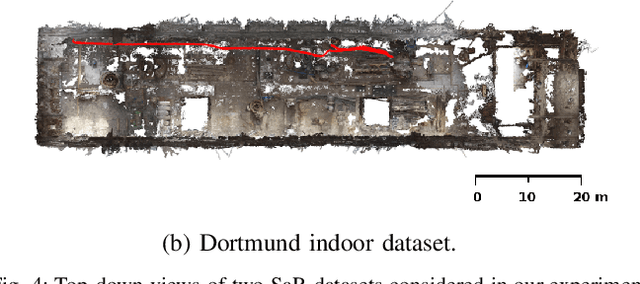

Oct 25, 2017

Abstract: Global registration of heterogeneous ground and aerial mapping data is a challenging task. This is especially difficult in disaster response scenarios, where we have no prior information on the environment and cannot assume the regular order of man-made environments or meaningful semantic cues. In this work we extensively evaluate different approaches to globally register UGV-generated 3D point-cloud data from LiDAR sensors with UAV-generated point-cloud maps from vision sensors. The approaches are realizations of different selections for: a) local features: key-points or segments; b) descriptors: FPFH, SHOT, or ESF; and c) transformation estimations: RANSAC or FGR. Additionally, we compare the results against standard approaches such as applying ICP after a good prior transformation has been given. The evaluation criteria include the distance a UGV needs to travel to successfully localize, the registration error, and the computational cost. In this context, we report our findings on effectively performing the task on two new Search and Rescue datasets. Our results have the potential to help the community make informed decisions when registering point-cloud maps from ground robots to those from aerial robots.
