Changkun Liu

OpenNavMap: Structure-Free Topometric Mapping via Large-Scale Collaborative Localization

Jan 18, 2026

Abstract:Scalable and maintainable map representations are fundamental to enabling large-scale visual navigation and facilitating the deployment of robots in real-world environments. While collaborative localization across multi-session mapping enhances efficiency, traditional structure-based methods struggle with high maintenance costs and fail in featureless environments or under the significant viewpoint changes typical of crowd-sourced data. To address this, we propose OpenNavMap, a lightweight, structure-free topometric system leveraging 3D geometric foundation models for on-demand reconstruction. Our method unifies dynamic programming-based sequence matching, geometric verification, and confidence-calibrated optimization to achieve robust, coarse-to-fine submap alignment without requiring pre-built 3D models. Evaluations on the Map-Free benchmark demonstrate superior accuracy over structure-from-motion and regression baselines, achieving an average translation error of 0.62m. Furthermore, the system maintains global consistency across 15km of multi-session data with an absolute trajectory error below 3m for map merging. Finally, we validate practical utility through 12 successful autonomous image-goal navigation tasks on simulated and physical robots. Code and datasets will be publicly available at https://rpl-cs-ucl.github.io/OpenNavMap_page.

LiteVLoc: Map-Lite Visual Localization for Image Goal Navigation

Oct 06, 2024

Abstract:This paper presents LiteVLoc, a hierarchical visual localization framework that uses a lightweight topo-metric map to represent the environment. The method consists of three sequential modules that estimate camera poses in a coarse-to-fine manner. Unlike mainstream approaches relying on detailed 3D representations, LiteVLoc reduces storage overhead by leveraging learning-based feature matching and geometric solvers for metric pose estimation. A novel dataset for the map-free relocalization task is also introduced. Extensive experiments, including localization and navigation in both simulated and real-world scenarios, validate the system's performance and demonstrate its precision and efficiency for large-scale deployment. Code and data will be made publicly available.

GSLoc: Efficient Camera Pose Refinement via 3D Gaussian Splatting

Aug 20, 2024

Abstract:We leverage 3D Gaussian Splatting (3DGS) as a scene representation and propose a novel test-time camera pose refinement framework, GSLoc. This framework enhances the localization accuracy of state-of-the-art absolute pose regression and scene coordinate regression methods. The 3DGS model renders high-quality synthetic images and depth maps to facilitate the establishment of 2D-3D correspondences. GSLoc obviates the need for training feature extractors or descriptors by operating directly on RGB images, utilizing the 3D vision foundation model, MASt3R, for precise 2D matching. To improve the robustness of our model in challenging outdoor environments, we incorporate an exposure-adaptive module within the 3DGS framework. Consequently, GSLoc enables efficient pose refinement given a single RGB query and a coarse initial pose estimation. Our proposed approach surpasses leading NeRF-based optimization methods in both accuracy and runtime across indoor and outdoor visual localization benchmarks, achieving state-of-the-art accuracy on two indoor datasets.

AIR-HLoc: Adaptive Image Retrieval for Efficient Visual Localisation

Mar 27, 2024

Abstract:State-of-the-art (SOTA) hierarchical localisation pipelines (HLoc) rely on image retrieval (IR) techniques to establish 2D-3D correspondences by selecting the $k$ most similar images from a reference image database for a given query image. Although higher values of $k$ enhance localisation robustness, the computational cost of feature matching increases linearly with $k$. In this paper, we observe that queries that are the most similar to images in the database result in a higher proportion of feature matches and, thus, more accurate positioning. A small number of images is therefore sufficient for queries very similar to images in the reference database. We then propose a novel approach, AIR-HLoc, which divides query images into different localisation difficulty levels based on their similarity to the reference image database. We consider an image with high similarity to the reference image as an easy query and an image with low similarity as a hard query. Easy queries show a limited improvement in accuracy when increasing $k$, whereas higher values of $k$ significantly improve accuracy for hard queries. We therefore adapt the value of $k$ to the query's difficulty level. As a result, AIR-HLoc optimizes processing time by adaptively assigning different values of $k$ based on the similarity between the query and reference images without losing accuracy. Our extensive experiments on the Cambridge Landmarks, 7Scenes, and Aachen Day-Night-v1.1 datasets demonstrate our algorithm's efficacy, reducing computational overhead by 30\%, 26\%, and 11\%, respectively, while maintaining SOTA accuracy compared to HLoc with fixed image retrieval.
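The adaptive choice of $k$ described in the abstract can be illustrated with a minimal sketch. The function name `adaptive_k`, the similarity thresholds, and the per-level values of $k$ are all hypothetical and chosen only for illustration; the abstract does not state the values the authors use.

```python
from typing import Sequence


def adaptive_k(max_similarity: float,
               thresholds: Sequence[float] = (0.8, 0.6),
               k_values: Sequence[int] = (3, 6, 10)) -> int:
    """Pick how many reference images to retrieve for one query.

    max_similarity: best similarity score between the query and the
        reference database (higher means an easier query).
    thresholds: descending similarity cut-offs (illustrative values).
    k_values: k for each difficulty level, easiest first; must have
        exactly one more entry than thresholds (illustrative values).
    """
    if len(k_values) != len(thresholds) + 1:
        raise ValueError("k_values needs one more entry than thresholds")
    # Walk from the easiest level down; the first threshold met decides k.
    for threshold, k in zip(thresholds, k_values):
        if max_similarity >= threshold:
            return k
    # No threshold met: treat as a hard query and use the largest k.
    return k_values[-1]
```

With these assumed settings, a query scoring 0.9 against the database would be matched against 3 retrieved images, while one scoring 0.3 would be matched against 10.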

HR-APR: APR-agnostic Framework with Uncertainty Estimation and Hierarchical Refinement for Camera Relocalisation

Feb 22, 2024

Abstract:Absolute Pose Regressors (APRs) directly estimate camera poses from monocular images, but their accuracy is unstable across queries. Uncertainty-aware APRs provide uncertainty information on the estimated pose, alleviating the impact of unreliable predictions. However, existing uncertainty modelling techniques are often coupled with a specific APR architecture, resulting in suboptimal performance compared to state-of-the-art (SOTA) APR methods. This work introduces a novel APR-agnostic framework, HR-APR, that formulates uncertainty estimation as cosine similarity estimation between the query and database features. It neither relies on nor affects the APR network architecture, which makes it flexible and computationally efficient. In addition, we take advantage of the uncertainty for pose refinement to enhance the performance of APR. Extensive experiments demonstrate the effectiveness of our framework, reducing computational overhead by 27.4\% and 15.2\% on the 7Scenes and Cambridge Landmarks datasets, respectively, while maintaining SOTA accuracy among single-image APRs.
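The idea of treating uncertainty as a cosine similarity between query and database features can be sketched as follows. This is a simplified illustration, not the HR-APR implementation: the helper names and the 0.9 threshold are assumptions, and how the features are extracted and which database feature is compared are left out.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as maximally uncertain
    return dot / (norm_a * norm_b)


def needs_refinement(query_feat: Sequence[float],
                     db_feat: Sequence[float],
                     threshold: float = 0.9) -> bool:
    """Flag a pose estimate as uncertain when similarity is low.

    threshold is a hypothetical value chosen for illustration.
    """
    return cosine_similarity(query_feat, db_feat) < threshold
```

Under this assumption, only queries flagged by `needs_refinement` would be passed on to the more expensive pose refinement step, which is one way the reported savings in computational overhead could arise.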

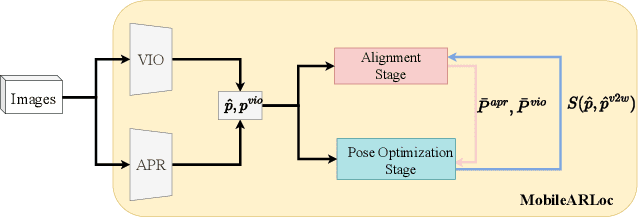

MobileARLoc: On-device Robust Absolute Localisation for Pervasive Markerless Mobile AR

Feb 04, 2024

Abstract:Recent years have seen significant improvement in absolute camera pose estimation, paving the way for pervasive markerless Augmented Reality (AR). However, accurate absolute pose estimation techniques are computation- and storage-heavy, requiring computation offloading. As such, AR systems rely on visual-inertial odometry (VIO) to track the device's relative pose between requests to the server. However, VIO suffers from drift, requiring frequent absolute repositioning. This paper introduces MobileARLoc, a new framework for on-device large-scale markerless mobile AR that combines an absolute pose regressor (APR) with a local VIO tracking system. APRs provide fast on-device pose estimation at the cost of reduced accuracy. To address APR accuracy and reduce VIO drift, MobileARLoc creates a feedback loop where VIO pose estimations refine the APR predictions. The VIO system identifies reliable APR predictions, which are then used to compensate for the VIO drift. We comprehensively evaluate MobileARLoc through dataset simulations. MobileARLoc halves the error compared to the underlying APR and achieves fast (80\,ms) on-device inference.

360Loc: A Dataset and Benchmark for Omnidirectional Visual Localization with Cross-device Queries

Nov 29, 2023

Abstract:Portable 360$^\circ$ cameras are becoming a cheap and efficient tool to establish large visual databases. By capturing omnidirectional views of a scene, these cameras could expedite building environment models that are essential for visual localization. However, such an advantage is often overlooked due to the lack of valuable datasets. This paper introduces a new benchmark dataset, 360Loc, composed of 360$^\circ$ images with ground truth poses for visual localization. We present a practical implementation of 360$^\circ$ mapping combining 360$^\circ$ images with lidar data to generate the ground truth 6DoF poses. 360Loc is the first dataset and benchmark that explores the challenge of cross-device visual positioning, involving 360$^\circ$ reference frames, and query frames from pinhole, ultra-wide FoV fisheye, and 360$^\circ$ cameras. We propose a virtual camera approach to generate lower-FoV query frames from 360$^\circ$ images, which ensures a fair comparison of performance among different query types in visual localization tasks. We also extend this virtual camera approach to feature matching-based and pose regression-based methods to alleviate the performance loss caused by the cross-device domain gap, and evaluate its effectiveness against state-of-the-art baselines. We demonstrate that omnidirectional visual localization is more robust in challenging large-scale scenes with symmetries and repetitive structures. These results provide new insights into 360-camera mapping and omnidirectional visual localization with cross-device queries.

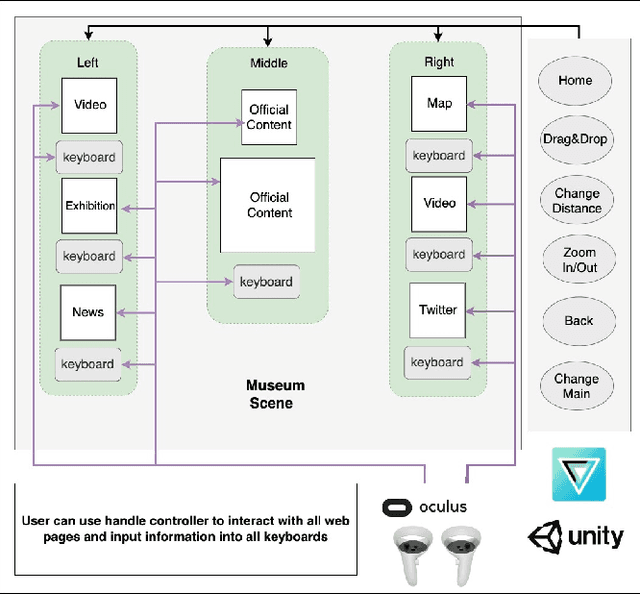

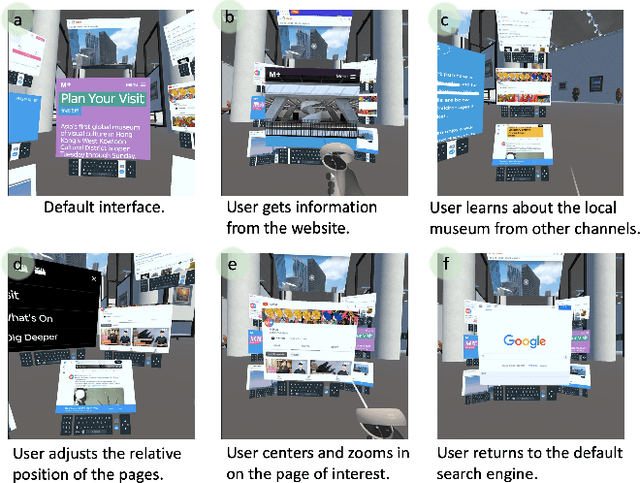

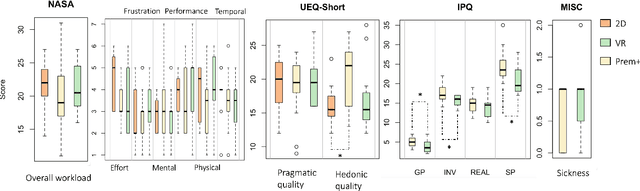

VR PreM+ : An Immersive Pre-learning Branching Visualization System for Museum Tours

Nov 01, 2023

Abstract:We present VR PreM+, an innovative VR system designed to enhance web exploration beyond traditional computer screens. Unlike static 2D displays, VR PreM+ leverages 3D environments to create an immersive pre-learning experience. Keyword-based information retrieval allows users to manage and connect various content sources in a dynamic 3D space, improving communication and data comparison. We conducted preliminary and user studies that demonstrated efficient information retrieval, increased user engagement, and a greater sense of presence. These findings yielded three design guidelines for future VR information systems: display, interaction, and user-centric design. VR PreM+ bridges the gap between traditional web browsing and immersive VR, offering an interactive and comprehensive approach to information acquisition. It holds promise for research, education, and beyond.

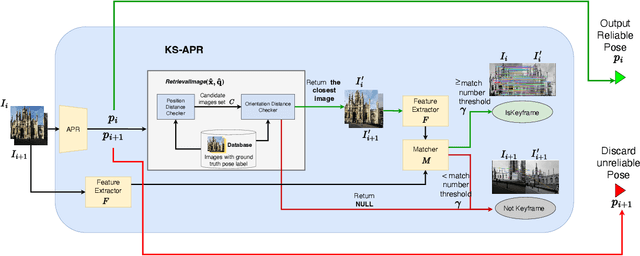

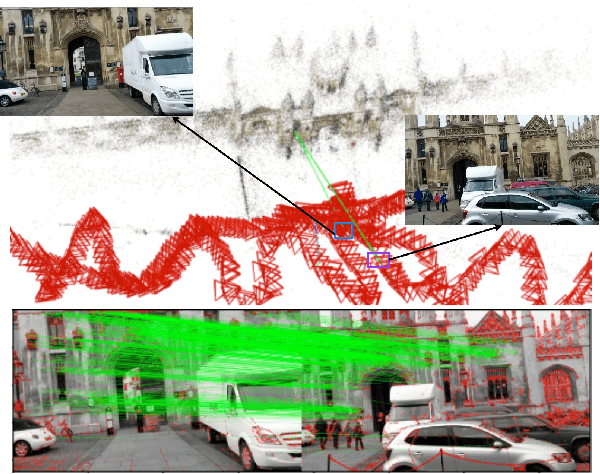

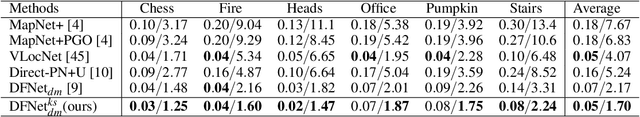

KS-APR: Keyframe Selection for Robust Absolute Pose Regression

Aug 10, 2023

Abstract:Markerless Mobile Augmented Reality (AR) aims to anchor digital content in the physical world without using specific 2D or 3D objects. Absolute Pose Regressors (APR) are end-to-end machine learning solutions that infer the device's pose from a single monocular image. Thanks to their low computation cost, they can be directly executed on the constrained hardware of mobile AR devices. However, APR methods tend to yield significant inaccuracies for input images that are too distant from the training set. This paper introduces KS-APR, a pipeline that assesses the reliability of an estimated pose with minimal overhead by combining the inference results of the APR and the prior images in the training set. Mobile AR systems tend to rely upon visual-inertial odometry to track the relative pose of the device during the experience. As such, KS-APR favours reliability over frequency, discarding unreliable poses. This pipeline can integrate most existing APR methods to improve accuracy by filtering unreliable images with their pose estimates. We implement the pipeline on three types of APR models on indoor and outdoor datasets. The median error on position and orientation is reduced for all models, and the proportion of large errors is minimized across datasets. Our method enables state-of-the-art APRs such as DFNetdm to outperform single-image and sequential APR methods. These results demonstrate the scalability and effectiveness of KS-APR for visual localization tasks that do not require one-shot decisions.

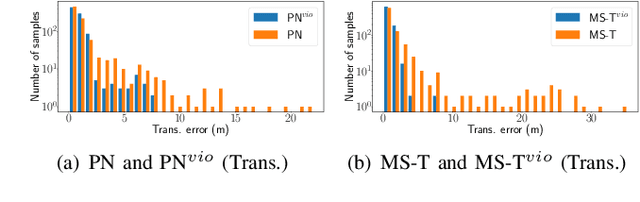

Robust Localization with Visual-Inertial Odometry Constraints for Markerless Mobile AR

Aug 10, 2023

Abstract:Visual Inertial Odometry (VIO) is an essential component of modern Augmented Reality (AR) applications. However, VIO only tracks the relative pose of the device, leading to drift over time. Absolute pose estimation methods infer the device's absolute pose, but their accuracy depends on the input quality. This paper introduces VIO-APR, a new framework for markerless mobile AR that combines an absolute pose regressor (APR) with a local VIO tracking system. VIO-APR uses VIO to assess the reliability of the APR and the APR to identify and compensate for VIO drift. This feedback loop results in more accurate positioning and more stable AR experiences. To evaluate VIO-APR, we created a dataset that combines camera images with ARKit's VIO system output for six indoor and outdoor scenes of various scales. Over this dataset, VIO-APR improves the median accuracy of popular APRs by up to 36\% in position and 29\% in orientation, increases the percentage of frames in the high ($0.25 m, 2^{\circ}$) accuracy level by up to 112\%, and greatly reduces the percentage of frames predicted below the low ($5 m, 10^\circ$) accuracy level. We implement VIO-APR into a mobile AR application using Unity to demonstrate its capabilities. VIO-APR results in noticeably more accurate localization and a more stable overall experience.
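One way to picture how VIO could be used to assess APR reliability is a consistency check on the distance travelled between two frames. The abstract does not specify the actual test, so the sketch below is an assumption: it compares only translation magnitudes (which do not depend on how the VIO and APR coordinate frames are aligned), and the function name and the 0.25 m tolerance are hypothetical.

```python
import math
from typing import Sequence


def apr_is_consistent(apr_prev: Sequence[float],
                      apr_curr: Sequence[float],
                      vio_prev: Sequence[float],
                      vio_curr: Sequence[float],
                      tol_m: float = 0.25) -> bool:
    """Check whether two APR positions agree with the VIO displacement.

    Each argument is a 3D position in metres. APR positions are in the
    world frame and VIO positions in the local tracking frame; only the
    distance travelled between frames is compared, so no frame
    alignment is needed. tol_m is an illustrative tolerance.
    """
    apr_step = math.dist(apr_prev, apr_curr)  # distance per the APR
    vio_step = math.dist(vio_prev, vio_curr)  # distance per the VIO
    return abs(apr_step - vio_step) <= tol_m
```

In such a scheme, APR predictions that pass the check could serve as anchors for correcting accumulated VIO drift, while those that fail would be discarded; a fuller version would also compare relative rotation.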
