Brian Mak

Detecting Neurocognitive Disorders through Analyses of Topic Evolution and Cross-modal Consistency in Visual-Stimulated Narratives

Jan 07, 2025

Abstract:Early detection of neurocognitive disorders (NCDs) is crucial for timely intervention and disease management. Speech analysis offers a non-intrusive and scalable screening method, particularly through narrative tasks in neuropsychological assessment tools. Traditional narrative analysis often focuses on local indicators in microstructure, such as word usage and syntax. While these features provide insights into language production abilities, they often fail to capture global narrative patterns, or macrostructures. Macrostructures include coherence, thematic organization, and logical progressions, reflecting essential cognitive skills potentially critical for recognizing NCDs. Addressing this gap, we propose to investigate specific cognitive and linguistic challenges by analyzing topical shifts, temporal dynamics, and the coherence of narratives over time, aiming to reveal cognitive deficits by identifying narrative impairments and exploring their impact on communication and cognition. The investigation is based on the CU-MARVEL Rabbit Story corpus, which comprises recordings of a story-telling task from 758 older adults. We developed two approaches: the Dynamic Topic Models (DTM)-based temporal analysis to examine the evolution of topics over time, and the Text-Image Temporal Alignment Network (TITAN) to evaluate the coherence between spoken narratives and visual stimuli. The DTM-based approach validated the effectiveness of dynamic topic consistency as a macrostructural metric (F1=0.61, AUC=0.78). The TITAN approach achieved the highest performance (F1=0.72, AUC=0.81), surpassing established microstructural and macrostructural feature sets. Cross-comparison and regression tasks further demonstrated the effectiveness of the proposed dynamic macrostructural modeling approaches for NCD detection.

A Hong Kong Sign Language Corpus Collected from Sign-interpreted TV News

May 02, 2024

Abstract:This paper introduces TVB-HKSL-News, a new Hong Kong sign language (HKSL) dataset collected from a TV news program over a period of 7 months. The dataset is collected to enrich resources for HKSL and support research in large-vocabulary continuous sign language recognition (SLR) and translation (SLT). It consists of 16.07 hours of sign videos of two signers with a vocabulary of 6,515 glosses (for SLR) and 2,850 Chinese characters or 18K Chinese words (for SLT). One signer has 11.66 hours of sign videos and the other has 4.41 hours. One objective in building the dataset is to support the investigation of how well large-vocabulary continuous sign language recognition/translation can be done for a single signer given a (relatively) large amount of his/her training data, which could potentially lead to the development of new modeling methods. Besides, most parts of the data collection pipeline are automated with little human intervention; we believe that our collection method can easily be scaled up to collect more sign language data for SLT in the future, for any sign language for which such sign-interpreted videos are available. We also run a SOTA SLR/SLT model on the dataset and obtain a baseline SLR word error rate of 34.08% and a baseline SLT BLEU-4 score of 23.58 for benchmarking future research on the dataset.

Towards Online Sign Language Recognition and Translation

Jan 10, 2024

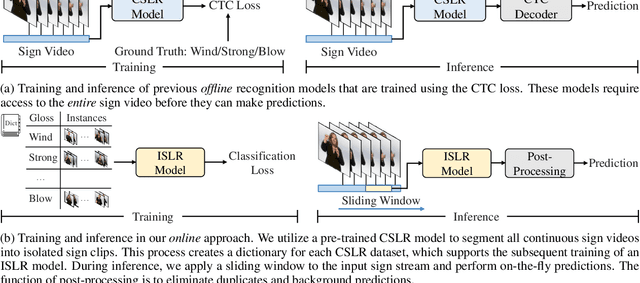

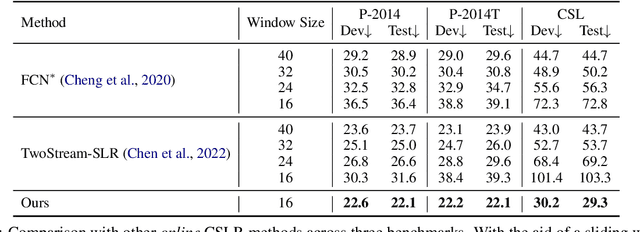

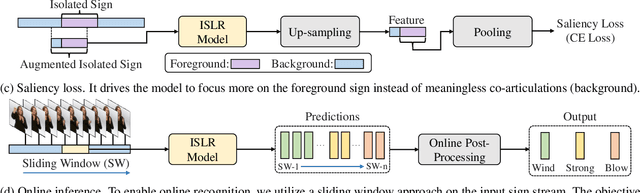

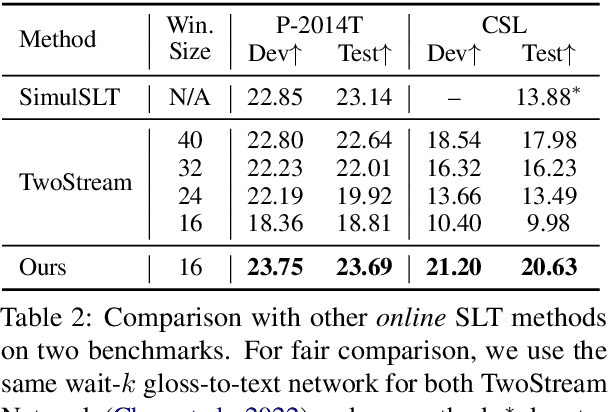

Abstract:The objective of sign language recognition is to bridge the communication gap between the deaf and the hearing. Numerous previous works train their models using the well-established connectionist temporal classification (CTC) loss. During the inference stage, the CTC-based models typically take the entire sign video as input to make predictions. This type of inference scheme is referred to as offline recognition. In contrast, while mature speech recognition systems can efficiently recognize spoken words on the fly, sign language recognition still falls short due to the lack of practical online solutions. In this work, we take the first step towards filling this gap. Our approach comprises three phases: 1) developing a sign language dictionary encompassing all glosses present in a target sign language dataset; 2) training an isolated sign language recognition model on augmented signs using both conventional classification loss and our novel saliency loss; 3) employing a sliding window approach on the input sign sequence and feeding each sign clip to the well-optimized model for online recognition. Furthermore, our online recognition model can be extended to boost the performance of any offline model, and to support online translation by appending a gloss-to-text network onto the recognition model. By integrating our online framework with the previously best-performing offline model, TwoStream-SLR, we achieve new state-of-the-art performance on three benchmarks: Phoenix-2014, Phoenix-2014T, and CSL-Daily. Code and models will be available at https://github.com/FangyunWei/SLRT
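The third phase described above, sliding a window over the incoming frames and classifying each clip, can be illustrated with a minimal sketch. It is not the authors' implementation: the `classify` callable stands in for the trained isolated-sign model, and the `background` label, the window and stride defaults, and the rule of dropping consecutive repeats are all assumptions made for illustration.

```python
from typing import Callable, Iterator, List, Sequence


def sliding_windows(frames: Sequence, window: int, stride: int) -> Iterator[Sequence]:
    """Yield fixed-length clips; trailing frames that do not fill a window are skipped."""
    for start in range(0, len(frames) - window + 1, stride):
        yield frames[start:start + window]


def online_recognize(
    frames: Sequence,
    classify: Callable[[Sequence], str],  # hypothetical stand-in for the isolated-sign model
    window: int = 4,
    stride: int = 2,
    background: str = "<bg>",  # assumed label for clips that contain no sign
) -> List[str]:
    """Classify each clip as it arrives and emit a gloss whenever the prediction changes."""
    glosses: List[str] = []
    previous = background
    for clip in sliding_windows(frames, window, stride):
        label = classify(clip)
        # Assumed post-processing: skip background clips and consecutive repeats.
        if label != background and label != previous:
            glosses.append(label)
        previous = label
    return glosses
```

Because each clip is classified independently, predictions are available as soon as a window is full, rather than only after the whole video has been seen.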

A Simple Baseline for Spoken Language to Sign Language Translation with 3D Avatars

Jan 09, 2024

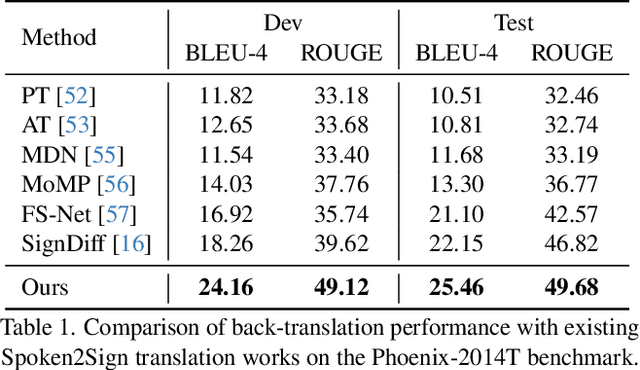

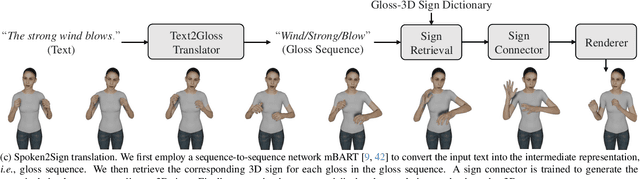

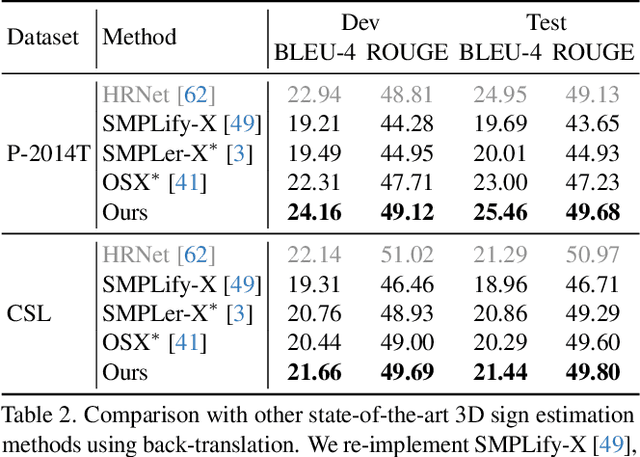

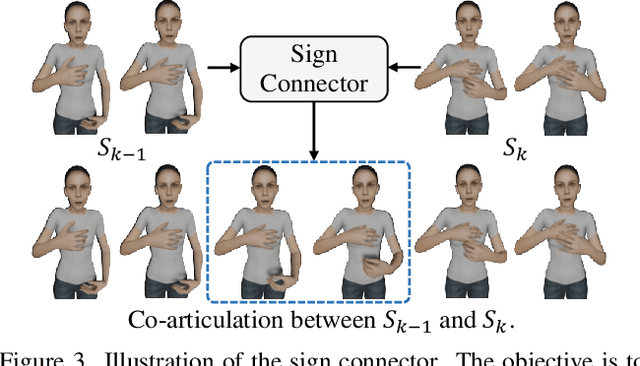

Abstract:The objective of this paper is to develop a functional system for translating spoken languages into sign languages, referred to as Spoken2Sign translation. The Spoken2Sign task is orthogonal and complementary to traditional sign language to spoken language (Sign2Spoken) translation. To enable Spoken2Sign translation, we present a simple baseline consisting of three steps: 1) creating a gloss-video dictionary using existing Sign2Spoken benchmarks; 2) estimating a 3D sign for each sign video in the dictionary; 3) training a Spoken2Sign model, which is composed of a Text2Gloss translator, a sign connector, and a rendering module, with the aid of the yielded gloss-3D sign dictionary. The translation results are then displayed through a sign avatar. As far as we know, we are the first to present the Spoken2Sign task in an output format of 3D signs. In addition to its capability of Spoken2Sign translation, we also demonstrate that two by-products of our approach, 3D keypoint augmentation and multi-view understanding, can assist in keypoint-based sign language understanding. Code and models will be available at https://github.com/FangyunWei/SLRT

Natural Language-Assisted Sign Language Recognition

Mar 21, 2023

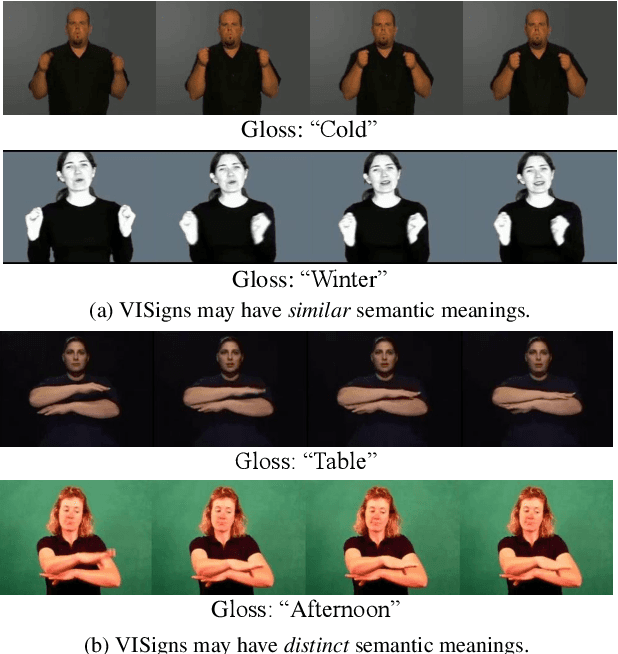

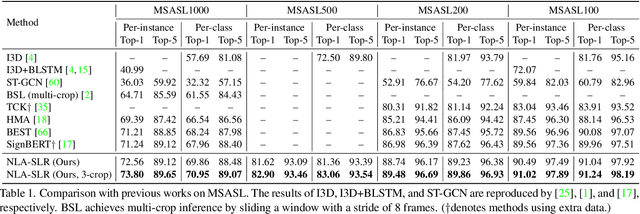

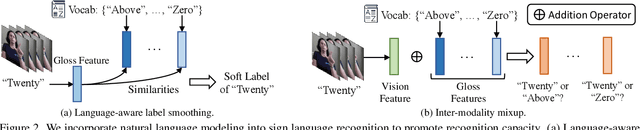

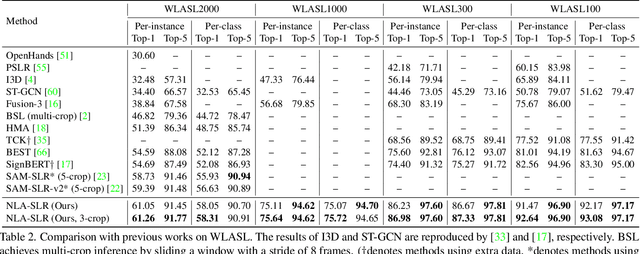

Abstract:Sign languages are visual languages which convey information by signers' handshape, facial expression, body movement, and so forth. Due to the inherent restriction of combinations of these visual ingredients, there exist a significant number of visually indistinguishable signs (VISigns) in sign languages, which limits the recognition capacity of vision neural networks. To mitigate the problem, we propose the Natural Language-Assisted Sign Language Recognition (NLA-SLR) framework, which exploits semantic information contained in glosses (sign labels). First, for VISigns with similar semantic meanings, we propose language-aware label smoothing by generating soft labels for each training sign whose smoothing weights are computed from the normalized semantic similarities among the glosses to ease training. Second, for VISigns with distinct semantic meanings, we present an inter-modality mixup technique which blends vision and gloss features to further maximize the separability of different signs under the supervision of blended labels. Besides, we also introduce a novel backbone, video-keypoint network, which not only models both RGB videos and human body keypoints but also derives knowledge from sign videos of different temporal receptive fields. Empirically, our method achieves state-of-the-art performance on three widely-adopted benchmarks: MSASL, WLASL, and NMFs-CSL. Codes are available at https://github.com/FangyunWei/SLRT.
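The idea of language-aware label smoothing can be sketched as follows. This is a hedged illustration, not the paper's exact formulation: the function name, the `epsilon` and `temperature` parameters, and the choice of a softmax over the non-target glosses as the normalization are assumptions, and the similarity scores are assumed to come from some gloss embedding.

```python
import math
from typing import List


def language_aware_soft_label(
    similarities: List[float],  # assumed: similarity of the true gloss to every gloss
    target: int,
    epsilon: float = 0.2,       # total probability mass moved away from the true gloss
    temperature: float = 0.5,   # assumed softmax temperature
) -> List[float]:
    """Build a soft label whose smoothing mass follows semantic similarity."""
    others = [i for i in range(len(similarities)) if i != target]
    # Softmax over the non-target glosses only (an illustrative normalization).
    exps = {i: math.exp(similarities[i] / temperature) for i in others}
    total = sum(exps.values())
    label = [0.0] * len(similarities)
    label[target] = 1.0 - epsilon
    for i in others:
        label[i] = epsilon * exps[i] / total
    return label
```

Compared with uniform label smoothing, glosses that are semantically close to the true gloss receive more of the smoothing mass, so confusing two visually similar signs with similar meanings is penalized less.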

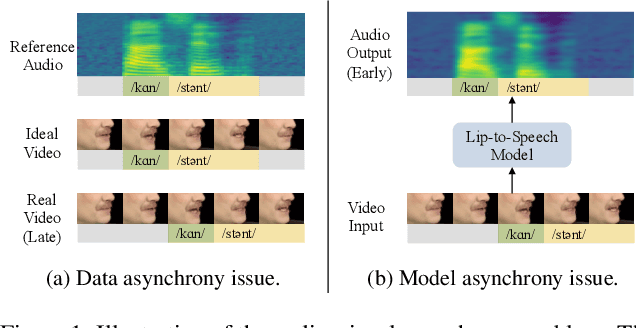

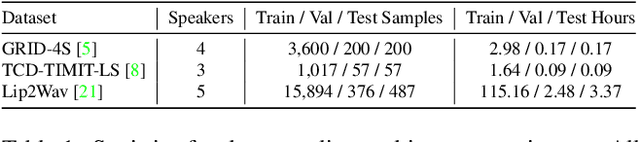

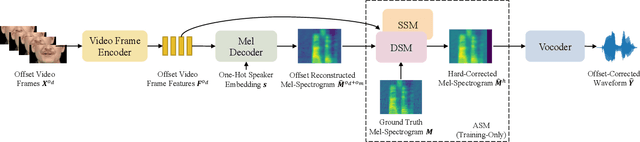

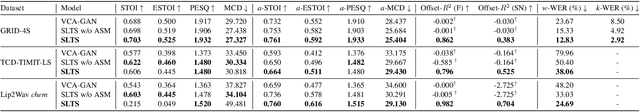

On the Audio-visual Synchronization for Lip-to-Speech Synthesis

Mar 01, 2023

Abstract:Most lip-to-speech (LTS) synthesis models are trained and evaluated under the assumption that the audio-video pairs in the dataset are perfectly synchronized. In this work, we show that the commonly used audio-visual datasets, such as GRID, TCD-TIMIT, and Lip2Wav, can have data asynchrony issues. Training lip-to-speech with such datasets may further cause the model asynchrony issue -- that is, the generated speech and the input video are out of sync. To address these asynchrony issues, we propose a synchronized lip-to-speech (SLTS) model with an automatic synchronization mechanism (ASM) to correct data asynchrony and penalize model asynchrony. We further demonstrate the limitation of the commonly adopted evaluation metrics for LTS with asynchronous test data and introduce an audio alignment frontend before the metrics sensitive to time alignment for better evaluation. We compare our method with state-of-the-art approaches on conventional and time-aligned metrics to show the benefits of synchronization training.

Improving Continuous Sign Language Recognition with Consistency Constraints and Signer Removal

Dec 26, 2022

Abstract:Most deep-learning-based continuous sign language recognition (CSLR) models share a similar backbone consisting of a visual module, a sequential module, and an alignment module. However, due to limited training samples, a connectionist temporal classification loss may not train such CSLR backbones sufficiently. In this work, we propose three auxiliary tasks to enhance the CSLR backbones. The first task enhances the visual module, which is sensitive to the insufficient training problem, from the perspective of consistency. Specifically, since the information of sign languages is mainly included in signers' facial expressions and hand movements, a keypoint-guided spatial attention module is developed to force the visual module to focus on informative regions, i.e., spatial attention consistency. Second, noticing that both the output features of the visual and sequential modules represent the same sentence, to better exploit the backbone's power, a sentence embedding consistency constraint is imposed between the visual and sequential modules to enhance the representation power of both features. We name the CSLR model trained with the above auxiliary tasks as consistency-enhanced CSLR, which performs well on signer-dependent datasets in which all signers appear during both training and testing. To make it more robust for the signer-independent setting, a signer removal module based on feature disentanglement is further proposed to remove signer information from the backbone. Extensive ablation studies are conducted to validate the effectiveness of these auxiliary tasks. More remarkably, with a transformer-based backbone, our model achieves state-of-the-art or competitive performance on five benchmarks: PHOENIX-2014, PHOENIX-2014-T, PHOENIX-2014-SI, CSL, and CSL-Daily.

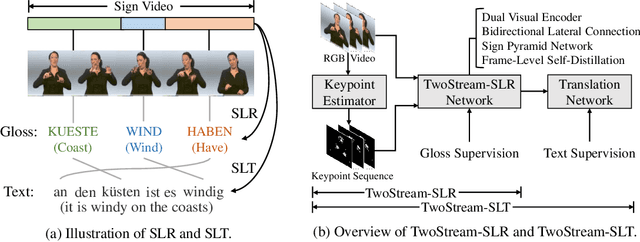

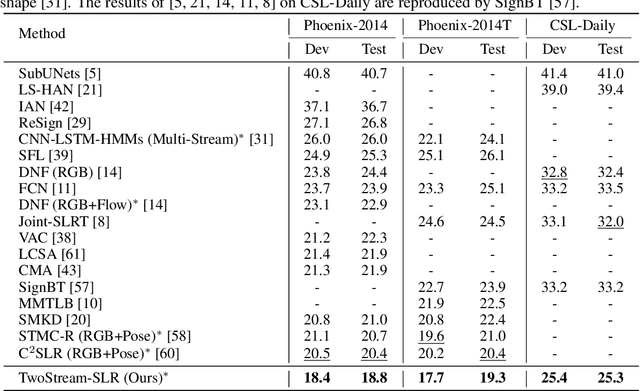

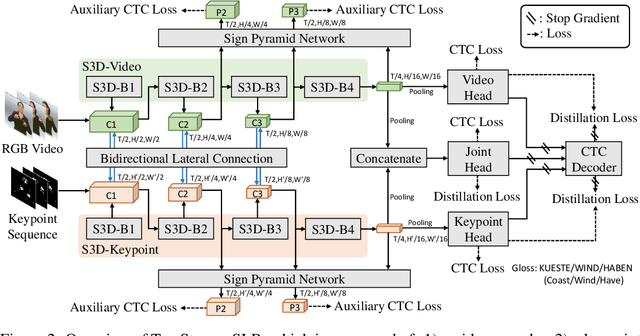

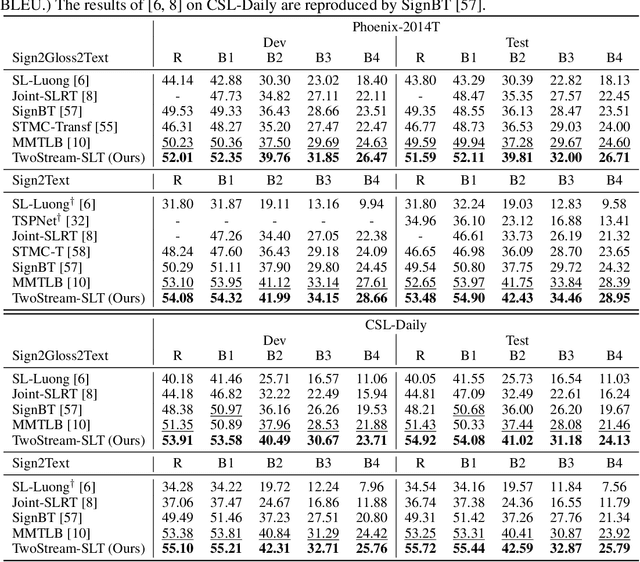

Two-Stream Network for Sign Language Recognition and Translation

Nov 02, 2022

Abstract:Sign languages are visual languages using manual articulations and non-manual elements to convey information. For sign language recognition and translation, the majority of existing approaches directly encode RGB videos into hidden representations. RGB videos, however, are raw signals with substantial visual redundancy, leading the encoder to overlook the key information for sign language understanding. To mitigate this problem and better incorporate domain knowledge, such as handshape and body movement, we introduce a dual visual encoder containing two separate streams to model both the raw videos and the keypoint sequences generated by an off-the-shelf keypoint estimator. To make the two streams interact with each other, we explore a variety of techniques, including bidirectional lateral connection, sign pyramid network with auxiliary supervision, and frame-level self-distillation. The resulting model is called TwoStream-SLR, which is competent for sign language recognition (SLR). TwoStream-SLR is extended to a sign language translation (SLT) model, TwoStream-SLT, by simply attaching an extra translation network. Experimentally, our TwoStream-SLR and TwoStream-SLT achieve state-of-the-art performance on SLR and SLT tasks across a series of datasets including Phoenix-2014, Phoenix-2014T, and CSL-Daily.

Transformer based Multilingual document Embedding model

Aug 20, 2020

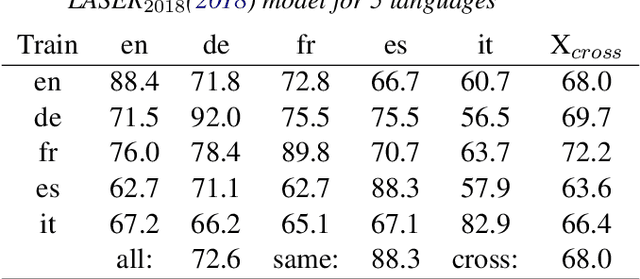

Abstract:LASER, one of the current state-of-the-art multilingual document embedding models, is based on the bidirectional LSTM neural machine translation model. This paper presents a transformer-based sentence/document embedding model, T-LASER, which makes three significant improvements. Firstly, the BiLSTM layers are replaced by attention-based transformer layers, which are more capable of learning sequential patterns in longer texts. Secondly, due to the absence of recurrence, T-LASER enables faster parallel computations in the encoder to generate the text embedding. Thirdly, we augment the NMT translation loss function with an additional novel distance constraint loss. This distance constraint loss further brings the embeddings of parallel sentences closer together in the vector space; we call the T-LASER model trained with the distance constraint cT-LASER. Our cT-LASER model significantly outperforms both BiLSTM-based LASER and the simpler transformer-based T-LASER.
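The third improvement, adding a distance constraint to the translation loss, can be sketched in a few lines. The abstract does not specify the distance measure or the weighting, so the mean squared Euclidean distance and the `weight` parameter below are assumptions, and both function names are hypothetical.

```python
from typing import Sequence


def distance_constraint(src_embedding: Sequence[float], tgt_embedding: Sequence[float]) -> float:
    """Mean squared distance between the embeddings of a parallel sentence pair (assumed metric)."""
    squared = [(a - b) ** 2 for a, b in zip(src_embedding, tgt_embedding)]
    return sum(squared) / len(squared)


def combined_loss(
    translation_loss: float,
    src_embedding: Sequence[float],
    tgt_embedding: Sequence[float],
    weight: float = 1.0,  # assumed trade-off between translation and distance terms
) -> float:
    """Translation loss plus a penalty that pulls parallel sentences together."""
    return translation_loss + weight * distance_constraint(src_embedding, tgt_embedding)
```

The penalty is zero when a sentence and its translation map to the same vector, which is the property a language-independent embedding is meant to have.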

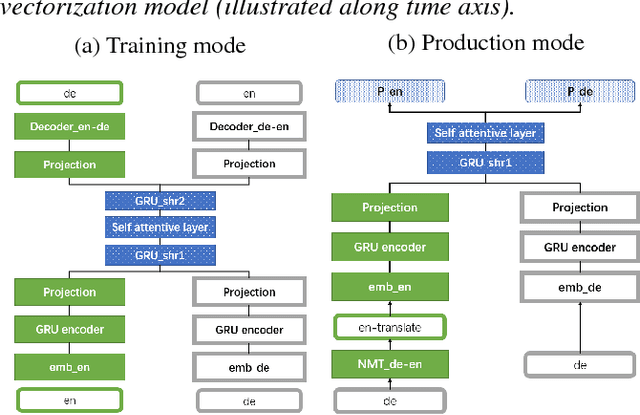

Neural machine translation framework based cross-lingual document vector with distance constraint training

Sep 04, 2018

Abstract:A universal cross-lingual representation of documents is very important for many natural language processing tasks. In this paper, we present a document vectorization method which can effectively create document vectors via a self-attention mechanism using a neural machine translation (NMT) framework. The model used by our method can be trained with parallel corpora that are unrelated to the task at hand. During testing, our method takes a monolingual document and converts it into a "Neural machine Translation framework based crosslingual Document Vector with distance constraint training" (cNTDV). cNTDV is a follow-up study to our previous research on the neural machine translation framework based document vector. The cNTDV can produce the document vector quickly from a forward pass of the encoder. Moreover, it is trained with a distance constraint, so that the document vectors obtained from different language pairs are always consistent with each other. In a cross-lingual document classification task, our cNTDV embeddings surpass the published state-of-the-art performance in the English-to-German classification test, and, to the best of our knowledge, they also achieve the second-best performance in the German-to-English classification test. Compared with our previous research, it does not need a translator in the testing process, which makes the model faster and more convenient.
