Boshu Lei

ActiveGrasp: Information-Guided Active Grasping with Calibrated Energy-based Model

Nov 16, 2025

Abstract:Grasping in a densely cluttered environment is a challenging task for robots. Previous methods tried to solve this problem by actively gathering multiple views before grasp pose generation. However, they either overlooked the importance of the grasp distribution for information gain estimation or relied on the projection of the grasp distribution, which ignores the structure of grasp poses on the SE(3) manifold. To tackle these challenges, we propose a calibrated energy-based model for grasp pose generation and an active view selection method that estimates information gain from grasp distribution. Our energy-based model captures the multi-modality nature of grasp distribution on the SE(3) manifold. The energy level is calibrated to the success rate of grasps so that the predicted distribution aligns with the real distribution. The next best view is selected by estimating the information gain for grasp from the calibrated distribution conditioned on the reconstructed environment, which could efficiently drive the robot to explore affordable parts of the target object. Experiments on simulated environments and real robot setups demonstrate that our model could successfully grasp objects in a cluttered environment with limited view budgets compared to previous state-of-the-art models. Our simulated environment can serve as a reproducible platform for future research on active grasping. The source code of our paper will be made public when the paper is released to the public.

TensorTouch: Calibration of Tactile Sensors for High Resolution Stress Tensor and Deformation for Dexterous Manipulation

Jun 09, 2025

Abstract:Advanced dexterous manipulation involving multiple simultaneous contacts across different surfaces, like pinching coins from the ground or manipulating intertwined objects, remains challenging for robotic systems. Such tasks exceed the capabilities of vision and proprioception alone, requiring high-resolution tactile sensing with calibrated physical metrics. Raw optical tactile sensor images, while information-rich, lack interpretability and cross-sensor transferability, limiting their real-world utility. TensorTouch addresses this challenge by integrating finite element analysis with deep learning to extract comprehensive contact information from optical tactile sensors, including stress tensors, deformation fields, and force distributions at pixel-level resolution. The TensorTouch framework achieves sub-millimeter position accuracy and precise force estimation while supporting large sensor deformations crucial for manipulating soft objects. Experimental validation demonstrates 90% success in selectively grasping one of two strings based on detected motion, enabling new contact-rich manipulation capabilities previously inaccessible to robotic systems.

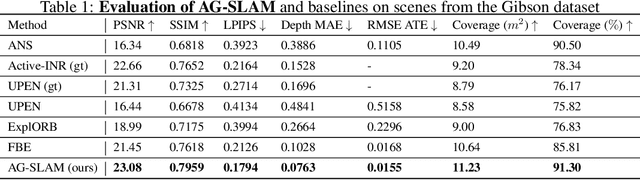

AG-SLAM: Active Gaussian Splatting SLAM

Oct 22, 2024

Abstract:We present AG-SLAM, the first active SLAM system utilizing 3D Gaussian Splatting (3DGS) for online scene reconstruction. In recent years, radiance field scene representations, including 3DGS, have been widely used in SLAM and exploration, but actively planning trajectories for robotic exploration remains unexplored. In particular, many exploration methods assume precise localization and thus do not mitigate the significant risk of constructing a trajectory that is difficult for a SLAM system to operate on. This can cause camera tracking failure and lead to failures in real-world robotic applications. Our method leverages Fisher Information to balance the dual objectives of maximizing the information gain for the environment while minimizing the cost of localization errors. Experiments conducted on the Gibson and Habitat-Matterport 3D datasets demonstrate state-of-the-art results of the proposed method.

Next Best Sense: Guiding Vision and Touch with FisherRF for 3D Gaussian Splatting

Oct 07, 2024

Abstract:We propose a framework for active next best view and touch selection for robotic manipulators using 3D Gaussian Splatting (3DGS). 3DGS is emerging as a useful explicit 3D scene representation for robotics, as it can represent scenes in a manner that is both photorealistic and geometrically accurate. However, in real-world, online robotic scenes where the number of views is limited given efficiency requirements, random view selection for 3DGS becomes impractical as views are often overlapping and redundant. We address this issue by proposing an end-to-end online training and active view selection pipeline, which enhances the performance of 3DGS in few-view robotics settings. We first elevate the performance of few-shot 3DGS with a novel semantic depth alignment method using Segment Anything Model 2 (SAM2) that we supplement with Pearson depth and surface normal loss to improve color and depth reconstruction of real-world scenes. We then extend FisherRF, a next-best-view selection method for 3DGS, to select views and touch poses based on depth uncertainty. We perform online view selection on a real robot system during live 3DGS training. We motivate our improvements to few-shot GS scenes, and extend depth-based FisherRF to them, where we demonstrate both qualitative and quantitative improvements on challenging robot scenes. For more information, please see our project page at https://armlabstanford.github.io/next-best-sense.

Beyond Uncertainty: Risk-Aware Active View Acquisition for Safe Robot Navigation and 3D Scene Understanding with FisherRF

Mar 18, 2024

Abstract:This work proposes a novel approach to bolster both the robot's risk assessment and safety measures while deepening its understanding of 3D scenes, which is achieved by leveraging Radiance Field (RF) models and 3D Gaussian Splatting. To further enhance these capabilities, we incorporate additional sampled views from the environment with the RF model. One of our key contributions is the introduction of Risk-aware Environment Masking (RaEM), which prioritizes crucial information by selecting the next-best-view that maximizes the expected information gain. This targeted approach aims to minimize uncertainties surrounding the robot's path and enhance the safety of its navigation. Our method offers a dual benefit: improved robot safety and increased efficiency in risk-aware 3D scene reconstruction and understanding. Extensive experiments in real-world scenarios demonstrate the effectiveness of our proposed approach, highlighting its potential to establish a robust and safety-focused framework for active robot exploration and 3D scene understanding.

FisherRF: Active View Selection and Uncertainty Quantification for Radiance Fields using Fisher Information

Nov 29, 2023

Abstract:This study addresses the challenging problem of active view selection and uncertainty quantification within the domain of Radiance Fields. Neural Radiance Fields (NeRF) have greatly advanced image rendering and reconstruction, but the limited availability of 2D images poses uncertainties stemming from occlusions, depth ambiguities, and imaging errors. Efficiently selecting informative views becomes crucial, and quantifying NeRF model uncertainty presents intricate challenges. Existing approaches either depend on model architecture or are based on assumptions regarding density distributions that are not generally applicable. By leveraging Fisher Information, we efficiently quantify observed information within Radiance Fields without ground truth data. This can be used for the next best view selection and pixel-wise uncertainty quantification. Our method overcomes existing limitations on model architecture and effectiveness, achieving state-of-the-art results in both view selection and uncertainty quantification, demonstrating its potential to advance the field of Radiance Fields. Our method with the 3D Gaussian Splatting backend could perform view selections at 70 fps.
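
As a rough illustration of how Fisher Information can rank candidate views without ground truth images, the sketch below scores each candidate by the trace of its own information matrix weighted by the inverse of the information already collected. It assumes a diagonal approximation and toy Jacobians of rendered pixels with respect to model parameters. The function names (`diag_fisher`, `info_gain`, `next_best_view`) and the regularizer `reg` are hypothetical, chosen for this example only, and are not taken from the paper's code.

```python
def diag_fisher(jacobians, reg=1e-6):
    """Diagonal of sum_i J_i^T J_i over observed views, plus a small regularizer.

    Each J is a list of rows (one per rendered pixel), each row holding the
    derivative of that pixel with respect to every model parameter.
    """
    n_params = len(jacobians[0][0])
    diag = [reg] * n_params
    for J in jacobians:
        for row in J:
            for k, g in enumerate(row):
                diag[k] += g * g
    return diag


def info_gain(candidate_J, train_diag):
    """tr(H_candidate * H_train^{-1}) under the diagonal approximation."""
    return sum(
        g * g / train_diag[k]
        for row in candidate_J
        for k, g in enumerate(row)
    )


def next_best_view(candidates, train_jacobians, reg=1e-6):
    """Return the index of the highest-scoring candidate and all scores."""
    train_diag = diag_fisher(train_jacobians, reg)
    scores = [info_gain(J, train_diag) for J in candidates]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


# Toy setup (assumed): two parameters, one observed view that only
# constrains parameter 0. Candidate 0 is redundant with it; candidate 1
# looks at the so-far unobserved parameter 1.
train = [[[1.0, 0.0]]]
candidates = [[[1.0, 0.0]], [[0.0, 1.0]]]
best, scores = next_best_view(candidates, train, reg=1e-2)
print(best, scores)
```

Only Jacobians enter the score, never target pixel values, which is why no ground truth is needed at the candidate view. The diagonal approximation is an assumption made here to keep the example short.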

Multi-Risk-RRT: An Efficient Motion Planning Algorithm for Robotic Autonomous Luggage Trolley Collection at Airports

Sep 20, 2023

Abstract:Robots have become increasingly prevalent in dynamic and crowded environments such as airports and shopping malls. In these scenarios, the critical challenges for robot navigation are reliability and timely arrival at predetermined destinations. While existing risk-based motion planning algorithms effectively reduce collision risks with static and dynamic obstacles, there is still a need for significant performance improvements. Specifically, the dynamic environments demand more rapid responses and robust planning. To address this gap, we introduce a novel risk-based multi-directional sampling algorithm, Multi-directional Risk-based Rapidly-exploring Random Tree (Multi-Risk-RRT). Unlike traditional algorithms that solely rely on a rooted tree or double trees for state space exploration, our approach incorporates multiple sub-trees. Each sub-tree independently explores its surrounding environment. At the same time, the primary rooted tree collects the heuristic information from these sub-trees, facilitating rapid progress toward the goal state. Our evaluations, including simulation and real-world environmental studies, demonstrate that Multi-Risk-RRT outperforms existing unidirectional and bi-directional risk-based algorithms in planning efficiency and robustness.

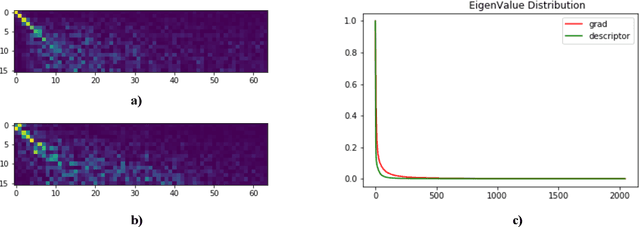

SuperGF: Unifying Local and Global Features for Visual Localization

Dec 23, 2022

Abstract:Advanced visual localization techniques encompass image retrieval challenges and 6 Degree-of-Freedom (DoF) camera pose estimation, such as hierarchical localization. Thus, they must extract global and local features from input images. Previous methods have achieved this through resource-intensive or accuracy-reducing means, such as combinatorial pipelines or multi-task distillation. In this study, we present a novel method called SuperGF, which effectively unifies local and global features for visual localization, leading to a better trade-off between localization accuracy and computational efficiency. Specifically, SuperGF is a transformer-based aggregation model that operates directly on image-matching-specific local features and generates global features for retrieval. We conduct experimental evaluations of our method in terms of both accuracy and efficiency, demonstrating its advantages over other methods. We also provide implementations of SuperGF using various types of local features, including dense and sparse learning-based or hand-crafted descriptors.

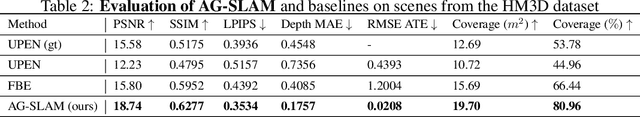

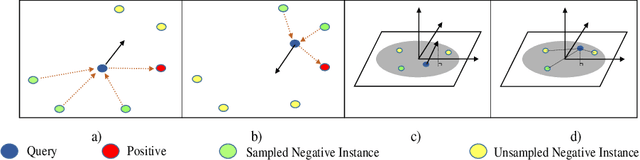

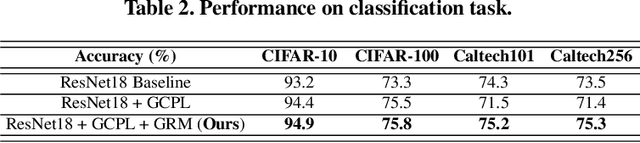

GRM: Gradient Rectification Module for Visual Place Retrieval

Apr 23, 2022

Abstract:Visual place retrieval aims to search the database for images that depict places similar to the query image. However, global descriptors encoded by the network usually fall into a low-dimensional principal space, which is harmful to retrieval performance. We first analyze the cause of this phenomenon, pointing out that it is due to a degraded distribution of the gradients of descriptors. Then, a new module called Gradient Rectification Module (GRM) is proposed to alleviate this issue. It can be appended after the final pooling layer. This module can rectify the gradients to the complement space of the principal space. Therefore, the network is encouraged to generate descriptors more uniformly in the whole space. Finally, we conduct experiments on multiple datasets and generalize our method to a classification task under a prototype learning framework.
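
The phrase "rectify the gradients to the complement space of the principal space" can be read as an orthogonal projection. The sketch below shows only that linear-algebra step: given an orthonormal basis of a principal subspace, it removes the gradient components lying in it. How GRM estimates the principal space and where it applies the rectification are not stated in the abstract; `rectify_gradient` and `principal_basis` are hypothetical names for this illustration.

```python
def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def rectify_gradient(grad, principal_basis):
    """Project grad onto the orthogonal complement of span(principal_basis).

    principal_basis is assumed to be a list of orthonormal vectors.
    """
    out = list(grad)
    for u in principal_basis:
        coeff = dot(grad, u)  # component of grad along principal direction u
        out = [o - coeff * ui for o, ui in zip(out, u)]
    return out


# Toy example (assumed): a 3-D descriptor gradient and a one-dimensional
# principal space along the first axis.
grad = [3.0, 4.0, 5.0]
principal_basis = [[1.0, 0.0, 0.0]]
rectified = rectify_gradient(grad, principal_basis)
print(rectified)
```

After the projection the gradient has no component along any principal direction, so updates driven by it push descriptors toward the remaining dimensions.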
