Bo Cui

ERNIE 5.0 Technical Report

Feb 04, 2026

Abstract:In this report, we introduce ERNIE 5.0, a natively autoregressive foundation model designed for unified multimodal understanding and generation across text, image, video, and audio. All modalities are trained from scratch under a unified next-group-of-tokens prediction objective, based on an ultra-sparse mixture-of-experts (MoE) architecture with modality-agnostic expert routing. To address practical challenges in large-scale deployment under diverse resource constraints, ERNIE 5.0 adopts a novel elastic training paradigm. Within a single pre-training run, the model learns a family of sub-models with varying depths, expert capacities, and routing sparsity, enabling flexible trade-offs among performance, model size, and inference latency in memory- or time-constrained scenarios. Moreover, we systematically address the challenges of scaling reinforcement learning to unified foundation models, thereby guaranteeing efficient and stable post-training under ultra-sparse MoE architectures and diverse multimodal settings. Extensive experiments demonstrate that ERNIE 5.0 achieves strong and balanced performance across multiple modalities. To the best of our knowledge, among publicly disclosed models, ERNIE 5.0 represents the first production-scale realization of a trillion-parameter unified autoregressive model that supports both multimodal understanding and generation. To facilitate further research, we present detailed visualizations of modality-agnostic expert routing in the unified model, alongside comprehensive empirical analysis of elastic training, aiming to offer profound insights to the community.

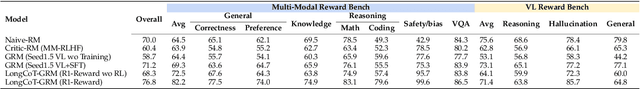

BaseReward: A Strong Baseline for Multimodal Reward Model

Sep 19, 2025

Abstract:The rapid advancement of Multimodal Large Language Models (MLLMs) has made aligning them with human preferences a critical challenge. Reward Models (RMs) are a core technology for achieving this goal, but a systematic guide for building state-of-the-art Multimodal Reward Models (MRMs) is currently lacking in both academia and industry. Through exhaustive experimental analysis, this paper aims to provide a clear "recipe" for constructing high-performance MRMs. We systematically investigate every crucial component in the MRM development pipeline, including reward modeling paradigms (e.g., Naive-RM, Critic-based RM, and Generative RM), reward head architecture, training strategies, data curation (covering over ten multimodal and text-only preference datasets), backbone model and model scale, and ensemble methods. Based on these experimental insights, we introduce BaseReward, a powerful and efficient baseline for multimodal reward modeling. BaseReward adopts a simple yet effective architecture, built upon a Qwen2.5-VL backbone, featuring an optimized two-layer reward head, and is trained on a carefully curated mixture of high-quality multimodal and text-only preference data. Our results show that BaseReward establishes a new SOTA on major benchmarks such as MM-RLHF-Reward Bench, VL-Reward Bench, and Multimodal Reward Bench, outperforming previous models. Furthermore, to validate its practical utility beyond static benchmarks, we integrate BaseReward into a real-world reinforcement learning pipeline, successfully enhancing an MLLM's performance across various perception, reasoning, and conversational tasks. This work not only delivers a top-tier MRM but, more importantly, provides the community with a clear, empirically-backed guide for developing robust reward models for the next generation of MLLMs.

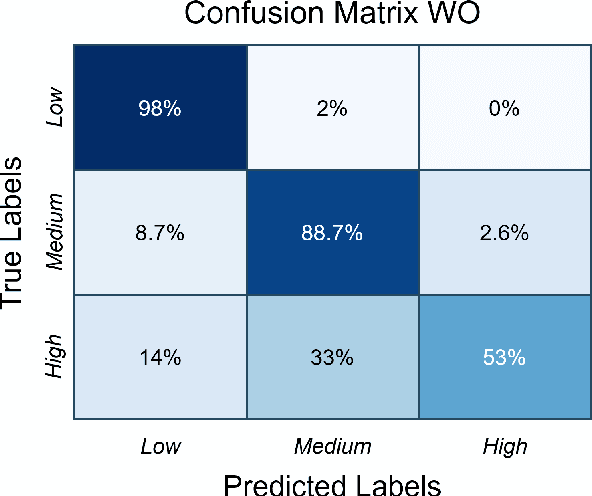

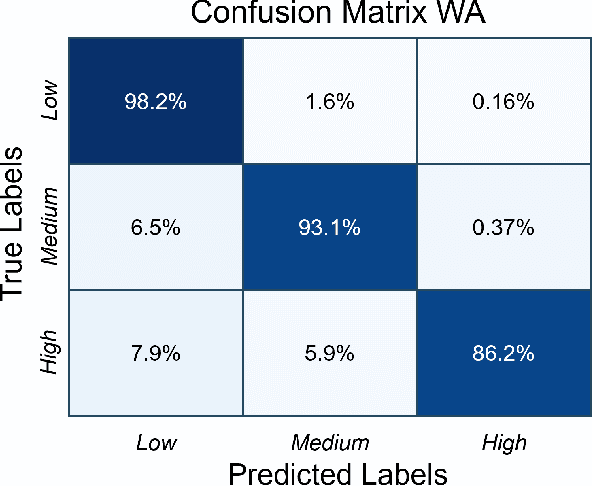

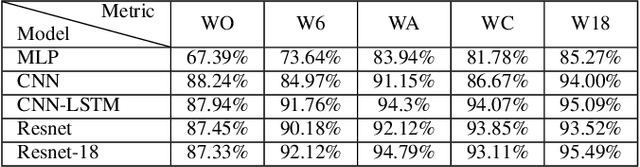

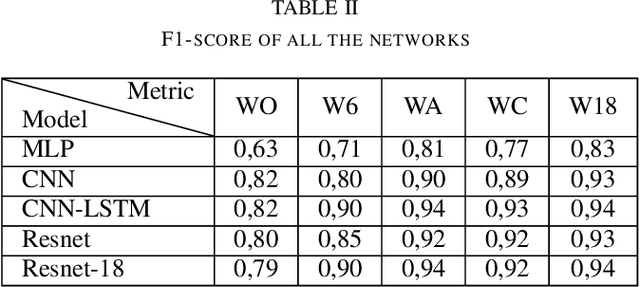

Evaluating Multi-Sensor Placement and Neural Network Architectures for Physical Activity Level Classification

Feb 20, 2025

Abstract:Accurate physical activity level (PAL) classification could be beneficial for osteoarthritis (OA) management. This study examines the impact of sensor placement and deep learning models on PAL classification using the Metabolic Equivalent of Task values. The results show that the addition of an ankle sensor (WA) significantly improves the classification of intensity activities compared to the wrist-only configuration (53% to 86.2%). The CNN-LSTM model achieves the highest accuracy (95.09%). Statistical analysis confirms that multi-sensor setups outperform single-sensor configurations (p < 0.05). The WA configuration offers a balance between usability and accuracy, making it a cost-effective solution for PAL monitoring, particularly in OA management.
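
To illustrate how Metabolic Equivalent of Task (MET) values can be turned into activity-level labels, here is a minimal sketch. The abstract does not state which cut-offs the study used, so the thresholds below are an assumption based on the commonly cited convention (sedentary up to 1.5 METs, light below 3, moderate below 6, vigorous at 6 and above). The function name `activity_level` is hypothetical.

```python
def activity_level(met: float) -> str:
    """Map a MET value to a coarse activity-level label.

    Thresholds are an assumed, commonly cited convention,
    not necessarily the ones used in the study.
    """
    if met < 0:
        raise ValueError("MET values cannot be negative")
    if met <= 1.5:
        return "sedentary"
    if met < 3.0:
        return "light"
    if met < 6.0:
        return "moderate"
    return "vigorous"


# Example: label a short sequence of hypothetical MET estimates.
labels = [activity_level(m) for m in (1.0, 2.5, 4.0, 8.0)]
```

Labels of this kind would serve as the classification targets that a sensor-based model is trained to predict.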

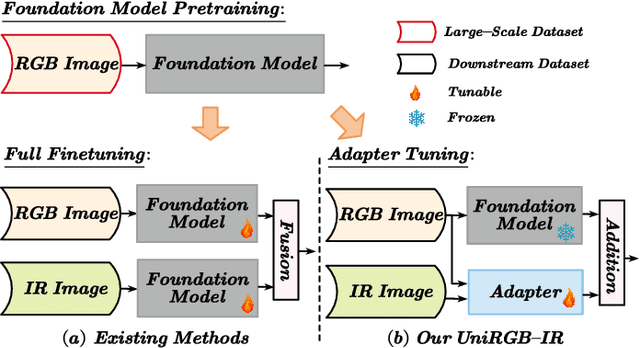

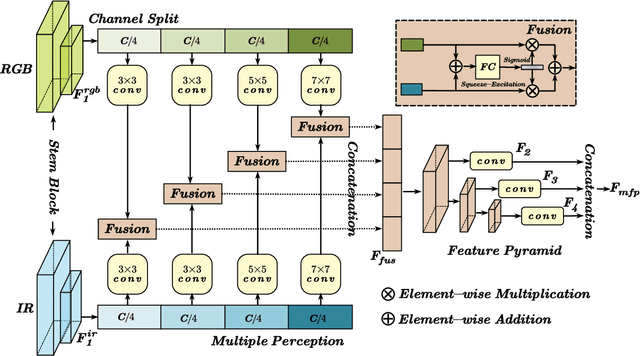

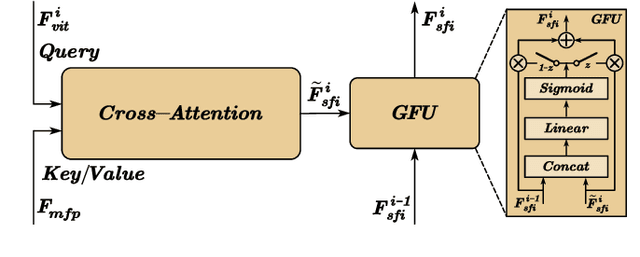

UniRGB-IR: A Unified Framework for Visible-Infrared Downstream Tasks via Adapter Tuning

Apr 26, 2024

Abstract:Semantic analysis on visible (RGB) and infrared (IR) images has gained attention for its ability to be more accurate and robust under low-illumination and complex weather conditions. Due to the lack of pre-trained foundation models on large-scale infrared image datasets, existing methods prefer to design task-specific frameworks and directly fine-tune them with pre-trained foundation models on their RGB-IR semantic relevance datasets, which results in poor scalability and limited generalization. In this work, we propose a scalable and efficient framework called UniRGB-IR to unify RGB-IR downstream tasks, in which a novel adapter is developed to efficiently introduce richer RGB-IR features into the pre-trained RGB-based foundation model. Specifically, our framework consists of a vision transformer (ViT) foundation model, a Multi-modal Feature Pool (MFP) module and a Supplementary Feature Injector (SFI) module. The MFP and SFI modules cooperate with each other as an adapter to effectively complement the ViT features with the contextual multi-scale features. During training, we freeze the entire foundation model to inherit prior knowledge and only optimize the MFP and SFI modules. Furthermore, to verify the effectiveness of our framework, we utilize ViT-Base as the pre-trained foundation model to perform extensive experiments. Experimental results on various RGB-IR downstream tasks demonstrate that our method can achieve state-of-the-art performance. The source code and results are available at https://github.com/PoTsui99/UniRGB-IR.git.

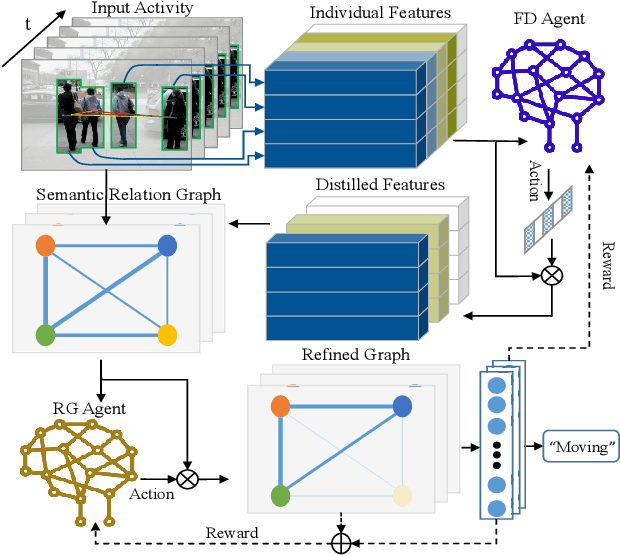

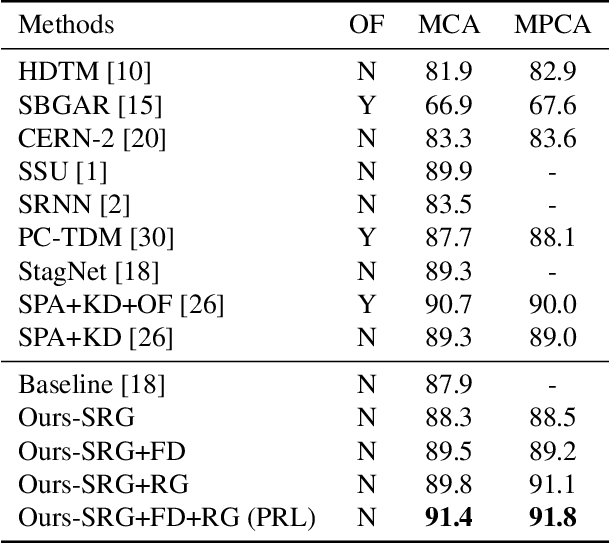

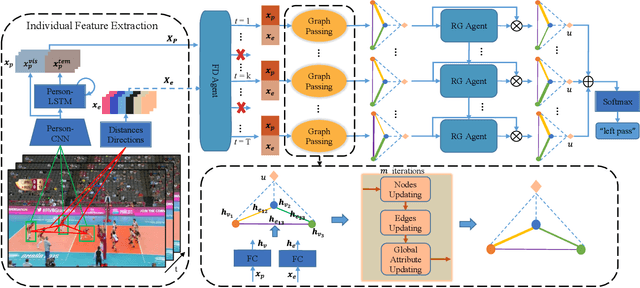

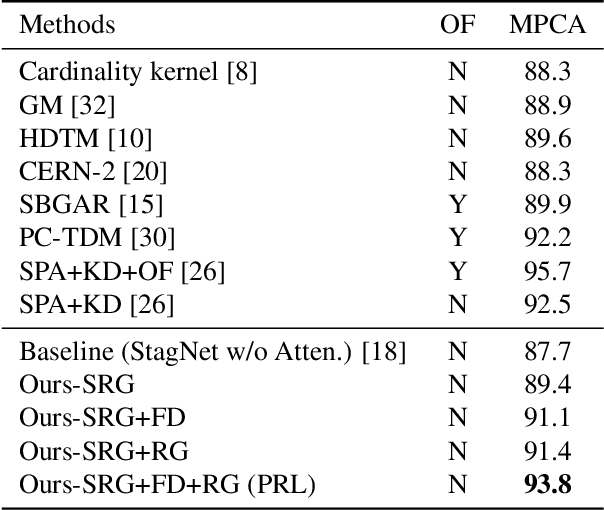

Progressive Relation Learning for Group Activity Recognition

Aug 08, 2019

Abstract:Group activities usually involve spatio-temporal dynamics among many interactive individuals, while only a few participants at several key frames essentially define the activity. Therefore, effectively modeling the group-relevant and suppressing the irrelevant actions (and interactions) are vital for group activity recognition. In this paper, we propose a novel method based on deep reinforcement learning to progressively refine the low-level features and high-level relations of group activities. Firstly, we construct a semantic relation graph (SRG) to explicitly model the relations among persons. Then, two agents adopting policy according to two Markov decision processes are applied to progressively refine the SRG. Specifically, one feature-distilling (FD) agent in the discrete action space refines the low-level spatio-temporal features by distilling the most informative frames. Another relation-gating (RG) agent in continuous action space adjusts the high-level semantic graph to pay more attention to group-relevant relations. The SRG, FD agent, and RG agent are optimized alternately to mutually boost the performance of each other. Extensive experiments on two widely used benchmarks demonstrate the effectiveness and superiority of the proposed approach.

Causally Driven Incremental Multi Touch Attribution Using a Recurrent Neural Network

Feb 05, 2019

Abstract:This paper describes a practical system for Multi Touch Attribution (MTA) for use by a publisher of digital ads. We developed this system for JD.com, an eCommerce company, which is also a publisher of digital ads in China. The approach has two steps. The first step ('response modeling') fits a user-level model for purchase of a product as a function of the user's exposure to ads. The second ('credit allocation') uses the fitted model to allocate the incremental part of the observed purchase due to advertising to the ads the user was exposed to over the previous T days. To implement step one, we train a Recurrent Neural Network (RNN) on user-level conversion and exposure data. The RNN has the advantage of flexibly handling the sequential dependence in the data in a semi-parametric way. The specific RNN formulation we implement captures the impact of advertising intensity, timing, competition, and user-heterogeneity, which are known to be relevant to ad-response. To implement step two, we compute Shapley Values, which have the advantage of having axiomatic foundations and satisfying fairness considerations. The specific formulation of the Shapley Value we implement respects incrementality by allocating the overall incremental improvement in conversion to the exposed ads, while handling the sequence-dependence of exposures on the observed outcomes. The system is in production at JD.com, and scales to handle the high dimensionality of the problem on the platform (attribution of the orders of about 300M users, for roughly 160K brands, across 200+ ad-types, serving about 80B ad-impressions over a typical 15-day period).
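
The credit-allocation step can be illustrated with a small sketch of exact Shapley values over a set of ad exposures. This is the standard set-based Shapley computation, not the sequence-aware formulation the paper describes, and the toy response model (`BASELINE`, `EFFECTS`, `incremental_conversion`) is entirely hypothetical; in the described system the fitted RNN would play that role.

```python
from itertools import combinations
from math import factorial

# Hypothetical toy response model: each ad type independently
# reduces the probability of NOT converting.
BASELINE = 0.02
EFFECTS = {"search": 0.10, "display": 0.05, "video": 0.05}


def conversion_probability(exposed):
    """Conversion probability given a set of ad exposures (toy model)."""
    p_no_convert = 1.0 - BASELINE
    for ad in exposed:
        p_no_convert *= 1.0 - EFFECTS[ad]
    return 1.0 - p_no_convert


def incremental_conversion(exposed):
    """Lift over the no-advertising baseline; this is what gets allocated."""
    return conversion_probability(exposed) - conversion_probability(frozenset())


def shapley_values(players, value):
    """Exact Shapley values; `value` maps a frozenset of players to a number."""
    n = len(players)
    phi = {p: 0.0 for p in players}
    for p in players:
        others = [q for q in players if q != p]
        for k in range(n):
            # Weight of coalitions of size k that exclude p.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                phi[p] += weight * (value(s | {p}) - value(s))
    return phi


phi = shapley_values(list(EFFECTS), incremental_conversion)
```

Because the value function is the incremental lift, the credits in `phi` sum to the total lift from all exposures (the efficiency axiom), and ads with identical effects receive identical credit (symmetry). Exact enumeration is exponential in the number of exposures, so a production system would need a more scalable computation.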

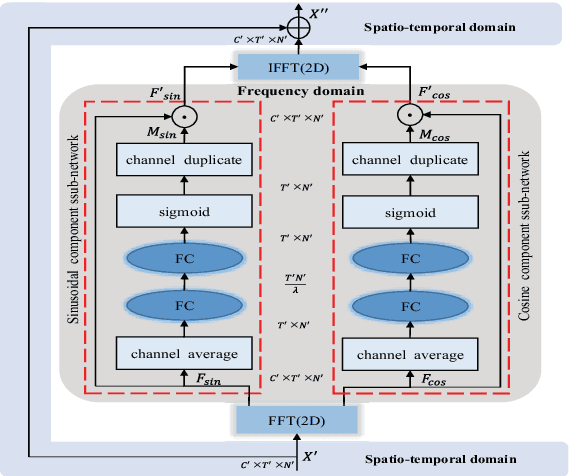

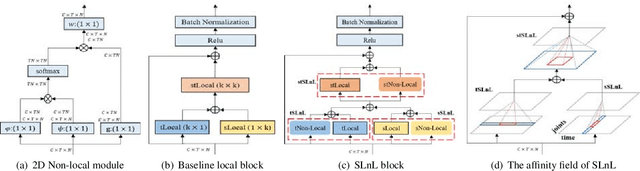

Skeleton-Based Action Recognition with Synchronous Local and Non-local Spatio-temporal Learning and Frequency Attention

Nov 10, 2018

Abstract:Benefiting from its succinctness and robustness, skeleton-based human action recognition has recently attracted much attention. Most existing methods utilize local networks, such as recurrent networks, convolutional neural networks, and graph convolutional networks, to extract spatio-temporal dynamics hierarchically. As a consequence, the local and non-local dependencies, which respectively contain more details and semantics, are asynchronously captured at different levels of layers. Moreover, limited to the spatio-temporal domain, these methods ignore patterns in the frequency domain. To better extract information from multiple domains, we propose a residual frequency attention (rFA) to focus on discriminative patterns in the frequency domain, and a synchronous local and non-local (SLnL) block to simultaneously capture the details and semantics in the spatio-temporal domain. To optimize the whole process, we also propose a soft-margin focal loss (SMFL), which can automatically conduct adaptive data selection and encourage intrinsic margins in classifiers. Extensive experiments are performed on several large-scale action recognition datasets and our approach significantly outperforms other state-of-the-art methods.

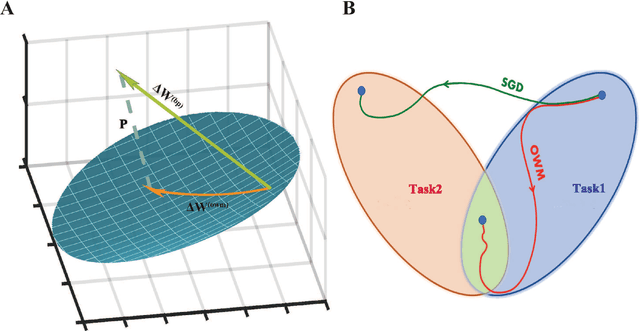

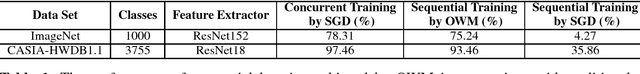

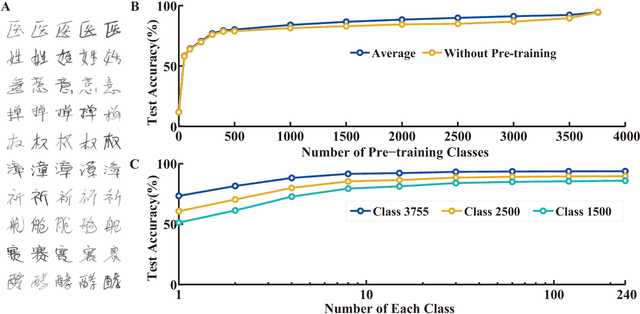

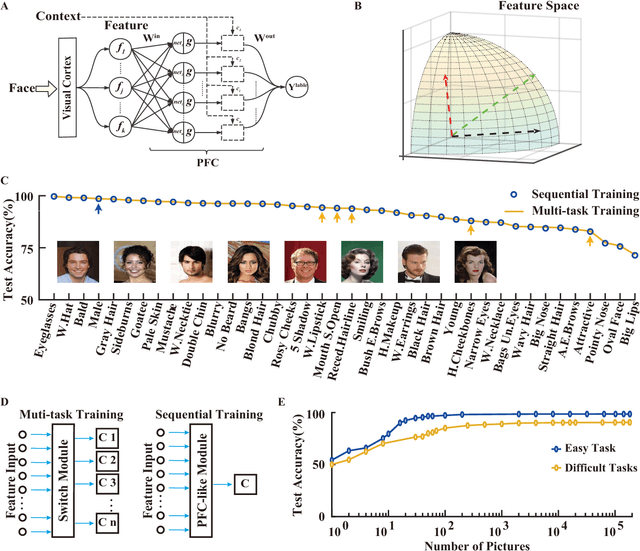

Continuous Learning of Context-dependent Processing in Neural Networks

Oct 05, 2018

Abstract:Deep artificial neural networks (DNNs) are powerful tools for recognition and classification as they learn sophisticated mapping rules between the inputs and the outputs. However, the rules learned by the majority of current DNNs used for pattern recognition are largely fixed and do not vary with different conditions. This limits the network's ability to work in more complex and dynamical situations in which the mapping rules themselves are not fixed but constantly change according to contexts, such as different environments and goals. Inspired by the role of the prefrontal cortex (PFC) in mediating context-dependent processing in the primate brain, here we propose a novel approach, involving a learning algorithm named orthogonal weights modification (OWM) with the addition of a PFC-like module, that enables networks to continually learn different mapping rules in a context-dependent way. We demonstrate that with OWM to protect previously acquired knowledge, the networks could sequentially learn up to thousands of different mapping rules without interference, needing as few as ~10 samples to learn each, reaching a human-level ability in online, continual learning. In addition, by using a PFC-like module to enable contextual information to modulate the representation of sensory features, a network could sequentially learn different, context-specific mappings for identical stimuli. Taken together, these approaches allow us to teach a single network numerous context-dependent mapping rules in an online, continual manner. This would enable highly compact systems to gradually learn a myriad of regularities of the real world and eventually behave appropriately within it.
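
The idea of modifying weights only in directions orthogonal to previously seen inputs can be sketched as follows. The abstract does not give the update rule, so this sketch assumes a recursive-least-squares-style projector update, P ← P − (Px)(Px)ᵀ / (α + xᵀPx), and projects each gradient through P before applying it. The helper names and the value of `alpha` are hypothetical.

```python
def matvec(M, v):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def update_projector(P, x, alpha=1e-3):
    """Shrink P along the input direction x (assumed RLS-style rule).

    P is symmetric, so P x x^T P equals the outer product (Px)(Px)^T.
    """
    Px = matvec(P, x)
    denom = alpha + dot(x, Px)
    n = len(x)
    return [[P[i][j] - Px[i] * Px[j] / denom for j in range(n)]
            for i in range(n)]


def project_gradient(P, grad):
    """Project a weight gradient so it barely affects protected inputs."""
    return matvec(P, grad)


# An input from an earlier task that should be protected.
x_old = [1.0, 2.0, 0.0]
P = update_projector(identity(3), x_old)

# A raw gradient from a new task, and its projected version.
raw_grad = [1.0, 1.0, 1.0]
safe_grad = project_gradient(P, raw_grad)

# Change in the unit's response to x_old if each gradient were applied.
interference_raw = dot(raw_grad, x_old)
interference_safe = dot(safe_grad, x_old)
```

Under these assumptions the projected gradient changes the response to `x_old` far less than the raw gradient does, while components orthogonal to `x_old` pass through unchanged, which is the property that lets new mappings be learned without overwriting old ones.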
