Continuous Learning of Context-dependent Processing in Neural Networks

Oct 05, 2018

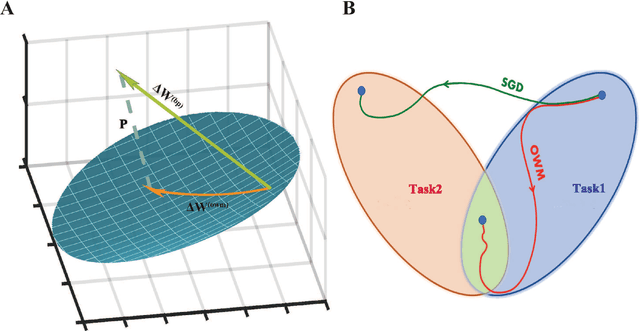

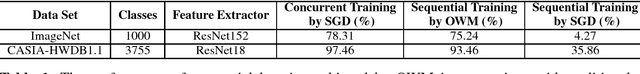

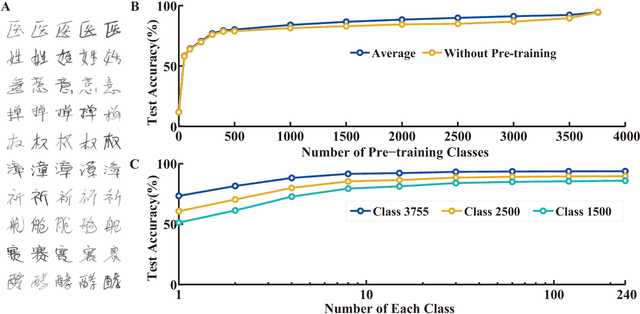

Deep artificial neural networks (DNNs) are powerful tools for recognition and classification because they learn sophisticated mapping rules between inputs and outputs. However, the rules learned by the majority of current DNNs used for pattern recognition are largely fixed and do not vary with different conditions. This limits a network's ability to work in more complex and dynamic situations, in which the mapping rules themselves are not fixed but constantly change according to context, such as different environments and goals. Inspired by the role of the prefrontal cortex (PFC) in mediating context-dependent processing in the primate brain, we propose a novel approach that combines a learning algorithm named orthogonal weights modification (OWM) with a PFC-like module, enabling networks to continually learn different mapping rules in a context-dependent way. We demonstrate that, with OWM protecting previously acquired knowledge, networks could sequentially learn up to thousands of different mapping rules without interference, needing as few as $\sim$10 samples to learn each one and reaching human-level ability in online, continual learning. In addition, by using a PFC-like module that lets contextual information modulate the representation of sensory features, a network could sequentially learn different, context-specific mappings for identical stimuli. Taken together, these approaches allow a single network to be taught numerous context-dependent mapping rules in an online, continual manner. This would enable highly compact systems to gradually learn a myriad of regularities of the real world and eventually behave appropriately within it.
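The abstract does not spell out the OWM update rule, so the following is only a minimal sketch of the general idea, under stated assumptions: a single linear layer, a squared-error loss, and a projector `P` maintained with a recursive least-squares style update so that weight changes are (approximately) orthogonal to inputs seen in earlier tasks. The function names `update_projector`, `owm_step`, and `demo`, the regularizer `alpha`, and all sizes are hypothetical choices for illustration, not the authors' implementation.

```python
import numpy as np


def update_projector(P, x, alpha=1e-6):
    """Shrink P along the direction of a previously seen input x.

    Assumed recursive form: P <- P - (P x)(P x)^T / (alpha + x^T P x).
    After the update, P @ x is close to zero for small alpha.
    """
    Px = P @ x
    return P - np.outer(Px, Px) / (alpha + x @ Px)


def owm_step(W, P, x, target, lr=0.05):
    """One gradient step on 0.5 * ||x @ W - target||^2, projected by P."""
    grad = np.outer(x, x @ W - target)
    # Projecting the gradient keeps outputs for earlier inputs nearly fixed.
    return W - lr * (P @ grad)


def demo(seed=0, dim=8, out_dim=2, n_old=3, steps=500):
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(dim, out_dim))

    # Inputs from an "old" task whose outputs should be protected.
    old_inputs = rng.normal(size=(n_old, dim))
    P = np.eye(dim)
    for x in old_inputs:
        P = update_projector(P, x)
    old_outputs = old_inputs @ W

    # A "new" task: fit one fresh input to a fresh target.
    x_new = rng.normal(size=dim)
    target = rng.normal(size=out_dim)
    for _ in range(steps):
        W = owm_step(W, P, x_new, target)

    drift = np.abs(old_inputs @ W - old_outputs).max()
    new_error = np.abs(x_new @ W - target).max()
    return drift, new_error


if __name__ == "__main__":
    drift, new_error = demo()
    print("change in old-task outputs:", drift)
    print("remaining new-task error:", new_error)
```

In this toy setting the new mapping is learned while outputs for the earlier inputs stay almost unchanged, which is the kind of interference protection the abstract attributes to OWM. The PFC-like context module is not modeled here.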
