Andrey Kravchenko

Vote'n'Rank: Revision of Benchmarking with Social Choice Theory

Oct 13, 2022

Abstract:The development of state-of-the-art systems in different applied areas of machine learning (ML) is driven by benchmarks, which have shaped the paradigm of evaluating generalisation capabilities from multiple perspectives. Although the paradigm is shifting towards more fine-grained evaluation across diverse tasks, the delicate question of how to aggregate the performances has received particular interest in the community. In general, benchmarks follow the unspoken utilitarian principles, where the systems are ranked based on their mean average score over task-specific metrics. Such aggregation procedure has been viewed as a sub-optimal evaluation protocol, which may have created the illusion of progress. This paper proposes Vote'n'Rank, a framework for ranking systems in multi-task benchmarks under the principles of the social choice theory. We demonstrate that our approach can be efficiently utilised to draw new insights on benchmarking in several ML sub-fields and identify the best-performing systems in research and development case studies. The Vote'n'Rank's procedures are more robust than the mean average while being able to handle missing performance scores and determine conditions under which the system becomes the winner.

A Differentiable Language Model Adversarial Attack on Text Classifiers

Jul 23, 2021

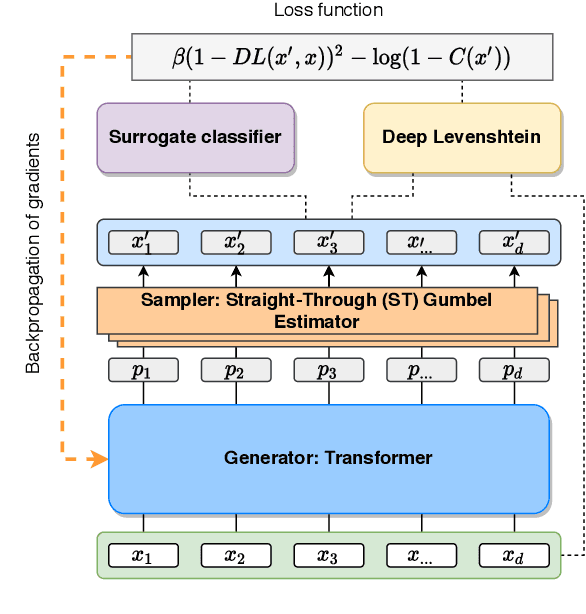

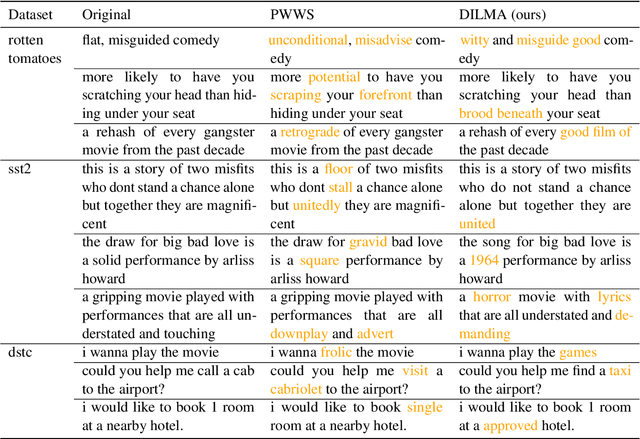

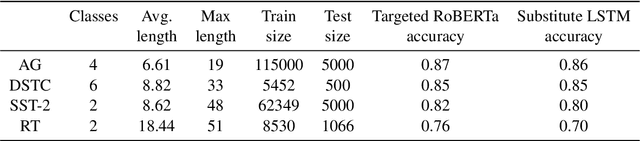

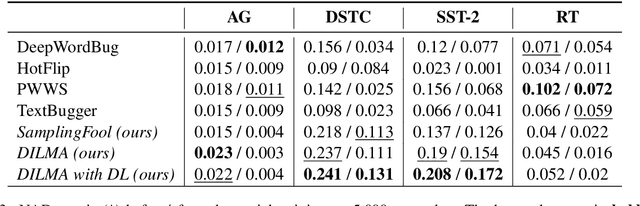

Abstract:Robustness of huge Transformer-based models for natural language processing is an important issue due to their capabilities and wide adoption. One way to understand and improve robustness of these models is an exploration of an adversarial attack scenario: check if a small perturbation of an input can fool a model. Due to the discrete nature of textual data, gradient-based adversarial methods, widely used in computer vision, are not applicable per~se. The standard strategy to overcome this issue is to develop token-level transformations, which do not take the whole sentence into account. In this paper, we propose a new black-box sentence-level attack. Our method fine-tunes a pre-trained language model to generate adversarial examples. A proposed differentiable loss function depends on a substitute classifier score and an approximate edit distance computed via a deep learning model. We show that the proposed attack outperforms competitors on a diverse set of NLP problems for both computed metrics and human evaluation. Moreover, due to the usage of the fine-tuned language model, the generated adversarial examples are hard to detect, thus current models are not robust. Hence, it is difficult to defend from the proposed attack, which is not the case for other attacks.

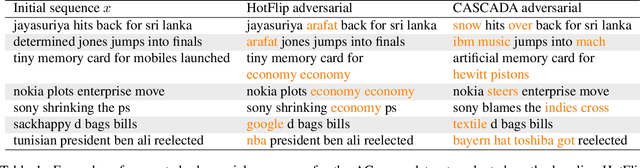

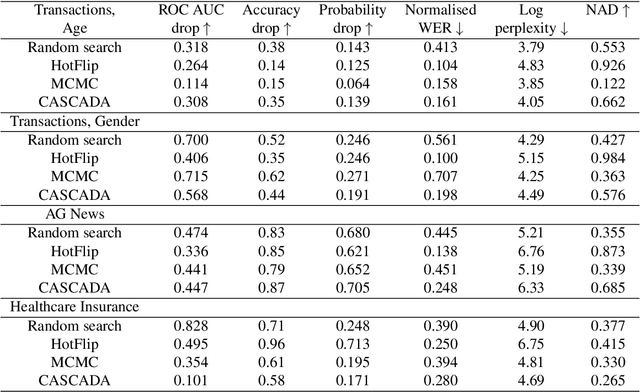

Gradient-based adversarial attacks on categorical sequence models via traversing an embedded world

Mar 09, 2020

Abstract:An adversarial attack paradigm explores various scenarios for vulnerability of machine and especially deep learning models: we can apply minor changes to the model input to force a classifier's failure for a particular example. Most of the state of the art frameworks focus on adversarial attacks for images and other structured model inputs. The adversarial attacks for categorical sequences can also be harmful if they are successful. However, successful attacks for inputs based on categorical sequences should address the following challenges: (1) non-differentiability of the target function, (2) constraints on transformations of initial sequences, and (3) diversity of possible problems. We handle these challenges using two approaches. The first approach adopts Monte-Carlo methods and allows usage in any scenario, the second approach uses a continuous relaxation of models and target metrics, and thus allows using general state of the art methods on adversarial attacks with little additional effort. Results for money transactions, medical fraud, and NLP datasets suggest the proposed methods generate reasonable adversarial sequences that are close to original ones, but fool machine learning models even for blackbox adversarial attacks.

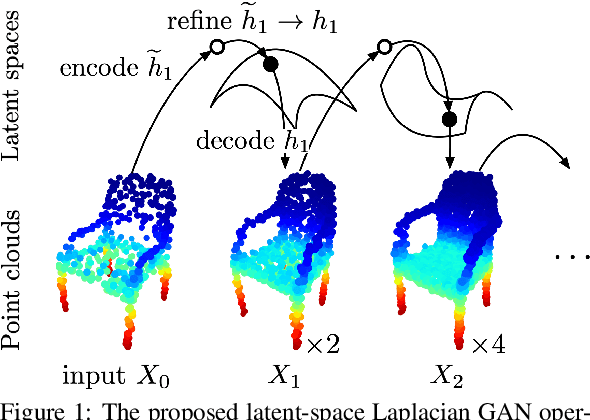

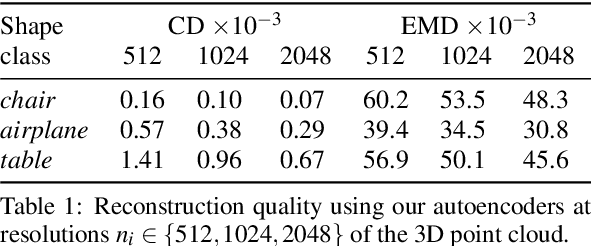

Latent-Space Laplacian Pyramids for Adversarial Representation Learning with 3D Point Clouds

Dec 13, 2019

Abstract:Constructing high-quality generative models for 3D shapes is a fundamental task in computer vision with diverse applications in geometry processing, engineering, and design. Despite the recent progress in deep generative modelling, synthesis of finely detailed 3D surfaces, such as high-resolution point clouds, from scratch has not been achieved with existing approaches. In this work, we propose to employ the latent-space Laplacian pyramid representation within a hierarchical generative model for 3D point clouds. We combine the recently proposed latent-space GAN and Laplacian GAN architectures to form a multi-scale model capable of generating 3D point clouds at increasing levels of detail. Our evaluation demonstrates that our model outperforms the existing generative models for 3D point clouds.

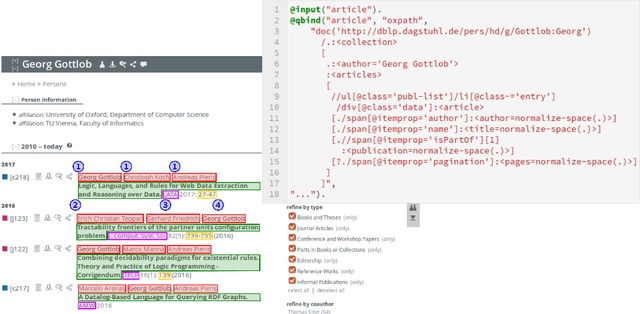

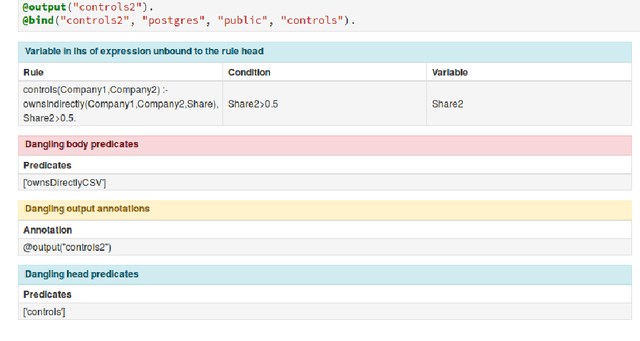

Data Science with Vadalog: Bridging Machine Learning and Reasoning

Jul 23, 2018

Abstract:Following the recent successful examples of large technology companies, many modern enterprises seek to build knowledge graphs to provide a unified view of corporate knowledge and to draw deep insights using machine learning and logical reasoning. There is currently a perceived disconnect between the traditional approaches for data science, typically based on machine learning and statistical modelling, and systems for reasoning with domain knowledge. In this paper we present a state-of-the-art Knowledge Graph Management System, Vadalog, which delivers highly expressive and efficient logical reasoning and provides seamless integration with modern data science toolkits, such as the Jupyter platform. We demonstrate how to use Vadalog to perform traditional data wrangling tasks, as well as complex logical and probabilistic reasoning. We argue that this is a significant step forward towards combining machine learning and reasoning in data science.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge