Andrew D. Bagdanov

No MoCap Needed: Post-Training Motion Diffusion Models with Reinforcement Learning using Only Textual Prompts

Oct 08, 2025

Abstract: Diffusion models have recently advanced human motion generation, producing realistic and diverse animations from textual prompts. However, adapting these models to unseen actions or styles typically requires additional motion capture data and full retraining, which is costly and difficult to scale. We propose a post-training framework based on Reinforcement Learning that fine-tunes pretrained motion diffusion models using only textual prompts, without requiring any motion ground truth. Our approach employs a pretrained text-motion retrieval network as a reward signal and optimizes the diffusion policy with Denoising Diffusion Policy Optimization, effectively shifting the model's generative distribution toward the target domain without relying on paired motion data. We evaluate our method on cross-dataset adaptation and leave-one-out motion experiments using the HumanML3D and KIT-ML datasets across both latent- and joint-space diffusion architectures. Results from quantitative metrics and user studies show that our approach consistently improves the quality and diversity of generated motions, while preserving performance on the original distribution. Our approach is a flexible, data-efficient, and privacy-preserving solution for motion adaptation.

NTRL: Encounter Generation via Reinforcement Learning for Dynamic Difficulty Adjustment in Dungeons and Dragons

Jun 24, 2025

Abstract: Balancing combat encounters in Dungeons & Dragons (D&D) is a complex task that requires Dungeon Masters (DMs) to manually assess party strength, enemy composition, and dynamic player interactions while avoiding interruption of the narrative flow. In this paper, we propose Encounter Generation via Reinforcement Learning (NTRL), a novel approach that automates Dynamic Difficulty Adjustment (DDA) in D&D via combat encounter design. By framing the problem as a contextual bandit, NTRL generates encounters based on real-time party member attributes. In comparison with classic DM heuristics, NTRL iteratively optimizes encounters to extend combat longevity (+200%), increase damage dealt to party members and thereby reduce post-combat hit points (-16.67%), and raise the number of player deaths while maintaining low total party kills (TPK). The intensification of combat forces players to act wisely and engage in tactical maneuvers, even though the generated encounters guarantee high win rates (70%). Even in comparison with encounters designed by human Dungeon Masters, NTRL demonstrates superior performance by enhancing the strategic depth of combat while increasing difficulty in a manner that preserves overall game fairness.

EFC++: Elastic Feature Consolidation with Prototype Re-balancing for Cold Start Exemplar-free Incremental Learning

Mar 13, 2025

Abstract: Exemplar-Free Class Incremental Learning (EFCIL) aims to learn from a sequence of tasks without having access to previous task data. In this paper, we consider the challenging Cold Start scenario in which insufficient data is available in the first task to learn a high-quality backbone. This is especially challenging for EFCIL since it requires high plasticity, resulting in feature drift which is difficult to compensate for in the exemplar-free setting. To address this problem, we propose an effective approach that consolidates feature representations by regularizing drift in directions highly relevant to previous tasks and employs prototypes to reduce task-recency bias. Our approach, which we call Elastic Feature Consolidation++ (EFC++), exploits a tractable second-order approximation of feature drift based on a proposed Empirical Feature Matrix (EFM). The EFM induces a pseudo-metric in feature space which we use to regularize feature drift in important directions and to update Gaussian prototypes. In addition, we introduce a post-training prototype re-balancing phase that updates classifiers to compensate for feature drift. Experimental results on CIFAR-100, Tiny-ImageNet, ImageNet-Subset, ImageNet-1K and DomainNet demonstrate that EFC++ is better able to learn new tasks by maintaining model plasticity and significantly outperforms the state-of-the-art.

No Task Left Behind: Isotropic Model Merging with Common and Task-Specific Subspaces

Feb 07, 2025

Abstract: Model merging integrates the weights of multiple task-specific models into a single multi-task model. Despite recent interest in the problem, a significant performance gap between the combined and single-task models remains. In this paper, we investigate the key characteristics of task matrices -- weight update matrices applied to a pre-trained model -- that enable effective merging. We show that alignment between singular components of task-specific and merged matrices strongly correlates with performance improvement over the pre-trained model. Based on this, we propose an isotropic merging framework that flattens the singular value spectrum of task matrices, enhances alignment, and reduces the performance gap. Additionally, we incorporate both common and task-specific subspaces to further improve alignment and performance. Our proposed approach achieves state-of-the-art performance across multiple scenarios, including various sets of tasks and model scales. This work advances the understanding of model merging dynamics, offering an effective methodology to merge models without requiring additional training. Code is available at https://github.com/danielm1405/iso-merging.

Cross the Gap: Exposing the Intra-modal Misalignment in CLIP via Modality Inversion

Feb 06, 2025

Abstract: Pre-trained multi-modal Vision-Language Models like CLIP are widely used off-the-shelf for a variety of applications. In this paper, we show that the common practice of individually exploiting the text or image encoders of these powerful multi-modal models is highly suboptimal for intra-modal tasks like image-to-image retrieval. We argue that this is inherently due to the CLIP-style inter-modal contrastive loss that does not enforce any intra-modal constraints, leading to what we call intra-modal misalignment. To demonstrate this, we leverage two optimization-based modality inversion techniques that map representations from their input modality to the complementary one without any need for auxiliary data or additional trained adapters. We empirically show that, in the intra-modal tasks of image-to-image and text-to-text retrieval, approaching these tasks inter-modally significantly improves performance with respect to intra-modal baselines on more than fifteen datasets. Additionally, we demonstrate that approaching a native inter-modal task (e.g., zero-shot image classification) intra-modally decreases performance, further validating our findings. Finally, we show that incorporating an intra-modal term in the pre-training objective or narrowing the modality gap between the text and image feature embedding spaces helps reduce the intra-modal misalignment. The code is publicly available at: https://github.com/miccunifi/Cross-the-Gap.

SPEQ: Stabilization Phases for Efficient Q-Learning in High Update-To-Data Ratio Reinforcement Learning

Jan 15, 2025

Abstract: A key challenge in Deep Reinforcement Learning is sample efficiency, especially in real-world applications where collecting environment interactions is expensive or risky. Recent off-policy algorithms improve sample efficiency by increasing the Update-To-Data (UTD) ratio and performing more gradient updates per environment interaction. While this improves sample efficiency, it significantly increases computational cost due to the higher number of gradient updates required. In this paper we propose a sample-efficient method to improve computational efficiency by separating training into distinct learning phases in order to exploit gradient updates more effectively. Our approach builds on top of the Dropout Q-Functions (DroQ) algorithm and alternates between an online, low UTD ratio training phase, and an offline stabilization phase. During the stabilization phase, we fine-tune the Q-functions without collecting new environment interactions. This process improves the effectiveness of the replay buffer and reduces computational overhead. Our experimental results on continuous control problems show that our method achieves results comparable to state-of-the-art, high UTD ratio algorithms while requiring 56% fewer gradient updates and 50% less training time than DroQ. Our approach offers an effective and computationally economical solution while maintaining the same sample efficiency as the more costly, high UTD ratio state-of-the-art.
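
The alternation between an online, low UTD phase and an offline stabilization phase can be sketched as a schedule that simply counts gradient updates. This is a minimal illustration, not the authors' implementation: the function names and every numeric setting below (`online_utd`, `stabilize_every`, `stabilize_updates`, the baseline `utd`) are hypothetical placeholders, so the resulting counts are not meant to reproduce the figures reported in the abstract.

```python
def count_updates_alternating(env_steps, online_utd, stabilize_every, stabilize_updates):
    """Count gradient updates for an online/stabilization schedule.

    All arguments are illustrative assumptions, not values from the paper.
    """
    updates = 0
    for step in range(1, env_steps + 1):
        # Online phase: one environment interaction, then a few updates.
        updates += online_utd
        # Offline stabilization phase: extra updates on the replay buffer,
        # with no new environment interaction collected.
        if step % stabilize_every == 0:
            updates += stabilize_updates
    return updates


def count_updates_constant(env_steps, utd):
    """Count gradient updates for a fixed, high UTD ratio baseline."""
    return env_steps * utd


alternating = count_updates_alternating(
    env_steps=10_000, online_utd=1, stabilize_every=1_000, stabilize_updates=5_000
)
constant = count_updates_constant(env_steps=10_000, utd=20)
print(alternating, constant)
```

Both schedules consume the same number of environment interactions, so sample efficiency is held fixed while the total number of gradient updates differs.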

Covariances for Free: Exploiting Mean Distributions for Federated Learning with Pre-Trained Models

Dec 18, 2024

Abstract: Using pre-trained models has been found to reduce the effect of data heterogeneity and speed up federated learning algorithms. Recent works have investigated the use of first-order statistics and second-order statistics to aggregate local client data distributions at the server and achieve very high performance without any training. In this work we propose a training-free method based on an unbiased estimator of class covariance matrices. Our method, which only uses first-order statistics in the form of class means communicated by clients to the server, incurs only a fraction of the communication costs required by methods based on communicating second-order statistics. We show how these estimated class covariances can be used to initialize a linear classifier, thus exploiting the covariances without actually sharing them. When compared to state-of-the-art methods which also share only class means, our approach improves performance in the range of 4-26% with exactly the same communication cost. Moreover, our method achieves performance competitive or superior to sharing second-order statistics with dramatically less communication overhead. Finally, using our method to initialize classifiers and then performing federated fine-tuning yields better and faster convergence. Code is available at https://github.com/dipamgoswami/FedCOF.
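
To see how class covariances might be recovered from class means alone, consider the following sketch. It assumes that each client's samples of a class are i.i.d. with a shared covariance, so that the mean of n samples has covariance equal to the class covariance divided by n; under that assumption the count-weighted scatter of client means, divided by the number of clients minus one, is an unbiased estimate. The function name is hypothetical, and the estimator in the paper may differ in its details.

```python
def estimate_class_covariance(client_means, client_counts):
    """Estimate a class covariance matrix from per-client class means only.

    Assumes i.i.d. samples with a shared covariance across clients
    (an assumption of this sketch, not a claim about the paper).
    """
    num_clients = len(client_means)
    if num_clients < 2:
        raise ValueError("need at least two clients")
    dim = len(client_means[0])
    total = sum(client_counts)
    # Global class mean: count-weighted average of the client means.
    global_mean = [
        sum(n * mu[j] for mu, n in zip(client_means, client_counts)) / total
        for j in range(dim)
    ]
    # Count-weighted scatter of client means around the global mean.
    cov = [[0.0] * dim for _ in range(dim)]
    for mu, n in zip(client_means, client_counts):
        diff = [mu[j] - global_mean[j] for j in range(dim)]
        for a in range(dim):
            for b in range(dim):
                cov[a][b] += n * diff[a] * diff[b]
    # Dividing by (K - 1) removes the bias under the i.i.d. assumption.
    return [[value / (num_clients - 1) for value in row] for row in cov]


means = [[1.0, 0.0], [3.0, 0.0]]  # toy class means from two clients
counts = [2, 2]                   # samples of this class per client
estimate = estimate_class_covariance(means, counts)
print(estimate)
```

Only the class means and sample counts cross the network in this sketch, which is the source of the communication savings relative to sharing full covariance matrices.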

RE-tune: Incremental Fine Tuning of Biomedical Vision-Language Models for Multi-label Chest X-ray Classification

Oct 23, 2024

Abstract: In this paper we introduce RE-tune, a novel approach for fine-tuning pre-trained Multimodal Biomedical Vision-Language models (VLMs) in Incremental Learning scenarios for multi-label chest disease diagnosis. RE-tune freezes the backbones and only trains simple adaptors on top of the Image and Text encoders of the VLM. By engineering positive and negative text prompts for diseases, we leverage the ability of Large Language Models to steer the training trajectory. We evaluate RE-tune in three realistic incremental learning scenarios: class-incremental, label-incremental, and data-incremental. Our results demonstrate that Biomedical VLMs are natural continual learners and prevent catastrophic forgetting. RE-tune not only achieves accurate multi-label classification results, but also prioritizes patient privacy and distinguishes itself through exceptional computational efficiency, rendering it highly suitable for broad adoption in real-world healthcare settings.

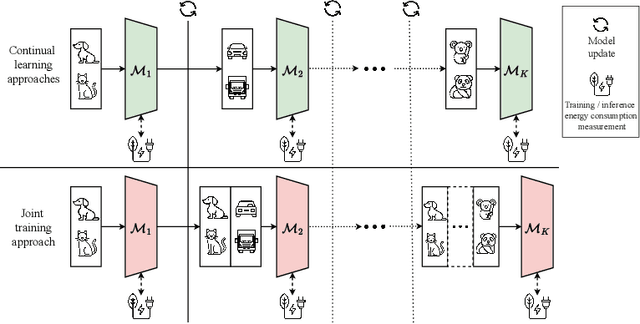

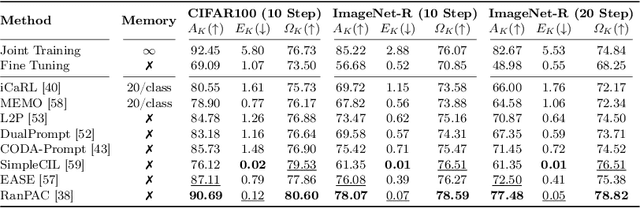

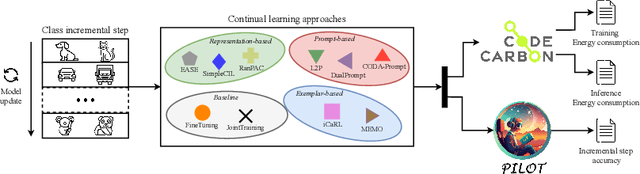

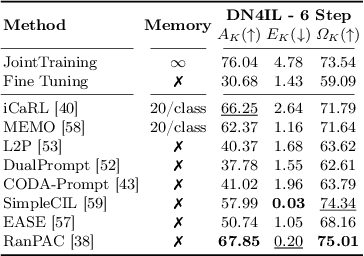

How green is continual learning, really? Analyzing the energy consumption in continual training of vision foundation models

Sep 27, 2024

Abstract: With the ever-growing adoption of AI, its impact on the environment is no longer negligible. Despite the potential that continual learning could have towards Green AI, its environmental sustainability remains relatively uncharted. In this work we aim to gain a systematic understanding of the energy efficiency of continual learning algorithms. To that end, we conducted an extensive set of empirical experiments comparing the energy consumption of recent representation-, prompt-, and exemplar-based continual learning algorithms and two standard baselines (fine tuning and joint training) when used to continually adapt a pre-trained ViT-B/16 foundation model. We performed our experiments on three standard datasets: CIFAR-100, ImageNet-R, and DomainNet. Additionally, we propose a novel metric, the Energy NetScore, which we use to measure algorithm efficiency in terms of the energy-accuracy trade-off. Through numerous evaluations varying the number and size of the incremental learning steps, our experiments demonstrate that different types of continual learning algorithms have very different impacts on energy consumption during both training and inference. Although often overlooked in the continual learning literature, we found that the energy consumed during the inference phase is crucial for evaluating the environmental sustainability of continual learning models.

Offline Reinforcement Learning with Imputed Rewards

Jul 15, 2024

Abstract: Offline Reinforcement Learning (ORL) offers a robust solution to training agents in applications where interactions with the environment must be strictly limited due to cost, safety, or lack of accurate simulation environments. Despite its potential to facilitate deployment of artificial agents in the real world, Offline Reinforcement Learning typically requires a large number of demonstrations annotated with ground-truth rewards. Consequently, state-of-the-art ORL algorithms can be difficult or impossible to apply in data-scarce scenarios. In this paper we propose a simple but effective Reward Model that can estimate the reward signal from a very limited sample of environment transitions annotated with rewards. Once the reward signal is modeled, we use the Reward Model to impute rewards for a large sample of reward-free transitions, thus enabling the application of ORL techniques. We demonstrate the potential of our approach on several D4RL continuous locomotion tasks. Our results show that, using only 1% of reward-labeled transitions from the original datasets, our learned reward model is able to impute rewards for the remaining 99% of the transitions, from which performant agents can be learned using Offline Reinforcement Learning.
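
The fit-then-impute workflow can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the reward model is a one-dimensional least-squares line rather than the model used in the paper, each transition is reduced to a single hypothetical scalar feature, and the data are synthetic with a noise-free linear reward.

```python
def fit_linear_reward_model(features, rewards):
    """Fit reward ~ w * feature + b by ordinary least squares (scalar feature)."""
    n = len(features)
    mean_x = sum(features) / n
    mean_r = sum(rewards) / n
    var_x = sum((x - mean_x) ** 2 for x in features)
    cov_xr = sum((x - mean_x) * (r - mean_r) for x, r in zip(features, rewards))
    w = cov_xr / var_x
    b = mean_r - w * mean_x
    return w, b


def impute_rewards(model, features):
    """Predict rewards for transitions that carry no reward label."""
    w, b = model
    return [w * x + b for x in features]


def true_reward(x):
    # Synthetic ground truth, used only to build the toy dataset.
    return 2.0 * x + 1.0


# 200 toy transitions, each summarized by one hypothetical scalar feature.
all_features = [i / 100 for i in range(200)]
labeled_idx = [0, 199]  # 1% of the transitions keep their reward labels
labeled_x = [all_features[i] for i in labeled_idx]
labeled_r = [true_reward(x) for x in labeled_x]

model = fit_linear_reward_model(labeled_x, labeled_r)
unlabeled_x = [x for i, x in enumerate(all_features) if i not in labeled_idx]
imputed = impute_rewards(model, unlabeled_x)
print(len(imputed), model)
```

The imputed rewards would then be attached to the reward-free transitions so that a standard offline RL algorithm can be trained on the full dataset.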
