Andreea Danielescu

NeuroBench: Advancing Neuromorphic Computing through Collaborative, Fair and Representative Benchmarking

Apr 15, 2023

Abstract: The field of neuromorphic computing holds great promise in terms of advancing computing efficiency and capabilities by following brain-inspired principles. However, the rich diversity of techniques employed in neuromorphic research has resulted in a lack of clear standards for benchmarking, hindering effective evaluation of the advantages and strengths of neuromorphic methods compared to traditional deep-learning-based methods. This paper presents a collaborative effort, bringing together members from academia and industry, to define benchmarks for neuromorphic computing: NeuroBench. The goals of NeuroBench are to be a collaborative, fair, and representative benchmark suite developed by the community, for the community. In this paper, we discuss the challenges associated with benchmarking neuromorphic solutions, and outline the key features of NeuroBench. We believe that NeuroBench will be a significant step towards defining standards that can unify the goals of neuromorphic computing and drive its technological progress. Please visit neurobench.ai for the latest updates on the benchmark tasks and metrics.

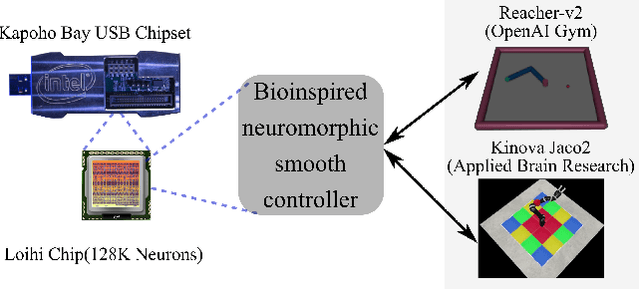

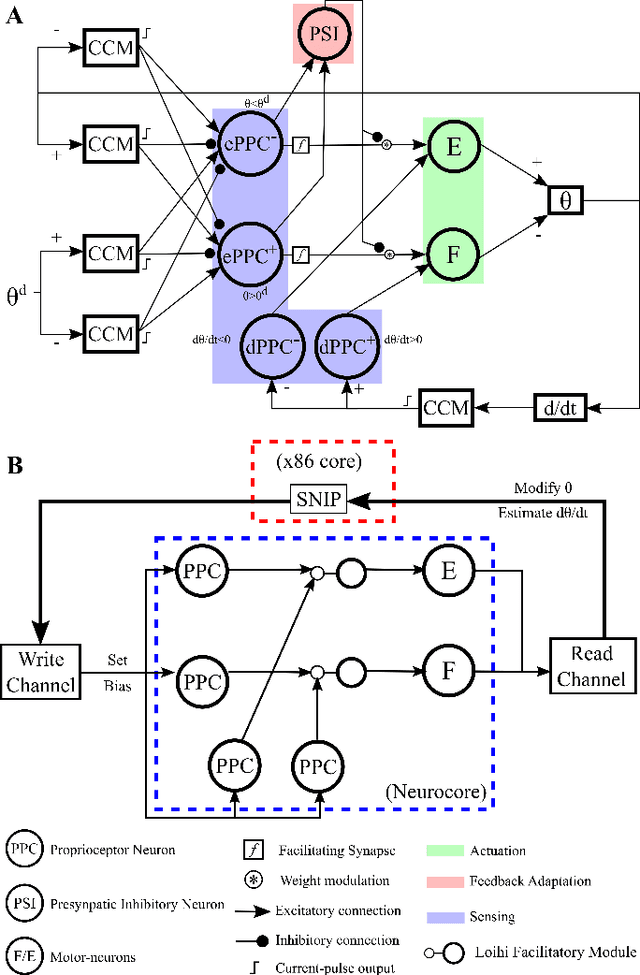

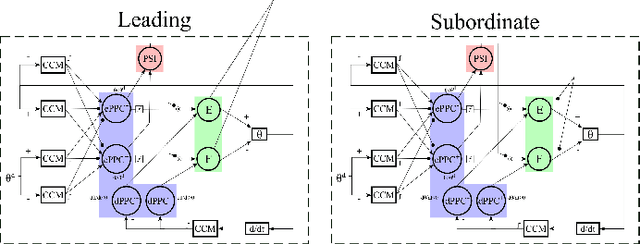

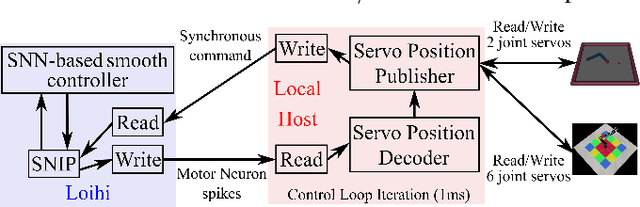

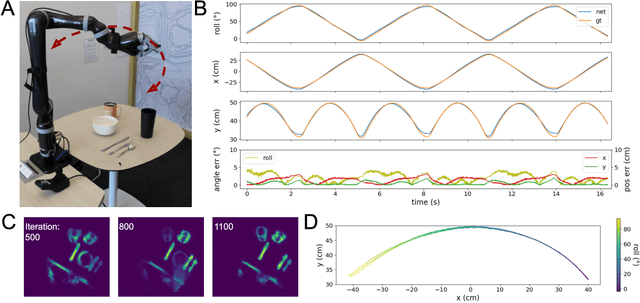

Bioinspired Smooth Neuromorphic Control for Robotic Arms

Sep 06, 2022

Abstract: Replicating natural human movements is a long-standing goal of robotics control theory. Drawing inspiration from biology, where reaching control networks give rise to smooth and precise movements, can narrow the performance gap between human and robot control. Neuromorphic processors, which mimic the brain's computational principles, are an ideal platform to approximate the accuracy and smoothness of such controllers while maximizing their energy efficiency and robustness. However, the incompatibility of conventional control methods with neuromorphic hardware limits the computational efficiency and explainability of their existing adaptations. In contrast, the neuronal connectome underlying smooth and accurate reaching movements is effective, minimal, and inherently compatible with neuromorphic processors. In this work, we emulate these networks and propose a biologically realistic spiking neural network for motor control. Our controller incorporates adaptive feedback to provide smooth and accurate motor control while inheriting the minimal complexity of its biological counterpart that controls reaching movements, allowing for direct deployment on Intel's neuromorphic processor. Using our controller as a building block and inspired by joint coordination in human arms, we scaled up our approach to control real-world robot arms. The trajectories and smooth, minimum-jerk velocity profiles of the resulting motions resembled those of humans, verifying the biological relevance of our controller. Notably, our method achieved state-of-the-art control performance while decreasing the motion jerk by 19% to improve motion smoothness. Our work suggests that exploiting both the computational units of the brain and their connectivity may lead to the design of effective, efficient, and explainable neuromorphic controllers, paving the way for neuromorphic solutions in fully autonomous systems.
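
For readers unfamiliar with the "minimum-jerk velocity profiles" mentioned in the abstract, the sketch below evaluates the standard fifth-order minimum-jerk polynomial for a point-to-point reach. It is a textbook reference curve, not the spiking controller described in the paper; the function name `minimum_jerk` and the start, end, and duration values are illustrative assumptions.

```python
import numpy as np

def minimum_jerk(x0, xf, duration, n_steps=101):
    """Textbook minimum-jerk reach from x0 to xf (hypothetical helper, not from the paper)."""
    t = np.linspace(0.0, duration, n_steps)
    tau = t / duration  # normalized time in [0, 1]
    # Position follows a fifth-order polynomial in normalized time.
    pos = x0 + (xf - x0) * (10 * tau**3 - 15 * tau**4 + 6 * tau**5)
    # Velocity is the time derivative of the position polynomial.
    vel = (xf - x0) / duration * (30 * tau**2 - 60 * tau**3 + 30 * tau**4)
    return t, pos, vel

# Assumed example: a 1-unit reach completed in 2 seconds.
t, pos, vel = minimum_jerk(0.0, 1.0, 2.0)
peak_index = int(np.argmax(vel))
print(f"peak velocity {vel[peak_index]:.4f} at t = {t[peak_index]:.2f} s")
```

The velocity is zero at both ends and peaks at the midpoint of the movement, giving the bell-shaped profile typically associated with human reaching.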

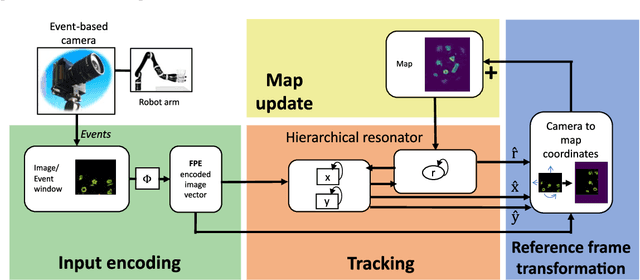

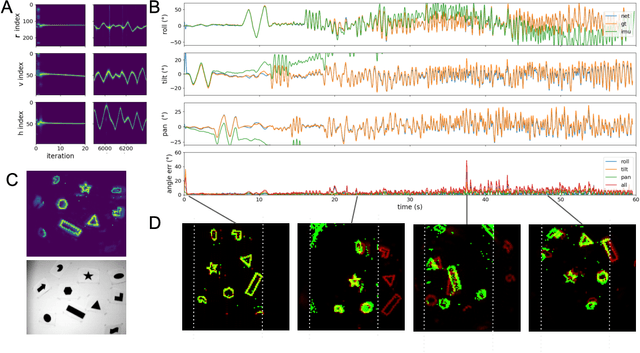

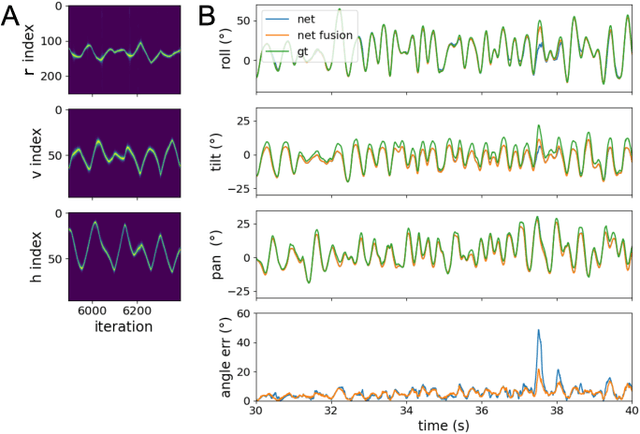

Neuromorphic Visual Odometry with Resonator Networks

Sep 05, 2022

Abstract: Autonomous agents require self-localization to navigate in unknown environments. They can use Visual Odometry (VO) to estimate self-motion and localize themselves using visual sensors. Unlike inertial sensors, which suffer from drift, or wheel encoders, which suffer from slippage, this motion-estimation strategy is not compromised by either effect. However, VO with conventional cameras is computationally demanding, limiting its application in systems with strict low-latency, low-memory, and low-energy requirements. Using event-based cameras and neuromorphic computing hardware offers a promising low-power solution to the VO problem. However, conventional algorithms for VO are not readily convertible to neuromorphic hardware. In this work, we present a VO algorithm built entirely of neuronal building blocks suitable for neuromorphic implementation. The building blocks are groups of neurons representing vectors in the computational framework of Vector Symbolic Architecture (VSA), which was proposed as an abstraction layer to program neuromorphic hardware. The VO network we propose generates and stores a working memory of the presented visual environment. It updates this working memory while at the same time estimating the changing location and orientation of the camera. We demonstrate how VSA can be leveraged as a computing paradigm for neuromorphic robotics. Moreover, our results represent an important step towards using neuromorphic computing hardware for fast and power-efficient VO and the related task of simultaneous localization and mapping (SLAM). We validate this approach experimentally in a robotic task and with an event-based dataset, demonstrating state-of-the-art performance.

Neuromorphic Visual Scene Understanding with Resonator Networks

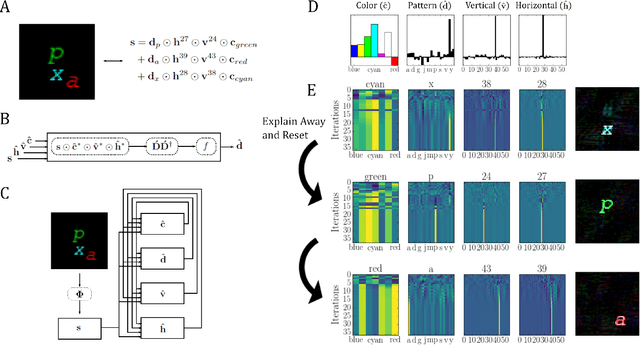

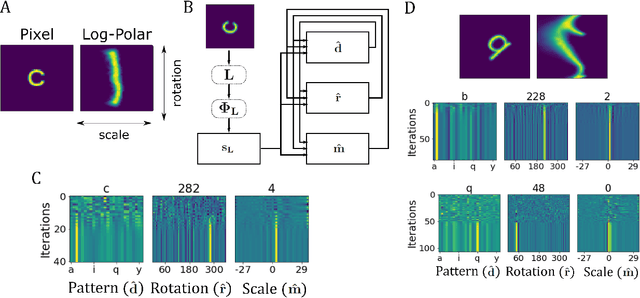

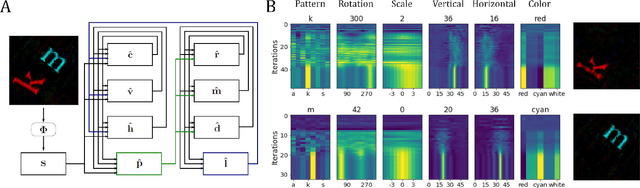

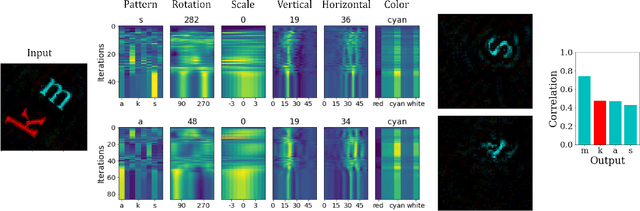

Aug 26, 2022

Abstract: Inferring the position of objects and their rigid transformations is still an open problem in visual scene understanding. Here we propose a neuromorphic solution that utilizes an efficient factorization network based on three key concepts: (1) a computational framework based on Vector Symbolic Architectures (VSA) with complex-valued vectors; (2) the design of Hierarchical Resonator Networks (HRN) to deal with the non-commutative nature of translation and rotation in visual scenes when both are used in combination; (3) the design of a multi-compartment spiking phasor neuron model for implementing complex-valued vector binding on neuromorphic hardware. The VSA framework uses vector binding operations to produce generative image models in which binding acts as the equivariant operation for geometric transformations. A scene can therefore be described as a sum of vector products, which in turn can be efficiently factorized by a resonator network to infer objects and their poses. The HRN enables the definition of a partitioned architecture in which vector binding is equivariant for horizontal and vertical translation within one partition, and for rotation and scaling within the other partition. The spiking neuron model allows the resonator network to be mapped onto efficient and low-power neuromorphic hardware. In this work, we demonstrate our approach using synthetic scenes composed of simple 2D shapes undergoing rigid geometric transformations and color changes. A companion paper demonstrates this approach in real-world application scenarios for machine vision and robotics.
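
As a rough illustration of "a scene described as a sum of vector products", the sketch below builds a two-object scene from random complex-valued (phasor) vectors. It assumes the common convention in which binding is element-wise multiplication of unit-magnitude complex vectors and unbinding multiplies by the complex conjugate. The names `random_phasor`, `bind`, `unbind`, and `similarity`, and the dimension of 1024, are illustrative choices rather than the authors' code. A resonator network searches over all factors at once; here one factor (the pose) is simply assumed known, so only the binding algebra is shown.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 1024  # assumed vector dimension, chosen for illustration

def random_phasor(dim, rng):
    """Random unit-magnitude complex vector (one phase per element)."""
    return np.exp(1j * rng.uniform(-np.pi, np.pi, dim))

def bind(a, b):
    """Bind two phasor vectors by element-wise multiplication (phases add)."""
    return a * b

def unbind(s, a):
    """Remove factor a from s by multiplying with its complex conjugate."""
    return s * np.conj(a)

def similarity(a, b):
    """Normalized real inner product; close to 1 for a match, close to 0 otherwise."""
    return float(np.real(np.vdot(a, b)) / len(a))

# Hypothetical codebook entries: two shapes and two poses.
shape_a, shape_b = random_phasor(dim, rng), random_phasor(dim, rng)
pose_1, pose_2 = random_phasor(dim, rng), random_phasor(dim, rng)

# The scene is a sum of bound (shape, pose) products.
scene = bind(shape_a, pose_1) + bind(shape_b, pose_2)

# Querying the scene with a known pose yields a noisy copy of the bound shape.
recovered = unbind(scene, pose_1)
print("match with shape_a:", similarity(shape_a, recovered))
print("match with shape_b:", similarity(shape_b, recovered))
```

The first similarity should be close to 1 and the second close to 0, because the unmatched term stays pseudo-random after unbinding and averages out in high dimensions.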

OnTarget: An Electronic Archery Scoring

Apr 04, 2021

Abstract: There are several challenges in creating an electronic archery scoring system using computer vision techniques. Variability of light, reconstruction of the target from several images, variability of target configuration, and filtering noise were significant challenges during the creation of this scoring system. This paper discusses the approach used to determine where an arrow hits a target, for any single target or set of targets, and provides an algorithm that balances the difficulty of robust arrow detection against the required accuracy.

Gesture Similarity Analysis on Event Data Using a Hybrid Guided Variational Auto Encoder

Mar 31, 2021

Abstract: While commercial mid-air gesture recognition systems have existed for at least a decade, they have not become a widespread method of interacting with machines. This is primarily because these systems require rigid, dramatic gestures for accurate recognition, which can be fatiguing and unnatural. The global pandemic has seen a resurgence of interest in touchless interfaces, so new methods that allow for natural mid-air gestural interactions are even more important. To address the limitations of recognition systems, we propose a neuromorphic gesture analysis system which naturally declutters the background and analyzes gestures at high temporal resolution. Our novel model consists of an event-based guided Variational Autoencoder (VAE) which encodes event-based data sensed by a Dynamic Vision Sensor (DVS) into a latent space representation suitable to analyze and compute the similarity of mid-air gesture data. Our results show that the features learned by the VAE provide a similarity measure capable of clustering and pseudo-labeling new gestures. Furthermore, we argue that the resulting event-based encoder and pseudo-labeling system are suitable for implementation in neuromorphic hardware for online adaptation and learning of natural mid-air gestures.
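
To make the idea of pseudo-labeling from a latent-space similarity measure concrete, here is a minimal sketch that assigns a new latent vector to the most similar known gesture centroid using cosine similarity. The abstract does not specify the similarity measure or labeling rule, so the use of cosine similarity, the `threshold` parameter, the gesture names, and the 3-dimensional latent vectors are all assumptions made for illustration.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two latent vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def pseudo_label(z, centroids, threshold=0.8):
    """Return the best-matching label, or None if nothing is similar enough.

    z: latent vector of a new gesture (hypothetical encoder output).
    centroids: dict mapping label -> mean latent vector of that gesture class.
    threshold: assumed cutoff below which the gesture is treated as unknown.
    """
    scores = {name: cosine_similarity(z, c) for name, c in centroids.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None

# Toy 3-D latent space with two known gesture classes (made-up values).
centroids = {
    "swipe": np.array([1.0, 0.0, 0.0]),
    "circle": np.array([0.0, 1.0, 0.0]),
}

print(pseudo_label(np.array([0.9, 0.1, 0.0]), centroids))  # near the "swipe" centroid
print(pseudo_label(np.array([0.0, 0.0, 1.0]), centroids))  # orthogonal to both centroids
```

Returning `None` for low-similarity inputs leaves room for treating such gestures as candidates for a new cluster rather than forcing them into an existing class.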

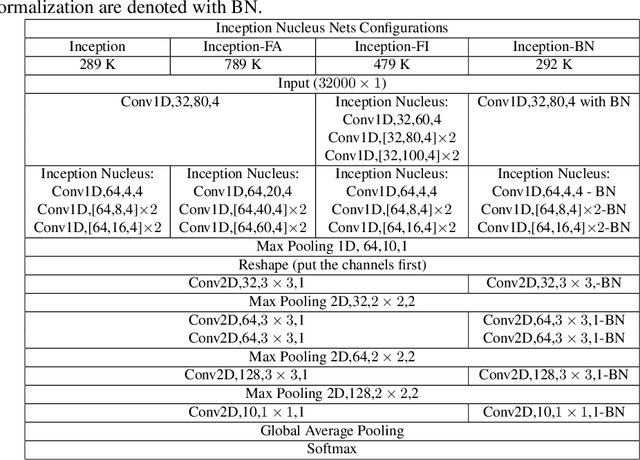

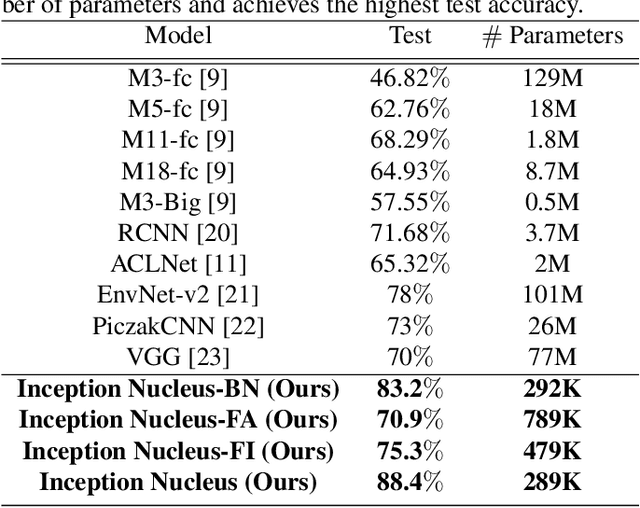

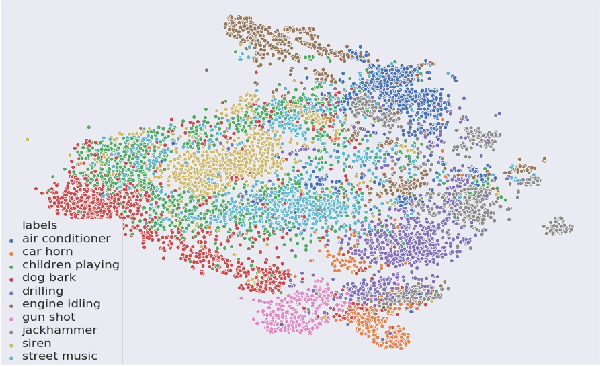

End-to-End Auditory Object Recognition via Inception Nucleus

May 25, 2020

Abstract: Machine learning approaches to auditory object recognition are traditionally based on engineered features such as those derived from the spectrum or cepstrum. More recently, end-to-end classification systems for image and auditory recognition have been developed that learn features jointly with classification, resulting in improved classification accuracy. In this paper, we propose a novel end-to-end deep neural network to map the raw waveform inputs to sound class labels. Our network includes an "inception nucleus" that optimizes the size of convolutional filters on the fly, which dramatically reduces engineering effort. Classification results compared favorably against current state-of-the-art approaches, besting them by 10.4 percentage points on the Urbansound8k dataset. Analyses of learned representations revealed that filters in the earlier hidden layers learned wavelet-like transforms to extract features that were informative for classification.
