Ioannis Polykretis

Bioinspired Smooth Neuromorphic Control for Robotic Arms

Sep 06, 2022

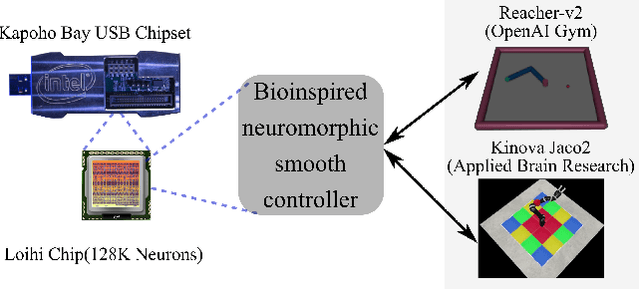

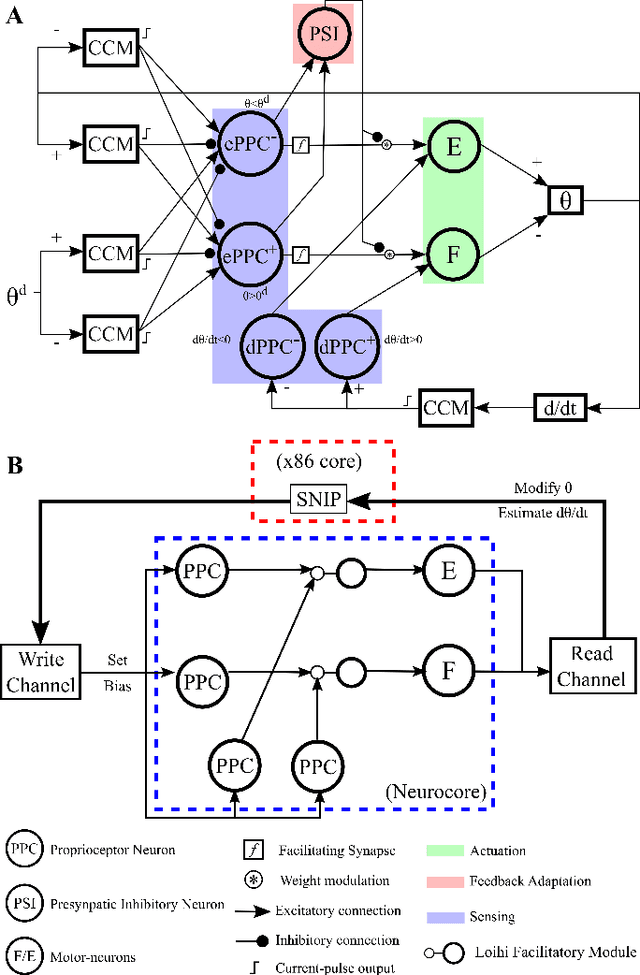

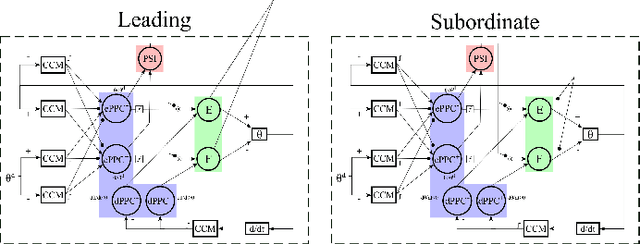

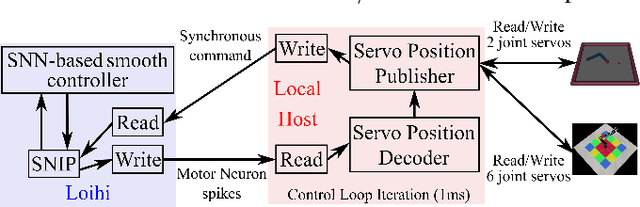

Abstract: Replicating natural human movements is a long-standing goal of robotics control theory. Drawing inspiration from biology, where reaching control networks give rise to smooth and precise movements, can narrow the performance gap between human and robot control. Neuromorphic processors, which mimic the brain's computational principles, are an ideal platform to approximate the accuracy and smoothness of such controllers while maximizing their energy efficiency and robustness. However, the incompatibility of conventional control methods with neuromorphic hardware limits the computational efficiency and explainability of their existing adaptations. In contrast, the neuronal connectome underlying smooth and accurate reaching movements is effective, minimal, and inherently compatible with neuromorphic processors. In this work, we emulate these networks and propose a biologically realistic spiking neural network for motor control. Our controller incorporates adaptive feedback to provide smooth and accurate motor control while inheriting the minimal complexity of its biological counterpart that controls reaching movements, allowing for direct deployment on Intel's neuromorphic processor. Using our controller as a building block and inspired by joint coordination in human arms, we scaled up our approach to control real-world robot arms. The trajectories and smooth, minimum-jerk velocity profiles of the resulting motions resembled those of humans, verifying the biological relevance of our controller. Notably, our method achieved state-of-the-art control performance while decreasing the motion jerk by 19% to improve motion smoothness. Our work suggests that exploiting both the computational units of the brain and their connectivity may lead to the design of effective, efficient, and explainable neuromorphic controllers, paving the way for neuromorphic solutions in fully autonomous systems.

BioGrad: Biologically Plausible Gradient-Based Learning for Spiking Neural Networks

Oct 27, 2021

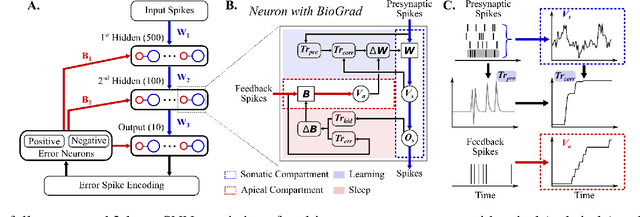

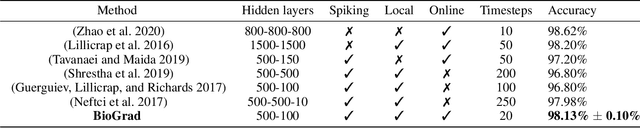

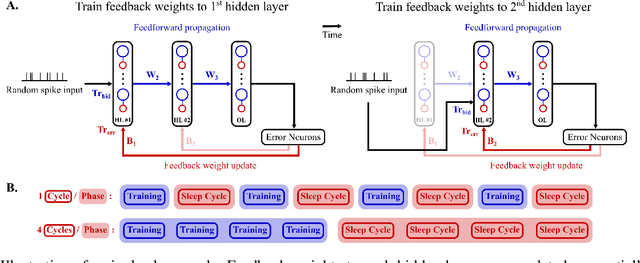

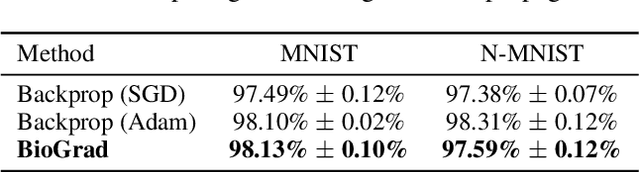

Abstract: Spiking neural networks (SNN) are delivering energy-efficient, massively parallel, and low-latency solutions to AI problems, facilitated by the emerging neuromorphic chips. To harness these computational benefits, SNN need to be trained by learning algorithms that adhere to brain-inspired neuromorphic principles, namely event-based, local, and online computations. Yet, the state-of-the-art SNN training algorithms are based on backprop, which does not follow the above principles. Due to its limited biological plausibility, the application of backprop to SNN requires non-local feedback pathways for transmitting continuous-valued errors, and relies on gradients from future timesteps. The introduction of biologically plausible modifications to backprop has helped overcome several of its limitations, but limits the degree to which backprop is approximated, which hinders its performance. We propose a biologically plausible gradient-based learning algorithm for SNN that is functionally equivalent to backprop, while adhering to all three neuromorphic principles. We introduced multi-compartment spiking neurons with local eligibility traces to compute the gradients required for learning, and a periodic "sleep" phase, during which a local Hebbian rule aligns the feedback and feedforward weights, to further improve the approximation to backprop. Our method achieved the same level of performance as backprop with multi-layer fully connected SNN on MNIST (98.13%) and the event-based N-MNIST (97.59%) datasets. We deployed our learning algorithm on Intel's Loihi to train a 1-hidden-layer network for MNIST, and obtained 93.32% test accuracy while consuming 400 times less energy per training sample than BioGrad on GPU. Our work shows that optimal learning is feasible in neuromorphic computing, and further pursuing its biological plausibility can better capture the benefits of this emerging computing paradigm.

An Astrocyte-Modulated Neuromorphic Central Pattern Generator for Hexapod Robot Locomotion on Intel's Loihi

Jun 08, 2020

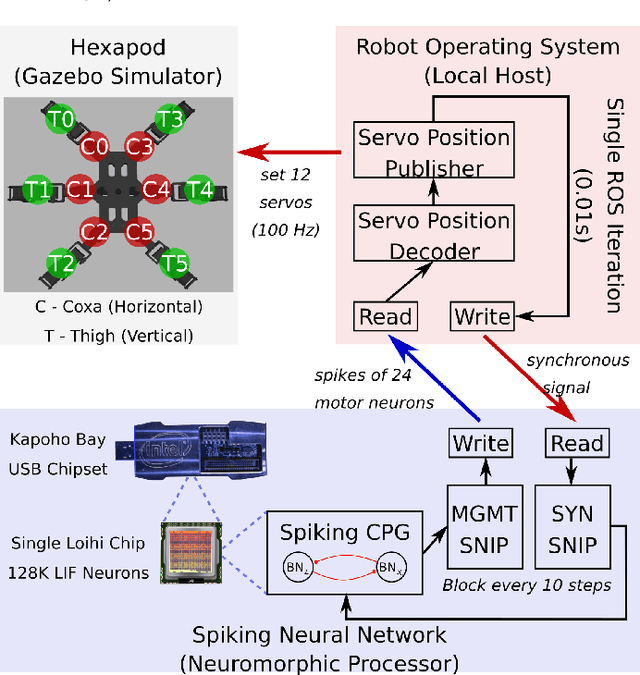

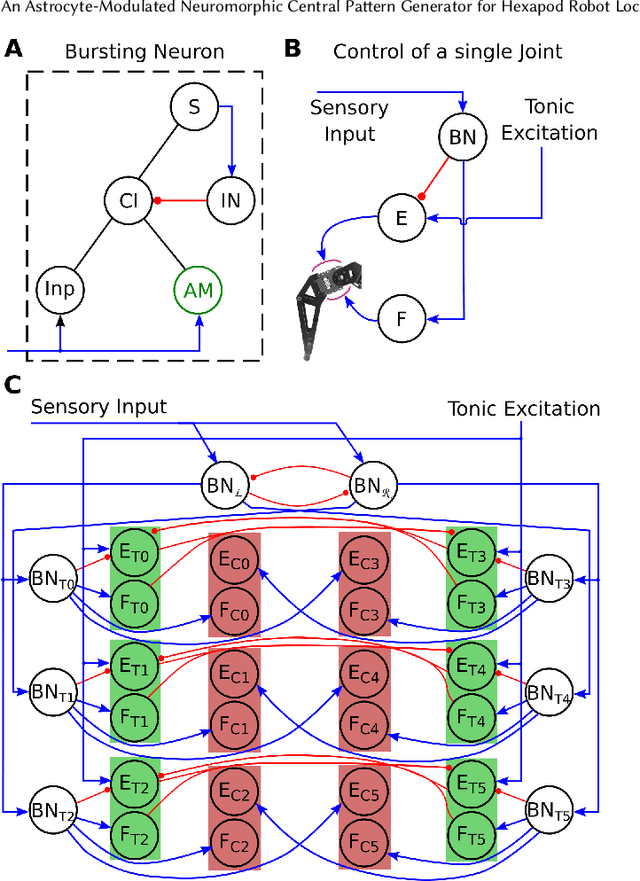

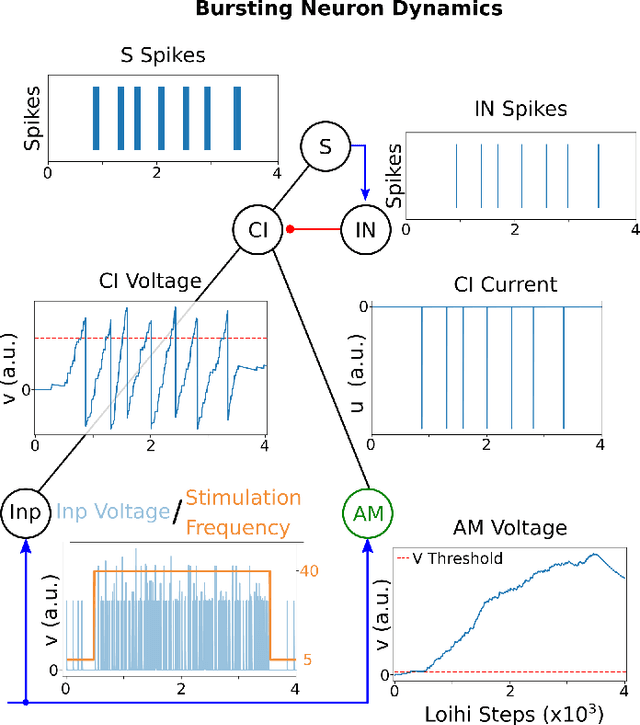

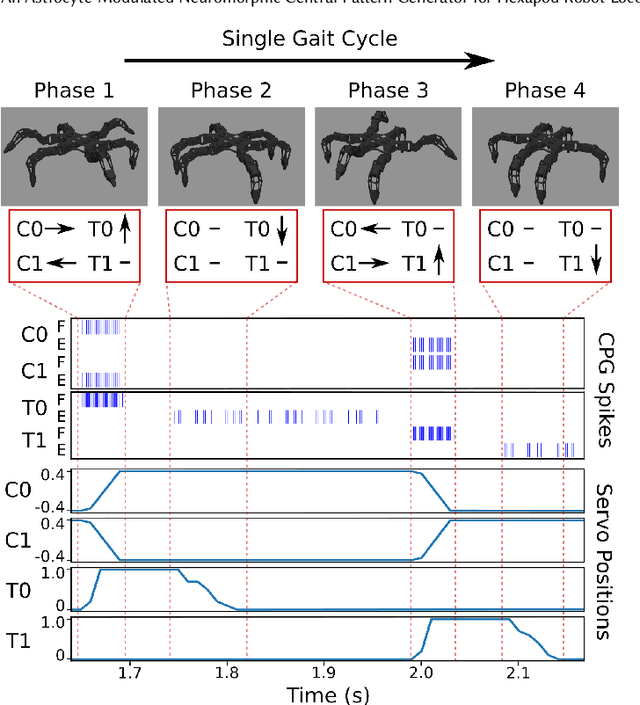

Abstract: Locomotion is a crucial challenge for legged robots that is addressed "effortlessly" by biological networks abundant in nature, named central pattern generators (CPG). The multitude of CPG network models that have so far become biomimetic robotic controllers is not applicable to the emerging neuromorphic hardware, depriving mobile robots of a robust walking mechanism that would result in inherently energy-efficient systems. Here, we propose a brain-morphic CPG controller based on a comprehensive spiking neural-astrocytic network that generates two gait patterns for a hexapod robot. Building on the recently identified astrocytic mechanisms for neuromodulation, our proposed CPG architecture is seamlessly integrated into Intel's Loihi neuromorphic chip by leveraging a real-time interaction framework between the chip and the robotic operating system (ROS) environment, which we also propose. Here, we demonstrate that a Loihi-run CPG can be used to control a walking robot with robustness to sensory noise and varying speed profiles. Our results pave the way for scaling this and other approaches towards Loihi-controlled locomotion in autonomous mobile robots.
