Konstantinos P. Michmizos

BioGrad: Biologically Plausible Gradient-Based Learning for Spiking Neural Networks

Oct 27, 2021

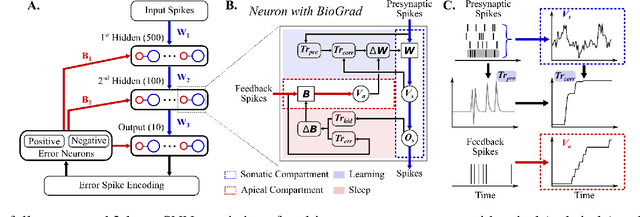

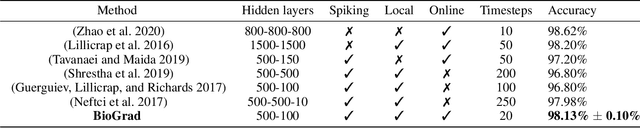

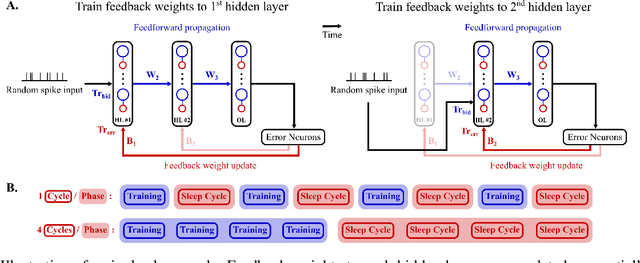

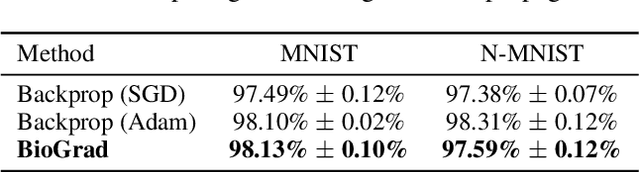

Abstract: Spiking neural networks (SNN) are delivering energy-efficient, massively parallel, and low-latency solutions to AI problems, facilitated by the emerging neuromorphic chips. To harness these computational benefits, SNN need to be trained by learning algorithms that adhere to brain-inspired neuromorphic principles, namely event-based, local, and online computations. Yet, the state-of-the-art SNN training algorithms are based on backprop, which does not follow the above principles. Due to its limited biological plausibility, the application of backprop to SNN requires non-local feedback pathways for transmitting continuous-valued errors, and relies on gradients from future timesteps. The introduction of biologically plausible modifications to backprop has helped overcome several of its limitations, but limits the degree to which backprop is approximated, which hinders its performance. We propose a biologically plausible gradient-based learning algorithm for SNN that is functionally equivalent to backprop, while adhering to all three neuromorphic principles. We introduce multi-compartment spiking neurons with local eligibility traces to compute the gradients required for learning, and a periodic "sleep" phase, during which a local Hebbian rule aligns the feedback and feedforward weights, to further improve the approximation to backprop. Our method achieved the same level of performance as backprop with multi-layer fully connected SNN on the MNIST (98.13%) and event-based N-MNIST (97.59%) datasets. We deployed our learning algorithm on Intel's Loihi to train a 1-hidden-layer network for MNIST, and obtained 93.32% test accuracy while consuming 400 times less energy per training sample than BioGrad on GPU. Our work shows that optimal learning is feasible in neuromorphic computing, and that further pursuing its biological plausibility can better capture the benefits of this emerging computing paradigm.
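The abstract does not spell out the sleep-phase Hebbian rule, so the following is only a hedged illustration of the general idea. It is a generic scheme in the spirit of the "weight mirror" approach from the feedback-alignment literature and is not BioGrad's actual rule. The layer is driven with unit-variance white noise, and the feedback matrix `B` is updated with the local Hebbian product of presynaptic input `x_i` and postsynaptic response `y_j`, plus weight decay. Because E[x_i y_j] = W[j][i], `B` drifts toward the transpose of the feedforward weights `W` in expectation. Rate (non-spiking) units and the helper names `hebbian_align` and `alignment` are assumptions made for this sketch.

```python
import math
import random

def matvec(W, x):
    """Multiply a row-major matrix W (list of rows) by the vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def hebbian_align(W, steps=5000, eta=0.005, decay=1.0, seed=0):
    """Hypothetical sleep-phase sketch: noise-driven Hebbian update of feedback weights.

    W: feedforward weights, shape (n_out, n_in), as a list of lists.
    Returns B, shape (n_in, n_out). With unit-variance noise and decay=1.0,
    the expected fixed point of the update is B = W transposed.
    """
    rng = random.Random(seed)
    n_out, n_in = len(W), len(W[0])
    # Start from small random feedback weights, unrelated to W.
    B = [[rng.gauss(0, 0.1) for _ in range(n_out)] for _ in range(n_in)]
    for _ in range(steps):
        x = [rng.gauss(0, 1) for _ in range(n_in)]  # presynaptic white noise
        y = matvec(W, x)                             # postsynaptic response
        for i in range(n_in):
            for j in range(n_out):
                # Local Hebbian term x_i * y_j minus weight decay on B[i][j].
                B[i][j] += eta * (x[i] * y[j] - decay * B[i][j])
    return B

def alignment(W, B):
    """Cosine similarity between B and the transpose of W (1.0 = perfectly aligned)."""
    wt = [W[j][i] for i in range(len(W[0])) for j in range(len(W))]
    b = [B[i][j] for i in range(len(B)) for j in range(len(B[0]))]
    dot = sum(p * q for p, q in zip(wt, b))
    norm = math.sqrt(sum(p * p for p in wt)) * math.sqrt(sum(q * q for q in b))
    return dot / norm

W = [[0.5, -1.0, 0.3, 0.8], [-0.7, 0.2, 1.1, -0.4], [0.9, 0.6, -0.5, 0.1]]
B = hebbian_align(W)
print(round(alignment(W, B), 3))
```

The decay term keeps `B` bounded. Setting it equal to the noise variance makes the fixed point exactly `W` transposed rather than a scaled copy.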

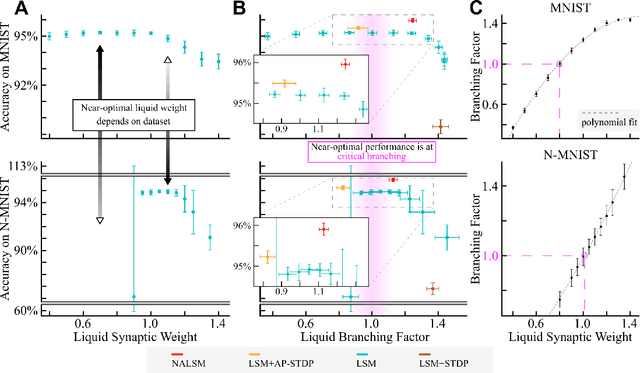

Increasing Liquid State Machine Performance with Edge-of-Chaos Dynamics Organized by Astrocyte-modulated Plasticity

Oct 26, 2021

Abstract: The liquid state machine (LSM) combines low training complexity and biological plausibility, which has made it an attractive machine learning framework for edge and neuromorphic computing paradigms. Originally proposed as a model of brain computation, the LSM tunes its internal weights without backpropagation of gradients, which results in lower performance compared to multi-layer neural networks. Recent findings in neuroscience suggest that astrocytes, a long-neglected non-neuronal brain cell, modulate synaptic plasticity and brain dynamics, tuning brain networks to the vicinity of the computationally optimal critical phase transition between order and chaos. Inspired by this disruptive understanding of how brain networks self-tune, we propose the neuron-astrocyte liquid state machine (NALSM), which addresses under-performance through self-organized near-critical dynamics. Similar to its biological counterpart, the astrocyte model integrates neuronal activity and provides global feedback to spike-timing-dependent plasticity (STDP), which self-organizes NALSM dynamics around a critical branching factor that is associated with the edge-of-chaos. We demonstrate that NALSM achieves state-of-the-art accuracy versus comparable LSM methods, without the need for data-specific hand-tuning. With a top accuracy of 97.61% on MNIST, 97.51% on N-MNIST, and 85.84% on Fashion-MNIST, NALSM achieved performance comparable to that of current fully-connected multi-layer spiking neural networks trained via backpropagation. Our findings suggest that the further development of brain-inspired machine learning methods has the potential to reach the performance of deep learning, with the added benefits of supporting robust and energy-efficient neuromorphic computing on the edge.

* 23 pages, 9 figures, NeurIPS 2021
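The NALSM abstract refers to a critical branching factor. The following hedged illustration is not the astrocyte model itself. It shows one naive way to estimate a branching ratio from recorded network activity: average the ratio of spike counts in successive time bins. In branching-process terms, a ratio of 1 marks the critical point. Below 1, activity tends to die out (subcritical); above 1, it tends to grow (supercritical). The function name, the binning, and the choice of this simple estimator are assumptions for illustration.

```python
def branching_ratio(spike_counts):
    """Naive branching-ratio estimate from per-bin network spike counts.

    spike_counts: total number of spikes in each consecutive time bin.
    Each bin's activity is treated as the "descendants" of the previous
    bin's "ancestors"; bins with zero ancestors are skipped.
    """
    ratios = [nxt / cur
              for cur, nxt in zip(spike_counts, spike_counts[1:])
              if cur > 0]
    if not ratios:
        raise ValueError("need a non-empty bin followed by at least one more bin")
    return sum(ratios) / len(ratios)

print(branching_ratio([10, 10, 10, 10]))  # steady activity
print(branching_ratio([8, 4, 2, 1]))      # decaying activity
```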

Deep Reinforcement Learning with Population-Coded Spiking Neural Network for Continuous Control

Oct 19, 2020

Abstract: The energy-efficient control of mobile robots is crucial as their real-world applications increasingly involve high-dimensional observation and action spaces, whose complexity cannot be offset by limited on-board resources. An emerging non-Von Neumann model of intelligence, where spiking neural networks (SNNs) are run on neuromorphic processors, is regarded as an energy-efficient and robust alternative to the state-of-the-art real-time robotic controllers for low-dimensional control tasks. The challenge now for this new computing paradigm is to scale so that it can keep up with real-world tasks. To do so, SNNs need to overcome the inherent limitations of their training, namely the limited ability of their spiking neurons to represent information and the lack of effective learning algorithms. Here, we propose a population-coded spiking actor network (PopSAN) trained in conjunction with a deep critic network using deep reinforcement learning (DRL). The population coding scheme dramatically increased the representation capacity of the network, and the hybrid learning combined the training advantages of deep networks with the energy-efficient inference of spiking networks. To show the general applicability of our approach, we integrated it with a spectrum of both on-policy and off-policy DRL algorithms. We deployed the trained PopSAN on Intel's Loihi neuromorphic chip and benchmarked our method against the mainstream DRL algorithms for continuous control. To allow for a fair comparison among all methods, we validated them on OpenAI gym tasks. Our Loihi-run PopSAN consumed 140 times less energy per inference than the deep actor network on Jetson TX2, with the same level of performance. Our results support the efficiency of neuromorphic controllers and suggest our hybrid RL as an alternative to deep learning when both energy efficiency and robustness are important.
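Population coding spreads each scalar observation across several neurons. The abstract does not specify PopSAN's encoder, so the following is a hedged, generic sketch. It assumes Gaussian receptive fields with evenly spaced centres over each dimension's range, which is a common choice but is assumed here. Each observation dimension is mapped to `pop_size` stimulation strengths that could then drive spiking input neurons. The conversion to spikes is omitted, and `population_encode` and its parameters are hypothetical.

```python
import math

def population_encode(obs, low, high, pop_size=5, sigma=None):
    """Encode each observation dimension with a population of Gaussian receptive fields.

    obs, low, high: equal-length sequences (value and range per dimension).
    pop_size: neurons per dimension (must be >= 2); centres are evenly
    spaced over [low, high]. sigma defaults to the spacing between centres.
    Returns a flat list of len(obs) * pop_size strengths in (0, 1].
    """
    if pop_size < 2:
        raise ValueError("pop_size must be at least 2")
    encoded = []
    for x, lo, hi in zip(obs, low, high):
        step = (hi - lo) / (pop_size - 1)
        width = sigma if sigma is not None else step
        for k in range(pop_size):
            mu = lo + k * step  # receptive-field centre of neuron k
            encoded.append(math.exp(-0.5 * ((x - mu) / width) ** 2))
    return encoded

enc = population_encode([0.0, 1.0], low=[-1.0, -1.0], high=[1.0, 1.0])
print([round(v, 3) for v in enc])
```

A single value thus activates a small group of neighbouring neurons with graded strength, rather than being carried by one neuron's firing rate.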

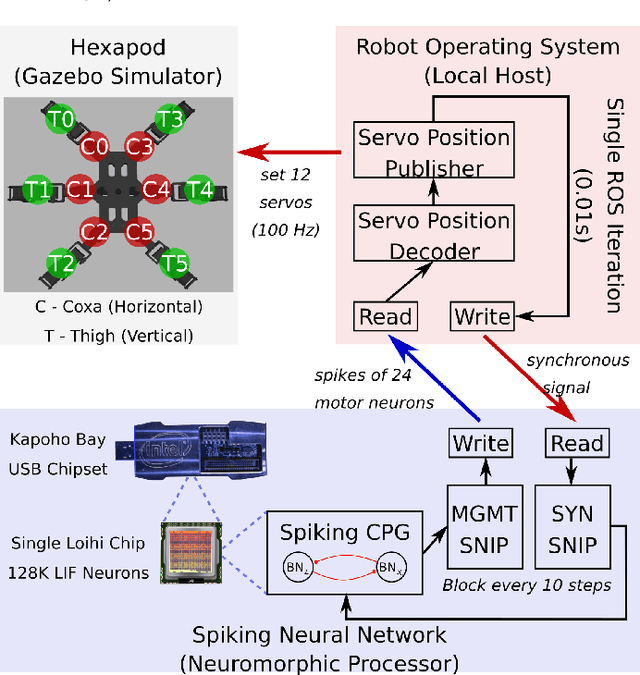

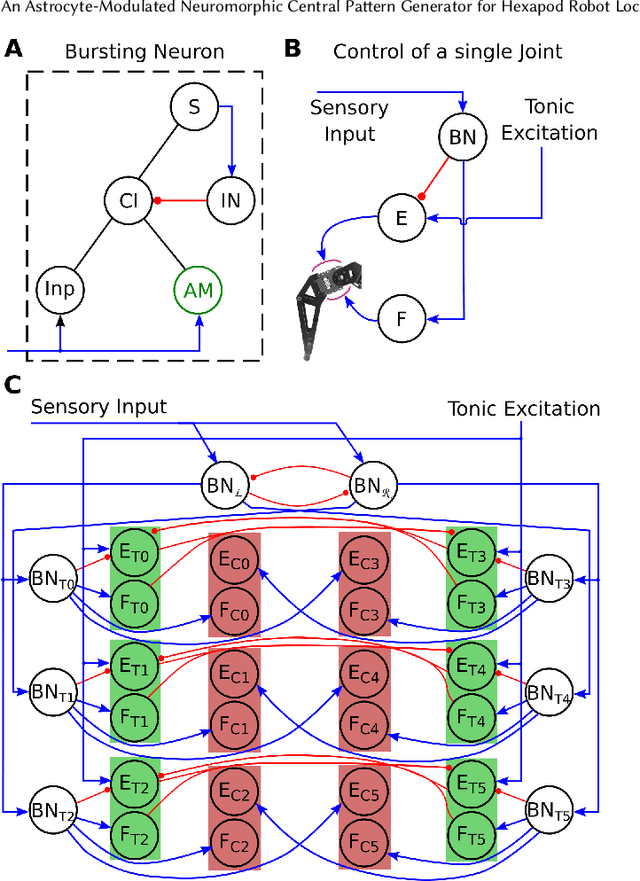

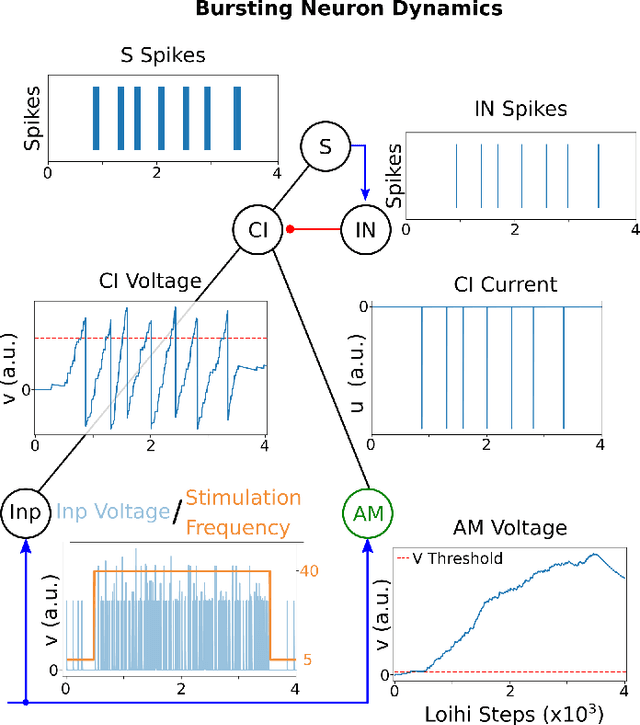

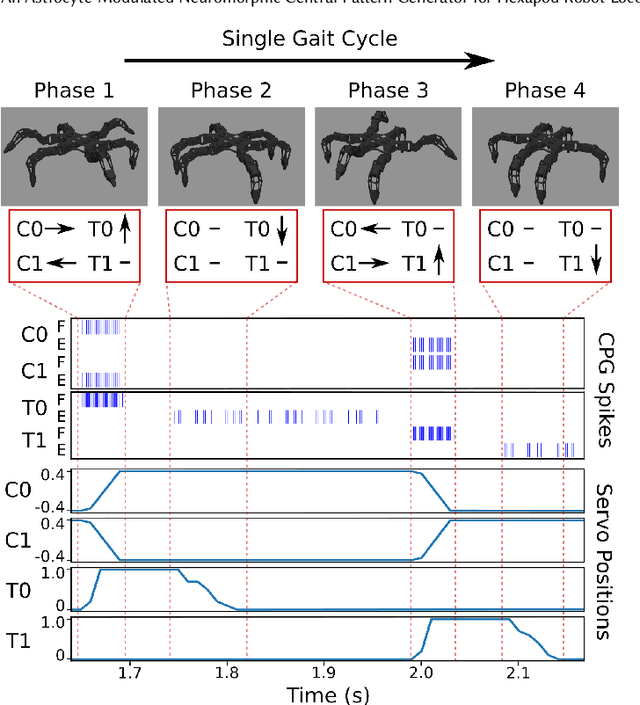

An Astrocyte-Modulated Neuromorphic Central Pattern Generator for Hexapod Robot Locomotion on Intel's Loihi

Jun 08, 2020

Abstract: Locomotion is a crucial challenge for legged robots that is addressed "effortlessly" by biological networks abundant in nature, named central pattern generators (CPG). The many CPG network models that have so far been used as biomimetic robotic controllers are not applicable to the emerging neuromorphic hardware, depriving mobile robots of a robust walking mechanism that would result in inherently energy-efficient systems. Here, we propose a brain-morphic CPG controller based on a comprehensive spiking neural-astrocytic network that generates two gait patterns for a hexapod robot. Building on the recently identified astrocytic mechanisms for neuromodulation, our proposed CPG architecture is seamlessly integrated into Intel's Loihi neuromorphic chip by leveraging a real-time interaction framework between the chip and the Robot Operating System (ROS) environment, which we also propose. We demonstrate that a Loihi-run CPG can be used to control a walking robot with robustness to sensory noise and varying speed profiles. Our results pave the way for scaling this and other approaches towards Loihi-controlled locomotion in autonomous mobile robots.

Reinforcement co-Learning of Deep and Spiking Neural Networks for Energy-Efficient Mapless Navigation with Neuromorphic Hardware

Mar 02, 2020

Abstract: Energy-efficient mapless navigation is crucial for mobile robots as they explore unknown environments with limited on-board resources. Although recent deep reinforcement learning (DRL) approaches have been successfully applied to navigation, their high energy consumption limits their use in many robotic applications. Here, we propose a neuromorphic approach that combines the energy efficiency of spiking neural networks with the optimality of DRL to learn control policies for mapless navigation. Our hybrid framework, Spiking deep deterministic policy gradient (SDDPG), consists of a spiking actor network (SAN) and a deep critic network, where the two networks were trained jointly using gradient descent. The trained SAN was deployed on Intel's Loihi neuromorphic processor. The co-learning enabled synergistic information exchange between the two networks, allowing them to overcome each other's limitations through shared representation learning. When validated on both simulated and real-world complex environments, our method on Loihi not only consumed 75 times less energy per inference than DDPG on Jetson TX2, but also achieved a 1% to 4.2% higher rate of successfully navigating to the goal, depending on the forward-propagation timestep size. These results reinforce our ongoing effort to design brain-inspired algorithms for controlling autonomous robots with neuromorphic hardware.

Machine Learning for Motor Learning: EEG-based Continuous Assessment of Cognitive Engagement for Adaptive Rehabilitation Robots

Feb 19, 2020

Abstract: Although cognitive engagement (CE) is crucial for motor learning, it remains underutilized in rehabilitation robots, partly because its assessment currently relies on subjective and gross measurements taken intermittently. Here, we propose an end-to-end computational framework that assesses CE in real time, using electroencephalography (EEG) signals as objective measurements. The framework consists of i) a deep convolutional neural network (CNN) that extracts task-discriminative spatiotemporal EEG features to predict the level of CE for two classes (cognitively engaged vs. disengaged); and ii) a novel sliding window method that predicts continuous levels of CE in real time. We evaluated our framework on 8 subjects using an in-house Go/No-Go experiment that adapted its gameplay parameters to induce cognitive fatigue. The proposed CNN had an average leave-one-out accuracy of 88.13%. The CE prediction correlated well with a commonly used behavioral metric based on self-reports taken every 5 minutes (ρ = 0.93). Our results objectify CE in real time and pave the way for using CE as a rehabilitation parameter for tailoring robotic therapy to each patient's needs and skills.
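The abstract describes turning a two-class (engaged vs. disengaged) classifier into continuous CE levels using a sliding window, but gives no details. The following is a hypothetical, generic sketch of that kind of step and not the authors' method. It assumes the CNN emits a probability of "engaged" for each EEG segment and averages the most recent `window` probabilities into a continuous score in [0, 1]. The function name and window length are assumptions.

```python
from collections import deque

def continuous_engagement(probs, window=5):
    """Smooth per-segment P(engaged) outputs into a continuous CE score.

    probs: classifier probabilities, one per EEG segment, in time order.
    Returns one score per segment: the mean of the latest `window`
    probabilities (fewer at the start, before the window fills).
    """
    buf = deque(maxlen=window)  # oldest value drops out automatically
    scores = []
    for p in probs:
        buf.append(p)
        scores.append(sum(buf) / len(buf))
    return scores

print(continuous_engagement([0.9, 0.8, 0.2, 0.1, 0.1], window=3))
```

Because each score depends only on past segments, the computation can run online as new EEG segments arrive.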

Deep Learning of Movement Intent and Reaction Time for EEG-informed Adaptation of Rehabilitation Robots

Feb 18, 2020

Abstract: Mounting evidence suggests that adaptation is a crucial mechanism for rehabilitation robots in promoting motor learning. Yet, it is commonly based on robot-derived movement kinematics, which are a rather subjective measure of performance, especially in the presence of a sensorimotor impairment. Here, we propose a deep convolutional neural network (CNN) that uses electroencephalography (EEG) as an objective measurement of two kinematic components that are typically used to assess motor learning and thereby adaptation: i) the intent to initiate a goal-directed movement, and ii) the reaction time (RT) of that movement. We evaluated our CNN on data acquired from an in-house experiment where 13 subjects moved a rehabilitation robotic arm in four directions on a plane, in response to visual stimuli. Our CNN achieved average test accuracies of 80.08% and 79.82% in a binary classification of the intent (intent vs. no intent) and RT (slow vs. fast), respectively. Our results demonstrate how individual movement components implicated in distinct types of motor learning can be predicted from synchronized EEG data acquired before the start of the movement. Our approach can, therefore, inform robotic adaptation in real time and has the potential to further improve one's ability to perform the rehabilitation task.

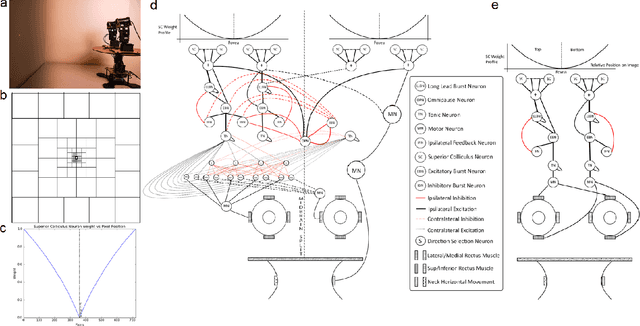

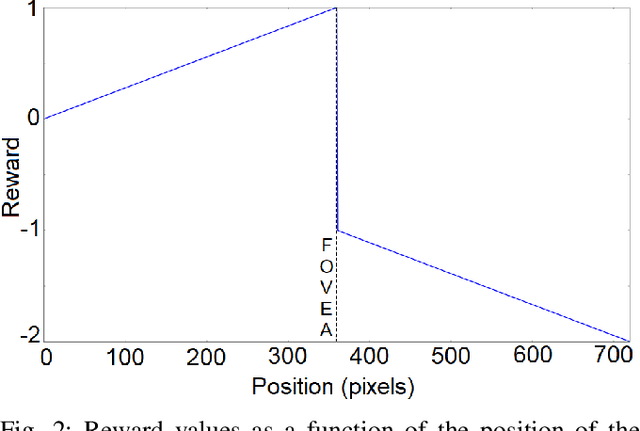

A Spiking Neural Network Emulating the Structure of the Oculomotor System Requires No Learning to Control a Biomimetic Robotic Head

Feb 18, 2020

Abstract: Robotic vision requires real-time processing of fast-varying, noisy information in a continuously changing environment. In a real-world environment, convenient assumptions, such as static camera systems and deep learning algorithms devouring high volumes of ideally slightly-varying data, rarely hold. Leveraging recent studies on the neural connectome associated with eye movements, we designed a neuromorphic oculomotor controller and placed it at the heart of our in-house biomimetic robotic head prototype. The controller is unique in the sense that (1) all data are encoded and processed by a spiking neural network (SNN), and (2) by mimicking the associated brain areas' connectivity, the SNN required no training to operate. A biologically constrained Hebbian learning rule further improved the SNN's performance in tracking a moving target. Here, we report the tracking performance of the robotic head and show that the robotic eye kinematics are similar to those reported in human eye studies. This work contributes to our ongoing effort to develop energy-efficient neuromorphic SNN and harness their emerging intelligence to control biomimetic robots with versatility and robustness.

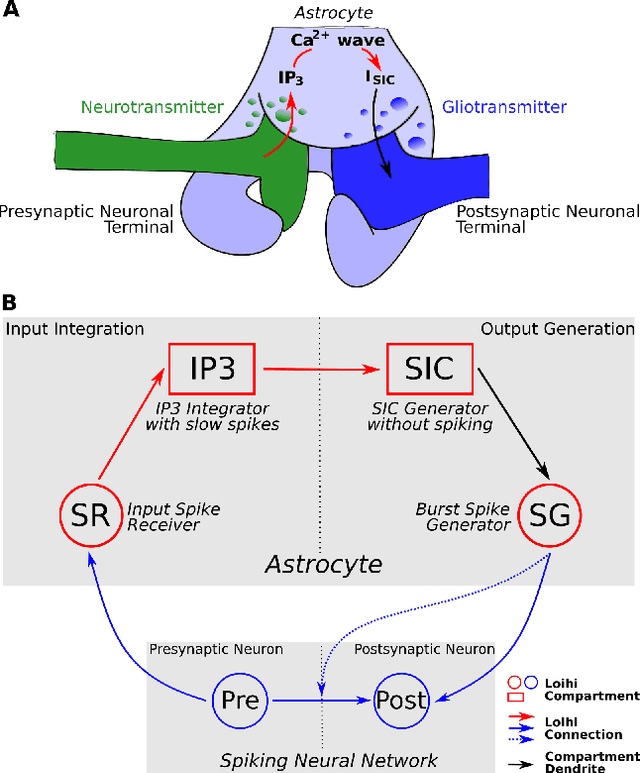

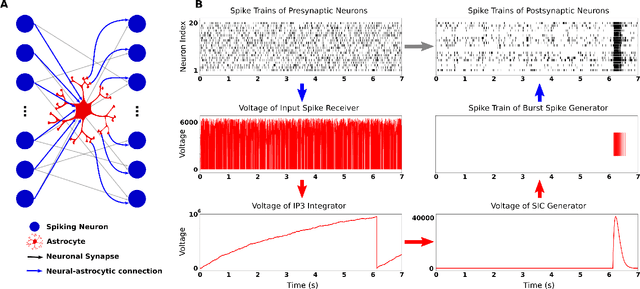

Introducing Astrocytes on a Neuromorphic Processor: Synchronization, Local Plasticity and Edge of Chaos

Jul 02, 2019

Abstract: While there is still a lot to learn about astrocytes and the neuromodulatory role associated with their spatial and temporal integration of synaptic activity, introducing into neuromorphic hardware a processing unit in addition to neurons is timely, facilitating both their computational exploration in basic science questions and their exploitation in real-world applications. Here, we present an astrocytic module that enables the development of a spiking Neuronal-Astrocytic Network (SNAN) on Intel's Loihi neuromorphic chip. The basis of our module is an end-to-end biophysically plausible compartmental model of an astrocyte that simulates how intracellular activity may encode synaptic activity in space and time. To demonstrate the functional role of astrocytes in SNANs, we describe how an astrocyte may sense and induce activity-dependent neuronal synchronization, endow spike-timing-dependent plasticity (STDP) with single-shot learning capabilities, and sense the transition between ordered and chaotic activity in the neuronal component of an SNAN. Our astrocytic module may serve as a natural extension for neuromorphic hardware by mimicking the distinct computational roles of its biological counterpart.

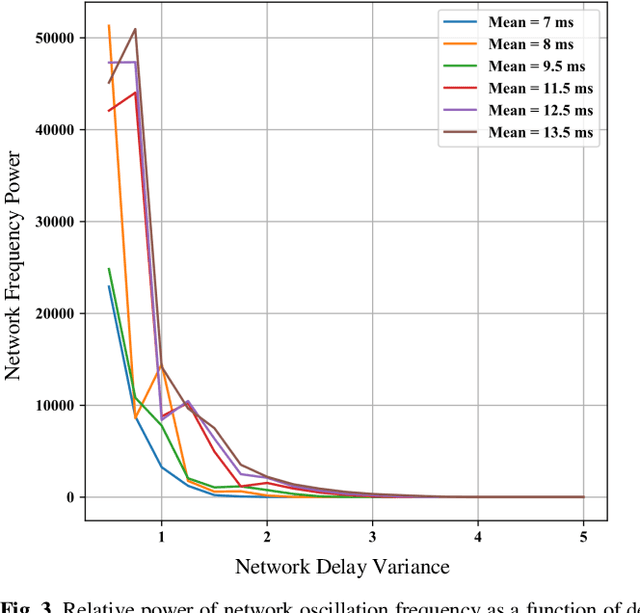

Axonal Conduction Velocity Impacts Neuronal Network Oscillations

Mar 22, 2019

Abstract: Increasing experimental evidence suggests that axonal action potential conduction velocity is a highly adaptive parameter in the adult central nervous system. Yet, the effects of this newfound plasticity on global brain dynamics are poorly understood. In this work, we analyzed oscillations in biologically plausible neuronal networks with different conduction velocity distributions. Changes of 1-2 ms in the network's mean signal transmission time resulted in substantial changes in network oscillation frequency, in the range of 0-120 Hz. Our results suggest that changes in axonal conduction velocity may significantly affect both the frequency and synchrony of brain rhythms, which have well-established connections to learning, memory, and other cognitive processes.
