Ali Bahrami Rad

Department of Biomedical Informatics, Emory University, Atlanta, USA

Detection of Chagas Disease from the ECG: The George B. Moody PhysioNet Challenge 2025

Oct 02, 2025

Abstract: Objective: Chagas disease is a parasitic infection that is endemic to South America, Central America, and, more recently, the U.S., and is primarily transmitted by insects. Chronic Chagas disease can cause cardiovascular diseases and digestive problems. Serological testing capacities for Chagas disease are limited, but Chagas cardiomyopathy often manifests in ECGs, providing an opportunity to prioritize patients for testing and treatment. Approach: The George B. Moody PhysioNet Challenge 2025 invites teams to develop algorithmic approaches for identifying Chagas disease from electrocardiograms (ECGs). Main results: This Challenge provides multiple innovations. First, we leveraged several datasets with labels from patient reports and serological testing, providing a large dataset with weak labels and smaller datasets with strong labels. Second, we augmented the data to support model robustness and generalizability to unseen data sources. Third, we applied an evaluation metric that captured the local serological testing capacity for Chagas disease to frame the machine learning problem as a triage task. Significance: Over 630 participants from 111 teams submitted over 1300 entries during the Challenge, representing diverse approaches from academia and industry worldwide.
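
The abstract says only that the metric captures local serological testing capacity. Below is a minimal sketch of such a triage score. It assumes the capacity is a fixed fraction of patients; the `capacity` parameter is a hypothetical placeholder, not the Challenge's official value or scoring code. The sketch ranks ECGs by predicted probability and reports the fraction of all Chagas-positive patients that fall inside the testable group:

```python
import numpy as np

def triage_true_positive_rate(labels, probabilities, capacity=0.05):
    """Fraction of all positive cases found in the top `capacity` fraction
    of records when records are ranked by predicted probability.

    `capacity` is a hypothetical stand-in for the local serological testing
    capacity. Ties in `probabilities` are broken by record order here, which
    an official scorer may handle differently.
    """
    labels = np.asarray(labels, dtype=bool)
    probabilities = np.asarray(probabilities, dtype=float)
    n_positive = labels.sum()
    if n_positive == 0:
        return float("nan")
    # Number of patients that can be sent for serological testing.
    n_tested = int(np.ceil(capacity * labels.size))
    # Indices of the highest-probability records, in descending order.
    top = np.argsort(-probabilities, kind="stable")[:n_tested]
    return float(labels[top].sum() / n_positive)
```

Unlike AUROC, this score ignores how the model orders patients outside the testable group. That is what makes it a triage measure rather than a global discrimination measure.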

A Data-Driven Gaussian Process Filter for Electrocardiogram Denoising

Jan 06, 2023

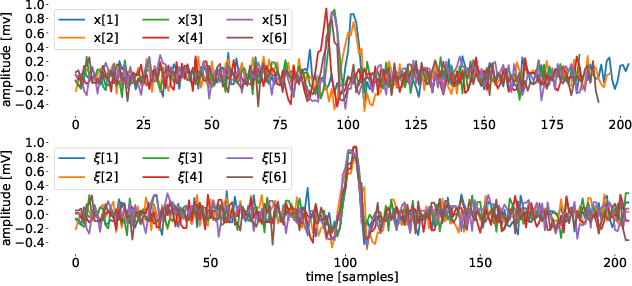

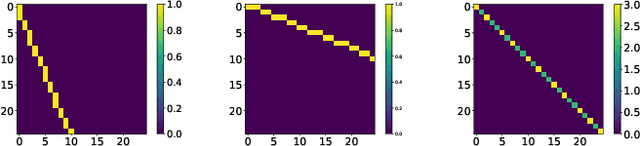

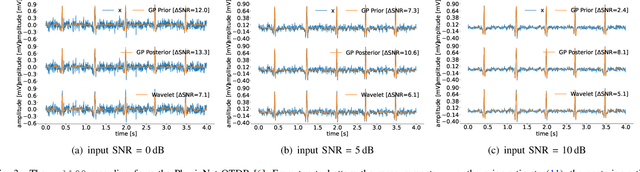

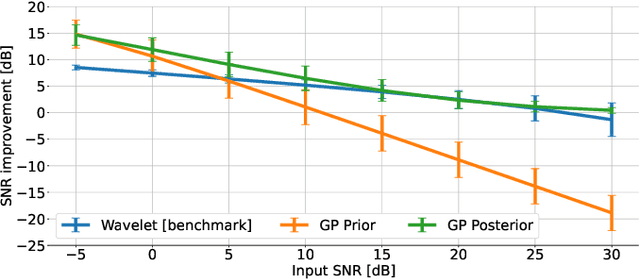

Abstract: Objective: Gaussian process (GP)-based filters, which have been effectively used for various applications including electrocardiogram (ECG) filtering, can be computationally demanding, and the choice of their hyperparameters is typically ad hoc. Methods: We develop a data-driven GP filter to address both issues, using the notion of the ECG phase domain, a time-warped representation of the ECG beats onto a fixed number of samples with aligned R-peaks, which is assumed to follow a Gaussian distribution. Under this assumption, the computation of the sample mean and covariance matrix is simplified, enabling an efficient implementation of the GP filter in a data-driven manner, with no ad hoc hyperparameters. The proposed filter is evaluated on the PhysioNet QT Database and compared with a state-of-the-art wavelet-based filter. The performance is evaluated by measuring the signal-to-noise ratio (SNR) improvement of the filter at SNR levels ranging from -5 to 30 dB, in 5 dB steps, using additive noise. For a clinical evaluation, the error between the QT-intervals estimated from the original and filtered signals is measured and compared with the benchmark filter. Results: The proposed GP filter is shown to outperform the benchmark filter at all tested noise levels. It also outperforms the state-of-the-art filter in terms of QT-interval estimation error bias and variance. Conclusion: The proposed GP filter is a versatile technique for preprocessing the ECG in clinical and research applications, is applicable to ECGs of arbitrary lengths and sampling frequencies, and provides confidence intervals for its performance.
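
Below is a minimal NumPy sketch of the phase-domain idea, under these assumptions:

- R-peaks are already detected.
- Each R-to-R segment is linearly resampled onto a fixed number of phase samples.
- The noise is white with a known variance `noise_var`.
- The GP prior mean and covariance are the sample statistics across beats.

The function names are hypothetical. Clipping the eigenvalues keeps the estimated signal covariance positive semidefinite; this is an illustrative choice, not necessarily the authors' approach. The filtered beat is the standard GP posterior mean `mu + K_s (K_s + noise_var * I)^-1 (y - mu)`. Mapping beats back to the original time axis is omitted.

```python
import numpy as np

def to_phase_domain(ecg, r_peaks, n_phase=128):
    """Resample each R-to-R segment onto `n_phase` samples (R-peak at phase 0)."""
    beats = []
    for start, stop in zip(r_peaks[:-1], r_peaks[1:]):
        t_old = np.arange(start, stop + 1)
        t_new = np.linspace(start, stop, n_phase, endpoint=False)
        beats.append(np.interp(t_new, t_old, ecg[start:stop + 1]))
    return np.vstack(beats)

def gp_filter_beats(beats, noise_var):
    """GP posterior mean of each beat, with a prior mean and covariance
    estimated from the beats themselves (rows = beats, columns = phase)."""
    mu = beats.mean(axis=0)
    # The sample covariance of noisy beats estimates K_signal + noise_var * I.
    eigval, eigvec = np.linalg.eigh(np.cov(beats, rowvar=False))
    # Remove the white-noise floor; clip so K_signal stays positive semidefinite.
    signal_eig = np.clip(eigval - noise_var, 0.0, None)
    # K_s (K_s + noise_var I)^-1 expressed in the eigenbasis (a symmetric matrix).
    gain = eigvec @ np.diag(signal_eig / (signal_eig + noise_var)) @ eigvec.T
    return mu + (beats - mu) @ gain

def snr_db(clean, estimate):
    """SNR of `estimate` relative to the known clean signal, in dB."""
    return 10 * np.log10(np.sum(clean ** 2) / np.sum((clean - estimate) ** 2))
```

In an additive-noise experiment like the one described, the SNR improvement is `snr_db(clean, filtered) - snr_db(clean, noisy)`.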

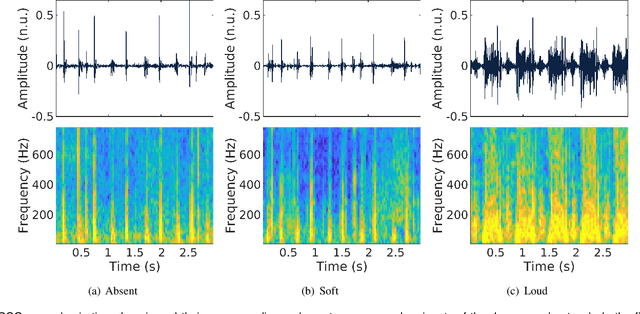

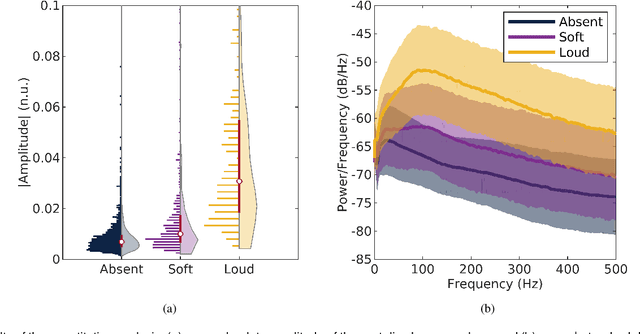

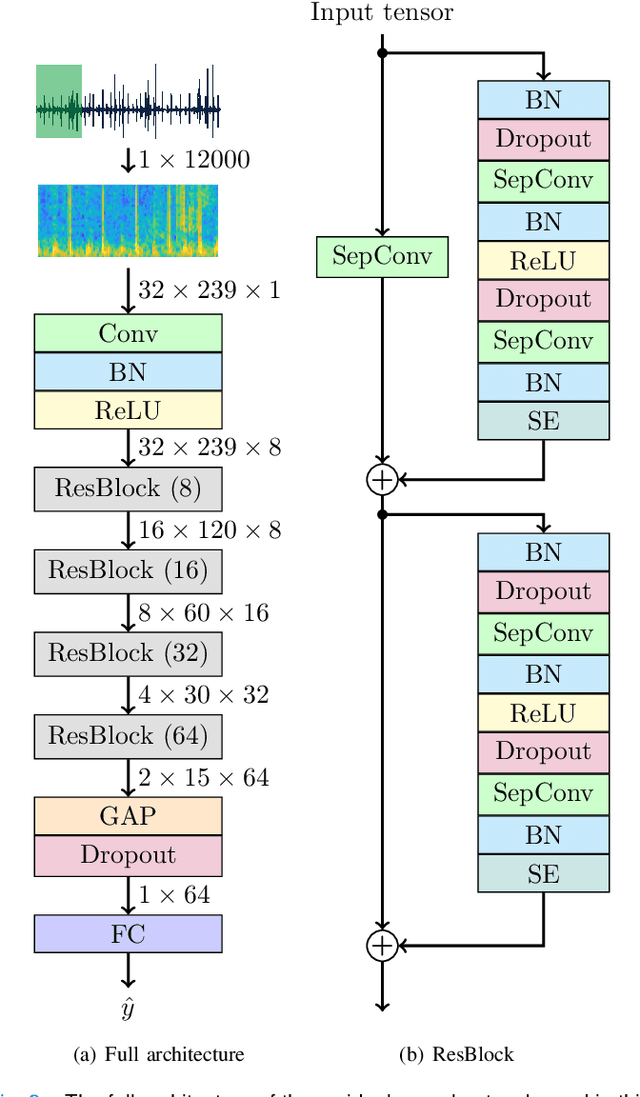

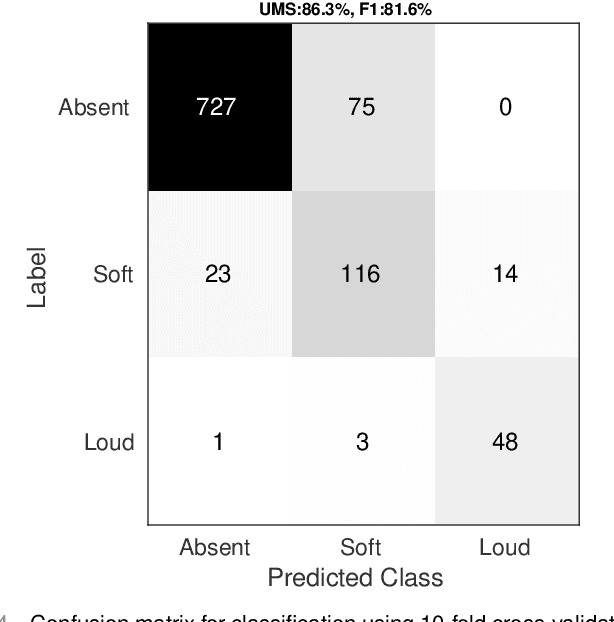

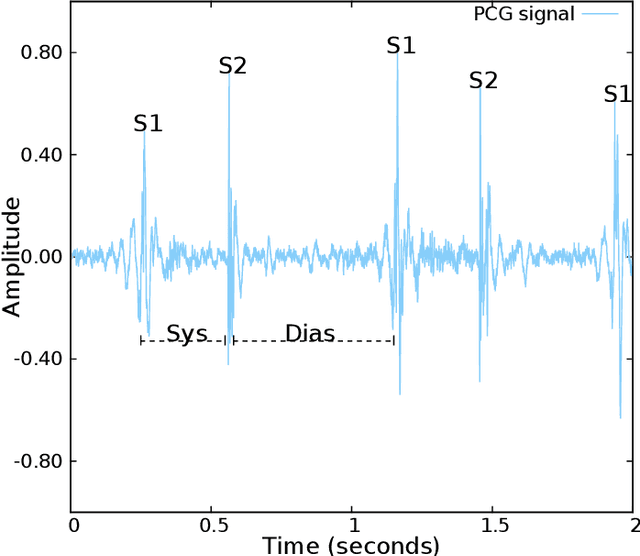

Beyond Heart Murmur Detection: Automatic Murmur Grading from Phonocardiogram

Sep 27, 2022

Abstract: Objective: Murmurs are abnormal heart sounds, identified by experts through cardiac auscultation. The murmur grade, a quantitative measure of the murmur intensity, is strongly correlated with the patient's clinical condition. This work aims to estimate each patient's murmur grade (i.e., absent, soft, loud) from phonocardiograms (PCGs) recorded at multiple auscultation locations in a large population of pediatric patients from a low-resource rural area. Methods: The Mel spectrogram representation of each PCG recording is given to an ensemble of 15 convolutional residual neural networks with channel-wise attention mechanisms to classify each PCG recording. The final murmur grade for each patient is derived from the proposed decision rule, considering all estimated labels for the available recordings. The proposed method is cross-validated on a dataset of 3456 PCG recordings from 1007 patients using stratified ten-fold cross-validation. Additionally, the method was tested on a hidden test set comprising 1538 PCG recordings from 442 patients. Results: The overall cross-validation performances for patient-level murmur grading are 86.3% and 81.6% in terms of the unweighted average of sensitivities and F1-scores, respectively. The sensitivities (and F1-scores) for absent, soft, and loud murmurs are 90.7% (93.6%), 75.8% (66.8%), and 92.3% (84.2%), respectively. On the test set, the algorithm achieves an unweighted average of sensitivities of 80.4% and an F1-score of 75.8%. Conclusions: This study provides a potential approach for algorithmic pre-screening in low-resource settings with relatively high expert screening costs. Significance: The proposed method represents a significant step beyond detection of murmurs, providing characterization of intensity, which may enable an enhanced classification of clinical outcomes.
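
The headline figures are unweighted (macro) averages over the three grades, so each grade counts equally regardless of how many patients it contains. Below is a minimal sketch of that computation from patient-level labels. The class names are illustrative, and the paper's patient-level decision rule is not reproduced.

```python
import numpy as np

def macro_sensitivity_and_f1(y_true, y_pred, classes=("Absent", "Soft", "Loud")):
    """Unweighted (macro) averages of per-class sensitivity and F1-score."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    sensitivities, f1_scores = [], []
    for c in classes:
        tp = np.sum((y_true == c) & (y_pred == c))
        fn = np.sum((y_true == c) & (y_pred != c))
        fp = np.sum((y_true != c) & (y_pred == c))
        # Sensitivity (recall) for class c; 0 if the class never occurs.
        sensitivities.append(tp / (tp + fn) if tp + fn else 0.0)
        # F1 = 2TP / (2TP + FP + FN), the harmonic mean of precision and recall.
        f1_scores.append(2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0)
    return float(np.mean(sensitivities)), float(np.mean(f1_scores))
```

As a consistency check, the mean of the three reported per-class sensitivities, (90.7 + 75.8 + 92.3) / 3 ≈ 86.3%, matches the reported unweighted average.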

Mythological Medical Machine Learning: Boosting the Performance of a Deep Learning Medical Data Classifier Using Realistic Physiological Models

Dec 28, 2021

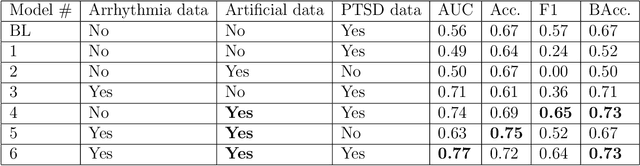

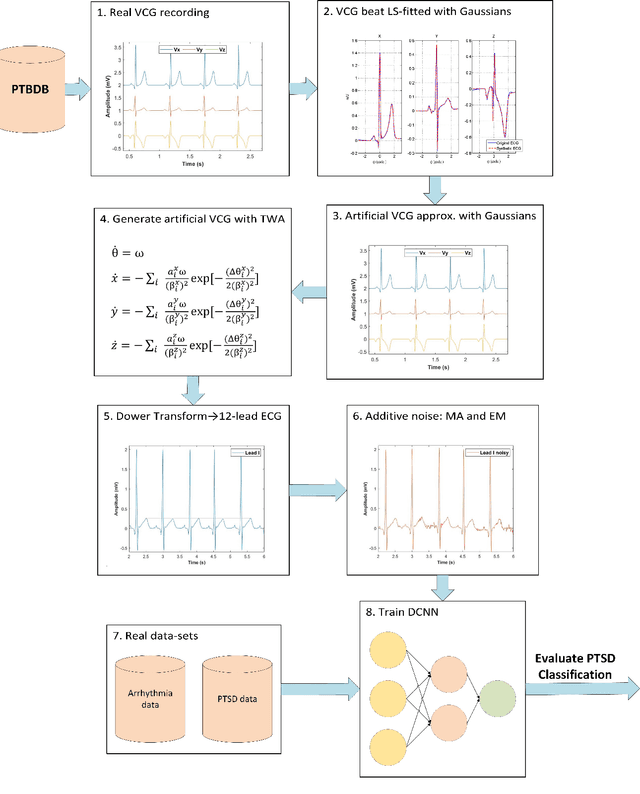

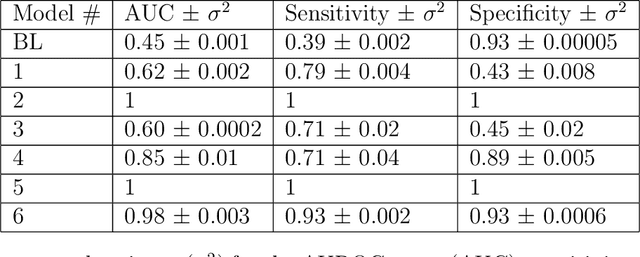

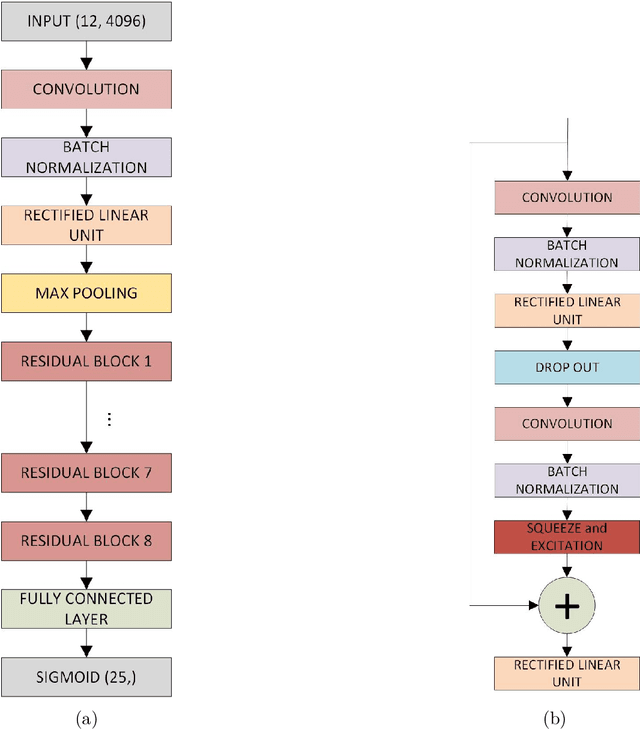

Abstract: Objective: To determine whether a realistic but computationally efficient model of the electrocardiogram can be used to pre-train a deep neural network (DNN) with a wide range of morphologies and abnormalities specific to a given condition (T-wave alternans (TWA) as a result of post-traumatic stress disorder, or PTSD) and significantly boost performance on a small database of rare individuals. Approach: Using a previously validated artificial ECG model, we generated 180,000 artificial ECGs with or without significant TWA, with varying heart rate, breathing rate, TWA amplitude, and ECG morphology. A DNN, trained on over 70,000 patients to classify 25 different rhythms, had its output layer modified to a binary class (TWA or no-TWA, or equivalently, PTSD or no-PTSD), and transfer learning was performed on the artificial ECGs. In a final transfer learning step, the DNN was trained and cross-validated on ECGs from 12 PTSD patients and 24 controls for all combinations of using the three databases. Main results: The best-performing approach (AUROC = 0.77, Accuracy = 0.72, F1-score = 0.64) was found by performing both transfer learning steps, using the pre-trained arrhythmia DNN, the artificial data, and the real PTSD-related ECG data. Removing the artificial data from training led to the largest drop in performance. Removing the arrhythmia data from training led to a modest but significant drop in performance. The final model showed no significant drop in performance on the artificial data, indicating no overfitting. Significance: In healthcare, it is common to have only a small collection of high-quality data and labels, or a larger database with much lower-quality (and less relevant) labels. The paradigm presented here, involving model-based performance boosting, provides a solution through transfer learning on a large, realistic artificial database and a partially relevant real database.
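
The abstract does not describe the artificial ECG model beyond noting that it was previously validated. The sketch below is an illustrative stand-in only. Each beat is a sum of Gaussian kernels over cardiac phase; the P, Q, R, S, and T parameters in `WAVES` are hypothetical values. T-wave alternans is produced by raising and lowering the T-wave amplitude on alternate beats. Breathing modulation and beat-to-beat heart-rate variability, both varied in the study, are not modeled here.

```python
import numpy as np

# Hypothetical wave parameters: (centre in radians of cardiac phase, width, amplitude).
WAVES = {
    "P": (-np.pi / 3, 0.25, 0.12),
    "Q": (-np.pi / 12, 0.1, -0.15),
    "R": (0.0, 0.1, 1.0),
    "S": (np.pi / 12, 0.1, -0.25),
    "T": (np.pi / 2, 0.4, 0.3),
}

def synthetic_ecg(n_beats, heart_rate_bpm=70.0, fs=250, twa_amplitude=0.0):
    """Concatenate Gaussian-kernel beats at a constant heart rate, adding
    +twa_amplitude to the T wave on even beats and -twa_amplitude on odd
    beats (an ABAB alternans pattern)."""
    samples_per_beat = int(round(fs * 60.0 / heart_rate_bpm))
    theta = np.linspace(-np.pi, np.pi, samples_per_beat, endpoint=False)
    beats = []
    for k in range(n_beats):
        beat = np.zeros_like(theta)
        for name, (centre, width, amp) in WAVES.items():
            if name == "T":
                amp = amp + (twa_amplitude if k % 2 == 0 else -twa_amplitude)
            beat += amp * np.exp(-((theta - centre) ** 2) / (2 * width ** 2))
        beats.append(beat)
    return np.concatenate(beats)
```

Sweeping `heart_rate_bpm` and `twa_amplitude`, and labelling records by whether `twa_amplitude` exceeds some threshold, gives a crude version of the labelled artificial pre-training set described above.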

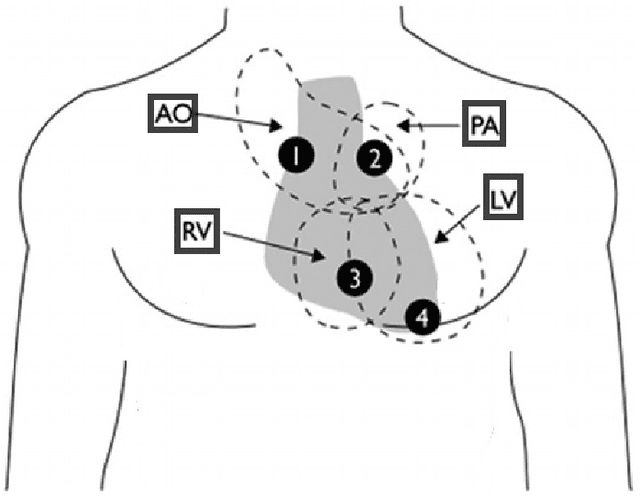

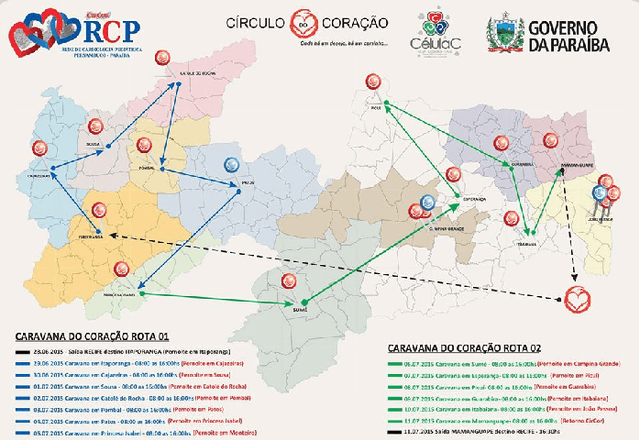

The CirCor DigiScope Dataset: From Murmur Detection to Murmur Classification

Aug 02, 2021

Abstract: Cardiac auscultation is one of the most cost-effective techniques used to detect and identify many heart conditions. Computer-assisted decision systems based on auscultation can support physicians in their decisions. Unfortunately, the application of such systems in clinical trials is still minimal, since most of them only aim to detect the presence of extra or abnormal waves in the phonocardiogram signal. This is mainly due to the lack of large publicly available datasets that contain a more detailed description of such abnormal waves (e.g., cardiac murmurs). As a result, current machine learning algorithms are unable to classify such waves. To pave the way to more effective research on healthcare recommendation systems based on auscultation, our team has prepared the largest pediatric heart sound dataset currently available. A total of 5282 recordings have been collected from the four main auscultation locations of 1568 patients, and in the process, 215780 heart sounds have been manually annotated. Furthermore, and for the first time, each cardiac murmur has been manually annotated by an expert annotator according to its timing, shape, pitch, grading, and quality. In addition, the auscultation locations where the murmur is present were identified, as well as the auscultation location where the murmur is detected most intensely.

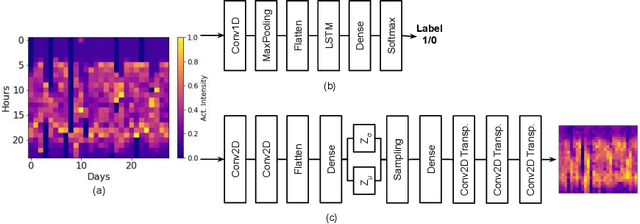

Using Convolutional Variational Autoencoders to Predict Post-Trauma Health Outcomes from Actigraphy Data

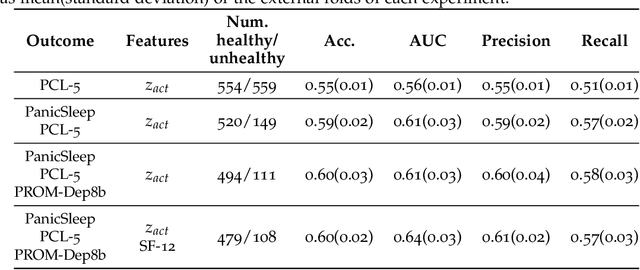

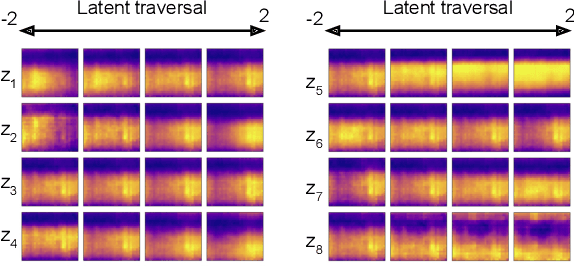

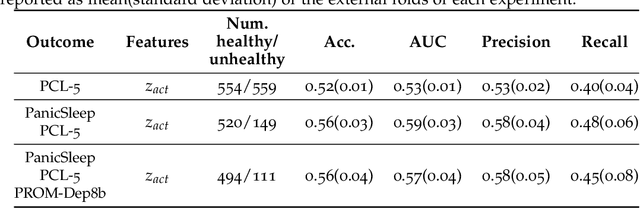

Nov 20, 2020

Abstract: Depression and post-traumatic stress disorder (PTSD) are psychiatric conditions commonly associated with experiencing a traumatic event. Estimating mental health status through non-invasive techniques such as activity-based algorithms can help to identify successful early interventions. In this work, we used locomotor activity captured from 1113 individuals who wore a research-grade smartwatch after the traumatic event. A convolutional variational autoencoder (VAE) architecture was used for unsupervised feature extraction from four weeks of actigraphy data. Using the VAE latent variables and the participants' pre-trauma physical health status as features, a logistic regression classifier achieved an area under the receiver operating characteristic curve (AUC) of 0.64 in estimating mental health outcomes. The results indicate that the VAE model is a promising approach to actigraphy data analysis for mental health outcomes in long-term studies.
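
The abstract does not detail the convolutional encoder or decoder, so the sketch below shows only two generic VAE pieces, written in NumPy:

- the reparameterization step that draws latent samples;
- the KL term that pulls the latent posterior toward a standard normal prior.

`classifier_features` is a hypothetical helper. It assumes the latent posterior means are concatenated with the pre-trauma health variables before logistic regression. The abstract confirms only that latent variables and pre-trauma physical health status were used as features.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I).

    In an autodiff framework this form lets gradients flow through mu and
    log_var; in NumPy it only draws the sample.
    """
    eps = rng.standard_normal(np.shape(mu))
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)

def classifier_features(latent_mu, pre_trauma_health):
    """Hypothetical feature assembly: posterior means plus health covariates."""
    return np.column_stack([latent_mu, pre_trauma_health])
```

Using posterior means rather than random samples as classifier inputs keeps the features deterministic for each participant.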

Automated Polysomnography Analysis for Detection of Non-Apneic and Non-Hypopneic Arousals using Feature Engineering and a Bidirectional LSTM Network

Sep 06, 2019

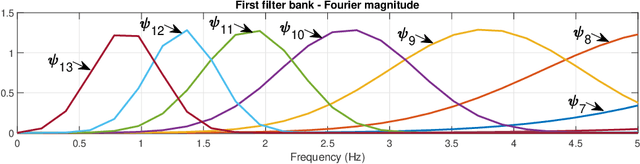

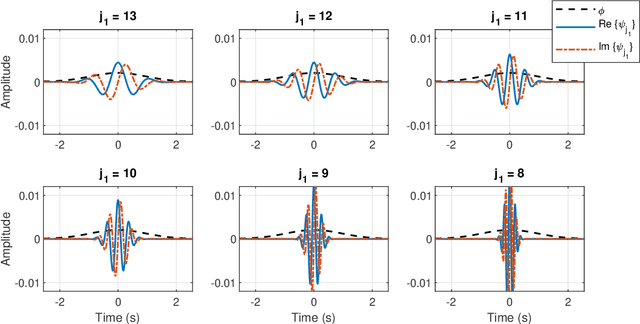

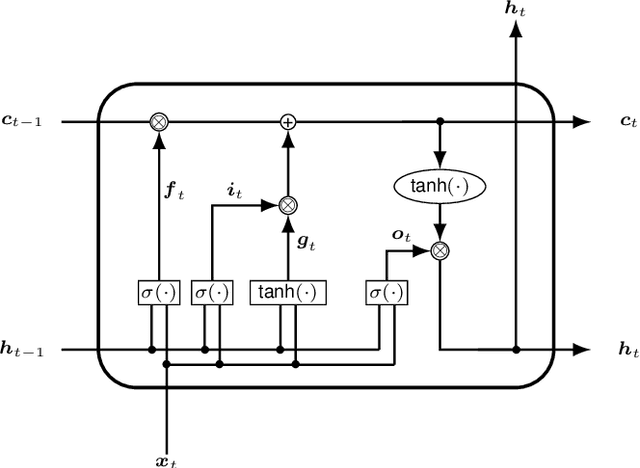

Abstract: Objective: The aim of this study is to develop an automated classification algorithm for polysomnography (PSG) recordings to detect non-apneic and non-hypopneic arousals. Our particular focus is on detecting respiratory effort-related arousals (RERAs), which are very subtle respiratory events that do not meet the criteria for apnea or hypopnea and are more challenging to detect. Methods: The proposed algorithm is based on a bidirectional long short-term memory (BiLSTM) classifier and 465 multi-domain features extracted from multimodal clinical time series. The features consist of a set of physiology-inspired features (n = 75), obtained through multiple steps of feature selection and expert analysis, and a set of physiology-agnostic features (n = 390), derived from the scattering transform. Results: The proposed algorithm is validated on the 2018 PhysioNet Challenge dataset. The overall performance in terms of the area under the precision-recall curve (AUPRC) is 0.50 on the hidden test dataset. This result is tied for the second-best score during the follow-up and official phases of the 2018 PhysioNet Challenge. Conclusions: The results demonstrate that it is possible to automatically detect subtle non-apneic/non-hypopneic arousal events from PSG recordings. Significance: Automatic detection of subtle respiratory events such as RERAs, together with other non-apneic/non-hypopneic arousals, will enable detailed annotation of large PSG databases. This contributes to a better retrospective analysis of sleep data, which may also improve the quality of treatment.
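
AUPRC rewards ranking the rare arousal samples ahead of the far more numerous non-arousal samples. Below is a minimal sketch of the step-wise (average-precision) estimate. It follows the generic definition; it is not the 2018 Challenge's official scoring code, whose aggregation across records may differ.

```python
import numpy as np

def average_precision(labels, scores):
    """Step-wise area under the precision-recall curve.

    Precision is averaged over the ranks at which each positive is retrieved.
    Tied scores are broken by input order, which can differ slightly from
    implementations that group ties.
    """
    labels = np.asarray(labels, dtype=bool)
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    hits = labels[order]
    n_pos = hits.sum()
    if n_pos == 0:
        return float("nan")
    # Precision after each rank k = (positives in top k) / k.
    precision = np.cumsum(hits) / np.arange(1, hits.size + 1)
    # Each positive adds a recall step of 1 / n_pos, weighted by precision there.
    return float(np.sum(precision[hits]) / n_pos)
```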

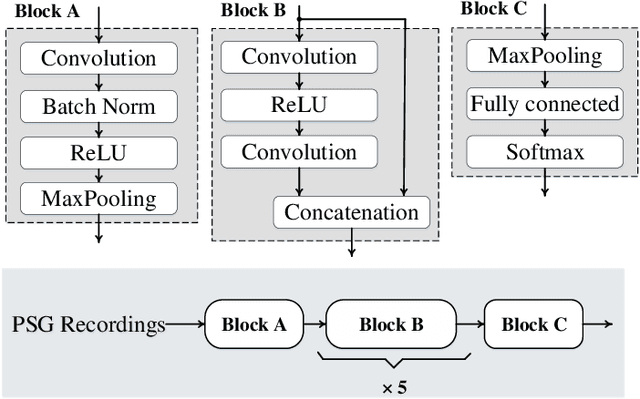

1D Convolutional Neural Network Models for Sleep Arousal Detection

Mar 01, 2019

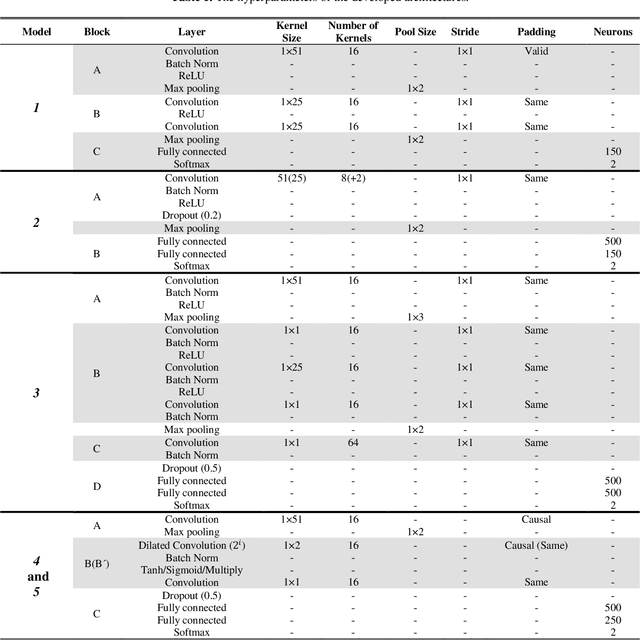

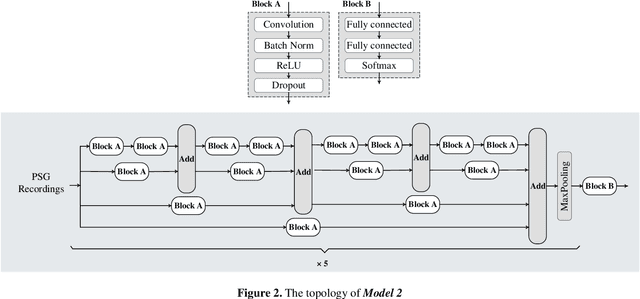

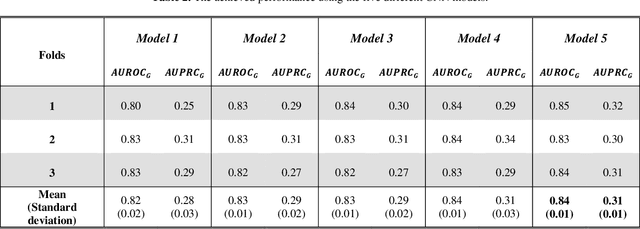

Abstract: Sleep arousals transition the depth of sleep to a more superficial stage. The occurrence of such events is often considered a protective mechanism that alerts the body to harmful stimuli. Thus, accurate sleep arousal detection can lead to an enhanced understanding of the underlying causes and inform the assessment of sleep quality. Previous studies and guidelines have suggested that sleep arousals are linked mainly to abrupt frequency shifts in EEG signals, but the proposed rules have been shown to be insufficient for a comprehensive characterization of arousals. This study investigates the application of five recent convolutional neural networks (CNNs) to sleep arousal detection and performs comparative evaluations to determine the best model for this task. The investigated state-of-the-art CNN models were originally designed for image or speech processing. A detailed set of evaluations is performed on the benchmark dataset provided by the PhysioNet/Computing in Cardiology Challenge 2018, and the results show that the best 1D CNN model achieved averages of 0.31 for the area under the precision-recall curve and 0.84 for the area under the ROC curve.
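
The two reported metrics respond differently to class imbalance. AUROC can remain high when positives are rare, while AUPRC falls, which is consistent with the gap between 0.84 and 0.31. Below is a minimal AUROC sketch using the Mann-Whitney interpretation: the probability that a random positive sample scores higher than a random negative one. It is a generic definition, not the Challenge's scoring code.

```python
import numpy as np

def auroc(labels, scores):
    """AUROC = P(score of random positive > score of random negative),
    counting ties as one half (the Mann-Whitney U statistic, normalized)."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        return float("nan")
    # All positive/negative pairs: O(n_pos * n_neg) memory, so this is only
    # practical for small inputs, not sample-level scoring of full PSGs.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (pos.size * neg.size))
```

For long recordings, a rank-based formulation of the same statistic avoids building the pairwise comparison matrix.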

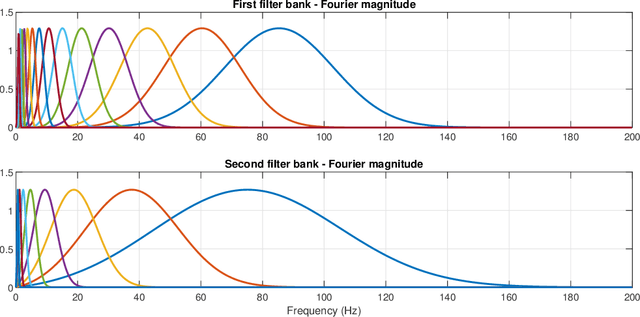

Kalman-based Spectro-Temporal ECG Analysis using Deep Convolutional Networks for Atrial Fibrillation Detection

Dec 12, 2018

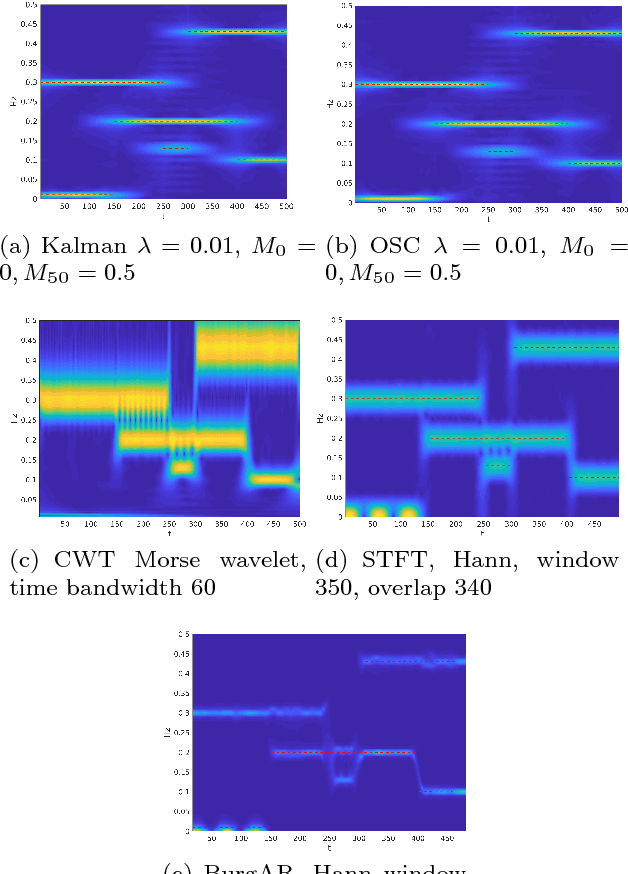

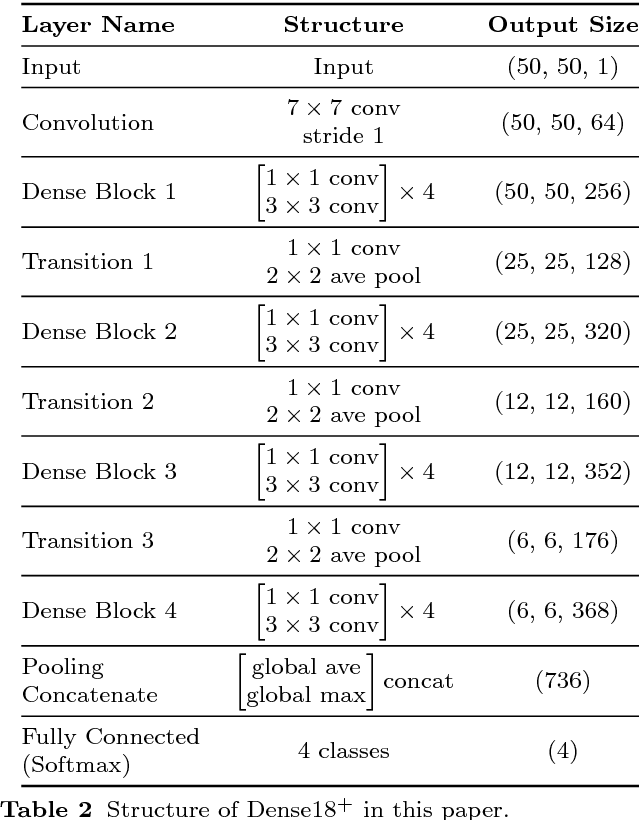

Abstract: In this article, we propose a novel ECG classification framework for atrial fibrillation (AF) detection using a spectro-temporal representation (i.e., a time-varying spectrum) and deep convolutional networks. In the first step, we use a Bayesian spectro-temporal representation based on the estimation of time-varying Fourier series coefficients using a Kalman filter and smoother. Next, we derive an alternative model based on a stochastic oscillator differential equation to accelerate the estimation of the spectro-temporal representation in lengthy signals. Finally, after comparative evaluations of different convolutional architectures, we propose an efficient deep convolutional neural network to classify the 2D spectro-temporal ECG data. The ECG spectro-temporal data are classified into four classes: AF, non-AF normal rhythm (Normal), non-AF abnormal rhythm (Other), and noisy segments (Noisy). The performance of the proposed methods is evaluated and scored on the PhysioNet/Computing in Cardiology (CinC) 2017 dataset. The experimental results show that the proposed method achieves an overall F1 score of 80.2%, which is in line with state-of-the-art algorithms.
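
Below is a minimal NumPy sketch of the first step, under these assumptions:

- the Fourier coefficients follow random-walk dynamics;
- the frequency grid `freqs` is fixed;
- the noise variances `q` and `r` are hypothetical values.

It is a generic Kalman filter followed by a Rauch-Tung-Striebel smoother, not the authors' implementation, and it omits the faster stochastic-oscillator model. The returned amplitude array is the kind of 2D time-frequency image that a CNN would then classify.

```python
import numpy as np

def kalman_fourier_spectrogram(y, fs, freqs, q=1e-4, r=1e-2):
    """Track time-varying Fourier coefficients [a_1, b_1, ..., a_K, b_K] with
    y_t = sum_k a_k cos(2 pi f_k t) + b_k sin(2 pi f_k t) + noise, where the
    coefficients follow a random walk with per-sample variance q.
    Returns a (len(freqs), len(y)) array of amplitudes sqrt(a_k^2 + b_k^2)."""
    n, d = len(y), 2 * len(freqs)
    t = np.arange(n) / fs
    omega = 2 * np.pi * np.asarray(freqs, dtype=float)
    # Row k of H is the observation vector h_k (interleaved cos/sin terms).
    H = np.empty((n, d))
    H[:, 0::2] = np.cos(np.outer(t, omega))
    H[:, 1::2] = np.sin(np.outer(t, omega))

    m, P, Q = np.zeros(d), np.eye(d), q * np.eye(d)
    m_pred, P_pred = np.empty((n, d)), np.empty((n, d, d))
    m_filt, P_filt = np.empty((n, d)), np.empty((n, d, d))
    for k in range(n):
        # Predict: the transition matrix is the identity (random walk).
        P = P + Q
        m_pred[k], P_pred[k] = m, P
        # Update with the scalar observation y[k].
        h = H[k]
        s = h @ P @ h + r
        gain = P @ h / s
        m = m + gain * (y[k] - h @ m)
        P = P - np.outer(gain, h @ P)
        m_filt[k], P_filt[k] = m, P

    # Rauch-Tung-Striebel smoother, run backwards in time.
    m_smooth, P_smooth = m_filt.copy(), P_filt.copy()
    for k in range(n - 2, -1, -1):
        # Smoother gain G = P_filt[k] @ inv(P_pred[k + 1]) (both symmetric).
        G = np.linalg.solve(P_pred[k + 1], P_filt[k]).T
        m_smooth[k] = m_filt[k] + G @ (m_smooth[k + 1] - m_pred[k + 1])
        P_smooth[k] = P_filt[k] + G @ (P_smooth[k + 1] - P_pred[k + 1]) @ G.T

    a, b = m_smooth[:, 0::2], m_smooth[:, 1::2]
    return np.sqrt(a ** 2 + b ** 2).T
```

Storing one covariance matrix per sample makes memory grow as `len(y) * (2 * len(freqs)) ** 2`. Together with the per-sample matrix operations, this is one reason a faster formulation matters for lengthy signals.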
