Elisabete Aramendi

Robust Segmentation of CPR-Induced Capnogram Using U-net: Overcoming Challenges with Deep Learning

Oct 28, 2024

Abstract: Objective: The accurate segmentation of capnograms during cardiopulmonary resuscitation (CPR) is essential for effective patient monitoring and advanced airway management. This study aims to develop a robust algorithm using a U-net architecture to segment capnograms into inhalation and non-inhalation phases, and to demonstrate its superiority over state-of-the-art (SoA) methods in the presence of CPR-induced artifacts. Materials and methods: A total of 24354 one-minute segments extracted from 1587 patients were used to train and evaluate the model. The proposed U-net architecture was tested using patient-wise 10-fold cross-validation. A set of five features was extracted for clustering analysis to evaluate the algorithm's performance across different signal characteristics and contexts. Performance was evaluated at both the segmentation level and the ventilation level, the latter including ventilation rate and end-tidal CO$_2$ values. Results: The proposed U-net based algorithm achieved an F1-score of 98% for segmentation and 96% for ventilation detection, outperforming existing SoA methods by 4 points. The root mean square errors for end-tidal CO$_2$ and ventilation rate were 1.9 mmHg and 1.1 breaths per minute, respectively. Detailed performance metrics highlighted the algorithm's robustness against CPR-induced interference and low-amplitude signals. Clustering analysis further demonstrated consistent performance across various signal characteristics. Conclusion: The proposed U-net based segmentation algorithm improves the accuracy of capnogram analysis during CPR. Its enhanced performance in detecting inhalation phases and ventilation events offers a reliable tool for clinical applications, potentially improving patient outcomes during cardiac arrest.
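To make the evaluation protocol concrete, here is a minimal Python sketch of a patient-wise fold assignment and of the root mean square error. It is an illustration under stated assumptions, not the authors' code: the function names `patient_wise_folds` and `rmse`, the round-robin assignment of shuffled patients to folds, and the seed are all hypothetical. The point it shows is that every segment from a given patient lands in the same fold, so no patient contributes to both training and test data.

```python
import math
import random
from collections import defaultdict


def patient_wise_folds(patient_ids, n_folds=10, seed=0):
    """Group segment indices into folds so that no patient spans two folds.

    patient_ids[i] is the (hypothetical) patient identifier of segment i.
    """
    unique_patients = sorted(set(patient_ids))
    rng = random.Random(seed)
    rng.shuffle(unique_patients)
    # Round-robin assignment: an assumption, chosen only for simplicity.
    fold_of_patient = {pid: i % n_folds for i, pid in enumerate(unique_patients)}
    folds = defaultdict(list)
    for index, pid in enumerate(patient_ids):
        folds[fold_of_patient[pid]].append(index)
    return [folds[k] for k in range(n_folds)]


def rmse(estimates, references):
    """Root mean square error between paired estimates and reference values."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(e - r) ** 2 for e, r in zip(estimates, references)]
    return math.sqrt(sum(squared) / len(squared))
```

Each fold serves once as the test set while the remaining nine are used for training. The same `rmse` helper applies to either quantity reported in the abstract (end-tidal CO$_2$ in mmHg or ventilation rate in breaths per minute), given paired estimated and annotated values.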

Beyond Heart Murmur Detection: Automatic Murmur Grading from Phonocardiogram

Sep 27, 2022

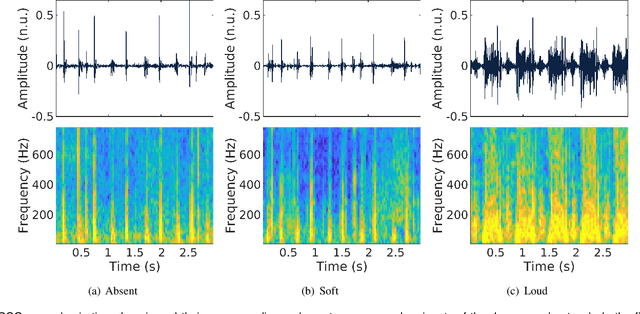

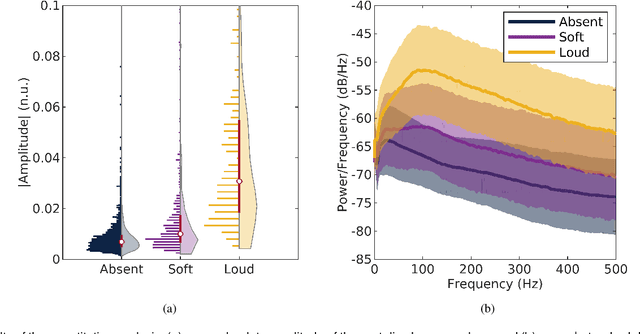

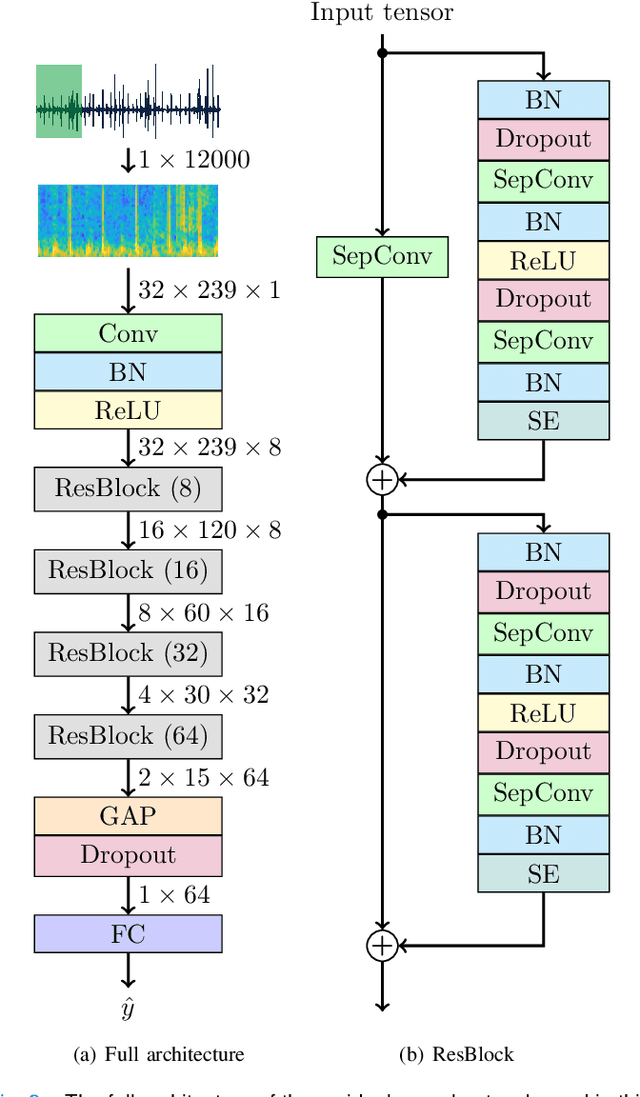

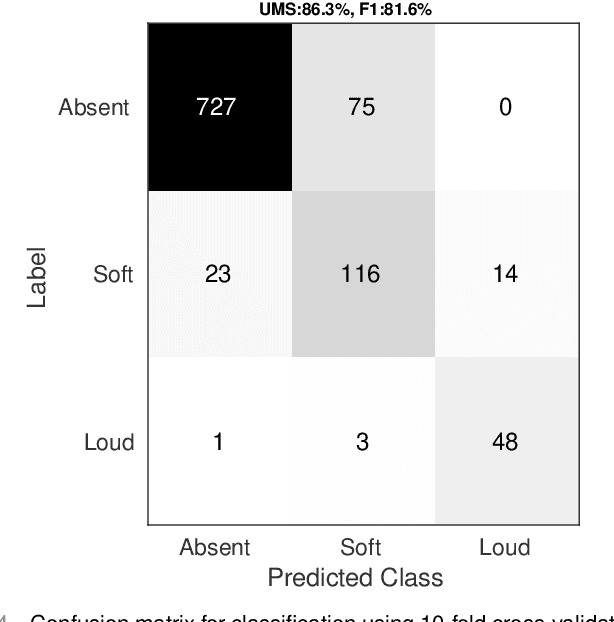

Abstract: Objective: Murmurs are abnormal heart sounds, identified by experts through cardiac auscultation. The murmur grade, a quantitative measure of the murmur intensity, is strongly correlated with the patient's clinical condition. This work aims to estimate each patient's murmur grade (i.e., absent, soft, loud) from phonocardiograms (PCGs) recorded at multiple auscultation locations in a large population of pediatric patients from a low-resource rural area. Methods: The Mel spectrogram representation of each PCG recording is given to an ensemble of 15 convolutional residual neural networks with channel-wise attention mechanisms to classify each PCG recording. The final murmur grade for each patient is derived from the proposed decision rule, considering all estimated labels for the available recordings. The proposed method is cross-validated on a dataset consisting of 3456 PCG recordings from 1007 patients using stratified ten-fold cross-validation. Additionally, the method was tested on a hidden test set comprising 1538 PCG recordings from 442 patients. Results: The overall cross-validation performances for patient-level murmur grading are 86.3% and 81.6% in terms of the unweighted average of sensitivities and F1-scores, respectively. The sensitivities (and F1-scores) for absent, soft, and loud murmurs are 90.7% (93.6%), 75.8% (66.8%), and 92.3% (84.2%), respectively. On the test set, the algorithm achieves an unweighted average of sensitivities of 80.4% and an F1-score of 75.8%. Conclusions: This study provides a potential approach for algorithmic pre-screening in low-resource settings with relatively high expert screening costs. Significance: The proposed method represents a significant step beyond the detection of murmurs, providing a characterization of intensity that may enable an enhanced classification of clinical outcomes.
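The unweighted average of sensitivities weights each class equally regardless of how many patients it contains; for the cross-validation figures above, (90.7 + 75.8 + 92.3) / 3 ≈ 86.3%. The following Python sketch shows how such per-class and averaged metrics could be computed from patient-level labels. The function names and the toy labels in the usage note are hypothetical, and the sketch is not the authors' evaluation code.

```python
def per_class_metrics(y_true, y_pred, classes):
    """Return {class: (sensitivity, f1)} computed one-vs-rest for each class."""
    metrics = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        # Guard against empty classes; returning 0.0 there is an assumption.
        sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
        f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
        metrics[c] = (sensitivity, f1)
    return metrics


def unweighted_averages(metrics):
    """Average sensitivity and F1 over classes, each class counted once."""
    n = len(metrics)
    mean_sensitivity = sum(s for s, _ in metrics.values()) / n
    mean_f1 = sum(f for _, f in metrics.values()) / n
    return mean_sensitivity, mean_f1
```

As a usage example, calling `per_class_metrics(y_true, y_pred, ["absent", "soft", "loud"])` on two equal-length lists of grade labels and passing the result to `unweighted_averages` yields the two summary numbers. Because the rarer grades count as much as the dominant "absent" class, this averaging does not reward a classifier that simply predicts the majority grade.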
