Aditya Desai

Let the Barbarians In: How AI Can Accelerate Systems Performance Research

Dec 22, 2025

Abstract:Artificial Intelligence (AI) is beginning to transform the research process by automating the discovery of new solutions. This shift depends on the availability of reliable verifiers, which AI-driven approaches require to validate candidate solutions. Research focused on improving systems performance is especially well-suited to this paradigm because system performance problems naturally admit such verifiers: candidates can be implemented in real systems or simulators and evaluated against predefined workloads. We term this iterative cycle of generation, evaluation, and refinement AI-Driven Research for Systems (ADRS). Using several open-source ADRS instances (i.e., OpenEvolve, GEPA, and ShinkaEvolve), we demonstrate across ten case studies (e.g., multi-region cloud scheduling, mixture-of-experts load balancing, LLM-based SQL, transaction scheduling) that ADRS-generated solutions can match or even outperform human state-of-the-art designs. Based on these findings, we outline best practices (e.g., level of prompt specification, amount of feedback, robust evaluation) for effectively using ADRS, and we discuss future research directions and their implications. Although we do not yet have a universal recipe for applying ADRS across all of systems research, we hope our preliminary findings, together with the challenges we identify, offer meaningful guidance for future work as researcher effort shifts increasingly toward problem formulation and strategic oversight. Note: This paper is an extension of our prior work [14]. It adds extensive evaluation across multiple ADRS frameworks and provides deeper analysis and insights into best practices.

The Danger of Overthinking: Examining the Reasoning-Action Dilemma in Agentic Tasks

Feb 12, 2025

Abstract:Large Reasoning Models (LRMs) represent a breakthrough in AI problem-solving capabilities, but their effectiveness in interactive environments can be limited. This paper introduces and analyzes overthinking in LRMs, a phenomenon where models favor extended internal reasoning chains over environmental interaction. Through experiments on software engineering tasks using SWE Bench Verified, we observe three recurring patterns: Analysis Paralysis, Rogue Actions, and Premature Disengagement. We propose a framework to study these behaviors, which correlates with human expert assessments, and analyze 4018 trajectories. We observe that higher overthinking scores correlate with decreased performance, with reasoning models exhibiting stronger tendencies toward overthinking compared to non-reasoning models. Our analysis reveals that simple efforts to mitigate overthinking in agentic environments, such as selecting the solution with the lower overthinking score, can improve model performance by almost 30% while reducing computational costs by 43%. These results suggest that mitigating overthinking has strong practical implications. We suggest that overthinking tendencies could be mitigated by leveraging native function-calling capabilities and selective reinforcement learning. We also open-source our evaluation framework and dataset to facilitate research in this direction at https://github.com/AlexCuadron/Overthinking.

HashAttention: Semantic Sparsity for Faster Inference

Dec 19, 2024

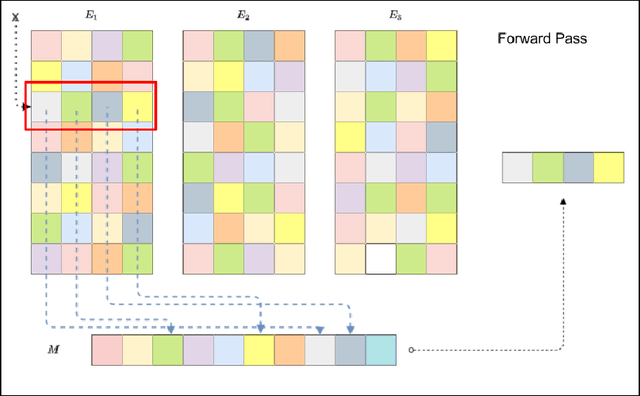

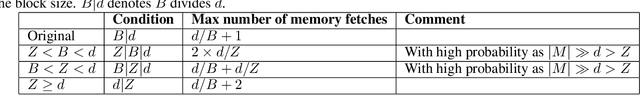

Abstract:Utilizing longer contexts is increasingly essential to power better AI systems. However, the cost of attending to long contexts is high due to the involved softmax computation. While the scaled dot-product attention (SDPA) exhibits token sparsity, with only a few pivotal tokens significantly contributing to attention, leveraging this sparsity effectively remains an open challenge. Previous methods either suffer from model degradation or require considerable additional resources. We propose HashAttention, a principled approach that casts pivotal token identification as a recommendation problem. Given a query, HashAttention encodes keys and queries in Hamming space, capturing the required semantic similarity using learned mapping functions. HashAttention efficiently identifies pivotal tokens for a given query in this Hamming space using bitwise operations, and only these pivotal tokens are used for attention computation, significantly improving overall attention efficiency. HashAttention can reduce the number of tokens used by a factor of $32\times$ for the Llama-3.1-8B model with LongBench, keeping average quality loss within 0.6 points, while using only 32 bits of auxiliary memory per token. At $32\times$ sparsity, HashAttention is $3{-}6\times$ faster than LightLLM and $2.5{-}4.5\times$ faster than gpt-fast on an Nvidia L4 GPU.
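The selection step described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: random hyperplane projections stand in for the learned mapping functions, and every name here (`make_projection`, `signature`, `select_pivotal`, `sparse_attention`) is hypothetical. It only shows how 32-bit signatures, XOR plus popcount, and attention over a selected subset fit together.

```python
import math
import random

BITS = 32  # signature width; the abstract reports 32 bits per token


def make_projection(dim, bits=BITS, seed=0):
    """Random hyperplanes. ASSUMPTION: a stand-in for the learned mappings."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(bits)]


def signature(vec, planes):
    """Pack the sign pattern of the projections into a single integer."""
    sig = 0
    for i, plane in enumerate(planes):
        if sum(p * v for p, v in zip(plane, vec)) >= 0.0:
            sig |= 1 << i
    return sig


def hamming(a, b):
    """Hamming distance between two signatures via XOR and popcount."""
    return bin(a ^ b).count("1")


def select_pivotal(query_sig, key_sigs, k):
    """Indices of the k keys whose signatures are closest to the query's."""
    order = sorted(range(len(key_sigs)),
                   key=lambda i: hamming(query_sig, key_sigs[i]))
    return sorted(order[:k])


def sparse_attention(query, keys, values, idx):
    """Scaled dot-product attention restricted to the token indices in idx."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * x for q, x in zip(query, keys[i])) for i in idx]
    top = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * values[i][d] for w, i in zip(weights, idx)) / total
            for d in range(dim)]
```

In use, key signatures would be computed once when a token enters the cache, so each query costs one signature computation plus a scan of integer XORs before the (much smaller) softmax.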

IDentity with Locality: An ideal hash for gene sequence search

Jun 21, 2024

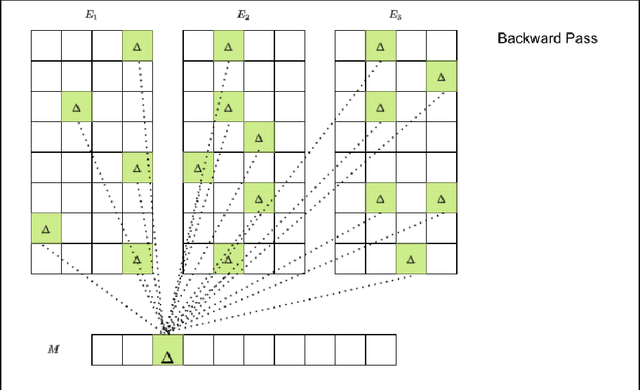

Abstract:Gene sequence search is a fundamental operation in computational genomics. Due to the petabyte scale of genome archives, most gene search systems now use hashing-based data structures such as Bloom Filters (BF). State-of-the-art systems such as Compact bit-slicing signature index (COBS) and Repeated And Merged Bloom filters (RAMBO) use BFs with Random Hash (RH) functions for gene representation and identification. The standard recipe is to cast the gene search problem as a sequence of membership problems, testing whether each subsequent gene substring (called a kmer) of a query Q is present in the set of kmers of the entire gene database D. We observe that RH functions, which are crucial to the memory and computational advantages of BFs, are also detrimental to the system performance of gene-search systems. While subsequent kmers being queried are likely very similar, RH, oblivious to any similarity, uniformly distributes the kmers to different parts of a potentially large BF, thus triggering excessive cache misses and causing system slowdown. We propose a novel hash function family called the Identity with Locality (IDL) hash family, which co-locates keys that are close in input space without causing collisions. This approach ensures both cache locality and key preservation. IDL functions can be a drop-in replacement for RH functions and help improve the performance of information retrieval systems. We give a simple but practical construction of IDL function families and show that replacing RH with IDL functions reduces cache misses by a factor of 5x, thus improving query and indexing times of SOTA methods such as COBS and RAMBO by factors of up to 2x without compromising their quality. We also provide a theoretical analysis of the false positive rate of BFs with IDL functions. Our work is the first study that bridges Locality Sensitive Hash (LSH) and RH to obtain cache efficiency.
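To make the locality idea concrete, here is a minimal sketch of one plausible construction; it is an illustration under stated assumptions, not necessarily the construction given in the paper. A locality-sensitive part (the minimum hash over a kmer's sub-kmers) picks a cache-line-sized block, so overlapping kmers tend to land in the same block, and an ordinary hash of the whole kmer picks the bit inside that block, so distinct kmers still get distinct positions with high probability. The names `idl_position` and `BlockedBloom`, the single hash function per key, and the parameter defaults are all hypothetical.

```python
import hashlib


def h(data, salt):
    """64-bit hash of a string; the salt selects an independent hash function."""
    digest = hashlib.blake2b(data.encode(), digest_size=8, salt=salt).digest()
    return int.from_bytes(digest, "big")


def kmers(seq, k):
    """All length-k substrings of seq, in order."""
    return [seq[i:i + k] for i in range(len(seq) - k + 1)]


def idl_position(kmer, t, num_blocks, block_bits):
    """Bit position for a kmer: locality-aware block, random offset within it."""
    # Locality part: overlapping kmers share most sub-kmers of length t, so
    # they often share the minimum sub-kmer hash and hence the block.
    anchor = min(h(kmer[i:i + t], b"block") for i in range(len(kmer) - t + 1))
    block = anchor % num_blocks
    # Identity part: a hash of the full kmer separates keys inside the block.
    offset = h(kmer, b"offset") % block_bits
    return block * block_bits + offset


class BlockedBloom:
    """Bloom-filter-style bit array using one IDL-like hash (ASSUMPTION)."""

    def __init__(self, num_blocks, block_bits=512, t=8):
        self.num_blocks, self.block_bits, self.t = num_blocks, block_bits, t
        self.bits = bytearray(num_blocks * block_bits // 8)

    def _pos(self, kmer):
        return idl_position(kmer, self.t, self.num_blocks, self.block_bits)

    def add(self, kmer):
        pos = self._pos(kmer)
        self.bits[pos >> 3] |= 1 << (pos & 7)

    def __contains__(self, kmer):
        pos = self._pos(kmer)
        return bool(self.bits[pos >> 3] & (1 << (pos & 7)))
```

With 512-bit blocks, each block matches a typical 64-byte cache line, so a run of consecutive query kmers that share a block touches one line instead of one line per kmer.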

Heterogeneous federated collaborative filtering using FAIR: Federated Averaging in Random Subspaces

Nov 03, 2023

Abstract:Recommendation systems (RS) for items (e.g., movies, books) and ads are widely used to tailor content to users on various internet platforms. Traditionally, recommendation models are trained on a central server. However, due to rising concerns for data privacy and regulations like the GDPR, federated learning is an increasingly popular paradigm in which data never leaves the client device. Applying federated learning to recommendation models is non-trivial due to large embedding tables, which often exceed the memory constraints of most user devices. To include data from all devices in federated learning, we must enable collective training of embedding tables on devices with heterogeneous memory capacities. Current solutions to heterogeneous federated learning can only accommodate a small range of capacities and thus limit the number of devices that can participate in training. We present Federated Averaging in Random subspaces (FAIR), which allows arbitrary compression of embedding tables based on device capacity and ensures the participation of all devices in training. FAIR uses what we call consistent and collapsible subspaces defined by hashing-based random projections to jointly train large embedding tables while using varying amounts of compression on user devices. We evaluate FAIR on Neural Collaborative Filtering tasks with multiple datasets and verify that FAIR can gather and share information from a wide range of devices with varying capacities, allowing for seamless collaboration. We prove the convergence of FAIR in the homogeneous setting with non-i.i.d. data distribution. Our code is open source at https://github.com/apd10/FLCF

In defense of parameter sharing for model-compression

Oct 17, 2023

Abstract:When considering a model architecture, there are several ways to reduce its memory footprint. Historically, popular approaches included selecting smaller architectures and creating sparse networks through pruning. More recently, randomized parameter-sharing (RPS) methods have gained traction for model compression at the start of training. In this paper, we comprehensively assess the trade-off between memory and accuracy across RPS, pruning techniques, and building smaller models. Our findings demonstrate that RPS, which is both data- and model-agnostic, consistently matches or outperforms smaller models and all moderately informed pruning strategies, such as MAG, SNIP, SYNFLOW, and GRASP, across the entire compression range. This advantage becomes particularly pronounced in higher compression scenarios. Notably, even when compared to highly informed pruning techniques like Lottery Ticket Rewinding (LTR), RPS exhibits superior performance in high compression settings. This points to an inherent capacity advantage that RPS enjoys over sparse models. Theoretically, we establish RPS as a superior technique in terms of memory-efficient representation when compared to pruning for linear models. This paper argues in favor of a paradigm shift towards RPS-based models. During our rigorous evaluation of RPS, we identified issues in the state-of-the-art RPS technique ROAST, specifically regarding stability (ROAST's sensitivity to initialization hyperparameters, often leading to divergence) and Pareto-continuity (ROAST's inability to recover the accuracy of the original model at zero compression). We provably address both of these issues. We refer to the modified RPS, which incorporates our improvements, as STABLE-RPS.

Scissorhands: Exploiting the Persistence of Importance Hypothesis for LLM KV Cache Compression at Test Time

May 26, 2023

Abstract:Large language models (LLMs) have sparked a new wave of exciting AI applications. Hosting these models at scale requires significant memory resources. One crucial memory bottleneck for deployment stems from the context window. It is commonly recognized that model weights are memory hungry; however, the size of the key-value embeddings stored during the generation process (KV cache) can easily surpass the model size. The enormous size of the KV cache puts constraints on the inference batch size, which is crucial for high-throughput inference workloads. Inspired by an interesting observation of the attention scores, we hypothesize the persistence of importance: only pivotal tokens, which had a substantial influence at one step, will significantly influence future generations. Based on our empirical verification and theoretical analysis around this hypothesis, we propose Scissorhands, a system that maintains the memory usage of the KV cache at a fixed budget without finetuning the model. In essence, Scissorhands manages the KV cache by storing the pivotal tokens with a higher probability. We validate that Scissorhands reduces the inference memory usage of the KV cache by up to 5X without compromising model quality. We further demonstrate that Scissorhands can be combined with 4-bit quantization, traditionally used to compress model weights, to achieve up to 20X compression.

The trade-offs of model size in large recommendation models : A 10000 $\times$ compressed criteo-tb DLRM model

Jul 21, 2022

Abstract:Embedding tables dominate industrial-scale recommendation model sizes, using up to terabytes of memory. A popular MLPerf benchmark, and the largest publicly available one on recommendation data, is a Deep Learning Recommendation Model (DLRM) trained on a terabyte of click-through data. It contains 100GB of embedding memory (25+ billion parameters). DLRMs, due to their sheer size and the associated volume of data, are difficult to train and to deploy for inference, and they face memory bottlenecks due to large embedding tables. This paper analyzes and extensively evaluates a generic parameter sharing setup (PSS) for compressing DLRM models. We show theoretical upper bounds on the learnable memory requirements for achieving $(1 \pm \epsilon)$ approximations to the embedding table. Our bounds indicate exponentially fewer parameters suffice for good accuracy. To this end, we demonstrate a PSS DLRM reaching 10000$\times$ compression on criteo-tb without losing quality. Such a compression, however, comes with a caveat. It requires 4.5$\times$ more iterations to reach the same saturation quality. The paper argues that this tradeoff needs more investigation as it might be significantly favorable. Leveraging the small size of the compressed model, we show a 4.3$\times$ improvement in training latency, leading to similar overall training times. Thus, in the tradeoff between the system advantage of a small DLRM model and slower convergence, we show that the scales are tipped towards having a smaller DLRM model, leading to faster inference, easier deployment, and similar training times.
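As a back-of-the-envelope check of the trade-off stated in this abstract, assume the 4.3$\times$ latency improvement applies uniformly to every training iteration (an assumption; the abstract does not say so explicitly). The relative wall-clock training time of the compressed model is then roughly

$$\frac{T_{\text{compressed}}}{T_{\text{original}}} \approx \frac{4.5}{4.3} \approx 1.05,$$

which is consistent with the claim of similar overall training times.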

Efficient model compression with Random Operation Access Specific Tile (ROAST) hashing

Jul 21, 2022

Abstract:Advancements in deep learning are often associated with increasing model sizes. The model size dramatically affects the deployment cost and latency of deep models. For instance, models like BERT cannot be deployed on edge devices and mobiles due to their sheer size. As a result, most advances in deep learning are yet to reach the edge. Model compression has received much-deserved attention in the literature across natural language processing, vision, and recommendation domains. This paper proposes a model-agnostic, cache-friendly model compression approach: Random Operation Access Specific Tile (ROAST) hashing. ROAST collapses the parameters by clubbing them through a lightweight mapping. Notably, while clubbing these parameters, ROAST utilizes cache hierarchies by aligning the memory access pattern with the parameter access pattern. ROAST is up to $\sim 25 \times$ faster to train and $\sim 50 \times$ faster to infer than the popular parameter sharing method HashedNet. Additionally, ROAST introduces global weight sharing, which is empirically and theoretically superior to local weight sharing in HashedNet, and can be of independent interest. With ROAST, we present the first compressed BERT, which is $100\times - 1000\times$ smaller but does not result in quality degradation. These compression levels on universal architectures like transformers are promising for the future of SOTA model deployment on resource-constrained devices like mobile and edge devices.

Random Offset Block Embedding Array (ROBE) for CriteoTB Benchmark MLPerf DLRM Model : 1000$\times$ Compression and 2.7$\times$ Faster Inference

Aug 04, 2021

Abstract:Deep learning for recommendation data is one of the most pervasive and challenging AI workloads in recent times. State-of-the-art recommendation models are among the largest models, rivalling the likes of GPT-3 and Switch Transformer. Challenges in deep learning recommendation models (DLRM) stem from learning dense embeddings for each of the categorical values. These embedding tables in industrial-scale models can be as large as hundreds of terabytes. Such large models lead to a plethora of engineering challenges, not to mention prohibitive communication overheads and slower training and inference times. Of these, slower inference time directly impacts user experience. Model compression for DLRM is gaining traction, and the community has recently shown impressive compression results. In this paper, we present Random Offset Block Embedding Array (ROBE) as a low-memory alternative to embedding tables which provides orders-of-magnitude reduction in memory usage while maintaining accuracy and boosting execution speed. ROBE is a simple, fundamental approach to improving both cache performance and the variance of randomized hashing, which could be of independent interest. We demonstrate that we can successfully train DLRM models with the same accuracy while using $1000 \times$ less memory. A $1000\times$ compressed model directly results in faster inference without any engineering. In particular, we show that we can train a DLRM model using a ROBE Array of size 100MB on a single GPU to achieve an AUC of 0.8025 or higher, as required by the official MLPerf CriteoTB benchmark DLRM model of 100GB, while achieving about $2.7\times$ (170%) improvement in inference throughput.
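A minimal sketch can illustrate how a single shared array can replace embedding tables through block-wise hashing. It is a simplified reading of the idea in this abstract, not the authors' code: the class `RobeArray`, its parameters, and the use of `blake2b` as the hash are all assumptions. Each embedding is split into blocks; each block is hashed to an offset in one circular array and read as a contiguous run, which is what keeps the accesses cache-friendly.

```python
import hashlib
import random


class RobeArray:
    """One shared circular weight array standing in for all embedding tables."""

    def __init__(self, size, dim, block, seed=0):
        if dim % block != 0:
            raise ValueError("dim must be a multiple of block")
        rng = random.Random(seed)
        self.size, self.dim, self.block = size, dim, block
        # Memory is fixed at `size` floats no matter how many rows are indexed.
        self.mem = [rng.uniform(-0.01, 0.01) for _ in range(size)]

    def _offset(self, table, row, chunk):
        """Hash (table, row, chunk) to a start position in the shared array."""
        key = f"{table}:{row}:{chunk}".encode()
        digest = hashlib.blake2b(key, digest_size=8).digest()
        return int.from_bytes(digest, "big") % self.size

    def lookup(self, table, row):
        """Assemble an embedding from dim // block contiguous blocks."""
        out = []
        for chunk in range(self.dim // self.block):
            start = self._offset(table, row, chunk)
            # Contiguous read with wrap-around at the end of the array.
            out.extend(self.mem[(start + j) % self.size]
                       for j in range(self.block))
        return out
```

For example, `RobeArray(size=1000, dim=16, block=4).lookup(0, 123456789)` returns a 16-dimensional vector for an arbitrary row id without allocating a per-row entry; a larger `block` means fewer hash computations and longer contiguous reads per lookup.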
