Abhijit Das

Department of Physics and NanoLund, Lund University, Sweden

DenVisCoM: Dense Vision Correspondence Mamba for Efficient and Real-time Optical Flow and Stereo Estimation

Feb 02, 2026

Abstract: In this work, we propose DenVisCoM, a novel Mamba block, together with a novel hybrid architecture tailored for accurate, real-time estimation of optical flow and disparity. Because these multi-view geometry and motion tasks are fundamentally related, we propose a unified architecture to tackle them jointly. Specifically, the hybrid architecture combines DenVisCoM with a Transformer-based attention block and simultaneously addresses real-time inference, memory footprint, and accuracy for the joint estimation of motion and 3D dense perception tasks. We extensively analyze the trade-off between accuracy and real-time processing on a large number of datasets. Our experimental results and analysis suggest that the proposed model can accurately estimate optical flow and disparity in real time. All models and associated code are available at https://github.com/vimstereo/DenVisCoM.

Continual-learning for Modelling Low-Resource Languages from Large Language Models

Jan 09, 2026

Abstract: Building a language model for a multilingual scenario poses several challenges, the foremost of which is catastrophic forgetting. For example, small language models (SLMs) built for low-resource languages by adapting large language models (LLMs) are prone to catastrophic forgetting. This work proposes a continual learning strategy that combines part-of-speech (POS)-based code-switching with a replay adapter strategy to mitigate catastrophic forgetting while training an SLM from an LLM. Experiments on vision-language tasks such as visual question answering, and on a language modelling task, demonstrate the success of the proposed architecture.

Fusion-SSAT: Unleashing the Potential of Self-supervised Auxiliary Task by Feature Fusion for Generalized Deepfake Detection

Jan 02, 2026

Abstract: In this work, we attempt to unleash the potential of self-supervised learning as an auxiliary task that can optimise the primary task of generalised deepfake detection. To explore this, we examine different combinations of training schemes for these tasks to find the most effective one. Our findings reveal that fusing the feature representation from self-supervised auxiliary tasks yields a powerful feature representation for the problem at hand. Such a representation combines what is unique to the self-supervised and primary tasks, achieving better performance on the primary task. We experimented on a large set of datasets, including DF40, FaceForensics++, Celeb-DF, DFD, FaceShifter, and UADFV, and our results show better generalizability in cross-dataset evaluation than current state-of-the-art detectors.

Investigating the Viability of Employing Multi-modal Large Language Models in the Context of Audio Deepfake Detection

Jan 02, 2026

Abstract: While Vision-Language Models (VLMs) and Multimodal Large Language Models (MLLMs) have shown strong generalisation in detecting image and video deepfakes, their use for audio deepfake detection remains largely unexplored. In this work, we explore the potential of MLLMs for audio deepfake detection. We combine audio inputs with a range of text prompts as queries to assess whether MLLMs can learn robust representations across modalities for audio deepfake detection. To this end, we explore text-aware, context-rich, question-answer based prompts with binary decisions. We hypothesise that such feature-guided reasoning will facilitate deeper multimodal understanding and enable robust feature learning for audio deepfake detection. We evaluate two MLLMs, Qwen2-Audio-7B-Instruct and SALMONN, in two evaluation modes: (a) zero-shot and (b) fine-tuned. Our experiments demonstrate that combining audio with a multi-prompt approach could be a viable way forward for audio deepfake detection. They also show that the models perform poorly without task-specific training and struggle to generalise to out-of-domain data. However, they achieve good performance on in-domain data with minimal supervision, indicating promising potential for audio deepfake detection.

DensePercept-NCSSD: Vision Mamba towards Real-time Dense Visual Perception with Non-Causal State Space Duality

Nov 16, 2025

Abstract: In this work, we propose an accurate, real-time optical flow and disparity estimation model that fuses pairwise input images in the proposed non-causal selective state space for dense perception tasks. The model is built on a non-causal Mamba block that is fast and efficient and copes with the constraints of real-time applications. It reduces inference time while maintaining high accuracy and low GPU usage for optical flow and disparity map generation. The results, analysis, and validation in real-life scenarios support the use of the proposed model for unified, real-time, and accurate 3D dense perception estimation tasks. The code and models can be found at https://github.com/vimstereo/DensePerceptNCSSD

Towards Obstacle-Avoiding Control of Planar Snake Robots Exploring Neuro-Evolution of Augmenting Topologies

Nov 15, 2025

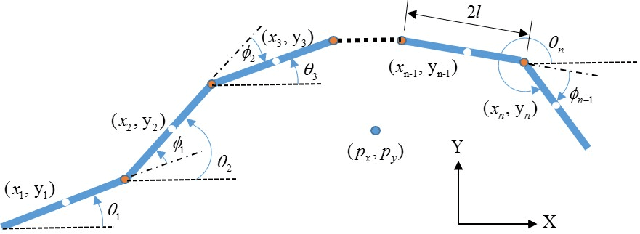

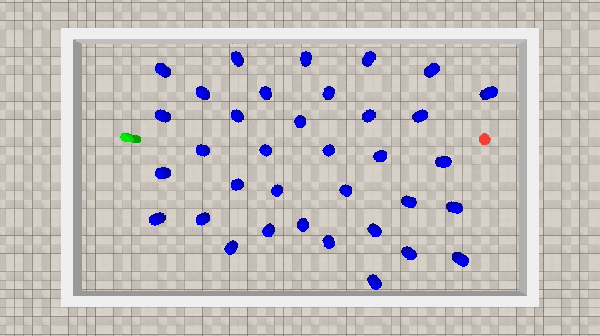

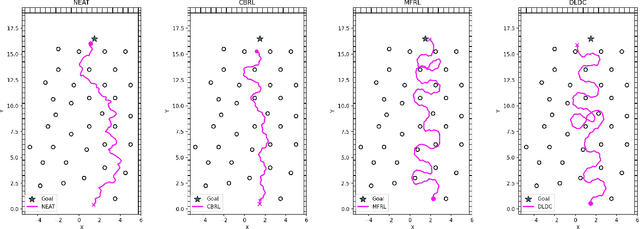

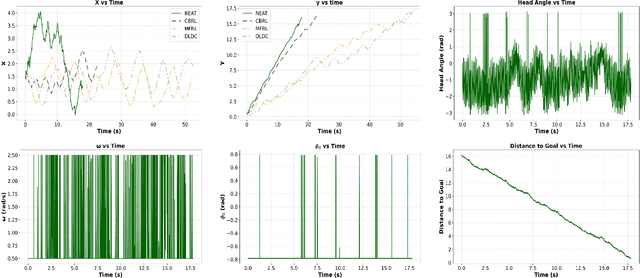

Abstract: This work aims to develop a resource-efficient solution for obstacle-avoiding tracking control of a planar snake robot in a densely cluttered environment. In particular, Neuro-Evolution of Augmenting Topologies (NEAT) is employed to generate dynamic gait parameters for the serpenoid gait function, which is applied to the joint angles of the snake robot, thereby steering the robot along a desired dynamic path. NEAT is a single neural-network based evolutionary algorithm that is known to work extremely well when the input layer has a much higher dimension than the output layer. For the planar snake robot, the input layer consists of the joint angles, link positions, and head link position, as well as the positions of nearby obstacles. The output layer, in contrast, consists of only the frequency and offset angle of the serpenoid gait, which control the speed and heading of the robot, respectively. Obstacle data from a LiDAR and robot data from various sensors, along with the location of the end goal and time, are used to parametrize a reward function that is maximized over iterations by selective propagation of superior neural networks. The implementation and experimental results show that the proposed approach is computationally efficient, especially for large environments with many obstacles. The framework has been verified through a physics-engine simulation study in PyBullet. The approach shows superior results to existing state-of-the-art methodologies and results comparable to the very recent CBRL approach, with significantly lower computational overhead. A video of the simulation can be found here: https://sites.google.com/view/neatsnakerobot
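
The abstract does not spell out the serpenoid gait function itself. As a minimal sketch, assuming the commonly used form phi_i(t) = A sin(omega t + i delta) + phi_0, the joint angles could be computed as below. Only the frequency and offset angle are named in the abstract as network outputs; the amplitude `amplitude`, the inter-joint phase shift `phase_shift`, and the function name are hypothetical and chosen for illustration.

```python
import math


def serpenoid_joint_angles(t, n_joints, amplitude, frequency, phase_shift, offset):
    """Return joint angles (radians) at time t for an assumed serpenoid gait.

    Assumed form: phi_i(t) = amplitude * sin(frequency * t + i * phase_shift) + offset
    - frequency (rad/s) influences forward speed,
    - offset (rad) biases every joint and so influences heading.
    amplitude and phase_shift are illustrative assumptions, not from the abstract.
    """
    return [
        amplitude * math.sin(frequency * t + i * phase_shift) + offset
        for i in range(n_joints)
    ]


# Example: four joints at t = 0 with a quarter-period phase shift between joints.
angles = serpenoid_joint_angles(
    t=0.0,
    n_joints=4,
    amplitude=0.5,
    frequency=2.0,
    phase_shift=math.pi / 2,
    offset=0.1,
)
```

In a setup like the one described, a controller would presumably update `frequency` and `offset` at each step while the remaining parameters stay fixed, but that split is an assumption here rather than something the abstract states.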

Neuromorphic Readout for Hadron Calorimeters

Feb 18, 2025

Abstract: We simulate hadrons impinging on a homogeneous lead-tungstate (PbWO4) calorimeter to investigate how the resulting light yield and its temporal structure, as detected by an array of light-sensitive sensors, can be processed by a neuromorphic computing system. Our model encodes temporal photon distributions as spike trains and employs a fully connected spiking neural network to estimate the total deposited energy, as well as the position and spatial distribution of the light emissions within the sensitive material. The extracted primitives offer valuable topological information about the shower development in the material, achieved without requiring a segmentation of the active medium. A potential nanophotonic implementation using III-V semiconductor nanowires is discussed. It can be both fast and energy efficient.

Exploring the Potential of Wireless-enabled Multi-Chip AI Accelerators

Jan 29, 2025

Abstract: The insatiable appetite of Artificial Intelligence (AI) workloads for computing power is pushing the industry to develop faster and more efficient accelerators. The rigidity of custom hardware, however, conflicts with the need for scalable and versatile architectures capable of catering to the needs of the evolving and heterogeneous pool of Machine Learning (ML) models in the literature. In this context, multi-chiplet architectures assembling multiple (perhaps heterogeneous) accelerators are an appealing option that is unfortunately hindered by the still rigid and inefficient chip-to-chip interconnects. In this paper, we explore the potential of wireless technology as a complement to existing wired interconnects in this multi-chiplet approach. Using an evaluation framework from the state-of-the-art, we show that wireless interconnects can lead to speedups of 10% on average and 20% maximum. We also highlight the importance of load balancing between the wired and wireless interconnects, which will be further explored in future work.

ViM-Disparity: Bridging the Gap of Speed, Accuracy and Memory for Disparity Map Generation

Dec 21, 2024

Abstract: In this work, we propose a Visual Mamba (ViM) based architecture to dissolve the existing trade-off between real-time inference, accuracy, and low computational overhead in disparity map generation (DMG). Moreover, we propose a performance measure that jointly evaluates the inference speed, computational overhead, and accuracy of a DMG model.

A Data-Driven Approach to Dataflow-Aware Online Scheduling for Graph Neural Network Inference

Nov 25, 2024

Abstract: Graph Neural Networks (GNNs) have shown significant promise in various domains, such as recommendation systems, bioinformatics, and network analysis. However, the irregularity of graph data poses unique challenges for efficient computation, leading to the development of specialized GNN accelerator architectures that surpass traditional CPU and GPU performance. Despite this, the structural diversity of input graphs results in varying performance across different GNN accelerators, depending on their dataflows. This variability in performance due to differing dataflows and graph properties remains largely unexplored, limiting the adaptability of GNN accelerators. To address this, we propose a data-driven framework for dataflow-aware latency prediction in GNN inference. Our approach involves training regressors to predict the latency of executing specific graphs on particular dataflows, using simulations on synthetic graphs. Experimental results indicate that our regressors can predict the optimal dataflow for a given graph with up to 91.28% accuracy and a Mean Absolute Percentage Error (MAPE) of 3.78%. Additionally, we introduce an online scheduling algorithm that uses these regressors to enhance scheduling decisions. Our experiments demonstrate that this algorithm achieves up to $3.17\times$ speedup in mean completion time and $6.26\times$ speedup in mean execution time compared to the best feasible baseline across all datasets.
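
For readers unfamiliar with the two reported metrics, the sketch below shows how MAPE is conventionally computed and how an "optimal dataflow" could be read off per-dataflow latency predictions. It is an illustration under assumptions, not the paper's code: the function names, the dataflow labels, and all numbers are hypothetical.

```python
def mape(actual, predicted):
    """Mean Absolute Percentage Error, returned in percent."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


def pick_dataflow(predicted_latency):
    """Return the dataflow whose predicted latency is lowest.

    predicted_latency maps a (hypothetical) dataflow name to the latency
    a regressor predicts for one input graph on that dataflow.
    """
    return min(predicted_latency, key=predicted_latency.get)


# Made-up predictions for a single graph on three hypothetical dataflows.
predicted = {"dataflow_a": 1.8, "dataflow_b": 1.2, "dataflow_c": 2.5}
best = pick_dataflow(predicted)

# Made-up measured vs. predicted latencies, used to score the regressor.
error = mape([2.0, 1.0, 2.5], [1.8, 1.2, 2.5])
```

Under this reading, the accuracy figure would count how often the selected dataflow matches the truly fastest one, while MAPE scores the latency predictions themselves.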
