Sergi Abadal

Neural 3D Object Reconstruction with Small-Scale Unmanned Aerial Vehicles

Sep 15, 2025

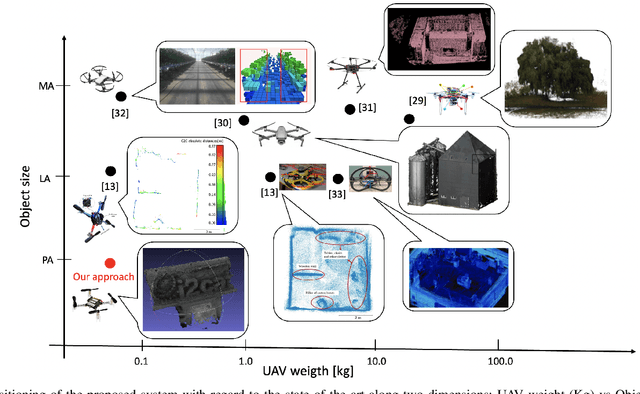

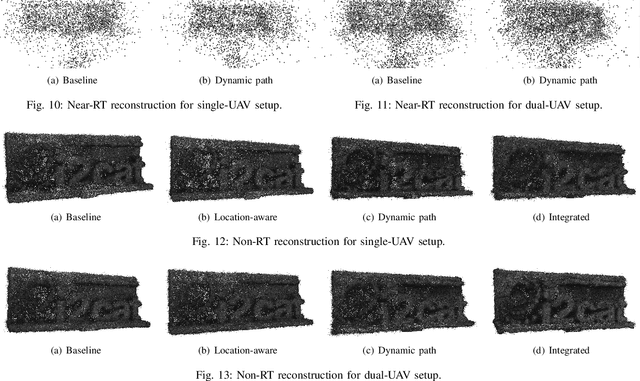

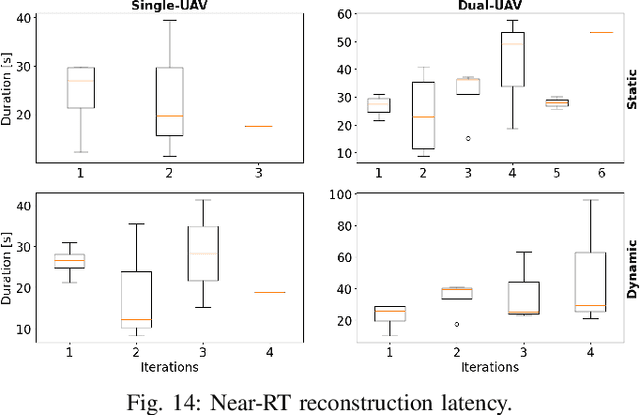

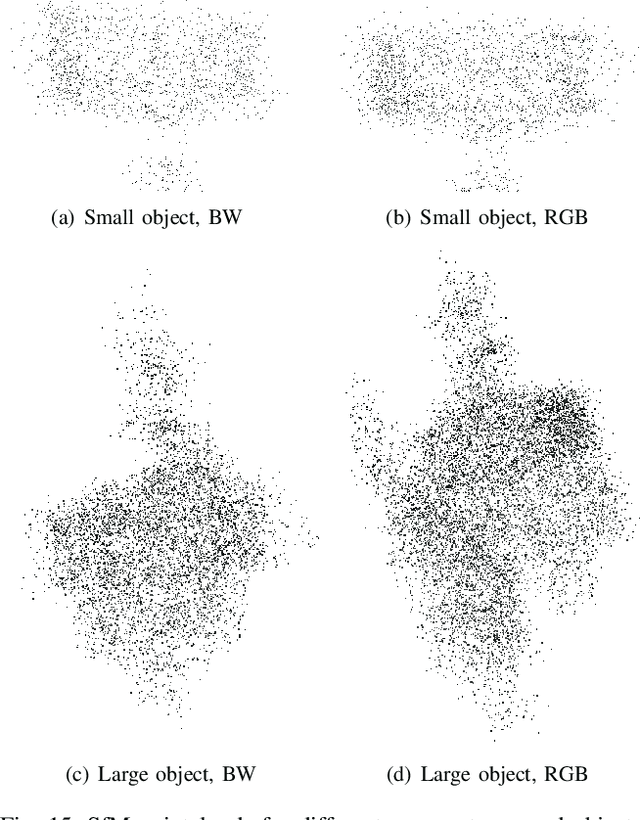

Abstract: Small Unmanned Aerial Vehicles (UAVs) exhibit immense potential for navigating indoor and hard-to-reach areas, yet their significant constraints in payload and autonomy have largely prevented their use for complex tasks like high-quality 3-Dimensional (3D) reconstruction. To overcome this challenge, we introduce a novel system architecture that enables fully autonomous, high-fidelity 3D scanning of static objects using UAVs weighing under 100 grams. Our core innovation lies in a dual-reconstruction pipeline that creates a real-time feedback loop between data capture and flight control. A near-real-time (near-RT) process uses Structure from Motion (SfM) to generate an instantaneous pointcloud of the object. The system analyzes the model quality on the fly and dynamically adapts the UAV's trajectory to intelligently capture new images of poorly covered areas. This ensures comprehensive data acquisition. For the final, detailed output, a non-real-time (non-RT) pipeline employs a Neural Radiance Fields (NeRF)-based Neural 3D Reconstruction (N3DR) approach, fusing SfM-derived camera poses with precise Ultra Wide-Band (UWB) location data to achieve superior accuracy. We implemented and validated this architecture using Crazyflie 2.1 UAVs. Our experiments, conducted in both single- and multi-UAV configurations, show that dynamic trajectory adaptation consistently improves reconstruction quality over static flight paths. This work demonstrates a scalable and autonomous solution that unlocks the potential of miniaturized UAVs for fine-grained 3D reconstruction in constrained environments, a capability previously limited to much larger platforms.
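The abstract does not detail how the near-RT process decides which areas are poorly covered. The following is a minimal sketch that assumes coverage is approximated by counting SfM points per azimuthal sector around the object, with the UAV orbiting at a fixed radius and height. `sector_of`, `next_viewpoint`, the sector count and the orbit geometry are hypothetical illustrations, not the authors' method.

```python
import math
from collections import Counter


def sector_of(point, center, n_sectors):
    """Map a 3D point to an azimuthal sector index around the object's vertical axis."""
    dx, dy = point[0] - center[0], point[1] - center[1]
    angle = math.atan2(dy, dx) % (2 * math.pi)
    # The final modulo guards against float rounding pushing the index to n_sectors.
    return int(angle / (2 * math.pi) * n_sectors) % n_sectors


def next_viewpoint(points, center, radius, height, n_sectors=8):
    """Return (sector, camera position) aimed at the sector with the fewest SfM points.

    Hypothetical policy: coverage = raw point count per sector; the UAV is placed
    on a circular orbit of the given radius, at the angular middle of that sector.
    """
    counts = Counter(sector_of(p, center, n_sectors) for p in points)
    # Least-covered sector; ties are broken by the lowest sector index.
    target = min(range(n_sectors), key=lambda s: (counts.get(s, 0), s))
    mid_angle = (target + 0.5) * 2 * math.pi / n_sectors
    x = center[0] + radius * math.cos(mid_angle)
    y = center[1] + radius * math.sin(mid_angle)
    return target, (x, y, height)
```

For example, a sparse cloud with points only in the sectors facing +x and +y sends the UAV toward the first empty sector. A real system could weigh point density, reprojection error or viewing angle rather than raw counts.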

Quantum Computing for Large-scale Network Optimization: Opportunities and Challenges

Sep 09, 2025

Abstract: The complexity of large-scale 6G-and-beyond networks demands innovative approaches for multi-objective optimization over vast search spaces, a task that is often intractable. Quantum computing (QC) emerges as a promising technology for efficient large-scale optimization. We present our vision of leveraging QC to tackle key classes of problems in future mobile networks. By analyzing and identifying their common features, particularly their graph-centric representation, we propose a unified strategy involving QC algorithms. Specifically, we outline a methodology for optimization using quantum annealing as well as quantum reinforcement learning. Additionally, we discuss the main challenges that QC algorithms and hardware must overcome to effectively optimize future networks.
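The following sketch illustrates the kind of graph-centric formulation a quantum annealer targets. It encodes max-cut as a quadratic unconstrained binary optimization (QUBO) problem: network nodes are split into two groups so that as much edge weight as possible crosses between them. Max-cut is an assumed example rather than a problem class taken from the paper, and `maxcut_qubo` is a hypothetical helper. Exhaustive search stands in for the annealer, which is feasible only for a handful of nodes.

```python
from itertools import product


def maxcut_qubo(edges):
    """Build QUBO coefficients Q[(i, j)] whose minimum energy is minus the max-cut weight.

    Each edge (i, j, w) contributes w * (2*x_i*x_j - x_i - x_j), which equals -w
    when x_i != x_j (the edge is cut) and 0 otherwise.
    """
    Q = {}
    for i, j, w in edges:
        Q[(i, i)] = Q.get((i, i), 0) - w
        Q[(j, j)] = Q.get((j, j), 0) - w
        Q[(i, j)] = Q.get((i, j), 0) + 2 * w
    return Q


def energy(Q, x):
    """QUBO energy of a binary assignment x (diagonal terms use x_i * x_i = x_i)."""
    return sum(c * x[i] * x[j] for (i, j), c in Q.items())


def brute_force_minimum(Q, n):
    """Exhaustively minimize the QUBO; an annealer would sample low-energy states instead."""
    best = min(product((0, 1), repeat=n), key=lambda x: energy(Q, x))
    return best, energy(Q, best)
```

Quantum annealers natively minimize Ising energies. A QUBO maps to an Ising model through the substitution x_i = (1 + s_i)/2 with spins s_i in {-1, +1}.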

Communicating Smartly in the Molecular Domain: Neural Networks in the Internet of Bio-Nano Things

Jun 26, 2025

Abstract: Recent developments in the Internet of Bio-Nano Things (IoBNT) are laying the groundwork for innovative applications across the healthcare sector. Nanodevices designed to operate within the body and managed remotely via the internet are envisioned to promptly detect and act on potential diseases. In this vision, an inherent challenge arises from the limited capabilities of individual nanosensors: they must communicate with one another to collaborate as a cluster. To explore the limits of these clustering capabilities, this survey emphasizes data-driven communication strategies in molecular communication (MC) channels as a means of linking nanosensors. Because machine learning (ML) methods offer the flexibility and robustness needed to cope with the dynamic nature of MC channels, the MC research community frequently turns to neural network (NN) architectures. This interdisciplinary research field encompasses various aspects, including the use of NNs to facilitate communication in MC environments, their implementation at the nanoscale, explainable approaches for NNs, and dataset generation for training. In this survey, we provide a comprehensive analysis of recent trends in NN architectures for MC, the feasibility of their implementation at the nanoscale, applied explainable artificial intelligence (XAI) techniques, and the accessibility of datasets along with best practices for their generation. Additionally, we offer open-source code repositories that illustrate NN-based methods to support reproducible research for key MC scenarios. Finally, we identify emerging research challenges, such as robust NN architectures, biologically integrated NN modules, and scalable training strategies.

Experimental Assessment of A Framework for In-body RF-backscattering Localization

Jun 24, 2025

Abstract: Localization of in-body devices is beneficial for Gastrointestinal (GI) diagnosis and targeted treatment. Traditional methods such as imaging and endoscopy are invasive and limited in resolution, highlighting the need for innovative alternatives. This study presents an experimental framework for Radio Frequency (RF)-backscatter-based in-body localization, inspired by the ReMix approach, and evaluates its performance in real-world conditions. The experimental setup includes an in-body backscatter device and various off-body antenna configurations to investigate harmonic generation and reception in air, chicken and pork tissues. The results indicate that backscatter device positioning, antenna selection, and gain settings significantly impact performance, with denser biological tissues leading to greater attenuation. The study also highlights challenges such as external interference and plastic enclosures affecting propagation. The findings emphasize the importance of interference mitigation and refined propagation models to enhance performance.
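ReMix-style backscatter uses a nonlinear element in the in-body device to re-radiate mixing products of two transmitted tones. Because these products fall at frequencies distinct from the transmitted ones, the device's response can be separated from strong reflections off the body surface, which stay at the transmit frequencies. The following sketch enumerates candidate receive frequencies for two tones. The tone values, the third-order limit and `mixing_products` are hypothetical choices for illustration; the abstract does not state the frequencies used.

```python
def mixing_products(f1, f2, max_order=3):
    """Return sorted positive frequencies m*f1 + n*f2 with 2 <= |m| + |n| <= max_order.

    Order-1 terms (the transmitted tones themselves) are skipped; units follow
    the inputs (MHz in the example below).
    """
    products = set()
    for m in range(-max_order, max_order + 1):
        for n in range(-max_order, max_order + 1):
            order = abs(m) + abs(n)
            if 2 <= order <= max_order:
                f = m * f1 + n * f2
                if f > 0:  # keep physically meaningful (positive) frequencies
                    products.add(f)
    return sorted(products)


# Hypothetical tones at 900 MHz and 950 MHz.
print(mixing_products(900, 950))
```

With these tones, for instance, the second-order sum f1 + f2 = 1850 MHz sits well away from both transmitted tones.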

Experimental Assessment of Neural 3D Reconstruction for Small UAV-based Applications

Jun 24, 2025

Abstract: The increasing miniaturization of Unmanned Aerial Vehicles (UAVs) has expanded their deployment potential to indoor and hard-to-reach areas. However, this trend introduces distinct challenges, particularly in terms of flight dynamics and power consumption, which limit the UAVs' autonomy and mission capabilities. This paper presents a novel approach to overcoming these limitations by integrating Neural 3D Reconstruction (N3DR) with small UAV systems for fine-grained 3-Dimensional (3D) digital reconstruction of small static objects. Specifically, we design, implement, and evaluate an N3DR-based pipeline that leverages advanced models, namely Instant-ngp, Nerfacto, and Splatfacto, to improve the quality of 3D reconstructions using images of the object captured by a fleet of small UAVs. We assess the performance of these models using various imagery and pointcloud metrics, comparing them against the baseline Structure from Motion (SfM) algorithm. The experimental results demonstrate that the N3DR-enhanced pipeline significantly improves reconstruction quality, making it feasible for small UAVs to support high-precision 3D mapping and anomaly detection in constrained environments. More generally, our results highlight the potential of N3DR to advance the capabilities of miniaturized UAV systems.
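The abstract does not list the imagery and pointcloud metrics used. As an assumed example, the following sketch implements two common choices. The first is the peak signal-to-noise ratio (PSNR) between a rendered view and a reference photo. The second is the symmetric Chamfer distance between a reconstructed pointcloud and a reference one. Chamfer conventions vary (squared versus plain distances, sums versus means), so this is one variant. The brute-force nearest-neighbour search is O(N·M) and suits only small clouds.

```python
import math


def chamfer_distance(a, b):
    """Symmetric Chamfer distance: mean squared nearest-neighbour distance, both directions."""
    def one_way(src, dst):
        return sum(min(math.dist(p, q) ** 2 for q in dst) for p in src) / len(src)
    return one_way(a, b) + one_way(b, a)


def psnr(img, ref, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized flat pixel lists."""
    mse = sum((x - y) ** 2 for x, y in zip(img, ref)) / len(ref)
    return math.inf if mse == 0 else 10 * math.log10(max_value ** 2 / mse)
```

A lower Chamfer distance and a higher PSNR both indicate a reconstruction closer to the reference.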

Beyond One-Size-Fits-All: A Study of Neural and Behavioural Variability Across Different Recommendation Categories

Jun 16, 2025

Abstract: Traditionally, Recommender Systems (RS) have primarily measured performance based on the accuracy and relevance of their recommendations. However, this algorithm-centric approach overlooks how different types of recommendations impact user engagement and shape the overall quality of experience. In this paper, we shift the focus to the user and address, for the first time, the challenge of decoding the neural and behavioural variability across distinct recommendation categories, considering more than just relevance. Specifically, we conducted a controlled study using a comprehensive e-commerce dataset containing various recommendation types, and collected electroencephalography and behavioural data. We analysed both neural and behavioural responses to recommendations that were categorised as Exact, Substitute, Complement, or Irrelevant products within search query results. Our findings offer novel insights into user preferences and decision-making processes, revealing meaningful relationships between behavioural and neural patterns for each category while also indicating inter-subject variability.

Frequency-Dependent Power Consumption Modeling of CMOS Transmitters for WNoC Architectures

May 19, 2025

Abstract: Wireless Network-on-Chip (WNoC) systems, which wirelessly interconnect the chips of a computing system, have been proposed as a complement to existing chip-to-chip wired links. However, their feasibility depends on the availability of custom-designed high-speed, tiny, ultra-efficient transceivers. This represents a challenge due to the tradeoffs between bandwidth, area, and energy efficiency that emerge as frequency increases, which suggests that there is an optimal frequency region. To aid in the search for such an optimal design point, this paper presents a behavioral model that quantifies the expected power consumption of oscillators, mixers, and power amplifiers as a function of frequency. The model is built on extensive surveys of the respective sub-blocks, all based on experimental data. Combining the models of the three sub-blocks yields a comprehensive power model, which will aid in selecting the optimal operating frequency for WNoC systems.
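The abstract says the sub-block models are put together but does not give the composition rule. The following is one minimal way to write the composed model, assuming the three contributions simply add at a given carrier frequency f. It also uses energy per bit as one possible figure of merit for comparing frequencies. Here R(f) is an assumed achievable data rate and is not defined in the abstract.

$$
P_{\mathrm{TX}}(f) = P_{\mathrm{osc}}(f) + P_{\mathrm{mix}}(f) + P_{\mathrm{PA}}(f), \qquad
E_{\mathrm{b}}(f) = \frac{P_{\mathrm{TX}}(f)}{R(f)}
$$

Under this reading, moving to a higher f lowers the energy per bit only while the relative gain in R(f) from wider available bandwidth outpaces the relative growth of P_TX(f).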

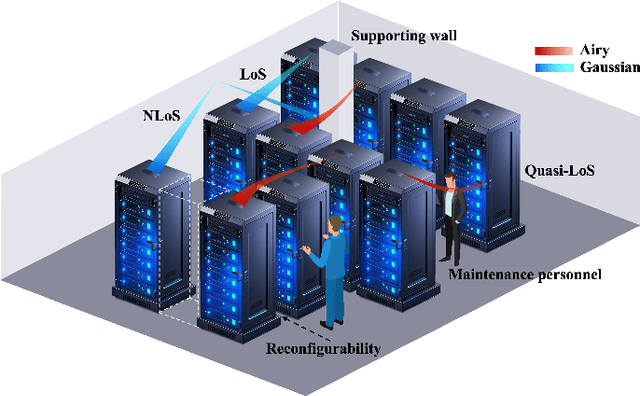

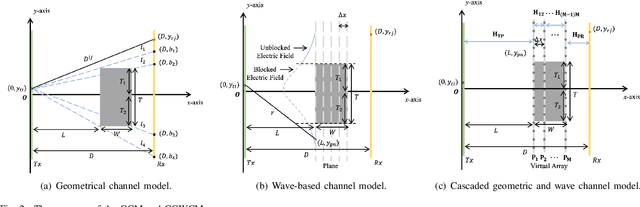

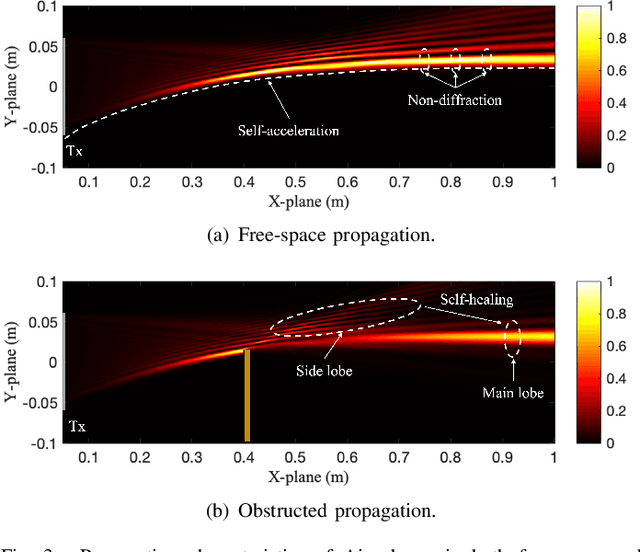

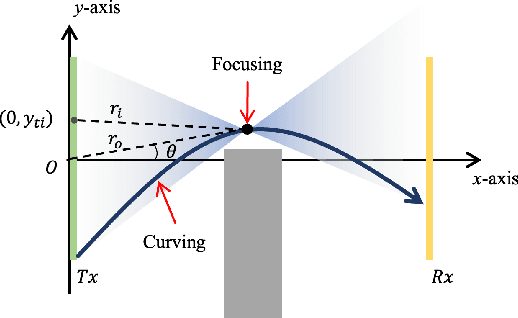

Terahertz Wireless Data Center: Gaussian Beam or Airy Beam?

Apr 29, 2025

Abstract: Terahertz (THz) communication is emerging as a pivotal enabler for 6G and beyond wireless systems owing to its multi-GHz bandwidth. One of its novel applications is in wireless data centers, where it enables ultra-high data rates while enhancing network reconfigurability and scalability. However, due to numerous racks, supporting walls, and densely deployed antennas, the line-of-sight (LoS) path in data centers is often partially rather than fully obstructed, resulting in quasi-LoS propagation and degraded spectral efficiency. To address this issue, this paper investigates Airy beam-based hybrid beamforming as a promising technique to mitigate quasi-LoS propagation and enhance spectral efficiency in THz wireless data centers. Specifically, a cascaded geometrical and wave-based channel model (CGWCM) is proposed for quasi-LoS scenarios, which accounts for diffraction effects while being simpler than the conventional wave-based model. Then, the characteristics and generation of the Airy beam are analyzed, and beam search methods for quasi-LoS scenarios are proposed, namely hierarchical focusing-Airy beam search and low-complexity beam search. Simulation results validate the effectiveness of the CGWCM and demonstrate the superiority of the Airy beam over Gaussian beams in mitigating blockages, verifying its potential for practical THz wireless communication in data centers.
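For reference, the one-dimensional paraxial aperture profiles usually compared in this setting are the Gaussian beam and the finite-energy Airy beam. These are standard textbook forms, not the paper's array-based hybrid beamforming model. In them, w_0 is the Gaussian beam waist, x_0 a transverse scale, a > 0 a small truncation factor that keeps the Airy beam's energy finite, and k = 2π/λ the wavenumber. The last expression gives the lateral drift of the Airy main lobe after propagating a distance z.

$$
E_{\mathrm{G}}(x, 0) = \exp\!\left(-\frac{x^2}{w_0^2}\right), \qquad
E_{\mathrm{A}}(x, 0) = \mathrm{Ai}\!\left(\frac{x}{x_0}\right)\exp\!\left(\frac{a\,x}{x_0}\right), \qquad
x_{\mathrm{d}}(z) = \frac{z^2}{4k^2x_0^3}
$$

The Airy main lobe follows this parabolic path as it propagates, while a Gaussian beam travels in a straight line and spreads. This curved trajectory is one reason Airy beams are attractive when part of the direct path is blocked.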

OASST-ETC Dataset: Alignment Signals from Eye-tracking Analysis of LLM Responses

Mar 13, 2025

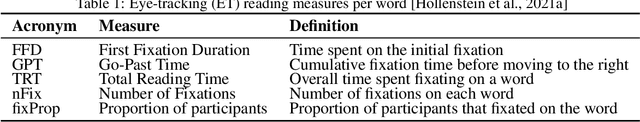

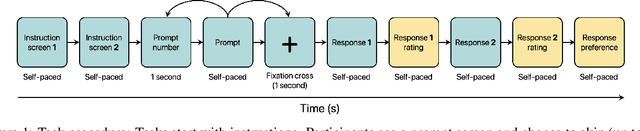

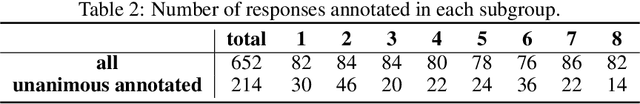

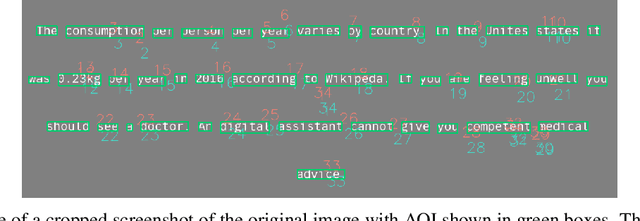

Abstract: While Large Language Models (LLMs) have significantly advanced natural language processing, aligning them with human preferences remains an open challenge. Although current alignment methods rely primarily on explicit feedback, eye-tracking (ET) data offers insights into real-time cognitive processing during reading. In this paper, we present OASST-ETC, a novel eye-tracking corpus capturing the reading patterns of 24 participants as they evaluated LLM-generated responses from the OASST1 dataset. Our analysis reveals distinct reading patterns between preferred and non-preferred responses, which we compare with synthetic eye-tracking data. Furthermore, we examine the correlation between human reading measures and attention patterns from various transformer-based models, finding stronger correlations for preferred responses. This work introduces a unique resource for studying human cognitive processing in LLM evaluation and suggests promising directions for incorporating eye-tracking data into alignment methods. The dataset and analysis code are publicly available.
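The abstract does not name the correlation statistic. The following sketch uses Spearman rank correlation, a common choice when comparing per-token human reading measures (for example, total reading time) with per-token model attention weights, since it only assumes a monotonic relationship. The statistic, the choice of measures and all function names are assumptions for illustration.

```python
import math


def ranks(values):
    """Assign 1-based ranks, averaging the ranks of tied values."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i..j
        for k in range(i, j + 1):
            r[order[k]] = avg
        i = j + 1
    return r


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the (tie-averaged) ranks."""
    return pearson(ranks(x), ranks(y))
```

Applied per response, this yields one coefficient per reading measure and model, and those coefficients can then be compared between preferred and non-preferred responses.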

Exploring the Potential of Wireless-enabled Multi-Chip AI Accelerators

Jan 29, 2025

Abstract: The insatiable appetite of Artificial Intelligence (AI) workloads for computing power is pushing the industry to develop faster and more efficient accelerators. The rigidity of custom hardware, however, conflicts with the need for scalable and versatile architectures that can cater to the evolving and heterogeneous pool of Machine Learning (ML) models in the literature. In this context, multi-chiplet architectures assembling multiple (perhaps heterogeneous) accelerators are an appealing option, unfortunately hindered by still-rigid and inefficient chip-to-chip interconnects. In this paper, we explore the potential of wireless technology as a complement to existing wired interconnects in this multi-chiplet approach. Using a state-of-the-art evaluation framework, we show that wireless interconnects can lead to speedups of 10% on average and up to 20%. We also highlight the importance of load balancing between the wired and wireless interconnects, which will be further explored in future work.
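A toy model shows why load balancing between the two interconnects matters. If a single transfer can be split across a wired and a wireless link that run in parallel, sending each link a share proportional to its bandwidth makes both finish together. Routing everything over one link instead leaves the other idle. The following sketch assumes ideal, contention-free links with no latency. `split_transfer`, `speedup` and the bandwidth figures are hypothetical and are not how the paper's evaluation framework models the system.

```python
def split_transfer(bytes_total, bw_wired, bw_wireless):
    """Split one transfer across two parallel links so both finish at the same time.

    Assumes ideal links: transfer time = bytes / bandwidth, no latency or contention.
    Returns (wired_bytes, wireless_bytes, completion_time).
    """
    share_wireless = bw_wireless / (bw_wired + bw_wireless)
    wireless_bytes = bytes_total * share_wireless
    wired_bytes = bytes_total - wireless_bytes
    time = wired_bytes / bw_wired  # equals wireless_bytes / bw_wireless
    return wired_bytes, wireless_bytes, time


def speedup(time_baseline, time_new):
    """Speedup as a ratio of completion times; 1.10 is commonly reported as a 10% speedup."""
    return time_baseline / time_new


# Hypothetical example: 1000 bytes, wired link at 80 B/cycle, wireless link at 20 B/cycle.
wired, wireless, t = split_transfer(1000, 80, 20)
print(wired, wireless, t, speedup(1000 / 80, t))
```

In this toy case, the balanced split finishes in 10 cycles instead of the 12.5 cycles needed by the wired link alone.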
