Zih-Yun Chiu

Differentiable Rendering-based Pose Estimation for Surgical Robotic Instruments

Mar 07, 2025

Abstract:Robot pose estimation is a challenging and crucial task for vision-based surgical robotic automation. Typical robotic calibration approaches, however, are not applicable to surgical robots, such as the da Vinci Research Kit (dVRK), due to joint angle measurement errors from cable-drives and the partially visible kinematic chain. Hence, previous works in surgical robotic automation used tracking algorithms to estimate the pose of the surgical tool in real-time and compensate for the joint angle errors. However, a major limitation of these previous tracking works is the initialization step, which relied only on keypoints and SolvePnP. In this work, we fully explore the potential of geometric primitives beyond keypoints, such as cylinders, with differentiable rendering, and construct a versatile pose matching pipeline in a novel pose hypothesis space. We demonstrate the state-of-the-art performance of our single-shot calibration method with both calibration consistency and real surgical tasks. As a result, this marker-less calibration approach proves to be a robust and generalizable initialization step for surgical tool tracking.

SURESTEP: An Uncertainty-Aware Trajectory Optimization Framework to Enhance Visual Tool Tracking for Robust Surgical Automation

Mar 29, 2024

Abstract:Inaccurate tool localization is one of the main reasons for failures in automating surgical tasks. Imprecise robot kinematics and noisy observations caused by the poor visual acuity of an endoscopic camera make tool tracking challenging. Previous works in surgical automation adopt environment-specific setups or hard-coded strategies instead of explicitly considering motion and observation uncertainty of tool tracking in their policies. In this work, we present SURESTEP, an uncertainty-aware trajectory optimization framework for robust surgical automation. We model the uncertainty of tool tracking with the components motivated by the sources of noise in typical surgical scenes. Using a Gaussian assumption to propagate our uncertainty models through a given tool trajectory, SURESTEP provides a general framework that minimizes the upper bound on the entropy of the final estimated tool distribution. We compare SURESTEP with a baseline method on a real-world suture needle regrasping task under challenging environmental conditions, such as poor lighting and a moving endoscopic camera. The results over 60 regrasps on the da Vinci Research Kit (dVRK) demonstrate that our optimized trajectories significantly outperform the un-optimized baseline.

Robust Surgical Tool Tracking with Pixel-based Probabilities for Projected Geometric Primitives

Mar 08, 2024

Abstract:Controlling robotic manipulators via visual feedback requires a known coordinate frame transformation between the robot and the camera. Uncertainties in mechanical systems as well as camera calibration create errors in this coordinate frame transformation. These errors result in poor localization of robotic manipulators and create a significant challenge for applications that rely on precise interactions between manipulators and the environment. In this work, we estimate the camera-to-base transform and joint angle measurement errors for surgical robotic tools using an image-based insertion-shaft detection algorithm and probabilistic models. We apply our proposed approach in both a structured and an unstructured environment to demonstrate the efficacy of our methods.

Finding Biomechanically Safe Trajectories for Robot Manipulation of the Human Body in a Search and Rescue Scenario

Sep 26, 2023

Abstract:There has been increasing awareness of the difficulties in reaching and extracting people from mass casualty scenarios, such as those arising from natural disasters. While platforms have been designed to consider reaching casualties and even carrying them out of harm's way, the challenge of repositioning a casualty from its found configuration to one suitable for extraction has not been explicitly explored. Furthermore, this planning problem needs to incorporate biomechanical safety considerations for the casualty. Thus, we present a first solution to biomechanically safe trajectory generation for repositioning limbs of unconscious human casualties. We describe biomechanical safety as mathematical constraints, mechanical descriptions of the dynamics for the robot-human coupled system, and the planning and trajectory optimization process that considers this coupled and constrained system. We finally evaluate our approach over several variations of the problem and demonstrate it on a real robot and human subject. This work provides a crucial part of search and rescue that can be used in conjunction with past and present works involving robots and vision systems designed for search and rescue.

Semantic-SuPer: A Semantic-aware Surgical Perception Framework for Endoscopic Tissue Classification, Reconstruction, and Tracking

Oct 29, 2022

Abstract:Accurate and robust tracking and reconstruction of the surgical scene is a critical enabling technology toward autonomous robotic surgery. Existing algorithms for 3D perception in surgery mainly rely on geometric information, while we propose to also leverage semantic information inferred from the endoscopic video using image segmentation algorithms. In this paper, we present a novel, comprehensive surgical perception framework, Semantic-SuPer, that integrates geometric and semantic information to facilitate data association, 3D reconstruction, and tracking of endoscopic scenes, benefiting downstream tasks like surgical navigation. The proposed framework is demonstrated on challenging endoscopic data with deforming tissue, showing its advantages over our baseline and several other state-of-the-art approaches. Our code and dataset will be available at https://github.com/ucsdarclab/Python-SuPer.

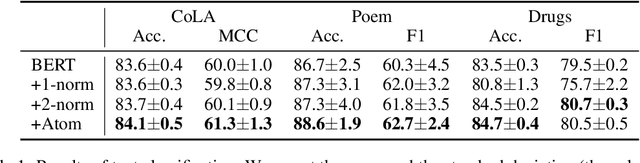

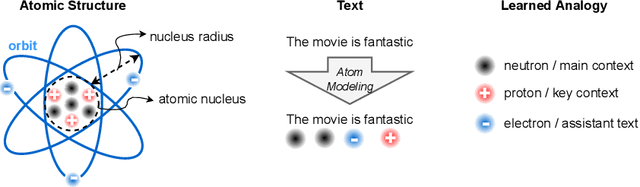

Atomized Deep Learning Models

Oct 07, 2022

Abstract:Deep learning models often tackle the intra-sample structure, such as the order of words in a sentence and pixels in an image, but have not paid much attention to the inter-sample relationship. In this paper, we show that explicitly modeling the inter-sample structure to be more discretized can potentially help a model's expressivity. We propose a novel method, Atom Modeling, that can discretize a continuous latent space by drawing an analogy between a data point and an atom, which is naturally spaced away from other atoms with distances depending on their intra structures. Specifically, we model each data point as an atom composed of electrons, protons, and neutrons and minimize the potential energy caused by the interatomic force among data points. Through experiments with qualitative analysis in our proposed Atom Modeling on synthetic and real datasets, we find that Atom Modeling can improve the performance by maintaining the inter-sample relation and can capture an interpretable intra-sample relation by mapping each component in a data point to electron/proton/neutron.

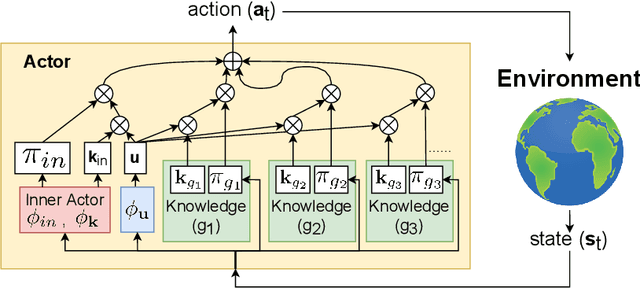

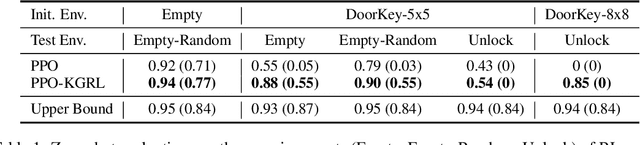

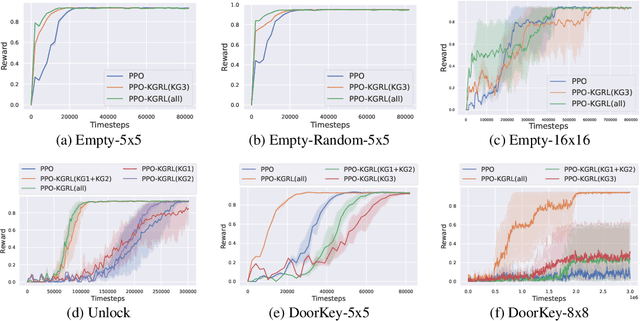

Knowledge-Grounded Reinforcement Learning

Oct 07, 2022

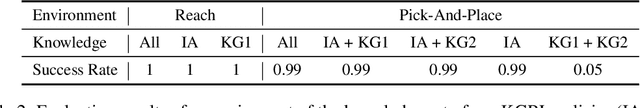

Abstract:Receiving knowledge, abiding by laws, and being aware of regulations are common behaviors in human society. Bearing in mind that reinforcement learning (RL) algorithms benefit from mimicking humanity, in this work, we propose that an RL agent can act on external guidance in both its learning process and model deployment, making the agent more socially acceptable. We introduce the concept, Knowledge-Grounded RL (KGRL), with a formal definition that an agent learns to follow external guidelines and develop its own policy. Moving towards the goal of KGRL, we propose a novel actor model with an embedding-based attention mechanism that can attend to either a learnable internal policy or external knowledge. The proposed method is orthogonal to training algorithms, and the external knowledge can be flexibly recomposed, rearranged, and reused in both training and inference stages. Through experiments on tasks with discrete and continuous action space, our KGRL agent is shown to be more sample efficient and generalizable, and it has flexibly rearrangeable knowledge embeddings and interpretable behaviors.

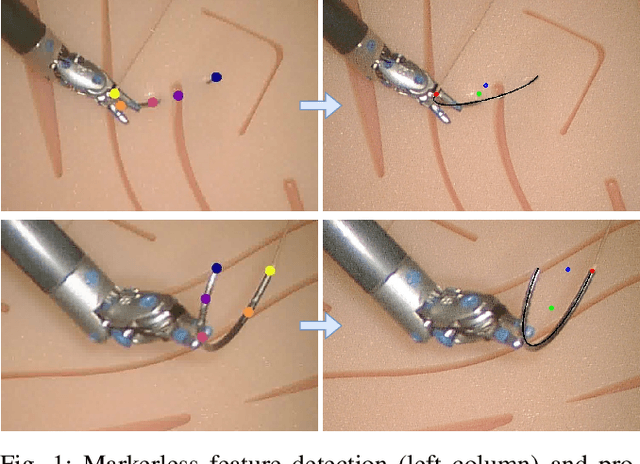

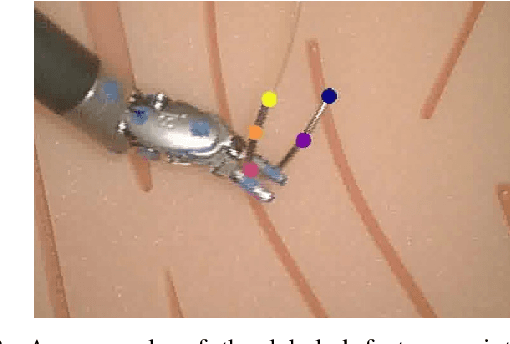

Markerless Suture Needle 6D Pose Tracking with Robust Uncertainty Estimation for Autonomous Minimally Invasive Robotic Surgery

Sep 26, 2021

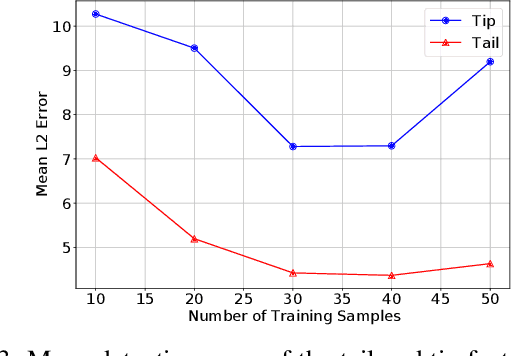

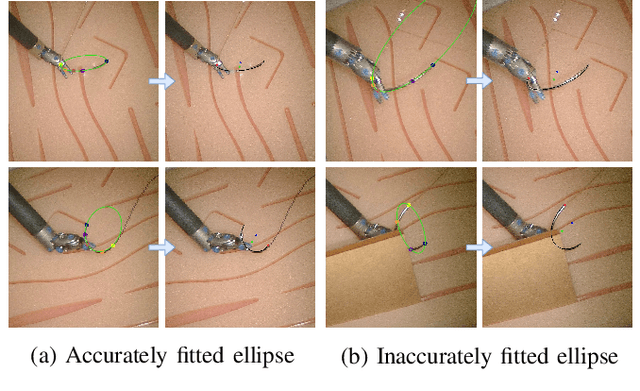

Abstract:Suture needle localization plays a crucial role towards autonomous suturing. To track the 6D pose of a suture needle robustly, previous approaches usually add markers on the needle or perform complex operations for feature extraction, making these methods difficult to apply in real-world environments. Therefore, in this work, we present a novel approach for markerless suture needle pose tracking using Bayesian filters. A data-efficient feature point detector is trained to extract the feature points on the needle. Then, based on these detections, we propose a novel observation model that measures the overlap between the detections and the expected projection of the needle, which can be calculated efficiently. In addition, for the proposed method, we derive the approximation for the covariance of the observation noise, making this model more robust to the uncertainty in the detections. The experimental results in simulation show that the proposed observation model achieves low tracking errors of approximately 1.5mm in position in space and 1 degree in orientation. We also demonstrate the qualitative results of our trained markerless feature detector combined with the proposed observation model in real-world environments. The results show high consistency between the projection of the tracked pose and that of the real pose.

Parallelized Reverse Curriculum Generation

Aug 04, 2021

Abstract:For reinforcement learning (RL), it is challenging for an agent to master a task that requires a specific series of actions due to sparse rewards. To solve this problem, reverse curriculum generation (RCG) provides a reverse expansion approach that automatically generates a curriculum for the agent to learn. More specifically, RCG adapts the initial state distribution from the neighborhood of a goal to a distance as training proceeds. However, the initial state distribution generated for each iteration might be biased, thus making the policy overfit or slowing down the reverse expansion rate. While training RCG for actor-critic (AC) based RL algorithms, this poor generalization and slow convergence might be induced by the tight coupling between an AC pair. Therefore, we propose a parallelized approach that simultaneously trains multiple AC pairs and periodically exchanges their critics. We empirically demonstrate that this proposed approach can improve RCG in performance and convergence, and it can also be applied to other AC based RL algorithms with adapted initial state distribution.

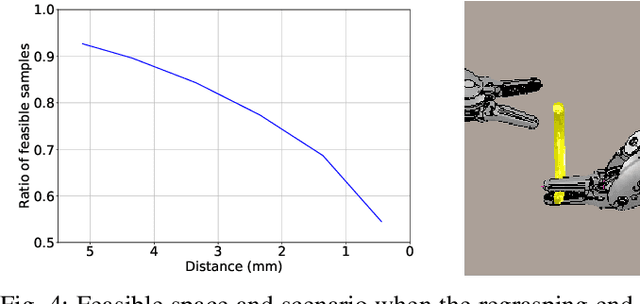

Bimanual Regrasping for Suture Needles using Reinforcement Learning for Rapid Motion Planning

Nov 09, 2020

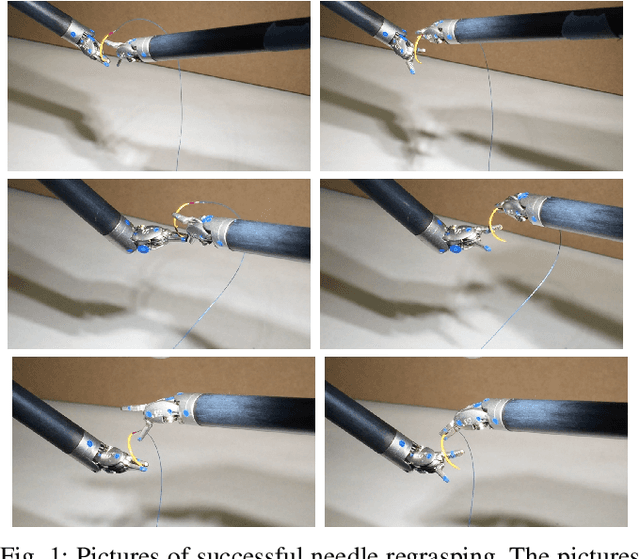

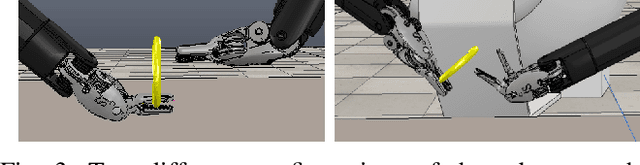

Abstract:Regrasping a suture needle is an important process in suturing, and a previous study has shown that it takes on average 7.4s before the needle is thrown again. To bring efficiency into suturing, prior work either designs a task-specific mechanism or guides the gripper toward some specific pick-up point for proper grasping of a needle. Yet, these methods are usually not deployable when the working space is changed. These prior efforts highlight the need for more efficient regrasping and more generalizability of a proposed method. Therefore, in this work, we present rapid trajectory generation for bimanual needle regrasping via reinforcement learning (RL). Demonstrations from a sampling-based motion planning algorithm are incorporated to speed up the learning. In addition, we propose the ego-centric state and action spaces for this bimanual planning problem, where the reference frames are on the end-effectors instead of some fixed frame. Thus, the learned policy can be directly applied to any robot configuration and even to different robot arms. Our experiments in simulation show that the success rate of a single pass is 97%, and the planning time is 0.0212s on average, which outperforms other widely used motion planning algorithms. For the real-world experiments, the success rate is 73.3% if the needle pose is reconstructed from an RGB image, with a planning time of 0.0846s and a run time of 5.1454s. If the needle pose is known beforehand, the success rate becomes 90.5%, with a planning time of 0.0807s and a run time of 2.8801s.
