Ryan K. Orosco

HemoSet: The First Blood Segmentation Dataset for Automation of Hemostasis Management

Mar 24, 2024

Abstract: Hemorrhaging occurs in surgeries of all types, forcing surgeons to quickly adapt to the visual interference that results from blood rapidly filling the surgical field. Introducing automation into the crucial surgical task of hemostasis management would offload mental and physical tasks from the surgeon and surgical assistants while simultaneously increasing the efficiency and safety of the operation. The first step in automating hemostasis management is detecting blood in the surgical field. To propel the development of blood detection algorithms in surgery, we present HemoSet, the first blood segmentation dataset based on bleeding during a live animal robotic surgery. Our dataset features vessel hemorrhage scenarios where turbulent flow leads to abnormal pooling geometries in surgical fields. These pools form under conditions endemic to surgical procedures: uneven, heterogeneous tissue, glossy lighting, and rapid tool movement. We benchmark several state-of-the-art segmentation models and provide insight into the difficulties specific to blood detection. We intend for HemoSet to spur development of autonomous blood suction tools by providing a platform for training and refining blood segmentation models, addressing the precision needed for such robotics.
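
The abstract does not say which metrics the benchmark reports. As an assumption, here is a minimal sketch of two metrics commonly used for binary segmentation, intersection over union (IoU) and the Dice coefficient, computed between a predicted and a ground-truth blood mask. The function name `iou_and_dice` is hypothetical.

```python
import numpy as np


def iou_and_dice(pred: np.ndarray, target: np.ndarray) -> tuple[float, float]:
    """Return (IoU, Dice) for two binary masks of the same shape.

    Assumption: masks are boolean or {0, 1} arrays in which True/1 marks blood.
    """
    pred = np.asarray(pred).astype(bool)
    target = np.asarray(target).astype(bool)
    if pred.shape != target.shape:
        raise ValueError("masks must have the same shape")
    intersection = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    if union == 0:
        # Neither mask marks any blood: count this as a perfect match.
        return 1.0, 1.0
    iou = intersection / union
    dice = 2 * intersection / (pred.sum() + target.sum())
    return float(iou), float(dice)


# Usage: a prediction that over-segments by one pixel.
pred = np.array([[1, 1, 0], [0, 0, 0]])
target = np.array([[1, 0, 0], [0, 0, 0]])
print(iou_and_dice(pred, target))
```

IoU and Dice rank masks identically for a single image but differ in scale. Dice weights the overlap more generously, which is why both are often reported for thin or irregular regions such as pooling blood.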

Image Based Reconstruction of Liquids from 2D Surface Detections

Nov 22, 2021

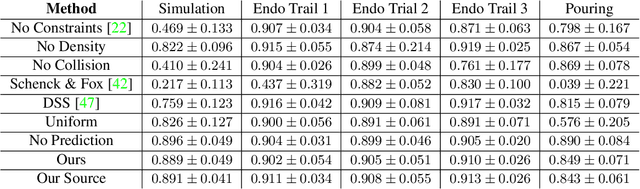

Abstract: In this work, we present a solution to the challenging problem of reconstructing liquids from image data. The challenge in reconstructing liquids, which previous reconstruction work on rigid and deforming surfaces does not face, lies in the inability to use depth sensing and color features due to the variable index of refraction, opacity, and environmental reflections. Therefore, we limit ourselves to surface detections (i.e., binary masks) of liquids as the only observations and do not assume any prior knowledge of the liquid's properties. A novel optimization problem is posed that reconstructs the liquid as particles by minimizing the error between a surface rendered from the particles and the surface detections while satisfying liquid constraints. We present solvers for this optimization problem that require no training data. We also propose a dynamic prediction to seed the reconstruction optimization from the previous time step. We test our proposed methods in simulation and on two new liquid datasets, which we open source so the broader research community can continue developing this underexplored area.
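
To make the data term of that optimization concrete, here is a heavily simplified 2D sketch. It is not the paper's renderer or solver. Particles are splatted onto a pixel grid as discs of a hypothetical `radius`, and the error is the number of pixels where the rendered mask and the binary surface detection disagree. Liquid constraints and the 3D-to-image projection are omitted, and the function names are hypothetical.

```python
import numpy as np


def render_mask(particles: np.ndarray, shape: tuple[int, int], radius: float) -> np.ndarray:
    """Splat 2D particle centers (N x 2, pixel (row, col) units) into a binary mask.

    Simplification: each particle covers the pixels within `radius` of its center.
    """
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    mask = np.zeros(shape, dtype=bool)
    for r, c in particles:
        mask |= (rows - r) ** 2 + (cols - c) ** 2 <= radius ** 2
    return mask


def surface_error(particles: np.ndarray, detection: np.ndarray, radius: float = 1.0) -> int:
    """Count pixels where the rendered particle mask and the detection mask disagree."""
    rendered = render_mask(particles, detection.shape, radius)
    return int(np.logical_xor(rendered, detection.astype(bool)).sum())


# Usage: a single detected pixel, matched by one particle and missed by another.
detection = np.zeros((5, 5), dtype=bool)
detection[2, 2] = True
print(surface_error(np.array([[2.0, 2.0]]), detection, radius=0.5))
print(surface_error(np.array([[0.0, 0.0]]), detection, radius=0.5))
```

An optimizer would move the particles to drive this count down. Because a pixel-count error is not differentiable, a practical solver would need a smoothed rendering or a derivative-free search.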

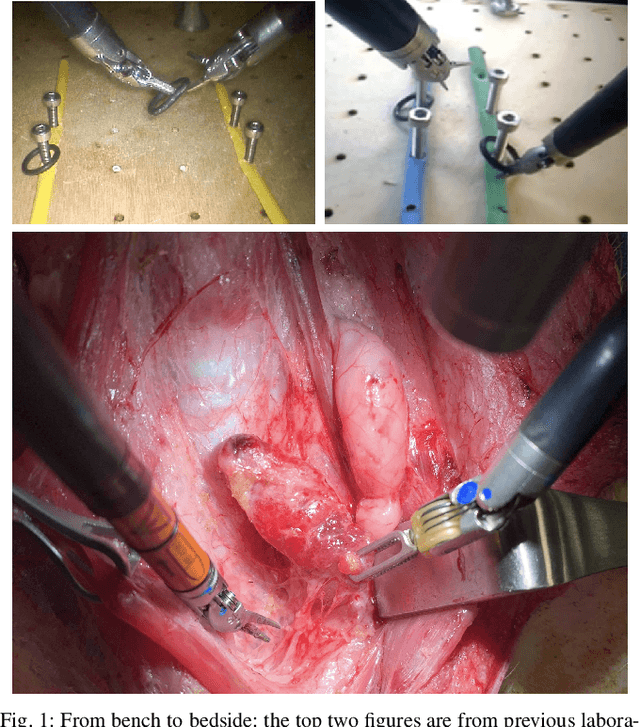

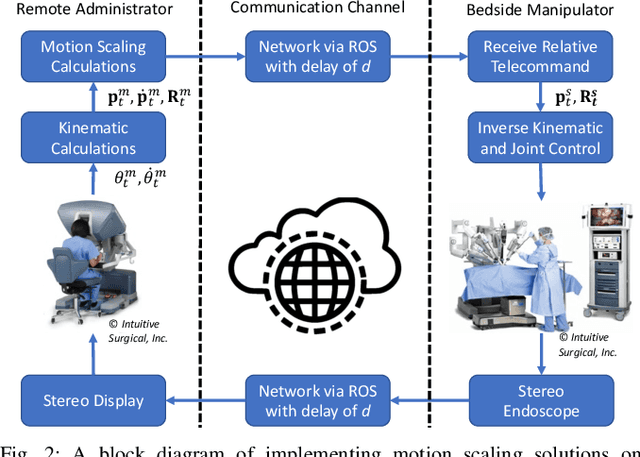

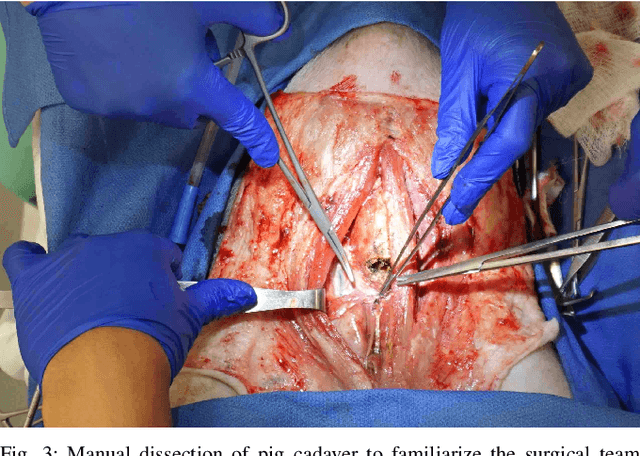

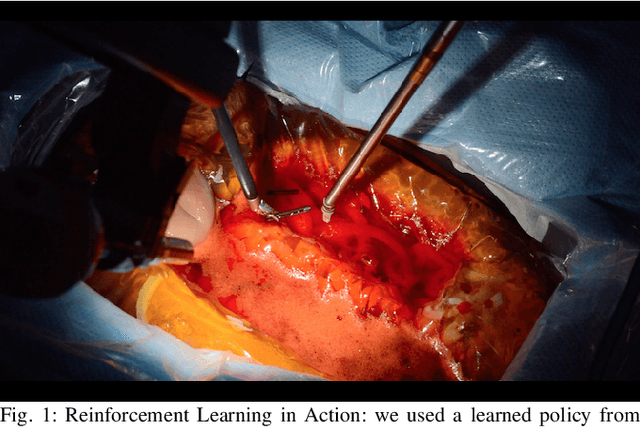

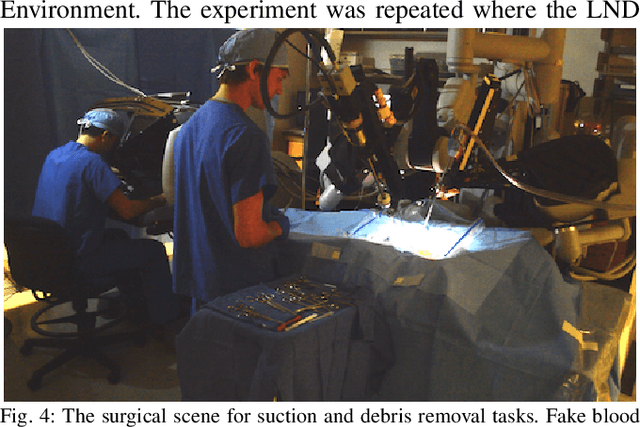

From Bench to Bedside: The First Live Robotic Surgery on the dVRK to Enable Remote Telesurgery with Motion Scaling

Sep 24, 2021

Abstract: Innovations from surgical robotics research rarely translate to live surgery due to the significant differences between the lab and a live environment. Live environments require considerations that are often overlooked during early stages of research, such as surgical staff, surgical procedure, and the challenges of working with live tissue. One such example is the da Vinci Research Kit (dVRK), which is used by over 40 robotics research groups and represents an open-sourced version of the da Vinci Surgical System. Despite the dVRK being available for nearly a decade and being the ideal candidate for translating research to practice on the over 5,000 da Vinci Systems used in hospitals around the world, not one live surgery had been conducted with it. In this paper, we address the challenges, considerations, and solutions for translating surgical robotics research from bench to bedside. This is explained from the perspective of a remote telesurgery scenario, where motion scaling solutions previously tested in a lab setting are translated to a live pig surgery. This study presents results from the first ever use of a dVRK in a live animal and discusses how the surgical robotics community can approach translating their research to practice.

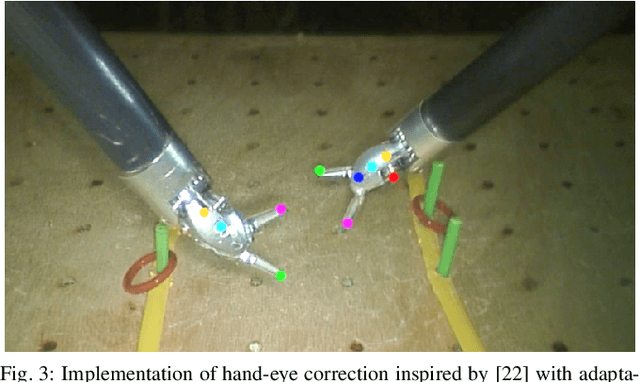

Robotic Tool Tracking under Partially Visible Kinematic Chain: A Unified Approach

Feb 11, 2021

Abstract: Any time a robot manipulator is controlled via visual feedback, the transformation between the robot and camera frames must be known. However, when cameras capture only a portion of the robot manipulator in order to better perceive the environment being interacted with, there is greater sensitivity to errors in calibration of the base-to-camera transform. A secondary source of uncertainty during robotic control is inaccuracy in joint angle measurements, which can be caused by biases in positioning and complex transmission effects such as backlash and cable stretch. In this work, we bring these two sets of unknown parameters together into a unified problem formulation for when the kinematic chain is partially visible in the camera view. We prove that these parameters are non-identifiable, implying that explicitly estimating them is infeasible. To overcome this, we derive a smaller set of parameters, which we call the Lumped Error because it lumps together the errors of calibration and joint angle measurements. A particle filter method is presented and tested in simulation and on two real-world robots to estimate the Lumped Error and show the efficiency of this parameter reduction.
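
A particle filter represents a belief over unknown parameters as a set of samples that are propagated through a motion model, reweighted by the measurement likelihood, and resampled. The following is a minimal bootstrap particle filter under strong simplifying assumptions. The Lumped Error is reduced to a single hypothetical scalar offset observed through noisy residuals (measured minus predicted position), whereas the paper's Lumped Error covers the calibration and joint-angle errors of a real kinematic chain. The noise levels and the name `estimate_offset` are illustrative, not taken from the paper.

```python
import numpy as np


def estimate_offset(residuals, n_particles=500, process_std=0.01, obs_std=0.05, seed=0):
    """Bootstrap particle filter for a hypothetical scalar offset.

    Toy model: residual_t = offset_t + Gaussian noise (std obs_std), with the
    offset allowed to drift as a random walk (std process_std per step).
    """
    rng = np.random.default_rng(seed)
    particles = rng.uniform(-1.0, 1.0, n_particles)  # broad prior over the offset
    for z in residuals:
        # Predict: propagate each hypothesis through the random-walk motion model.
        particles = particles + rng.normal(0.0, process_std, n_particles)
        # Update: weight hypotheses by the Gaussian likelihood of the residual
        # (computed in log space and shifted by the max to avoid underflow).
        log_w = -0.5 * ((z - particles) / obs_std) ** 2
        w = np.exp(log_w - log_w.max())
        w /= w.sum()
        # Resample: draw a new, equally weighted particle set in proportion to w.
        particles = particles[rng.choice(n_particles, size=n_particles, p=w)]
    return float(particles.mean())


# Usage: recover a synthetic offset of 0.3 from 50 noisy residuals.
rng = np.random.default_rng(1)
residuals = 0.3 + rng.normal(0.0, 0.05, 50)
print(estimate_offset(residuals))
```

Estimating one lumped quantity instead of separate calibration and joint biases mirrors the identifiability argument. The filter only needs to track what the camera can actually distinguish.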

Bimanual Regrasping for Suture Needles using Reinforcement Learning for Rapid Motion Planning

Nov 09, 2020

Abstract: Regrasping a suture needle is an important process in suturing, and a previous study has shown that it takes on average 7.4 s before the needle is thrown again. To bring efficiency into suturing, prior work either designs a task-specific mechanism or guides the gripper toward a specific pick-up point for proper grasping of the needle. Yet these methods are usually not deployable when the workspace changes. These prior efforts highlight the need for more efficient regrasping and for better generalizability. Therefore, in this work, we present rapid trajectory generation for bimanual needle regrasping via reinforcement learning (RL). Demonstrations from a sampling-based motion planning algorithm are incorporated to speed up learning. In addition, we propose ego-centric state and action spaces for this bimanual planning problem, where the reference frames are on the end-effectors instead of a fixed frame. Thus, the learned policy can be directly applied to any robot configuration and even to different robot arms. Our experiments in simulation show that the success rate of a single pass is 97% and the average planning time is 0.0212 s, which outperforms other widely used motion planning algorithms. In the real-world experiments, the success rate is 73.3% when the needle pose is reconstructed from an RGB image, with a planning time of 0.0846 s and a run time of 5.1454 s. If the needle pose is known beforehand, the success rate becomes 90.5%, with a planning time of 0.0807 s and a run time of 2.8801 s.
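
The ego-centric idea can be illustrated by expressing a target point, such as a needle grasp point, in an end-effector frame rather than a fixed world frame. In this minimal sketch, poses are 4x4 homogeneous transforms, and the helper names `rot_z`, `pose`, and `to_end_effector_frame` are hypothetical. The result is unchanged when the entire scene is moved by the same rigid transform, so a policy that sees only such quantities does not depend on where the robot base sits.

```python
import numpy as np


def rot_z(theta: float) -> np.ndarray:
    """3x3 rotation about the z axis by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def pose(R: np.ndarray, t) -> np.ndarray:
    """Build a 4x4 homogeneous transform from rotation R and translation t."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def to_end_effector_frame(T_world_ee: np.ndarray, p_world: np.ndarray) -> np.ndarray:
    """Express a world-frame point in the end-effector frame: R^T (p - t)."""
    R = T_world_ee[:3, :3]
    t = T_world_ee[:3, 3]
    return R.T @ (p_world - t)


# Usage: the same scene, then the whole scene moved by a rigid transform G.
T_ee = pose(rot_z(0.3), [1.0, 2.0, 3.0])
p_needle = np.array([0.5, -1.0, 2.0])
G = pose(rot_z(1.1), [-4.0, 0.5, 7.0])
local_a = to_end_effector_frame(T_ee, p_needle)
local_b = to_end_effector_frame(G @ T_ee, G[:3, :3] @ p_needle + G[:3, 3])
print(np.allclose(local_a, local_b))
```

Algebraically, moving both poses by `G` multiplies the rotation by `G`'s rotation and shifts the translation. Both changes cancel in `R.T @ (p - t)`, which is why the two local coordinates agree.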

Autonomous Robotic Suction to Clear the Surgical Field for Hemostasis using Image-based Blood Flow Detection

Oct 16, 2020

Abstract: Autonomous robotic surgery has seen significant progress over the last decade, with the aims of reducing surgeon fatigue, improving procedural consistency, and perhaps one day taking over surgery itself. However, automation has not been applied to the critical surgical task of controlling tissue and blood vessel bleeding, known as hemostasis. The task of hemostasis covers a spectrum of bleeding sources and a range of blood velocities, trajectories, and volumes. In an extreme case, an uncontrolled blood vessel fills the surgical field with flowing blood. In this work, we present the first automated solution for hemostasis through the development of a novel probabilistic blood flow detection algorithm and a trajectory generation technique that guides autonomous suction tools toward pooling blood. The blood flow detection algorithm is tested in both simulated scenes and in a real-life trauma scenario involving a hemorrhage that occurred during a thyroidectomy. The complete solution is tested in a physical lab setting with the da Vinci Research Kit (dVRK) and a simulated surgical cavity for blood to flow through. The results show that our automated solution has accurate detection, a fast reaction time, and effective removal of the flowing blood. Therefore, the proposed methods are powerful tools for clearing the surgical field, which can be followed by either a surgeon or future robotic automation developments to close the vessel rupture.

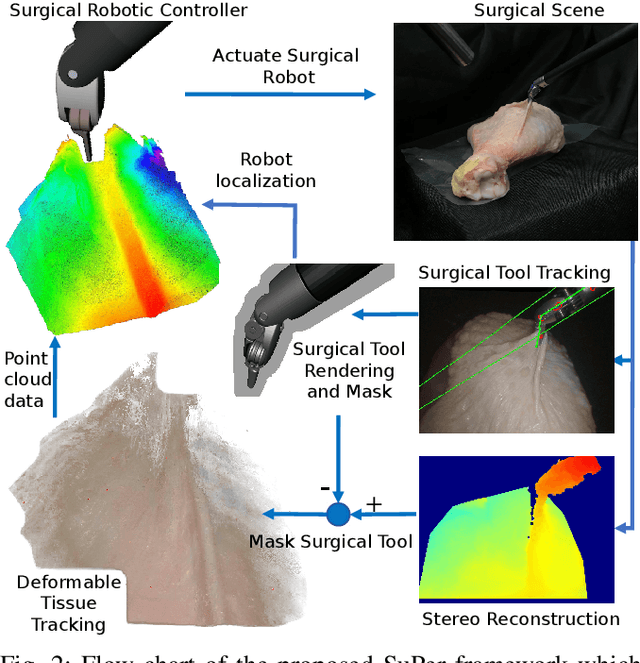

SuPer: A Surgical Perception Framework for Endoscopic Tissue Manipulation with Surgical Robotics

Sep 11, 2019

Abstract: Traditional control and task automation have been successfully demonstrated in a variety of structured, controlled environments through the use of highly specialized, modeled robotic systems in conjunction with multiple sensors. However, applying autonomy in endoscopic surgery is very challenging, particularly in soft tissue work, due to the lack of high-quality images and the unpredictable, constantly deforming environment. In this work, we propose a novel surgical perception framework, SuPer, for surgical robotic control. This framework continuously collects 3D geometric information that allows for mapping a deformable surgical field while tracking rigid instruments within the field. To achieve this, a model-based tracker localizes the surgical tool using a kinematic prior, in conjunction with a model-free tracker that reconstructs the deformable environment and provides an estimated point cloud as a map of the environment. The proposed framework was implemented in real time on the da Vinci Surgical System with an end-effector controller whose target configurations are set and regulated through the framework. Our framework successfully completed autonomous soft tissue manipulation tasks with high accuracy. The demonstration of this novel framework is promising for the future of surgical autonomy. In addition, we provide our dataset for further surgical research.

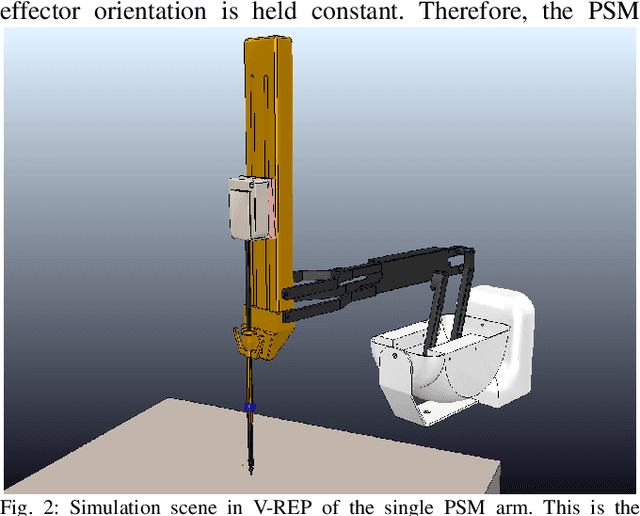

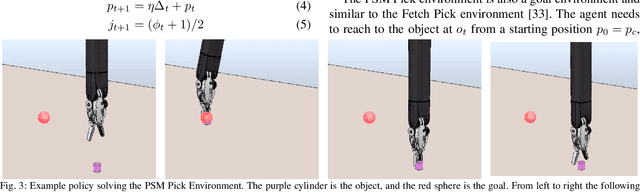

Open-Sourced Reinforcement Learning Environments for Surgical Robotics

Mar 05, 2019

Abstract: Reinforcement Learning (RL) is a machine learning framework for artificially intelligent systems to solve a variety of complex problems. Recent years have seen a surge of successes in solving challenging games and smaller domain problems, including simple though non-specific robotic manipulation and grasping tasks. Rapid successes in RL have come in part from the strong collaborative effort by the RL community to work on common, open-sourced environment simulators such as OpenAI's Gym, which allow for expedited development and valid comparisons between different state-of-the-art strategies. In this paper, we aim to bridge the RL and surgical robotics communities by presenting the first open-sourced reinforcement learning environments for surgical robotics, called dVRL. Through the proposed RL environments, which are functionally equivalent to Gym, we show that it is easy to prototype and implement state-of-the-art RL algorithms on surgical robotics problems that aim to introduce autonomous robotic precision and accuracy to assisting, collaborative, or repetitive tasks during surgery. Furthermore, learned policies are successfully transferred to a real robot. Finally, combining dVRL with the international network of over 40 da Vinci Research Kits (dVRK) in active use at academic institutions, we see dVRL as enabling the broad surgical robotics community to fully leverage the newest strategies in reinforcement learning, and enabling reinforcement learning scientists with no knowledge of surgical robotics to test and develop new algorithms that can solve real-world, high-impact challenges in autonomous surgery.
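
A Gym-equivalent interface is useful because any agent written against `reset()` and `step(action)` can drive the environment. As an illustration only, the following hypothetical `ToyReachEnv` is not dVRL. It mimics the classic Gym convention, in which `step` returns `(observation, reward, done, info)`, using a toy 3D tool-tip reaching task with a negative-distance reward.

```python
import numpy as np


class ToyReachEnv:
    """Hypothetical reach task with a classic Gym-style reset/step interface.

    Observation: tool-tip position concatenated with the goal position (6 values).
    Action: 3D displacement, clipped per axis to [-max_step, max_step].
    Reward: negative Euclidean distance from the tip to the goal.
    """

    def __init__(self, max_step: float = 0.05, tolerance: float = 0.01, seed=None):
        self.max_step = max_step
        self.tolerance = tolerance
        self.rng = np.random.default_rng(seed)
        self.tip = np.zeros(3)
        self.goal = np.zeros(3)

    def reset(self) -> np.ndarray:
        # Sample a new start and goal inside a small cube.
        self.tip = self.rng.uniform(-0.1, 0.1, 3)
        self.goal = self.rng.uniform(-0.1, 0.1, 3)
        return self._obs()

    def step(self, action):
        action = np.clip(np.asarray(action, dtype=float), -self.max_step, self.max_step)
        self.tip = self.tip + action
        dist = float(np.linalg.norm(self.tip - self.goal))
        done = dist < self.tolerance
        return self._obs(), -dist, done, {"distance": dist}

    def _obs(self) -> np.ndarray:
        return np.concatenate([self.tip, self.goal])


# Usage: a greedy policy that always steps along (goal - tip).
env = ToyReachEnv(seed=0)
obs = env.reset()
for _ in range(10):
    obs, reward, done, info = env.step(obs[3:] - obs[:3])
    if done:
        break
print(done, info["distance"])
```

A greedy policy that steps along `goal - tip` reaches the goal within a few steps, because each action is clipped to `max_step` per axis and the start and goal lie in a small cube. An RL agent would replace that hand-written policy while using the same two calls.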

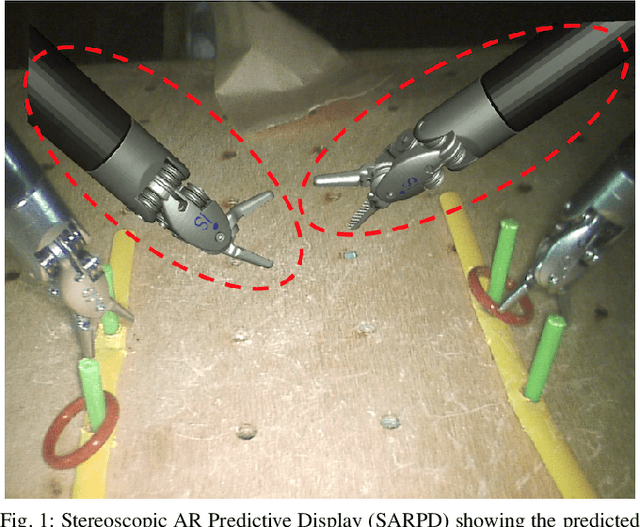

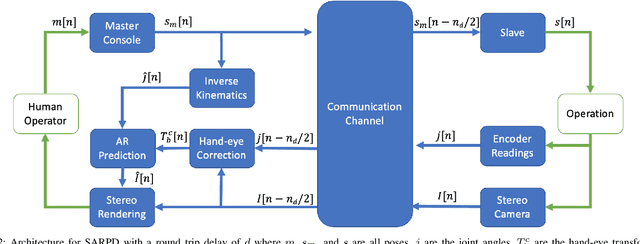

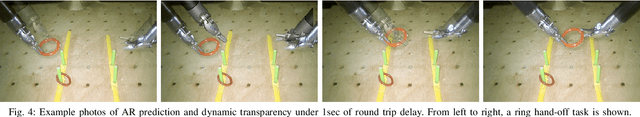

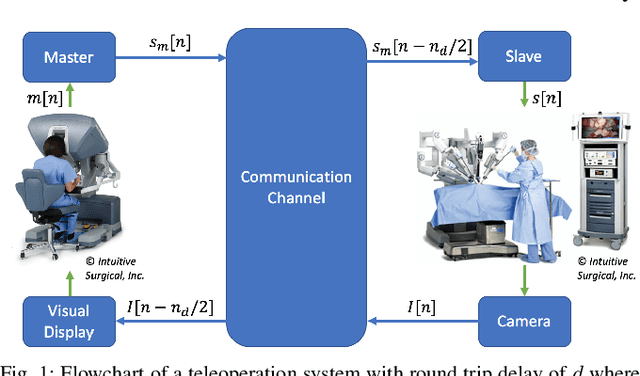

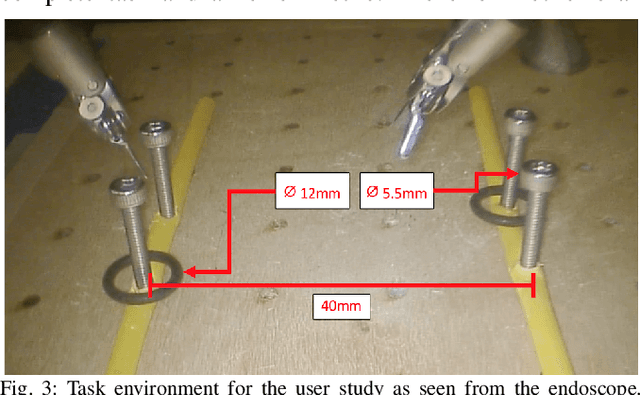

Augmented Reality Predictive Displays to Help Mitigate the Effects of Delayed Telesurgery

Feb 21, 2019

Abstract: Surgical robots offer the exciting potential for remote telesurgery, but advances are needed to make this technology efficient and accurate to ensure patient safety. Achieving these goals is hindered by the deleterious effects of latency between the remote operator and the bedside robot. Predictive displays have found success in overcoming these effects by giving the operator immediate visual feedback. However, previously developed predictive displays cannot be directly applied to telesurgery due to the unique challenges of tracking the 3D geometry of the surgical environment. In this paper, we present the first predictive display for teleoperated surgical robots. The predictive display is stereoscopic, uses Augmented Reality (AR) to show the predicted motions alongside the complex tissue found in situ within surgical environments, and overcomes the challenges of accurately tracking slave tools in real time. We call this a Stereoscopic AR Predictive Display (SARPD). To test the SARPD's performance, we conducted a user study with ten participants on the da Vinci® Surgical System. The results showed, with statistical significance, that using SARPD decreased task completion time while having no effect on error rates when operating under delay.

Motion Scaling Solutions for Improved Performance in High Delay Surgical Teleoperation

Feb 08, 2019

Abstract: Robotic teleoperation brings great potential for advances within the field of surgery. The ability of a surgeon to reach a patient remotely opens exciting opportunities. Early experience with telerobotic surgery has been interesting, but clinical feasibility remains out of reach, largely due to the deleterious effects of communication delays. Teleoperation tasks are significantly impacted by unavoidable signal latency, which directly results in slower operations, less precise movements, and more human errors. Introducing significant changes to the surgical workflow, for example semi-automation or self-correction, presents too great a technological and ethical burden for commercial surgical robotic systems to adopt. In this paper, we present three simple and intuitive motion scaling solutions to combat delay in teleoperated robotic systems and help improve operator accuracy. Motion scaling offers potentially improved user performance and fewer errors with minimal change to the underlying teleoperation architecture. To validate motion scaling as a performance enhancer in telesurgery, we conducted a user study with 17 participants, and our results show that the proposed solutions do indeed reduce the error rate when operating under high delay.
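
The abstract does not spell out the three scaling schemes. As an assumption, here is a sketch of only the simplest form, constant-gain motion scaling. Each incremental operator (master) displacement is multiplied by a factor `scale` before being applied to the robot tool, so a value below 1 turns large hand motions into smaller, finer tool motions. The function name and arguments are hypothetical.

```python
import numpy as np


def scale_motion(master_positions, scale: float, robot_start) -> np.ndarray:
    """Constant-gain motion scaling.

    master_positions: (T, 3) operator hand positions over time.
    Returns (T, 3) robot tool positions: each incremental master displacement is
    multiplied by `scale` and accumulated from `robot_start`.
    """
    master_positions = np.asarray(master_positions, dtype=float)
    robot_start = np.asarray(robot_start, dtype=float)
    deltas = np.diff(master_positions, axis=0) * scale
    return np.vstack([robot_start, robot_start + np.cumsum(deltas, axis=0)])


# Usage: halve the operator's motion.
print(scale_motion([[0, 0, 0], [1, 0, 0], [1, 2, 0]], 0.5, [10, 10, 10]))
```

Scaling increments rather than absolute positions lets the operator re-center their hands without moving the tool. It also leaves the rest of the teleoperation loop untouched, consistent with the abstract's emphasis on minimal architectural change.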
