Yuheng Zhi

KineDepth: Utilizing Robot Kinematics for Online Metric Depth Estimation

Sep 29, 2024

Abstract:Depth perception is essential for a robot's spatial and geometric understanding of its environment, with many tasks traditionally relying on hardware-based depth sensors like RGB-D or stereo cameras. However, these sensors face practical limitations, including issues with transparent and reflective objects, high costs, calibration complexity, spatial and energy constraints, and increased failure rates in compound systems. While monocular depth estimation methods offer a cost-effective and simpler alternative, their adoption in robotics is limited due to their output of relative rather than metric depth, which is crucial for robotics applications. In this paper, we propose a method that utilizes a single calibrated camera, enabling the robot to act as a "measuring stick" to convert relative depth estimates into metric depth in real-time as tasks are performed. Our approach employs an LSTM-based metric depth regressor, trained online and refined through probabilistic filtering, to accurately restore the metric depth across the monocular depth map, particularly in areas proximal to the robot's motion. Experiments with real robots demonstrate that our method significantly outperforms current state-of-the-art monocular metric depth estimation techniques, achieving a 22.1% reduction in depth error and a 52% increase in success rate for a downstream task.
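To make the "measuring stick" idea concrete, here is a minimal sketch of converting relative depth to metric depth from a few anchor points whose metric depth is known (for example, points on the robot located through its kinematics and the calibrated camera). It is not the paper's LSTM-based regressor or its probabilistic filter: it swaps in a plain closed-form least-squares fit of a scale and shift. The function names `fit_scale_shift` and `to_metric` and all sample values are hypothetical.

```python
def fit_scale_shift(relative, metric):
    """Least-squares fit of metric ~= scale * relative + shift.

    relative: relative depths sampled at anchor pixels (assumed input)
    metric:   metric depths of the same anchors, e.g. from robot kinematics
    """
    n = len(relative)
    if n < 2 or n != len(metric):
        raise ValueError("need at least two matched anchor points")
    mean_r = sum(relative) / n
    mean_m = sum(metric) / n
    var_r = sum((r - mean_r) ** 2 for r in relative)
    if var_r == 0:
        raise ValueError("anchors must span more than one relative depth")
    cov = sum((r - mean_r) * (m - mean_m) for r, m in zip(relative, metric))
    scale = cov / var_r
    shift = mean_m - scale * mean_r
    return scale, shift


def to_metric(depth_map, scale, shift):
    """Apply the fitted affine map to every pixel of a relative depth map."""
    return [[scale * d + shift for d in row] for row in depth_map]


# Hypothetical anchors: relative depth at robot pixels and their metric depth.
scale, shift = fit_scale_shift([0.1, 0.2, 0.4], [0.7, 0.9, 1.3])
metric_map = to_metric([[0.1, 0.4], [0.2, 0.3]], scale, shift)
```

A single global scale and shift is the simplest possible model; the abstract's emphasis on accuracy near the robot's motion suggests the actual regressor is more expressive than this.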

SURESTEP: An Uncertainty-Aware Trajectory Optimization Framework to Enhance Visual Tool Tracking for Robust Surgical Automation

Mar 29, 2024

Abstract:Inaccurate tool localization is one of the main reasons for failures in automating surgical tasks. Imprecise robot kinematics and noisy observations caused by the poor visual acuity of an endoscopic camera make tool tracking challenging. Previous works in surgical automation adopt environment-specific setups or hard-coded strategies instead of explicitly considering motion and observation uncertainty of tool tracking in their policies. In this work, we present SURESTEP, an uncertainty-aware trajectory optimization framework for robust surgical automation. We model the uncertainty of tool tracking with the components motivated by the sources of noise in typical surgical scenes. Using a Gaussian assumption to propagate our uncertainty models through a given tool trajectory, SURESTEP provides a general framework that minimizes the upper bound on the entropy of the final estimated tool distribution. We compare SURESTEP with a baseline method on a real-world suture needle regrasping task under challenging environmental conditions, such as poor lighting and a moving endoscopic camera. The results over 60 regrasps on the da Vinci Research Kit (dVRK) demonstrate that our optimized trajectories significantly outperform the un-optimized baseline.
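The link between a trajectory and the entropy of the final tool estimate can be illustrated with a scalar linear-Gaussian model. The sketch below is an assumption-laden toy, not SURESTEP itself: it propagates a single variance through Kalman-style predict and update steps and reports the exact Gaussian entropy, whereas the abstract describes minimizing an upper bound on that entropy. The function names and every noise value are hypothetical.

```python
import math


def propagate_variance(var0, steps):
    """Propagate a scalar Gaussian variance along a trajectory.

    steps: list of (motion_noise, obs_noise) pairs, one per waypoint.
           obs_noise may be None when the tool is not observed there.
    """
    var = var0
    for motion_noise, obs_noise in steps:
        var = var + motion_noise  # predict: motion adds uncertainty
        if obs_noise is not None:
            # update: fusing a Gaussian observation shrinks the variance
            var = var * obs_noise / (var + obs_noise)
    return var


def gaussian_entropy(var):
    """Differential entropy of a 1-D Gaussian with variance var (in nats)."""
    return 0.5 * math.log(2.0 * math.pi * math.e * var)


# Two hypothetical trajectories with the same motion noise: one passes
# through well-lit regions (low observation noise), the other does not.
well_observed = [(0.1, 0.2), (0.1, 0.2), (0.1, 0.2)]
poorly_observed = [(0.1, 2.0), (0.1, None), (0.1, 2.0)]

h_good = gaussian_entropy(propagate_variance(1.0, well_observed))
h_poor = gaussian_entropy(propagate_variance(1.0, poorly_observed))
```

Under these made-up numbers the well-observed trajectory ends with lower entropy, which is the kind of comparison a trajectory optimizer could use as its objective.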

Finding Biomechanically Safe Trajectories for Robot Manipulation of the Human Body in a Search and Rescue Scenario

Sep 26, 2023

Abstract:There has been increasing awareness of the difficulties in reaching and extracting people from mass casualty scenarios, such as those arising from natural disasters. While platforms have been designed to consider reaching casualties and even carrying them out of harm's way, the challenge of repositioning a casualty from its found configuration to one suitable for extraction has not been explicitly explored. Furthermore, this planning problem needs to incorporate biomechanical safety considerations for the casualty. Thus, we present a first solution to biomechanically safe trajectory generation for repositioning limbs of unconscious human casualties. We describe biomechanical safety as mathematical constraints, mechanical descriptions of the dynamics for the robot-human coupled system, and the planning and trajectory optimization process that considers this coupled and constrained system. We finally evaluate our approach over several variations of the problem and demonstrate it on a real robot and human subject. This work provides a crucial part of search and rescue that can be used in conjunction with past and present works involving robots and vision systems designed for search and rescue.

SemHint-MD: Learning from Noisy Semantic Labels for Self-Supervised Monocular Depth Estimation

Mar 31, 2023

Abstract:Without ground truth supervision, self-supervised depth estimation can be trapped in a local minimum due to the gradient-locality issue of the photometric loss. In this paper, we present a framework to enhance depth by leveraging semantic segmentation to guide the network to jump out of the local minimum. Prior works have proposed to share encoders between these two tasks or explicitly align them based on priors like the consistency between edges in the depth and segmentation maps. Yet, these methods usually require ground truth or high-quality pseudo labels, which may not be easily accessible in real-world applications. In contrast, we investigate self-supervised depth estimation along with a segmentation branch that is supervised with noisy labels provided by models pre-trained with limited data. We extend parameter sharing from the encoder to the decoder and study the influence of different numbers of shared decoder parameters on model performance. Also, we propose to use cross-task information to refine current depth and segmentation predictions to generate pseudo-depth and semantic labels for training. The advantages of the proposed method are demonstrated through extensive experiments on the KITTI benchmark and a downstream task for endoscopic tissue deformation tracking.

Biomedical image analysis competitions: The state of current participation practice

Dec 16, 2022

Abstract:The number of international benchmarking competitions is steadily increasing in various fields of machine learning (ML) research and practice. So far, however, little is known about the common practice as well as bottlenecks faced by the community in tackling the research questions posed. To shed light on the status quo of algorithm development in the specific field of biomedical imaging analysis, we designed an international survey that was issued to all participants of challenges conducted in conjunction with the IEEE ISBI 2021 and MICCAI 2021 conferences (80 competitions in total). The survey covered participants' expertise and working environments, their chosen strategies, as well as algorithm characteristics. A median of 72% of challenge participants took part in the survey. According to our results, knowledge exchange was the primary incentive (70%) for participation, while the reception of prize money played only a minor role (16%). While a median of 80 working hours was spent on method development, a large portion of participants stated that they did not have enough time for method development (32%). 25% perceived the infrastructure to be a bottleneck. Overall, 94% of all solutions were deep learning-based. Of these, 84% were based on standard architectures. 43% of the respondents reported that the data samples (e.g., images) were too large to be processed at once. This was most commonly addressed by patch-based training (69%), downsampling (37%), and solving 3D analysis tasks as a series of 2D tasks. K-fold cross-validation on the training set was performed by only 37% of the participants and only 50% of the participants performed ensembling based on multiple identical models (61%) or heterogeneous models (39%). 48% of the respondents applied postprocessing steps.

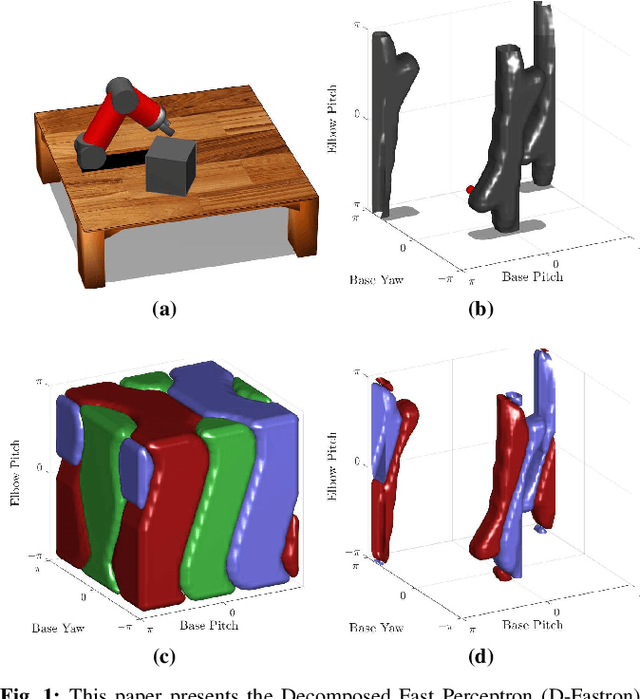

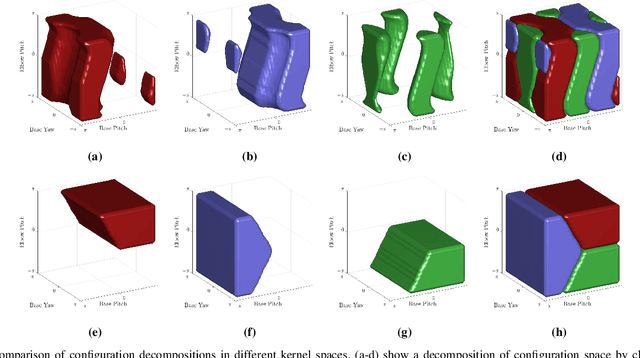

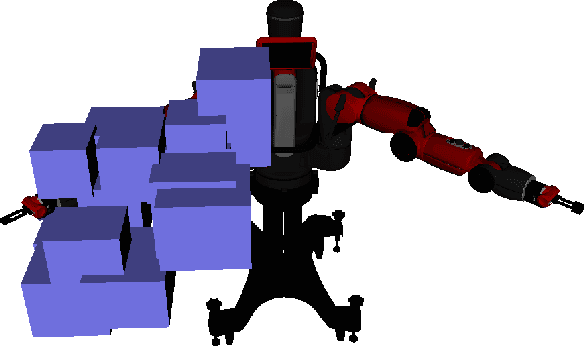

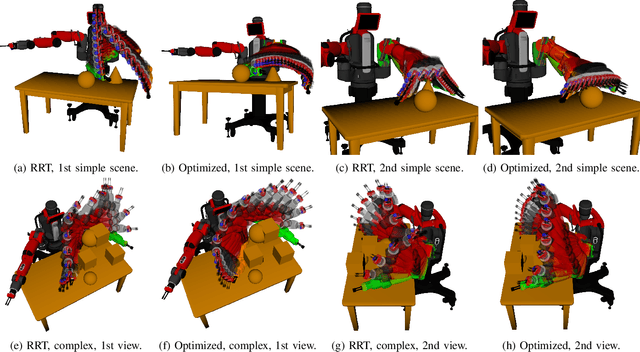

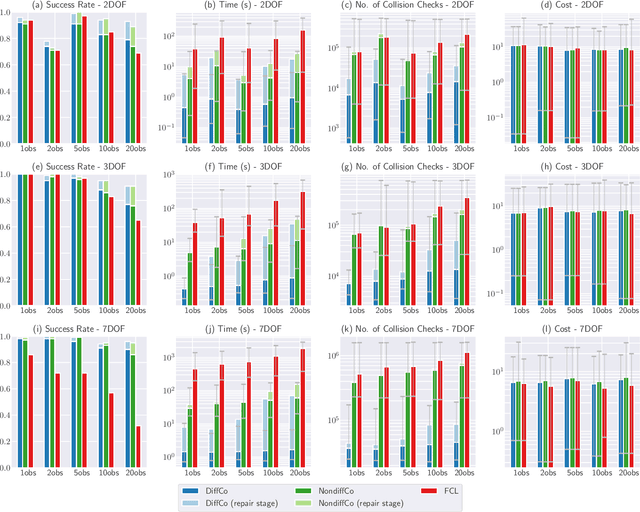

Configuration Space Decomposition for Scalable Proxy Collision Checking in Robot Planning and Control

Jan 26, 2022

Abstract:Real-time robot motion planning in complex high-dimensional environments remains an open problem. Motion planning algorithms, and their underlying collision checkers, are crucial to any robot control stack. Collision checking takes up a large portion of the computational time in robot motion planning. Existing collision checkers make trade-offs between speed and accuracy and scale poorly to high-dimensional, complex environments. We present a novel space decomposition method using K-Means clustering in the Forward Kinematics space to accelerate proxy collision checking. We train individual configuration space models using Fastron, a kernel perceptron algorithm, on these decomposed subspaces, yielding compact yet highly accurate models that can be queried rapidly and scale better to more complex environments. We demonstrate this new method, called Decomposed Fast Perceptron (D-Fastron), on the 7-DOF Baxter robot producing on average 29x faster collision checks and up to 9.8x faster motion planning compared to state-of-the-art geometric collision checkers.
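The decomposition step can be sketched as clustering followed by routing each query to its cluster's model. The toy below only illustrates that structure, under several assumptions: it runs a plain Lloyd's K-Means on hand-picked 2-D points rather than on forward-kinematics features of a 7-DOF arm, and it omits the per-cluster Fastron models entirely. The names `nearest` and `kmeans` and the sample points are hypothetical.

```python
def nearest(point, centroids):
    """Index of the centroid closest to point (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda i: sum((p - c) ** 2 for p, c in zip(point, centroids[i])),
    )


def kmeans(points, initial_centroids, iterations=10):
    """Plain Lloyd's algorithm with caller-supplied initial centroids."""
    centroids = [list(c) for c in initial_centroids]
    for _ in range(iterations):
        groups = [[] for _ in centroids]
        for p in points:
            groups[nearest(p, centroids)].append(p)
        for i, group in enumerate(groups):
            if group:  # keep the old centroid if a cluster is empty
                centroids[i] = [sum(col) / len(group) for col in zip(*group)]
    return centroids


# Hypothetical stand-ins for forward-kinematics features of sampled poses.
samples = [(0.0, 0.0), (0.0, 1.0), (10.0, 10.0), (10.0, 11.0)]
centroids = kmeans(samples, [(0.0, 0.0), (10.0, 10.0)])

# At query time, a pose would be routed to the model trained on its cluster.
cluster_id = nearest((9.0, 9.0), centroids)
```

Routing keeps each sub-model small, which is consistent with the abstract's claim of compact models that can be queried rapidly.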

Data-driven Actuator Selection for Artificial Muscle-Powered Robots

Apr 15, 2021

Abstract:Even though artificial muscles have gained popularity due to their compliant, flexible, and compact properties, there currently does not exist an easy way of making informed decisions on the appropriate actuation strategy when designing a muscle-powered robot, thus limiting the transition of such technologies into broader applications. What's more, when a new muscle actuation technology is developed, it is difficult to compare it against existing robot muscles. To accelerate the development of artificial muscle applications, we propose a data-driven approach for robot muscle actuator selection using Support Vector Machines (SVM). This first-of-its-kind method gives users insight into which actuators fit their specific needs and actuation performance criteria, making it possible for researchers and engineers with little to no prior knowledge of artificial muscles to focus on application design. It also provides a platform to benchmark existing, new, or yet-to-be-discovered artificial muscle technologies. We test our method on unseen existing robot muscle designs to prove its usability on real-world applications. We provide an open-access, web-searchable interface for easy access to our models that will additionally allow for continuous contribution of new actuator data from groups around the world to enhance and expand these models.

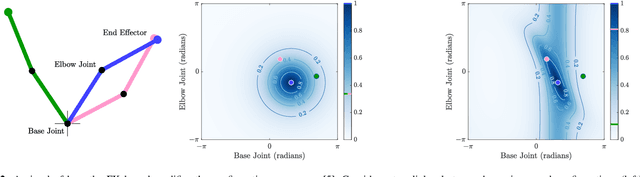

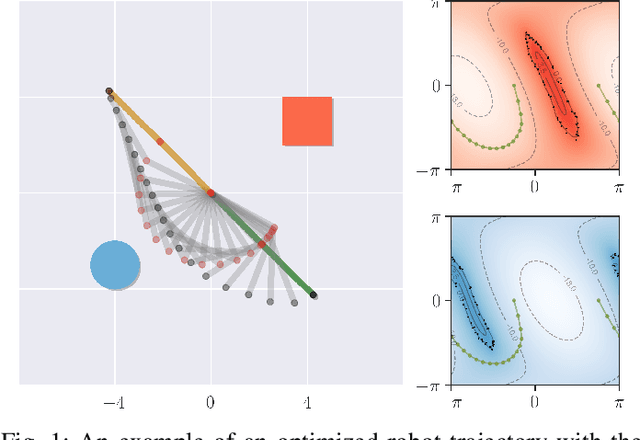

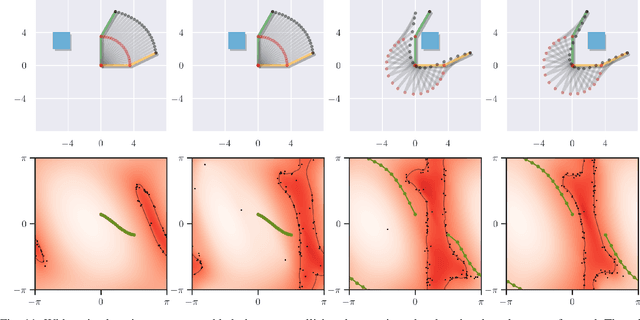

DiffCo: Auto-Differentiable Proxy Collision Detection with Multi-class Labels for Safety-Aware Trajectory Optimization

Feb 15, 2021

Abstract:The objective of trajectory optimization algorithms is to achieve an optimal collision-free path between a start and goal state. In real-world scenarios where environments can be complex and non-homogeneous, a robot needs to be able to gauge whether a state will be in collision with various objects in order to meet some safety metrics. The collision detector should be computationally efficient and, ideally, analytically differentiable to facilitate stable and rapid gradient descent during optimization. However, methods today lack an elegant approach to detect collision differentiably, relying rather on numerical gradients that can be unstable. We present DiffCo, the first, fully auto-differentiable, non-parametric model for collision detection. Its non-parametric behavior allows one to compute collision boundaries on-the-fly and update them, requiring no pre-training and allowing it to update continuously in dynamic environments. It provides robust gradients for trajectory optimization via backpropagation and is often 10-100x faster to compute than its geometric counterparts. DiffCo also extends trivially to modeling different object collision classes for semantically informed trajectory optimization.
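Why a differentiable collision score helps trajectory optimization can be shown with a small kernel model. The sketch below is a simplified illustration, not DiffCo's implementation: it uses a Gaussian (RBF) kernel over raw 2-D states with a hand-derived gradient, where the abstract describes gradients obtained by backpropagation. The support points, weights, `gamma`, and function names are all hypothetical.

```python
import math


def rbf(x, s, gamma):
    """Gaussian kernel exp(-gamma * ||x - s||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, s)))


def score_and_grad(x, support, weights, gamma=1.0):
    """Collision score f(x) = sum_i w_i * k(x, s_i) and its gradient in x.

    Positive scores are read as "in collision" in this toy convention.
    """
    score = 0.0
    grad = [0.0] * len(x)
    for s, w in zip(support, weights):
        k = rbf(x, s, gamma)
        score += w * k
        for d in range(len(x)):
            # d/dx_d exp(-gamma * ||x - s||^2) = -2 * gamma * (x_d - s_d) * k
            grad[d] += w * k * (-2.0 * gamma) * (x[d] - s[d])
    return score, grad


def descend(x, support, weights, step=0.2, iterations=20):
    """Gradient descent on the score, moving the state away from collision."""
    x = list(x)
    for _ in range(iterations):
        _, grad = score_and_grad(x, support, weights)
        x = [xi - step * gi for xi, gi in zip(x, grad)]
    return x


# One hypothetical in-collision support point at the origin.
support, weights = [(0.0, 0.0)], [1.0]
start = [0.5, 0.0]
end = descend(start, support, weights)
```

Because the gradient is exact rather than a finite-difference estimate, each descent step points reliably away from the obstacle, which is the stability benefit the abstract attributes to differentiable detection.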

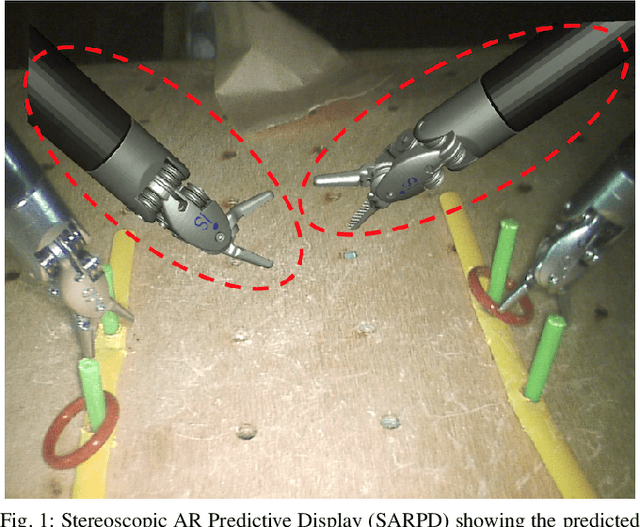

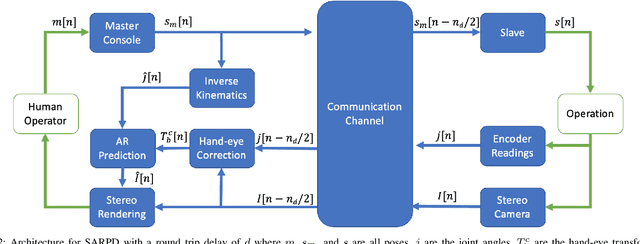

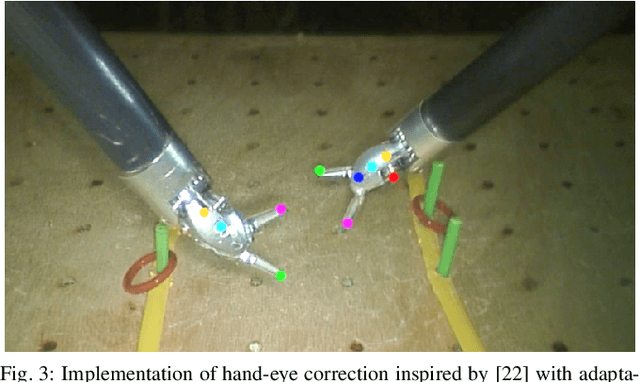

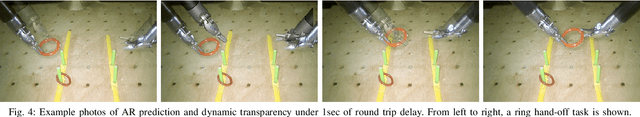

Augmented Reality Predictive Displays to Help Mitigate the Effects of Delayed Telesurgery

Feb 21, 2019

Abstract:Surgical robots offer the exciting potential for remote telesurgery, but advances are needed to make this technology efficient and accurate to ensure patient safety. Achieving these goals is hindered by the deleterious effects of latency between the remote operator and the bedside robot. Predictive displays have found success in overcoming these effects by giving the operator immediate visual feedback. However, previously developed predictive displays cannot be directly applied to telesurgery due to the unique challenges in tracking the 3D geometry of the surgical environment. In this paper, we present the first predictive display for teleoperated surgical robots. The predictive display is stereoscopic, utilizes Augmented Reality (AR) to show the predicted motions alongside the complex tissue found in-situ within surgical environments, and overcomes the challenges in accurately tracking slave-tools in real time. We call this a Stereoscopic AR Predictive Display (SARPD). To test the SARPD's performance, we conducted a user study with ten participants on the da Vinci® Surgical System. The results showed with statistical significance that using SARPD decreased task completion time while having no effect on error rates when operating under delay.
