Michael Yip

Dexterous Control of an 11-DOF Redundant Robot for CT-Guided Needle Insertion With Task-Oriented Weighted Policies

Mar 18, 2025

Abstract:Computed tomography (CT)-guided needle biopsies are critical for diagnosing a range of conditions, including lung cancer, but present challenges such as limited in-bore space, prolonged procedure times, and radiation exposure. Robotic assistance offers a promising solution by improving needle trajectory accuracy, reducing radiation exposure, and enabling real-time adjustments. In our previous work, we introduced a redundant robotic platform designed for dexterous needle insertion within the confined CT bore. However, its limited base mobility restricts flexible deployment in clinical settings. In this study, we present an improved 11-degree-of-freedom (DOF) robotic system that integrates a 6-DOF robotic base with a 5-DOF cable-driven end-effector, significantly enhancing workspace flexibility and precision. With the hyper-redundant degrees of freedom, we introduce a weighted inverse kinematics controller with a two-stage priority scheme for large-scale movement and fine in-bore adjustments, along with a null-space control strategy to optimize dexterity. We validate our system through both simulation and real-world experiments, demonstrating superior tracking accuracy and enhanced manipulability in CT-guided procedures. The study provides a strong case for hyper-redundancy and null-space control formulations for robot-assisted needle biopsy scenarios.
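As a rough illustration of the weighted inverse kinematics and null-space ideas mentioned in this abstract, the sketch below shows one velocity-level step using a weighted, damped pseudoinverse plus a null-space projection. It is not the authors' controller: the function name `weighted_ik_step`, the toy Jacobian, the weight values, and the damping constant are all assumptions chosen for illustration.

```python
import numpy as np

def weighted_ik_step(J, dx, W, dq_null=None, damping=1e-6):
    """Return joint increments dq that realize the task increment dx.

    J        : (m, n) task Jacobian
    dx       : (m,) desired task-space increment
    W        : (n, n) positive-definite joint weight matrix (larger = move less)
    dq_null  : (n,) secondary joint motion, projected into the null space
    damping  : small regularizer for numerical robustness (assumed value)
    """
    n = J.shape[1]
    if dq_null is None:
        dq_null = np.zeros(n)
    W_inv = np.linalg.inv(W)
    # Weighted, damped right pseudoinverse: W^-1 J^T (J W^-1 J^T + lambda I)^-1
    A = J @ W_inv @ J.T + damping * np.eye(J.shape[0])
    J_pinv = W_inv @ J.T @ np.linalg.inv(A)
    # Null-space projector: joint motion that does not change the task to first order
    N = np.eye(n) - J_pinv @ J
    return J_pinv @ dx + N @ dq_null

# Toy 2-D task with 4 joints (hypothetical Jacobian, not the 11-DOF robot).
J = np.array([[1.0, 0.0, 1.0, 0.0],
              [0.0, 1.0, 0.0, 1.0]])
dx = np.array([1.0, 1.0])

# Heavily penalize joints 2 and 3 so that joints 0 and 1 carry most of the motion.
W_coarse = np.diag([1.0, 1.0, 100.0, 100.0])
dq = weighted_ik_step(J, dx, W_coarse)
print(dq)
```

Swapping the large and small weights would shift the motion to the other joint group, which is one plausible way to read a two-stage priority scheme: one weight set for large-scale base motion and another for fine end-effector adjustment.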

Humanoids in Hospitals: A Technical Study of Humanoid Surrogates for Dexterous Medical Interventions

Mar 17, 2025

Abstract:The increasing demand for healthcare workers, driven by aging populations and labor shortages, presents a significant challenge for hospitals. Humanoid robots have the potential to alleviate these pressures by leveraging their human-like dexterity and adaptability to assist in medical procedures. This work conducted an exploratory study on the feasibility of humanoid robots performing direct clinical tasks through teleoperation. A bimanual teleoperation system was developed for the Unitree G1 Humanoid Robot, integrating high-fidelity pose tracking, custom grasping configurations, and an impedance controller to safely and precisely manipulate medical tools. The system is evaluated in seven diverse medical procedures, including physical examinations, emergency interventions, and precision needle tasks. Our results demonstrate that humanoid robots can successfully replicate critical aspects of human medical assessments and interventions, with promising quantitative performance in ventilation and ultrasound-guided tasks. However, challenges remain, including limitations in force output for procedures requiring high strength and sensor sensitivity issues affecting clinical accuracy. This study highlights the potential and current limitations of humanoid robots in hospital settings and lays the groundwork for future research on robotic healthcare integration.

KineDepth: Utilizing Robot Kinematics for Online Metric Depth Estimation

Sep 29, 2024

Abstract:Depth perception is essential for a robot's spatial and geometric understanding of its environment, with many tasks traditionally relying on hardware-based depth sensors like RGB-D or stereo cameras. However, these sensors face practical limitations, including issues with transparent and reflective objects, high costs, calibration complexity, spatial and energy constraints, and increased failure rates in compound systems. While monocular depth estimation methods offer a cost-effective and simpler alternative, their adoption in robotics is limited because they output relative rather than metric depth, and metric depth is crucial for robotics applications. In this paper, we propose a method that utilizes a single calibrated camera, enabling the robot to act as a "measuring stick" to convert relative depth estimates into metric depth in real time as tasks are performed. Our approach employs an LSTM-based metric depth regressor, trained online and refined through probabilistic filtering, to accurately restore the metric depth across the monocular depth map, particularly in areas proximal to the robot's motion. Experiments with real robots demonstrate that our method significantly outperforms current state-of-the-art monocular metric depth estimation techniques, achieving a 22.1% reduction in depth error and a 52% increase in success rate for a downstream task.
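To make the "measuring stick" idea concrete, the sketch below fits a single global scale and shift that maps relative depth to metric depth from a few pixels where metric depth is known. This is a deliberately simpler stand-in for the LSTM-based regressor and probabilistic filtering named in the abstract, not a reproduction of it. The function name `fit_scale_shift` and the sample values are assumptions; in practice the known metric depths would come from the robot's kinematics and the calibrated camera.

```python
import numpy as np

def fit_scale_shift(rel_depth, metric_depth):
    """Least-squares fit of metric ~= scale * rel + shift over sparse samples."""
    rel_depth = np.asarray(rel_depth, dtype=float)
    metric_depth = np.asarray(metric_depth, dtype=float)
    # Design matrix with a column of ones for the shift term
    A = np.stack([rel_depth, np.ones_like(rel_depth)], axis=1)
    (scale, shift), *_ = np.linalg.lstsq(A, metric_depth, rcond=None)
    return scale, shift

# Hypothetical samples: relative depth at pixels on the robot, paired with
# metric depth (in meters) assumed to be computed from forward kinematics.
rel = np.array([0.10, 0.40, 0.70, 0.90])
metric = 2.0 * rel + 0.3  # synthetic ground truth for the example

scale, shift = fit_scale_shift(rel, metric)

# Apply the fitted mapping to a (tiny) relative depth map.
rel_map = np.array([[0.2, 0.5], [0.6, 0.8]])
metric_map = scale * rel_map + shift
print(scale, shift)
```

A single affine map cannot capture spatially varying errors in the relative depth, which is one reason a learned, online-updated regressor might be preferred.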

MEDiC: Autonomous Surgical Robotic Assistance to Maximizing Exposure for Dissection and Cautery

Sep 22, 2024

Abstract:Surgical automation has the capability to improve the consistency of patient outcomes and broaden access to advanced surgical care in underprivileged communities. Shared autonomy, where the robot automates routine subtasks while the surgeon retains partial teleoperative control, offers great potential to make an impact. In this paper we focus on one important skill within surgical shared autonomy: automating robotic assistance to maximize visual exposure and apply tissue tension for dissection and cautery. Ensuring consistent exposure to visualize the surgical site is crucial for both efficiency and patient safety. However, achieving this is highly challenging due to the complexities of manipulating deformable volumetric tissues that are prevalent in surgery. To address these challenges we propose MEDiC, a framework for autonomous surgical robotic assistance in Maximizing Exposure for Dissection and Cautery. We integrate a differentiable physics model with perceptual feedback to achieve our two key objectives: 1) Maximizing tissue exposure and applying tension for a specified dissection site through visual-servoing control and 2) Selecting optimal control positions for a dissection target based on deformable Jacobian analysis. We quantitatively assess our method through repeated real robot experiments on a tissue phantom, and showcase its capabilities through dissection experiments using shared autonomy on real animal tissue.

AutoPeel: Adhesion-aware Safe Peeling Trajectory Optimization for Robotic Wound Care

Sep 22, 2024

Abstract:Chronic wounds, including diabetic ulcers, pressure ulcers, and ulcers secondary to venous hypertension, affect more than 6.5 million patients and incur a yearly cost of more than $25 billion in the United States alone. Chronic wound treatment is currently a manual process, and we envision a future where robotics and automation will aid in this treatment to reduce cost and improve patient care. In this work, we present the development of the first robotic system for wound dressing removal, which is reported to be the worst aspect of living with chronic wounds. Our method leverages differentiable physics-based simulation to perform gradient-based Model Predictive Control (MPC) for optimized trajectory planning. By integrating fracture mechanics of adhesion, we are able to model the peeling effect inherent to dressing adhesion. The system is further guided by carefully designed objective functions that promote both efficient and safe control, reducing the risk of tissue damage. We validated the efficacy of our approach through a series of experiments conducted on both synthetic skin phantoms and real human subjects. Our results demonstrate the system's ability to achieve precise and safe dressing removal trajectories, offering a promising solution for automating this essential healthcare procedure.

Tracking and Mapping in Medical Computer Vision: A Review

Oct 17, 2023

Abstract:As computer vision algorithms are becoming more capable, their applications in clinical systems will become more pervasive. These applications include diagnostics such as colonoscopy and bronchoscopy, guiding biopsies and minimally invasive interventions and surgery, automating instrument motion and providing image guidance using pre-operative scans. Many of these applications depend on the specific visual nature of medical scenes and require designing and applying algorithms to perform in this environment. In this review, we provide an update to the field of camera-based tracking and scene mapping in surgery and diagnostics in medical computer vision. We begin with describing our review process, which results in a final list of 515 papers that we cover. We then give a high-level summary of the state of the art and provide relevant background for those who need tracking and mapping for their clinical applications. We then review datasets provided in the field and the clinical needs therein. Then, we delve in depth into the algorithmic side, and summarize recent developments, which should be especially useful for algorithm designers and to those looking to understand the capability of off-the-shelf methods. We focus on algorithms for deformable environments while also reviewing the essential building blocks in rigid tracking and mapping since there is a large amount of crossover in methods. Finally, we discuss the current state of the tracking and mapping methods along with needs for future algorithms, needs for quantification, and the viability of clinical applications in the field. We conclude that new methods need to be designed or combined to support clinical applications in deformable environments, and more focus needs to be put into collecting datasets for training and evaluation.

BASED: Bundle-Adjusting Surgical Endoscopic Dynamic Video Reconstruction using Neural Radiance Fields

Sep 27, 2023

Abstract:Reconstruction of deformable scenes from endoscopic videos is important for many applications such as intraoperative navigation, surgical visual perception, and robotic surgery. It is a foundational requirement for realizing autonomous robotic interventions for minimally invasive surgery. However, previous approaches in this domain have been limited by their modular nature and are confined to specific camera and scene settings. Our work adopts the Neural Radiance Fields (NeRF) approach to learning 3D implicit representations of scenes that are both dynamic and deformable over time, and furthermore with unknown camera poses. We demonstrate this approach on endoscopic surgical scenes from robotic surgery. This work removes the constraints of known camera poses and overcomes the drawbacks of the state-of-the-art unstructured dynamic scene reconstruction technique, which relies on the static part of the scene for accurate reconstruction. Through several experimental datasets, we demonstrate the versatility of our proposed model to adapt to diverse camera and scene settings, and show its promise for both current and future robotic surgical systems.

Real-to-Sim Deformable Object Manipulation: Optimizing Physics Models with Residual Mappings for Robotic Surgery

Sep 20, 2023

Abstract:Accurate deformable object manipulation (DOM) is essential for achieving autonomy in robotic surgery, where soft tissues are being displaced, stretched, and dissected. Many DOM methods can be powered by simulation, which ensures realistic deformation by adhering to the governing physical constraints and allowing for model prediction and control. However, real soft objects in robotic surgery, such as membranes and soft tissues, have complex, anisotropic physical parameters that a simulation with simple initialization from cameras may not fully capture. To use the simulation techniques in real surgical tasks, the "real-to-sim" gap needs to be properly compensated. In this work, we propose an online, adaptive parameter tuning approach for simulation optimization that (1) bridges the real-to-sim gap between a physics simulation and observations obtained from 3D perception by estimating a residual mapping and (2) optimizes its stiffness parameters online. Our method ensures a small residual gap between the simulation and observation and improves the simulation's predictive capabilities. The effectiveness of the proposed mechanism is evaluated in the manipulation of both a thin-shell and volumetric tissue, representative of most tissue scenarios. This work contributes to the advancement of simulation-based deformable tissue manipulation and holds potential for improving surgical autonomy.

Achieving Autonomous Cloth Manipulation with Optimal Control via Differentiable Physics-Aware Regularization and Safety Constraints

Sep 20, 2023

Abstract:Cloth manipulation is a category of deformable object manipulation of great interest to the robotics community, with applications ranging from automated laundry-folding, home organizing, and cleaning to textiles and flexible manufacturing. Despite the desire for automated cloth manipulation, the thin-shell dynamics and under-actuated nature of cloth present significant challenges for robots to effectively interact with it. Many recent works omit explicit modeling in favor of learning-based methods that may yield control policies directly. However, these methods require large training sets that must be collected and curated. In this regard, we create a framework for differentiable modeling of cloth dynamics leveraging an Extended Position-based Dynamics (XPBD) algorithm. Together with the desired control objective, physics-aware regularization terms are designed for better results, including trajectory smoothness and elastic potential energy. In addition, safety constraints, such as avoiding obstacles, can be specified using signed distance functions (SDFs). We formulate the cloth manipulation task with safety constraints as a constrained optimization problem, which can be effectively solved by mainstream gradient-based optimizers thanks to the end-to-end differentiability of our framework. Finally, we assess the proposed framework for manipulation tasks with various safety thresholds and demonstrate the feasibility of the resulting trajectories on a surgical robot. The effects of the regularization terms are analyzed in an additional ablation study.
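The abstract mentions specifying safety constraints with signed distance functions. As a minimal sketch of that idea only, the code below defines the SDF of a spherical obstacle and a quadratic penalty that becomes active when a point comes closer than a safety threshold. The sphere obstacle, the penalty form, and the names `sphere_sdf` and `safety_penalty` are illustrative assumptions, not the paper's formulation, which treats the constraints inside a constrained optimization problem.

```python
import math

def sphere_sdf(point, center, radius):
    """Signed distance from point to a sphere: negative inside, positive outside."""
    return math.dist(point, center) - radius

def safety_penalty(point, center, radius, threshold):
    """Quadratic penalty that is zero whenever sdf(point) >= threshold."""
    violation = threshold - sphere_sdf(point, center, radius)
    return max(0.0, violation) ** 2

# Hypothetical obstacle and safety margin (units are arbitrary here).
center, radius, threshold = (0.0, 0.0, 0.0), 1.0, 0.5

# A cloth particle far from the obstacle incurs no penalty.
print(safety_penalty((2.0, 0.0, 0.0), center, radius, threshold))

# A particle inside the safety margin incurs a positive penalty.
print(safety_penalty((1.2, 0.0, 0.0), center, radius, threshold))
```

Summing such a penalty over all cloth particles and time steps gives a differentiable term almost everywhere, which is one simple way a gradient-based optimizer could be steered away from obstacles.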

BAA-NGP: Bundle-Adjusting Accelerated Neural Graphics Primitives

Jun 09, 2023

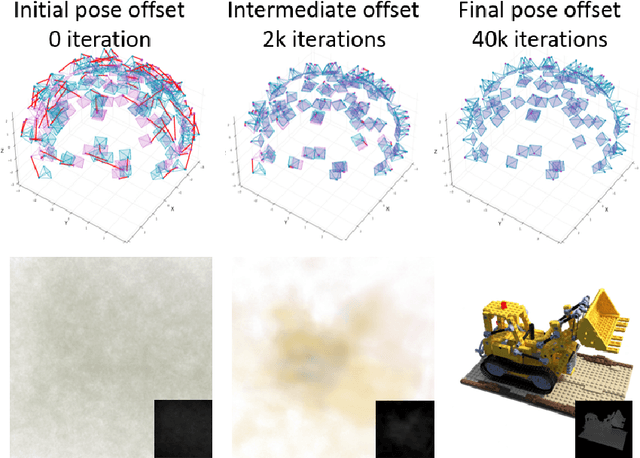

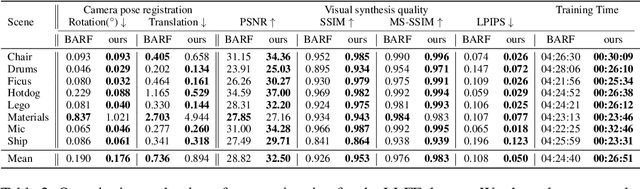

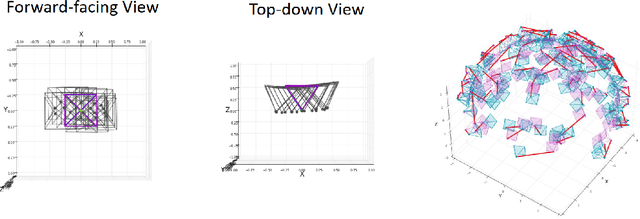

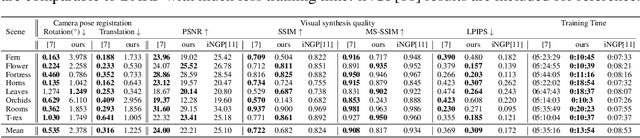

Abstract:Implicit neural representation has emerged as a powerful method for reconstructing 3D scenes from 2D images. Given a set of camera poses and associated images, the models can be trained to synthesize novel, unseen views. In order to expand the use cases for implicit neural representations, we need to incorporate camera pose estimation capabilities as part of the representation learning, as this is necessary for reconstructing scenes from real-world video sequences where cameras are generally not being tracked. Existing approaches like COLMAP and, most recently, bundle-adjusting neural radiance field methods often suffer from lengthy processing times. These delays, ranging from hours to days, arise from laborious feature matching, hardware limitations, dense point sampling, and long training times required by a multi-layer perceptron structure with a large number of parameters. To address these challenges, we propose a framework called bundle-adjusting accelerated neural graphics primitives (BAA-NGP). Our approach leverages accelerated sampling and hash encoding to expedite both pose refinement/estimation and 3D scene reconstruction. Experimental results demonstrate that our method achieves a 10 to 20$\times$ speedup in novel view synthesis compared to other bundle-adjusting neural radiance field methods without sacrificing the quality of pose estimation.