Markerless Suture Needle 6D Pose Tracking with Robust Uncertainty Estimation for Autonomous Minimally Invasive Robotic Surgery


Sep 26, 2021

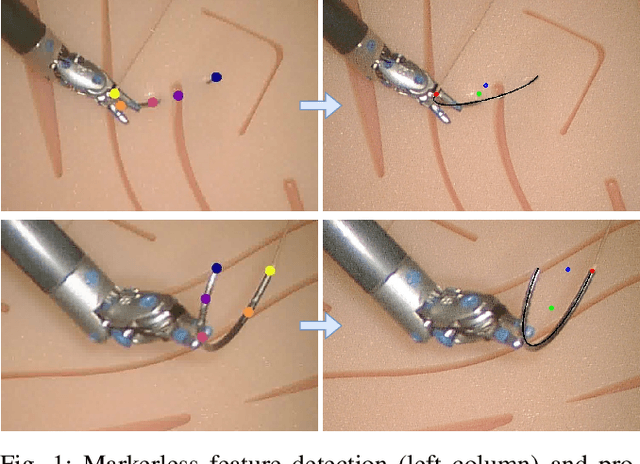

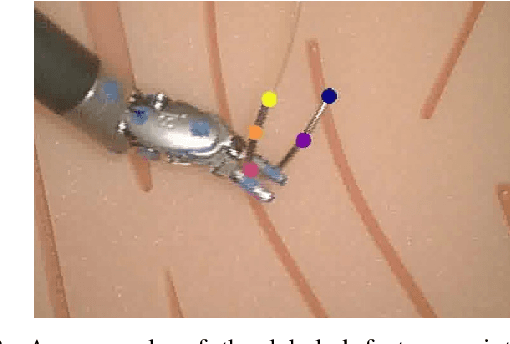

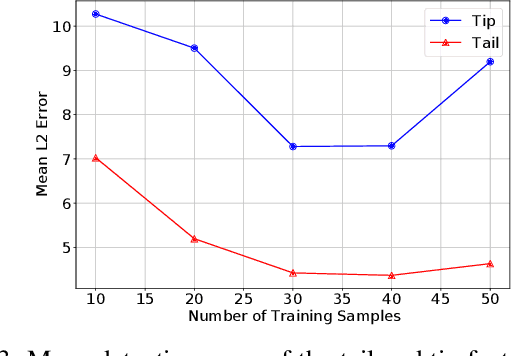

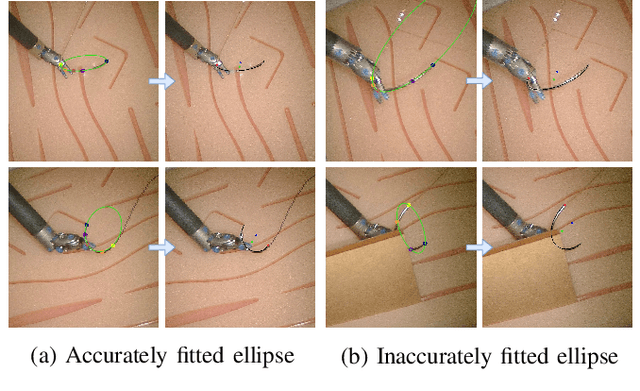

Suture needle localization is crucial for autonomous suturing. To track the 6D pose of a suture needle robustly, previous approaches usually add markers to the needle or perform complex feature-extraction operations, which makes them difficult to apply in real-world environments. In this work, we therefore present a novel approach for markerless suture needle pose tracking using Bayesian filters. A data-efficient feature point detector is trained to extract feature points on the needle. Based on these detections, we propose a novel observation model that measures the overlap between the detections and the expected projection of the needle and that can be calculated efficiently. In addition, we derive an approximation of the covariance of the observation noise for the proposed method, making the model more robust to uncertainty in the detections. Experimental results in simulation show that the proposed observation model achieves low tracking errors of approximately 1.5 mm in position and 1 degree in orientation. We also demonstrate qualitative results of our trained markerless feature detector combined with the proposed observation model in real-world environments. The results show high consistency between the projection of the tracked pose and that of the real pose.
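To make the idea of an overlap-based observation concrete, the following is a minimal sketch and not the paper's actual model. It assumes a semicircular needle, a pinhole camera, and a Gaussian nearest-neighbour score; the function names (`needle_points`, `project`, `overlap_score`), the needle radius, the intrinsics `K`, and the pixel scale `sigma` are all hypothetical choices made for illustration.

```python
import numpy as np


def needle_points(radius=0.01, n=20):
    """Sample n points on a semicircular arc in the needle frame (assumed geometry)."""
    theta = np.linspace(0.0, np.pi, n)
    return np.stack(
        [radius * np.cos(theta), radius * np.sin(theta), np.zeros(n)], axis=1
    )


def project(points, R, t, K):
    """Project 3D needle-frame points to pixels for pose (R, t) with a pinhole camera."""
    cam = points @ R.T + t          # needle frame -> camera frame
    uv = cam @ K.T                  # apply intrinsics
    return uv[:, :2] / uv[:, 2:3]   # perspective division


def overlap_score(detections, projected, sigma=2.0):
    """Illustrative overlap in [0, 1]: mean Gaussian weight of the distance
    from each projected point to its nearest detected feature point."""
    diff = projected[:, None, :] - detections[None, :, :]
    d_min = np.linalg.norm(diff, axis=2).min(axis=1)
    return float(np.mean(np.exp(-0.5 * (d_min / sigma) ** 2)))


# Hypothetical camera intrinsics and needle pose, chosen only for the example.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
R_true = np.eye(3)
t_true = np.array([0.0, 0.0, 0.1])

pts = needle_points()
detections = project(pts, R_true, t_true, K)  # stand-in for detector output

# Score a pose hypothesis at the true pose and one shifted by 5 mm along x.
score_true = overlap_score(detections, project(pts, R_true, t_true, K))
score_shifted = overlap_score(
    detections, project(pts, R_true, t_true + np.array([0.005, 0.0, 0.0]), K)
)
print(score_true, score_shifted)
```

In a Bayesian filter such as a particle filter, a score of this kind could serve as the observation likelihood for each pose hypothesis; the covariance approximation described in the abstract is not reproduced here.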
