Zhiyuan Zha

College of Communication Engineering, Jilin University

Triply Laplacian Scale Mixture Modeling for Seismic Data Noise Suppression

Feb 20, 2025

Abstract:Sparsity-based tensor recovery methods have shown great potential in suppressing seismic data noise. These methods exploit tensor sparsity measures capturing the low-dimensional structures inherent in seismic data tensors to remove noise by applying sparsity constraints through soft-thresholding or hard-thresholding operators. However, in these methods, considering that real seismic data are non-stationary and affected by noise, the variances of tensor coefficients are unknown and may be difficult to accurately estimate from the degraded seismic data, leading to undesirable noise suppression performance. In this paper, we propose a novel triply Laplacian scale mixture (TLSM) approach for seismic data noise suppression, which significantly improves the estimation accuracy of both the sparse tensor coefficients and hidden scalar parameters. To make the optimization problem manageable, an alternating direction method of multipliers (ADMM) algorithm is employed to solve the proposed TLSM-based seismic data noise suppression problem. Extensive experimental results on synthetic and field seismic data demonstrate that the proposed TLSM algorithm outperforms many state-of-the-art seismic data noise suppression methods in both quantitative and qualitative evaluations while providing exceptional computational efficiency.
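For readers unfamiliar with the two operators named in this abstract, the following is a minimal sketch of elementwise soft- and hard-thresholding in NumPy. The function names, the sample coefficients, and the fixed threshold `tau` are illustrative assumptions and are not taken from the paper; in particular, a single fixed threshold presumes the coefficient variance is known, which is the limitation the abstract points out.

```python
import numpy as np

def soft_threshold(x, tau):
    """Shrink every entry toward zero by tau; entries with |x| <= tau become 0."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def hard_threshold(x, tau):
    """Keep entries with |x| > tau unchanged; set all others to 0."""
    return np.where(np.abs(x) > tau, x, 0.0)

# Hypothetical coefficients and threshold, chosen only for illustration.
coeffs = np.array([-3.0, -0.5, 0.2, 1.5, 4.0])
tau = 1.0
soft = soft_threshold(coeffs, tau)   # large entries are shrunk by tau
hard = hard_threshold(coeffs, tau)   # large entries are kept as they are
```

Soft-thresholding biases the surviving coefficients by `tau`, while hard-thresholding leaves them untouched but is discontinuous at the threshold.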

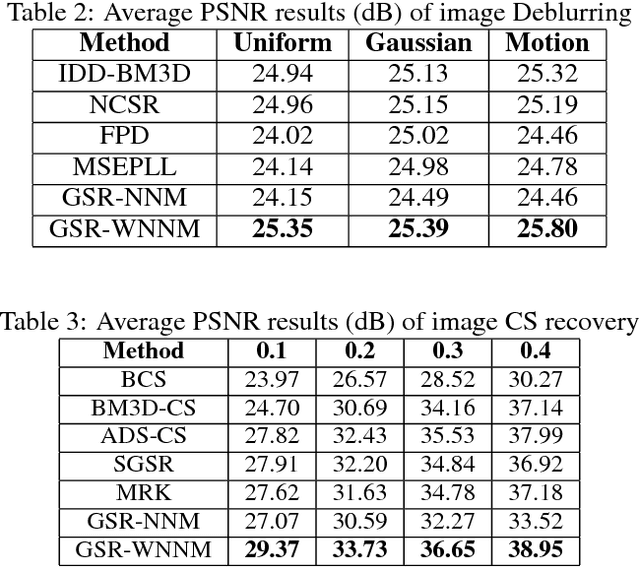

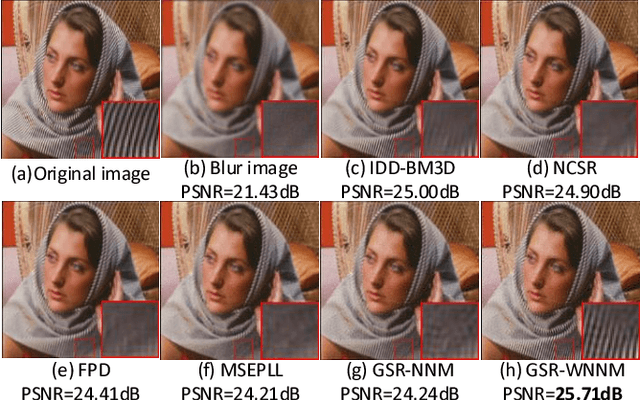

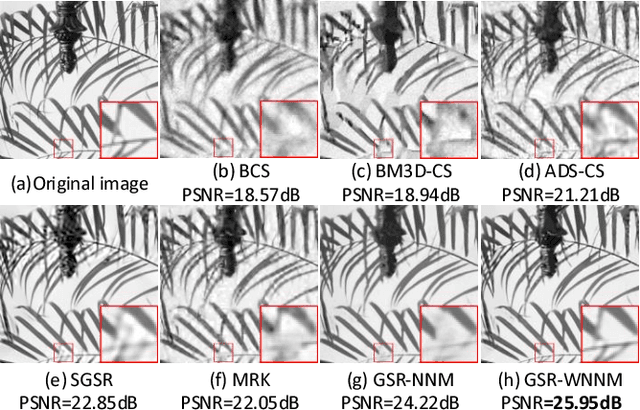

Learning Nonlocal Sparse and Low-Rank Models for Image Compressive Sensing

Mar 22, 2022

Abstract:The compressive sensing (CS) scheme exploits much fewer measurements than suggested by the Nyquist-Shannon sampling theorem to accurately reconstruct images, which has attracted considerable attention in the computational imaging community. While classic image CS schemes employed sparsity using analytical transforms or bases, the learning-based approaches have become increasingly popular in recent years. Such methods can effectively model the structures of image patches by optimizing their sparse representations or learning deep neural networks, while preserving the known or modeled sensing process. Beyond exploiting local image properties, advanced CS schemes adopt nonlocal image modeling, by extracting similar or highly correlated patches at different locations of an image to form a group to process jointly. More recent learning-based CS schemes apply nonlocal structured sparsity prior using group sparse representation (GSR) and/or low-rank (LR) modeling, which have demonstrated promising performance in various computational imaging and image processing applications. This article reviews some recent works in image CS tasks with a focus on the advanced GSR and LR based methods. Furthermore, we present a unified framework for incorporating various GSR and LR models and discuss the relationship between GSR and LR models. Finally, we discuss the open problems and future directions in the field.

R3L: Connecting Deep Reinforcement Learning to Recurrent Neural Networks for Image Denoising via Residual Recovery

Jul 12, 2021

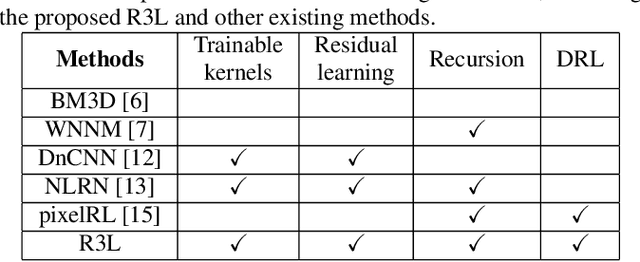

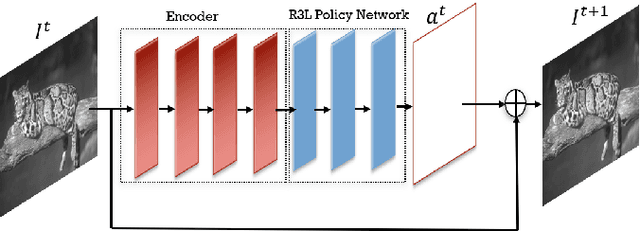

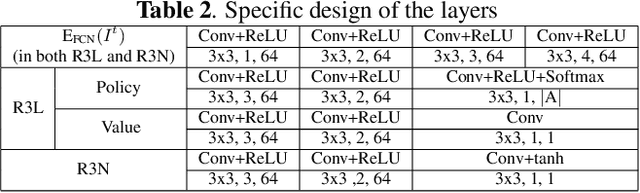

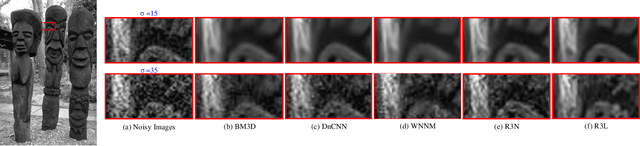

Abstract:State-of-the-art image denoisers exploit various types of deep neural networks via deterministic training. Alternatively, very recent works utilize deep reinforcement learning for restoring images with diverse or unknown corruptions. Though deep reinforcement learning can generate effective policy networks for operator selection or architecture search in image restoration, how it is connected to the classic deterministic training in solving inverse problems remains unclear. In this work, we propose a novel image denoising scheme via Residual Recovery using Reinforcement Learning, dubbed R3L. We show that R3L is equivalent to a deep recurrent neural network that is trained using a stochastic reward, in contrast to many popular denoisers using supervised learning with deterministic losses. To benchmark the effectiveness of reinforcement learning in R3L, we train a recurrent neural network with the same architecture for residual recovery using the deterministic loss, in order to analyze how the two different training strategies affect the denoising performance. With such a unified benchmarking system, we demonstrate that the proposed R3L has better generalizability and robustness in image denoising when the estimated noise level varies, compared to its counterparts using deterministic training, as well as various state-of-the-art image denoising algorithms.

The Power of Triply Complementary Priors for Image Compressive Sensing

May 16, 2020

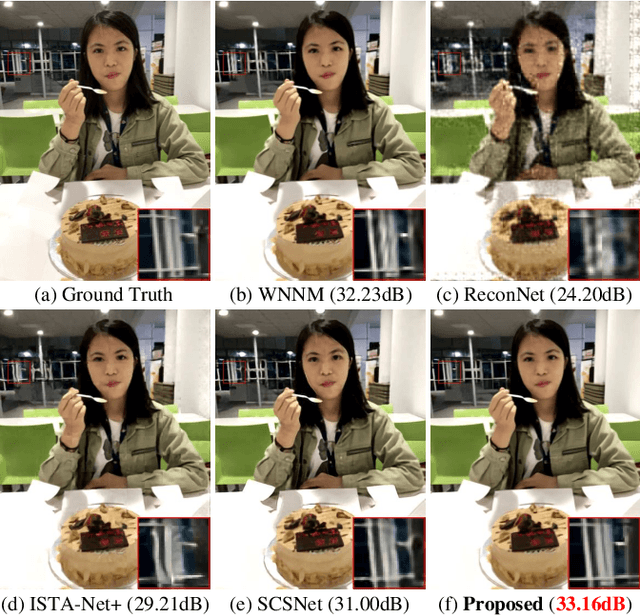

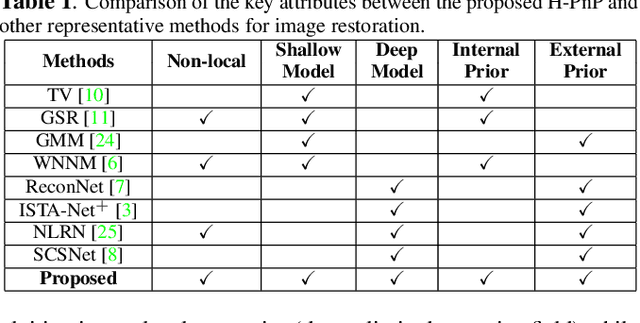

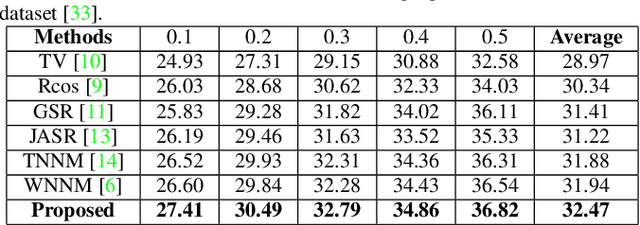

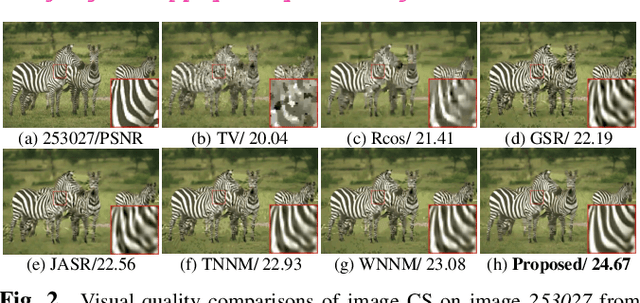

Abstract:Recent works that utilize deep models have achieved superior results in various image restoration applications. Such approaches are typically supervised, requiring a corpus of training images with a distribution similar to that of the images to be recovered. On the other hand, shallow methods, which are usually unsupervised, still deliver promising performance in many inverse problems, e.g., image compressive sensing (CS), as they can effectively leverage non-local self-similarity priors of natural images. However, most such methods are patch-based, leading to restored images with various ringing artifacts due to naive patch aggregation. Using either approach alone usually limits performance and generalizability in image restoration tasks. In this paper, we propose a joint low-rank and deep (LRD) image model, which contains a set of triply complementary priors, namely external and internal, deep and shallow, and local and non-local priors. We then propose a novel hybrid plug-and-play (H-PnP) framework based on the LRD model for image CS. To make the optimization tractable, a simple yet effective algorithm is proposed to solve the proposed H-PnP based image CS problem. Extensive experimental results demonstrate that the proposed H-PnP algorithm significantly outperforms the state-of-the-art techniques for image CS recovery such as SCSNet and WNNM.

From Rank Estimation to Rank Approximation: Rank Residual Constraint for Image Denoising

Aug 14, 2018

Abstract:In this paper, we propose a novel approach for the rank minimization problem, termed rank residual constraint (RRC). Different from existing low-rank based approaches, such as the well-known weighted nuclear norm minimization (WNNM) and nuclear norm minimization (NNM), which aim to estimate the underlying low-rank matrix directly from the corrupted observation, we progressively approximate or approach the underlying low-rank matrix via minimizing the rank residual. By integrating the image nonlocal self-similarity (NSS) prior with the proposed RRC model, we develop an iterative algorithm for image denoising. To this end, we first present a recursive based nonlocal means method to obtain a good reference of the original image patch groups, and then the rank residual of the image patch groups between this reference and the noisy image is minimized to achieve a better estimate of the desired image. In this manner, both the reference and the estimated image in each iteration are improved gradually and jointly. Based on the group-based sparse representation model, we further provide a theoretical analysis on the feasibility of the proposed RRC model. Experimental results demonstrate that the proposed RRC model outperforms many state-of-the-art denoising methods in both the objective and perceptual qualities.

Analyzing the Weighted Nuclear Norm Minimization and Nuclear Norm Minimization based on Group Sparse Representation

Jul 19, 2018

Abstract:Rank minimization methods have attracted considerable interest in various areas, such as computer vision and machine learning. The most representative work is nuclear norm minimization (NNM), which can recover the matrix rank exactly under certain restrictive conditions with theoretical guarantees. However, for many real applications, NNM is not able to approximate the matrix rank accurately, since it often tends to over-shrink the rank components. To rectify the weakness of NNM, recent advances have shown that weighted nuclear norm minimization (WNNM), which heuristically sets the weights to be inverse to the singular values, can achieve a better matrix rank approximation than NNM. However, a sound mathematical explanation of why WNNM is more feasible than NNM is still lacking. In this paper, we propose a scheme to analyze WNNM and NNM from the perspective of the group sparse representation. Specifically, we design an adaptive dictionary to bridge the gap between the group sparse representation and the rank minimization models. Based on this scheme, we provide a mathematical derivation to explain why WNNM is more feasible than NNM. Moreover, due to the heuristic setting of the weights, WNNM sometimes produces errors in the SVD operation, and thus we present an adaptive weight setting scheme to avoid this error. We then employ the proposed scheme on two low-level vision tasks including image denoising and image inpainting. Experimental results demonstrate that WNNM is more feasible than NNM and the proposed scheme outperforms many current state-of-the-art methods.
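The contrast between NNM and WNNM described in this abstract can be illustrated with a small NumPy sketch. NNM is implemented here as singular value soft-thresholding with one threshold for every component; the WNNM variant is a simplified single-pass version whose weights are computed as `c / (sigma_i + eps)` from the observed singular values. The function names, the constant `c`, the stabilizer `eps`, and the synthetic test matrix are all assumptions made for illustration, and this is not the adaptive weight setting scheme proposed in the paper.

```python
import numpy as np

def nnm_shrink(Y, tau):
    """Singular value soft-thresholding: the same threshold for all components."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt

def wnnm_shrink(Y, c, eps=1e-8):
    """Single-pass weighted shrinkage with weights inverse to the singular values."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    w = c / (s + eps)          # large singular values receive small weights
    return (U * np.maximum(s - w, 0.0)) @ Vt

# Synthetic example: a rank-3 matrix plus small Gaussian noise.
rng = np.random.default_rng(0)
L = rng.standard_normal((20, 3)) @ rng.standard_normal((3, 15))
Y = L + 0.1 * rng.standard_normal(L.shape)

X_nnm = nnm_shrink(Y, tau=1.0)
X_wnnm = wnnm_shrink(Y, c=1.0)

# Compare how strongly the dominant component is shrunk by each rule.
s_in = np.linalg.svd(Y, compute_uv=False)
s_nnm = np.linalg.svd(X_nnm, compute_uv=False)
s_wnnm = np.linalg.svd(X_wnnm, compute_uv=False)
```

In this sketch the dominant singular value loses the full threshold under NNM but only `c / sigma` under the weighted rule, which is one way to see the over-shrinking behavior the abstract attributes to NNM.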

Group Sparsity Residual with Non-Local Samples for Image Denoising

Mar 22, 2018

Abstract:Inspired by group-based sparse coding, the recently proposed group sparsity residual (GSR) scheme has demonstrated superior performance in image processing. However, one challenge in GSR is to estimate the residual by using a proper reference of the group-based sparse coding (GSC), which is desired to be as close to the truth as possible. Previous studies used estimates from other algorithms (e.g., GMM or BM3D), which are either not accurate or too slow. In this paper, we propose to use the Non-Local Samples (NLS) as the reference in the GSR regime for image denoising, thus termed GSR-NLS. More specifically, we first obtain a good estimate of the group sparse coefficients by exploiting the image nonlocal self-similarity, and then solve the GSR model by an effective iterative shrinkage algorithm. Experimental results demonstrate that the proposed GSR-NLS not only outperforms many state-of-the-art methods, but also offers competitive speed.

Bridge the Gap Between Group Sparse Coding and Rank Minimization via Adaptive Dictionary Learning

Nov 08, 2017

Abstract:Both sparse coding and rank minimization have led to great successes in various image processing tasks. Though the underlying principles of these two approaches are similar, no theory is available to demonstrate the correspondence. In this paper, starting by designing an adaptive dictionary for each group of image patches, we analyze the sparsity of image patches in each group using the rank minimization approach. Based on this, we prove that the group-based sparse coding is equivalent to the rank minimization problem under our proposed adaptive dictionary. Therefore, the sparsity of the coefficients for each group can be measured by estimating the singular values of this group. Inspired by our theoretical analysis, four nuclear norm like minimization methods including the standard nuclear norm minimization (NNM), weighted nuclear norm minimization (WNNM), Schatten $p$-norm minimization (SNM), and weighted Schatten $p$-norm minimization (WSNM), are employed to analyze the sparsity of the coefficients and WSNM is found to be the closest solution to the singular values of each group. Based on this, WSNM is then translated to a non-convex weighted $\ell_p$-norm minimization problem in group-based sparse coding, and in order to solve this problem, a new algorithm based on the alternating direction method of multipliers (ADMM) framework is developed. Experimental results on two low-level vision tasks: image inpainting and image compressive sensing recovery, demonstrate that the proposed scheme is feasible and outperforms state-of-the-art methods.
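The equivalence claimed in this abstract can be made concrete with a small numerical check. The abstract does not spell out the adaptive dictionary, so the sketch below assumes one natural construction: each atom is the rank-one outer product of a left and a right singular vector of the patch group. The group matrix `X` is random and purely hypothetical. Under this assumption the coefficients coincide with the singular values, so the l1 norm of the coefficients equals the nuclear norm of the group.

```python
import numpy as np

rng = np.random.default_rng(1)
# Hypothetical group: 10 vectorized 4x4 patches stored as columns.
X = rng.standard_normal((16, 10))

U, s, Vt = np.linalg.svd(X, full_matrices=False)

# Assumed adaptive dictionary: atom i is the rank-one matrix u_i v_i^T.
atoms = np.stack([np.outer(U[:, i], Vt[i]) for i in range(len(s))])

# The coefficient of atom i is the inner product <X, atom_i>.
coeffs = np.array([np.sum(X * a) for a in atoms])

# Rebuild the group as a weighted sum of atoms.
X_rebuilt = np.tensordot(coeffs, atoms, axes=1)

l1_of_coeffs = np.abs(coeffs).sum()
nuclear_norm = np.linalg.norm(X, ord="nuc")
```

Because the atoms are orthonormal under the matrix inner product, penalizing the coefficients and penalizing the singular values become the same operation in this construction.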

Group-based Sparse Representation for Image Compressive Sensing Reconstruction with Non-Convex Regularization

Aug 17, 2017

Abstract:Patch-based sparse representation modeling has shown great potential in image compressive sensing (CS) reconstruction. However, this model usually suffers from some limitations, such as the high computational complexity of dictionary learning and the neglect of the relationship among similar patches. In this paper, a group-based sparse representation method with non-convex regularization (GSR-NCR) for image CS reconstruction is proposed. In GSR-NCR, the local sparsity and nonlocal self-similarity of images are simultaneously considered in a unified framework. Different from the previous methods based on sparsity-promoting convex regularization, we apply the non-convex weighted Lp (0 < p < 1) penalty function to the group sparse coefficients of the data matrix, rather than conventional L1-based regularization. To reduce the computational complexity, instead of learning the dictionary from natural images at a high computational cost, we learn a principal component analysis (PCA) based dictionary for each group. Moreover, to make the proposed scheme tractable and robust, we have developed an efficient iterative shrinkage/thresholding algorithm to solve the non-convex optimization problem. Experimental results demonstrate that the proposed method outperforms many state-of-the-art techniques for image CS reconstruction.
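As an illustration of why a per-group PCA dictionary is cheap compared with learning a dictionary from a training corpus, the sketch below builds an orthonormal PCA basis from a single group of patches with one eigendecomposition. The helper name `pca_dictionary`, the patch size, and the random group are assumptions made for the example; the paper's exact procedure may differ.

```python
import numpy as np

def pca_dictionary(group):
    """Return an orthonormal PCA basis for a group (one vectorized patch per column)."""
    centered = group - group.mean(axis=1, keepdims=True)
    cov = centered @ centered.T / group.shape[1]
    eigvals, eigvecs = np.linalg.eigh(cov)      # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]           # strongest component first
    return eigvecs[:, order]

rng = np.random.default_rng(2)
# Hypothetical group: 40 similar 6x6 patches, vectorized to length 36.
group = rng.standard_normal((36, 40))

D = pca_dictionary(group)
alpha = D.T @ group      # group coefficients under the PCA dictionary
rebuilt = D @ alpha      # exact reconstruction because D is orthonormal
```

Since `D` is square and orthonormal, analysis and synthesis are single matrix products, and any shrinkage rule can be applied directly to `alpha`.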

Group Sparsity Residual Constraint for Image Denoising

Jul 31, 2017

Abstract:Group-based sparse representation has shown great potential in image denoising. However, most existing methods consider only the nonlocal self-similarity (NSS) prior of the noisy input image. That is, the similar patches are collected only from the degraded input, which makes the quality of image denoising largely depend on the input itself. As a result, such methods often suffer from a common drawback: the denoising performance may degrade quickly with increasing noise levels. In this paper we propose a new prior model, called group sparsity residual constraint (GSRC). Unlike the conventional group-based sparse representation denoising methods, two kinds of priors, namely, the NSS priors of the noisy and pre-filtered images, are used in GSRC. In particular, we integrate these two NSS priors through the mechanism of sparsity residual, and thus, the task of image denoising is converted to the problem of reducing the group sparsity residual. To this end, we first obtain a good estimate of the group sparse coefficients of the original image by pre-filtering, and then the group sparse coefficients of the noisy image are used to approximate this estimate. To improve the accuracy of the nonlocal similar patch selection, an adaptive patch search scheme is designed. Furthermore, to better fuse these two NSS priors, an effective iterative shrinkage algorithm is developed to solve the proposed GSRC model. Experimental results demonstrate that the proposed GSRC modeling outperforms many state-of-the-art denoising methods in terms of the objective and the perceptual metrics.
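The idea of reducing a sparsity residual can be sketched in a few lines. Assuming an orthonormal dictionary, minimizing `0.5 * (a - noisy)**2 + lam * abs(a - reference)` for each coefficient has a closed form: soft-threshold the difference between the noisy and reference coefficients, then add the reference back. The function names, the sample values, and the single fixed `lam` are illustrative assumptions and not the paper's exact iterative algorithm.

```python
import numpy as np

def soft_threshold(x, tau):
    """Shrink every entry toward zero by tau."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def residual_shrink(noisy_coeffs, reference_coeffs, lam):
    """Shrink the residual (noisy - reference) instead of the coefficients themselves."""
    return reference_coeffs + soft_threshold(noisy_coeffs - reference_coeffs, lam)

# Hypothetical coefficients of a noisy group and of its pre-filtered reference.
noisy = np.array([2.0, -1.0, 0.3, 5.0])
reference = np.array([1.8, -0.2, 0.0, 3.0])
estimate = residual_shrink(noisy, reference, lam=0.5)
```

Where the noisy coefficient is within `lam` of the reference, the estimate snaps to the reference; elsewhere it moves `lam` toward it, so the quality of the reference directly bounds the quality of the result.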
