Zhipeng Lin

UAV-Aided Progressive Interference Source Localization Based on Improved Trust Region Optimization

Apr 27, 2025

Abstract: Trust region optimization-based received signal strength indicator (RSSI) interference source localization methods have been widely used in low-altitude research. However, these methods often converge to local optima in complex environments, which degrades positioning performance. This paper presents a novel unmanned aerial vehicle (UAV)-aided progressive interference source localization method based on improved trust region optimization. By combining the Levenberg-Marquardt (LM) algorithm with particle swarm optimization (PSO), our proposed method effectively improves the success rate of localization. We also propose a confidence quantification approach based on the UAV-to-ground channel model. This approach considers the environmental information surrounding the sampling points and dynamically adjusts the weights of the sampling data during data fusion, which significantly improves the overall positioning accuracy. Experimental results demonstrate that the proposed method achieves high-precision interference source localization in noisy and interference-prone environments.
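
The two-stage idea (a global PSO search to avoid local optima, followed by LM refinement on a confidence-weighted cost) can be sketched as below. This is a minimal illustration, not the authors' implementation. It assumes a log-distance path-loss model RSSI = P0 - 10·n·log10(d) with a known path-loss exponent n, a source at ground level (z = 0), and per-sample confidence weights w supplied by the caller; the paper derives its weights from a UAV-to-ground channel model, which is not reproduced here. All function names and parameter values are hypothetical.

```python
import numpy as np

LN10 = np.log(10.0)


def predicted_rssi(theta, uav_pos, n):
    """Log-distance path-loss model (dBm); theta = (x, y, P0), source at z = 0."""
    x, y, p0 = theta
    d2 = (uav_pos[:, 0] - x) ** 2 + (uav_pos[:, 1] - y) ** 2 + uav_pos[:, 2] ** 2
    return p0 - 5.0 * n * np.log10(d2)  # 10*n*log10(d) == 5*n*log10(d^2)


def profiled_cost(xy, uav_pos, rssi, w, n):
    """Weighted squared error at (x, y), with P0 solved in closed form."""
    path_loss = -predicted_rssi(np.array([xy[0], xy[1], 0.0]), uav_pos, n)
    p0 = np.sum(w * (rssi + path_loss)) / np.sum(w)
    return float(np.sum(w * (rssi - (p0 - path_loss)) ** 2)), p0


def pso(cost, lo, hi, n_particles=30, iters=80, seed=0):
    """Plain global-best PSO over the 2-D search box [lo, hi]."""
    rng = np.random.default_rng(seed)
    pos = rng.uniform(lo, hi, size=(n_particles, 2))
    vel = np.zeros_like(pos)
    pbest, pbest_val = pos.copy(), np.array([cost(p) for p in pos])
    for _ in range(iters):
        gbest = pbest[np.argmin(pbest_val)].copy()
        r1, r2 = rng.random((2, n_particles, 1))
        vel = 0.7 * vel + 1.5 * r1 * (pbest - pos) + 1.5 * r2 * (gbest - pos)
        pos = np.clip(pos + vel, lo, hi)
        vals = np.array([cost(p) for p in pos])
        improved = vals < pbest_val
        pbest[improved], pbest_val[improved] = pos[improved], vals[improved]
    return pbest[np.argmin(pbest_val)].copy()


def levenberg_marquardt(theta, uav_pos, rssi, w, n, iters=50, lam=1e-3):
    """Damped Gauss-Newton (LM) refinement of theta = (x, y, P0)."""
    sw = np.sqrt(w)
    k = 10.0 * n / LN10

    def residual(t):
        return sw * (rssi - predicted_rssi(t, uav_pos, n))

    r = residual(theta)
    for _ in range(iters):
        x, y, _ = theta
        d2 = (uav_pos[:, 0] - x) ** 2 + (uav_pos[:, 1] - y) ** 2 + uav_pos[:, 2] ** 2
        # Jacobian of the weighted residual with respect to (x, y, P0).
        J = np.column_stack([sw * k * (x - uav_pos[:, 0]) / d2,
                             sw * k * (y - uav_pos[:, 1]) / d2,
                             -sw])
        A, g = J.T @ J, J.T @ r
        step = np.linalg.solve(A + lam * np.diag(np.diag(A)), -g)
        r_new = residual(theta + step)
        if r_new @ r_new < r @ r:  # accept: move toward Gauss-Newton
            theta, r, lam = theta + step, r_new, lam / 10.0
        else:                      # reject: move toward gradient descent
            lam *= 10.0
    return theta


def localize(uav_pos, rssi, w, n, lo, hi):
    """Stage 1: PSO global search over (x, y); stage 2: LM local refinement."""
    xy0 = pso(lambda xy: profiled_cost(xy, uav_pos, rssi, w, n)[0], lo, hi)
    _, p0 = profiled_cost(xy0, uav_pos, rssi, w, n)
    return levenberg_marquardt(np.array([xy0[0], xy0[1], p0]), uav_pos, rssi, w, n)
```

Solving P0 in closed form inside the PSO cost keeps the global search two-dimensional, and the LM stage then refines all three unknowns jointly from the PSO starting point.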

Mitigating Sensitive Information Leakage in LLMs4Code through Machine Unlearning

Feb 09, 2025

Abstract: Large Language Models for Code (LLMs4Code) excel at code generation tasks and promise to relieve developers of heavy software development burdens. Nonetheless, these models have been shown to suffer from significant privacy risks due to the potential leakage of sensitive information embedded during training, known as the memorization problem. Addressing this issue is crucial for ensuring privacy compliance and upholding user trust, but to date there is a dearth of dedicated studies in the literature that focus on this specific direction. Recently, machine unlearning has emerged as a promising solution by enabling models to "forget" sensitive information without full retraining, offering an efficient and scalable alternative to traditional data cleaning methods. In this paper, we empirically evaluate the effectiveness of unlearning techniques for addressing privacy concerns in LLMs4Code. Specifically, we investigate three state-of-the-art unlearning algorithms and three well-known open-source LLMs4Code on a benchmark that considers both the private data to be forgotten and the code generation capabilities of these models. Results show that it is feasible to mitigate the privacy concerns of LLMs4Code through machine unlearning while maintaining their code generation capabilities. We also dissect the forms of privacy protection and leakage after unlearning and observe a shift from direct leakage to indirect leakage, which underscores the need for future studies addressing this risk.

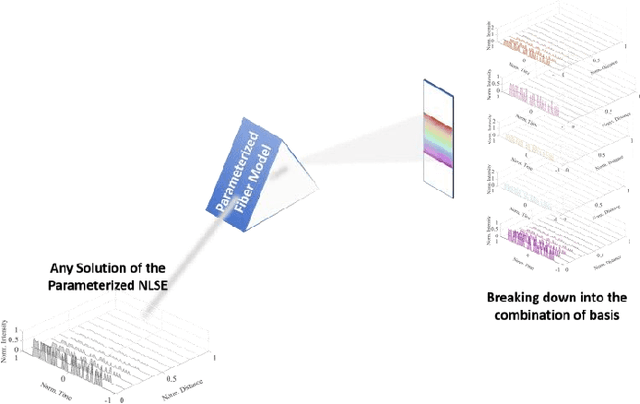

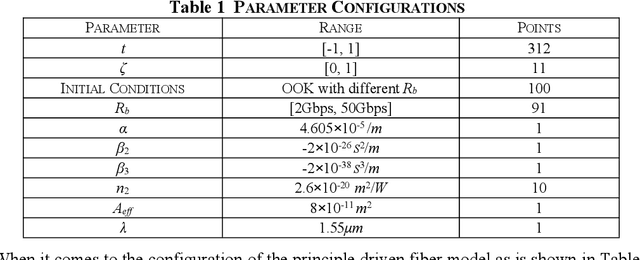

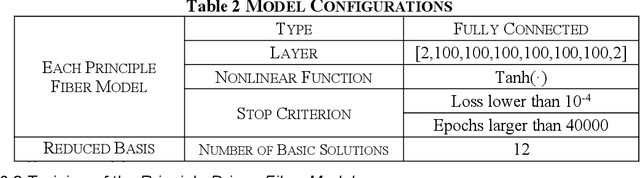

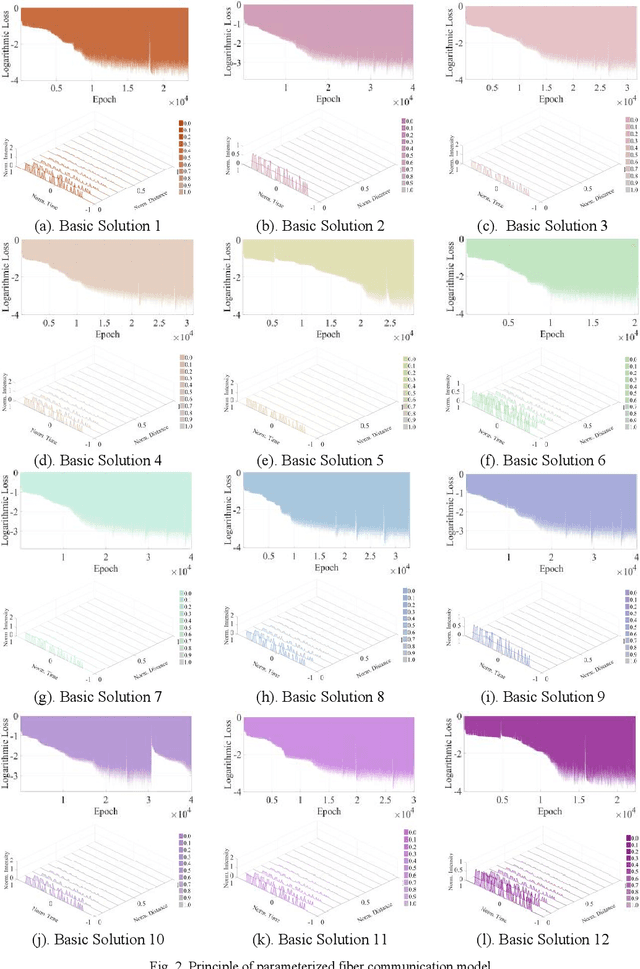

Principle Driven Parameterized Fiber Model based on GPT-PINN Neural Network

Aug 19, 2024

Abstract: To cater to the needs of Beyond 5G communications, a large number of data-driven, artificial intelligence-based fiber models have been put forward that use the regression ability of artificial intelligence to predict pulse evolution in fiber transmission much faster than the traditional split-step Fourier method. To increase physical interpretability, principle-driven fiber models have been proposed that insert the Nonlinear Schrödinger Equation (NLSE) into their loss functions. However, both principle-driven and data-driven models must be retrained in full under different transmission conditions. Unfortunately, such retraining can be unavoidable when conducting fiber communication optimization work. If the number of transmission conditions is large, the whole model, with its relatively large number of parameters, must be retrained many times, which incurs high time costs and drags down computing efficiency. To address this problem, we propose a principle-driven parameterized fiber model in this manuscript. Via the reduced basis method, this model decomposes the predicted NLSE solution for a given set of transmission conditions into a linear combination of several eigen solutions, each output by a pre-trained principle-driven fiber model. Therefore, the model greatly alleviates the heavy burden of retraining, since only the linear combination coefficients need to be found when the transmission condition changes. The model not only possesses strong physical interpretability but also achieves higher computing efficiency. In the demonstration, the model's computational complexity is 0.0113% of that of the split-step Fourier method and 1% of that of the previously proposed principle-driven fiber model.
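
For reference, the split-step Fourier method used as the baseline above can be sketched as a textbook symmetric SSFM. This is not code from the paper. It assumes the standard NLSE form dA/dz = -(α/2)A - i(β2/2)∂²A/∂T² + iγ|A|²A with consistent units (ps, km, W), and the fiber parameters in the example (β2 = -21.7 ps²/km, γ = 1.3 W⁻¹km⁻¹, α = 0.046 km⁻¹, i.e. 0.2 dB/km) are assumed typical single-mode fiber values; the function name is hypothetical.

```python
import numpy as np


def ssfm(A0, dt, length, n_steps, alpha, beta2, gamma):
    """Symmetric split-step Fourier solver for the NLSE
        dA/dz = -(alpha/2) A - i (beta2/2) d^2A/dT^2 + i gamma |A|^2 A.
    Units must be consistent, e.g. T in ps, z in km, beta2 in ps^2/km,
    gamma in 1/(W km), alpha in 1/km, |A|^2 in W."""
    dz = length / n_steps
    omega = 2.0 * np.pi * np.fft.fftfreq(A0.size, d=dt)  # angular frequency grid
    # With NumPy's FFT sign convention, d^2/dT^2 -> -omega^2, so the linear
    # (dispersion + loss) operator for half a step is a per-frequency factor.
    half_linear = np.exp((0.5j * beta2 * omega ** 2 - 0.5 * alpha) * dz / 2.0)
    A = np.asarray(A0, dtype=complex)
    for _ in range(n_steps):
        A = np.fft.ifft(half_linear * np.fft.fft(A))     # half linear step
        A = A * np.exp(1j * gamma * np.abs(A) ** 2 * dz)  # full nonlinear (Kerr) step
        A = np.fft.ifft(half_linear * np.fft.fft(A))     # half linear step
    return A


T = (np.arange(1024) - 512) * 0.5                        # time grid, ps
A0 = np.sqrt(0.01) * np.exp(-T ** 2 / (2 * 10.0 ** 2))   # 10 mW Gaussian, T0 = 10 ps
A = ssfm(A0, dt=0.5, length=10.0, n_steps=200, alpha=0.046, beta2=-21.7, gamma=1.3)
```

Every step costs two FFT/IFFT pairs, so the cost scales with both the number of steps and the number of time samples; this per-condition cost is what the surrogate model is compared against.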

Fiber Transmission Model with Parameterized Inputs based on GPT-PINN Neural Network

Aug 19, 2024

Abstract: In this manuscript, a novel principle-driven fiber transmission model with parameterized inputs is put forward for short-distance transmission. By building on the previously proposed principle-driven fiber model and the reduced basis expansion method, and by transforming the parameterized inputs into parameterized coefficients of the Nonlinear Schrödinger Equation, universal solutions for inputs corresponding to different bit rates can all be obtained without retraining the whole model. Once adopted, this model offers prominent advantages in both computational efficiency and physical grounding. Moreover, the model can still be trained effectively without transmitted signals collected in advance. Transmission tasks of on-off keying signals with bit rates ranging from 2 Gbps to 50 Gbps are used to demonstrate the fidelity of the model.
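
Under one plausible reading of this abstract, the bit rate B enters the NLSE only through its coefficients once time is normalized to the bit period, and the reduced-basis expansion then replaces retraining with a small coefficient fit. The normalization T = τ/B, the residual-based fit, and the symbols below are assumptions for illustration, not the paper's exact formulation.

```latex
% Standard NLSE (loss, second-order dispersion, Kerr nonlinearity)
\frac{\partial A}{\partial z} = -\frac{\alpha}{2}A - \frac{i\beta_2}{2}\frac{\partial^2 A}{\partial T^2} + i\gamma\lvert A\rvert^2 A

% Normalize time to the bit period, T = \tau / B, so \partial^2/\partial T^2 = B^2\,\partial^2/\partial\tau^2:
\frac{\partial A}{\partial z} = -\frac{\alpha}{2}A - \frac{i\beta_2 B^2}{2}\frac{\partial^2 A}{\partial \tau^2} + i\gamma\lvert A\rvert^2 A

% Reduced-basis expansion over K pre-trained solutions A_k obtained at bit rates B_k:
A(z,\tau;B) \approx \sum_{k=1}^{K} c_k(B)\,A_k(z,\tau)

% Coefficients chosen to minimize the NLSE residual at collocation points (z_j, \tau_j):
\mathcal{R}_B[A] = \frac{\partial A}{\partial z} + \frac{\alpha}{2}A + \frac{i\beta_2 B^2}{2}\frac{\partial^2 A}{\partial \tau^2} - i\gamma\lvert A\rvert^2 A,
\qquad
\mathbf{c}(B) = \arg\min_{\mathbf{c}} \sum_{j} \Big\lvert \mathcal{R}_B\Big[\textstyle\sum_{k} c_k A_k\Big](z_j,\tau_j) \Big\rvert^2
```

Because the Kerr term is cubic in A, the residual is nonlinear in the coefficients, so the fit is a K-dimensional nonlinear least-squares problem rather than a linear solve. It is still far smaller than retraining a full network.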

A Reconfigurable Subarray Architecture and Hybrid Beamforming for Millimeter-Wave Dual-Function-Radar-Communication Systems

Apr 24, 2024

Abstract: Dual-function-radar-communication (DFRC) is a promising candidate technology for next-generation networks. By integrating hybrid analog-digital (HAD) beamforming into a multi-user millimeter-wave (mmWave) DFRC system, we design a new reconfigurable subarray (RS) architecture and jointly optimize the HAD beamforming to maximize the communication sum-rate while ensuring a prescribed signal-to-clutter-plus-noise ratio for radar sensing. Because the multiplicative coupling of the analog and digital beamforming makes this problem non-convex, we convert the sum-rate maximization into an equivalent weighted mean-square error minimization and apply penalty dual decomposition to decouple the analog and digital beamforming. Specifically, a second-order cone program is first constructed to optimize the fully digital counterpart of the HAD beamforming. Then, the sparsity of the RS architecture is exploited to obtain a low-complexity solution for the HAD beamforming. The convergence and complexity of our algorithm are analyzed under the RS architecture. Simulations corroborate that, with the RS architecture, DFRC offers effective communication and sensing and improves energy efficiency by 83.4% and 114.2% with a moderate number of radio frequency chains and phase shifters, compared to the partially- and fully-connected architectures, respectively.
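
The communication objective and the subarray structure can be illustrated with a small sketch. It assumes a narrowband downlink with one data stream per user and reads "reconfigurable subarray" as an antenna-to-RF-chain assignment that can be changed, with one unit-modulus phase shifter per antenna. The radar SCNR constraint, the WMMSE and penalty dual decomposition solver, and the function names are not from the paper.

```python
import numpy as np


def subarray_analog_precoder(assignment, phases, n_rf):
    """N_t x N_RF analog precoder: antenna i feeds only RF chain assignment[i]
    through a unit-modulus phase shifter exp(j * phases[i])."""
    assignment = np.asarray(assignment)
    F_rf = np.zeros((assignment.size, n_rf), dtype=complex)
    F_rf[np.arange(assignment.size), assignment] = np.exp(1j * np.asarray(phases, dtype=float))
    return F_rf


def sum_rate(H, F, noise_power):
    """Sum-rate in bit/s/Hz. Row k of H (K x N_t) is user k's channel h_k^H;
    column k of F (N_t x K) is the precoder for user k."""
    G = np.abs(H @ F) ** 2                 # G[k, j] = |h_k^H f_j|^2
    signal = np.diag(G)
    interference = G.sum(axis=1) - signal  # multi-user interference at user k
    return float(np.sum(np.log2(1.0 + signal / (interference + noise_power))))


# 4 antennas, 2 RF chains: antennas {0, 1} -> chain 0, antennas {2, 3} -> chain 1.
F_rf = subarray_analog_precoder([0, 0, 1, 1], np.zeros(4), n_rf=2)
H = np.array([[1, 1, 0, 0], [0, 0, 1, 1]], dtype=complex)
F = F_rf @ np.eye(2)  # hybrid precoder F = F_RF F_BB with an identity digital stage
print(sum_rate(H, F, noise_power=1.0))  # 2*log2(5), about 4.644
```

Changing the `assignment` vector changes which antennas share an RF chain without adding phase shifters, which is the degree of freedom a reconfigurable subarray adds over a fixed partially-connected layout.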

Sparse Bayesian Learning-Based 3D Spectrum Environment Map Construction-Sampling Optimization, Scenario-Dependent Dictionary Construction and Sparse Recovery

Feb 25, 2023

Abstract: The spectrum environment map (SEM), which visualizes information about the invisible electromagnetic spectrum, is vital for the monitoring, management, and security of spectrum resources in cognitive radio (CR) networks. Given a limited number of spectrum sensors and constrained sampling time, this paper presents a new three-dimensional (3D) SEM construction scheme based on sparse Bayesian learning (SBL). First, we construct a scenario-dependent channel dictionary matrix by considering the propagation characteristics of the scenario of interest. To improve sampling efficiency, a maximum mutual information (MMI)-based optimization algorithm is developed for the layout of the sampling sensors. Then, a maximum and minimum distance (MMD) clustering-based SBL algorithm is proposed to recover the spectrum data at unsampled positions and construct the whole 3D SEM. Finally, we use simulation data from a campus scenario to construct 3D SEMs and compare the proposed method with state-of-the-art methods. The recovery performance and the impact of different sparsity levels on the constructed SEMs are also analyzed. Numerical results show that the proposed scheme reduces the number of required spectrum sensors and achieves higher accuracy at low sampling rates.
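
The sparse recovery step can be illustrated with a generic SBL sketch using Tipping-style EM updates. It assumes a linear model y = Φw + noise with known noise variance, where Φ stands in for the scenario-dependent dictionary; the dictionary construction, MMI sensor layout, and MMD clustering from the paper are not reproduced, and the random data below is synthetic.

```python
import numpy as np


def sbl(Phi, y, noise_var, n_iter=1000):
    """EM-based sparse Bayesian learning for y = Phi @ w + noise.

    Prior: w_i ~ N(0, gamma_i), one variance hyperparameter per dictionary
    column; hyperparameters driven toward zero prune their columns."""
    n, m = Phi.shape
    gamma = np.ones(m)
    for _ in range(n_iter):
        PG = Phi * gamma                              # Phi @ diag(gamma)
        C = noise_var * np.eye(n) + PG @ Phi.T        # marginal covariance of y
        mu = gamma * (Phi.T @ np.linalg.solve(C, y))  # posterior mean of w
        # Diagonal of the posterior covariance: gamma - diag(Gamma Phi^T C^-1 Phi Gamma).
        sigma_diag = gamma - np.sum(PG * np.linalg.solve(C, PG), axis=0)
        gamma = np.maximum(mu ** 2 + sigma_diag, 0.0)  # EM update, clipped for round-off
    return mu


rng = np.random.default_rng(0)
Phi = rng.standard_normal((40, 100)) / np.sqrt(40)  # 40 samples, 100 dictionary atoms
w_true = np.zeros(100)
w_true[[5, 42, 77]] = [1.0, -0.8, 0.6]
y = Phi @ w_true + 0.01 * rng.standard_normal(40)
w_hat = sbl(Phi, y, noise_var=1e-4)
print(np.flatnonzero(np.abs(w_hat) > 0.1))
```

The covariance is written in the "marginal" form C = σ²I + ΦΓΦᵀ so that hyperparameters reaching zero never require inverting 1/γ.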

Geometry-Based Stochastic Probability Models for the LoS and NLoS Paths of A2G Channels under Urban Scenario

May 19, 2022

Abstract: Path probability prediction is essential for describing the dynamic birth and death of propagation paths and for building accurate channel models for air-to-ground (A2G) communications. The occurrence probability of each path is complex and time-variant due to the rapidly changing altitudes of UAVs and the scattering environments. Considering A2G channels in urban scenarios, this paper presents three novel stochastic probability models for the line-of-sight (LoS) path, the ground specular (GS) path, and the building-scattering (BS) path, respectively. By analyzing the geometric stochastic information of three-dimensional (3D) scattering environments, the proposed models are derived with respect to the width, height, and distribution of buildings. The effects of the Fresnel zone and the altitudes of the transceivers are also taken into account. Simulation results show that the proposed LoS path probability model performs well at different frequencies and altitudes and is consistent with existing models at low or high altitudes. Moreover, the proposed LoS and NLoS path probability models show good agreement with the ray-tracing (RT) simulation method.
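
As a point of comparison with "existing models", the building-statistics LoS probability commonly attributed to ITU-R P.1410 can be sketched as below. This is not the paper's proposed model, which additionally accounts for the Fresnel zone and also covers the GS and BS paths. The default urban parameters (α = 0.3, β = 500 buildings/km², γ = 15 m) are values often used in the literature and are assumptions here; the function name is hypothetical.

```python
import math


def p_los_itu(r_km, h_tx, h_rx, alpha=0.3, beta=500.0, gamma=15.0):
    """LoS probability over a statistical building layout (ITU-R P.1410 form).

    r_km: ground distance (km); h_tx, h_rx: antenna heights (m);
    alpha: fraction of land covered by buildings; beta: buildings per km^2;
    gamma: Rayleigh scale parameter of building heights (m)."""
    m = math.floor(r_km * math.sqrt(alpha * beta) - 1)  # m + 1 buildings are crossed
    if m < 0:
        return 1.0  # no building expected between the terminals
    p = 1.0
    for n in range(m + 1):
        # Height of the direct ray above the n-th building position.
        h = h_tx - (n + 0.5) * (h_tx - h_rx) / (m + 1)
        # Probability that a Rayleigh-distributed building height stays below h.
        p *= 1.0 - math.exp(-h ** 2 / (2.0 * gamma ** 2))
    return p


for h in (50.0, 100.0, 300.0):
    print(h, round(p_los_itu(1.0, h_tx=h, h_rx=1.5), 3))
```

Each factor is the probability that one building along the ground path is lower than the ray at that point, so raising the UAV altitude can only increase the product at a fixed distance.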

Energy-Delay Minimization of Task Migration Based on Game Theory in MEC-assisted Vehicular Networks

May 13, 2022

Abstract: Roadside units (RSUs), which have strong computing capability and are close to vehicle nodes, have been widely used to process the delay-sensitive and computation-intensive tasks of vehicle nodes. However, due to their high mobility, vehicles may drive out of the coverage of RSUs before receiving the task processing results. In this paper, we propose a mobile edge computing (MEC)-assisted vehicular network in which vehicles can offload their tasks to a nearby vehicle via a vehicle-to-vehicle (V2V) link or to a nearby RSU via a vehicle-to-infrastructure (V2I) link. These tasks can also be migrated via a V2V link or an infrastructure-to-infrastructure (I2I) link to avoid the scenario in which vehicles cannot receive the processed task from the RSUs. Considering the mutual interference between offloading and migrating tasks on the same link, we construct a game based on vehicle offloading decisions to minimize the computation overhead. By exploiting the finite improvement property, we prove that the game always converges to a Nash equilibrium. We then propose a task migration (TM) algorithm that includes three task-processing methods and two task-migration methods. Based on the TM algorithm, a computation overhead minimization offloading (COMO) algorithm is presented. Extensive simulation results show that the proposed TM and COMO algorithms reduce the computation overhead and increase the success rate of task processing.
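
The convergence argument can be illustrated with a toy offloading game. This is a sketch under assumed costs, not the paper's overhead model: each vehicle picks local computing or one of several shared links, and a shared link's cost rises linearly with the number of vehicles using it (a crude stand-in for mutual interference). Such a game admits an exact potential, so every strict improvement lowers the potential and best-response dynamics must stop at a pure Nash equilibrium, which is the finite improvement property. `base_cost` and `congestion` are hypothetical parameters.

```python
import numpy as np

LOCAL = 0  # strategy 0: compute locally (no shared link)


def player_cost(i, profile, base_cost, congestion):
    """Overhead of vehicle i: its own base cost for the chosen option plus,
    for shared links (strategy >= 1), a penalty growing with the link's load."""
    s = profile[i]
    if s == LOCAL:
        return base_cost[i, s]
    load = sum(1 for t in profile if t == s)
    return base_cost[i, s] + congestion * load


def best_response_dynamics(base_cost, congestion, max_rounds=1000):
    """Let vehicles switch to strictly better options one at a time until no
    one can improve, i.e. until a pure Nash equilibrium is reached."""
    n_vehicles, n_options = base_cost.shape
    profile = [LOCAL] * n_vehicles
    for _ in range(max_rounds):
        changed = False
        for i in range(n_vehicles):
            costs = [player_cost(i, profile[:i] + [s] + profile[i + 1:], base_cost, congestion)
                     for s in range(n_options)]
            best = int(np.argmin(costs))
            if costs[best] < costs[profile[i]] - 1e-12:  # strict improvement only
                profile[i] = best
                changed = True
        if not changed:
            return profile
    raise RuntimeError("best-response dynamics did not converge")


def is_nash(profile, base_cost, congestion):
    """True if no vehicle can lower its own cost by a unilateral deviation."""
    for i in range(len(profile)):
        current = player_cost(i, profile, base_cost, congestion)
        for s in range(base_cost.shape[1]):
            trial = profile[:i] + [s] + profile[i + 1:]
            if player_cost(i, trial, base_cost, congestion) < current - 1e-12:
                return False
    return True


rng = np.random.default_rng(0)
base = rng.uniform(1.0, 5.0, size=(8, 3))  # 8 vehicles; options: local, V2V, RSU
eq = best_response_dynamics(base, congestion=0.8)
print(eq, is_nash(eq, base, 0.8))
```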

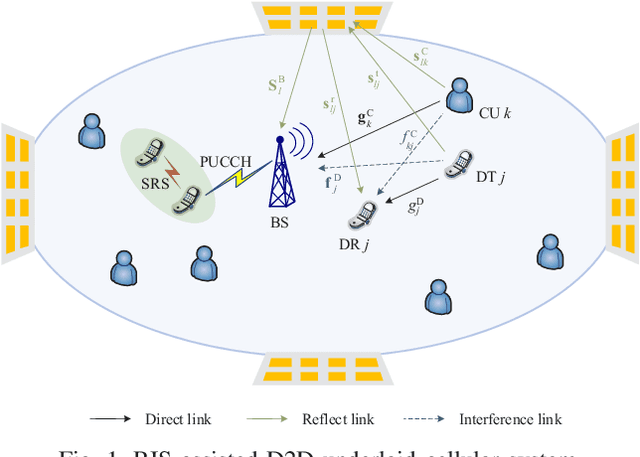

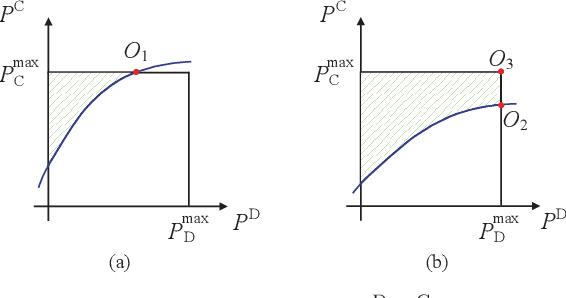

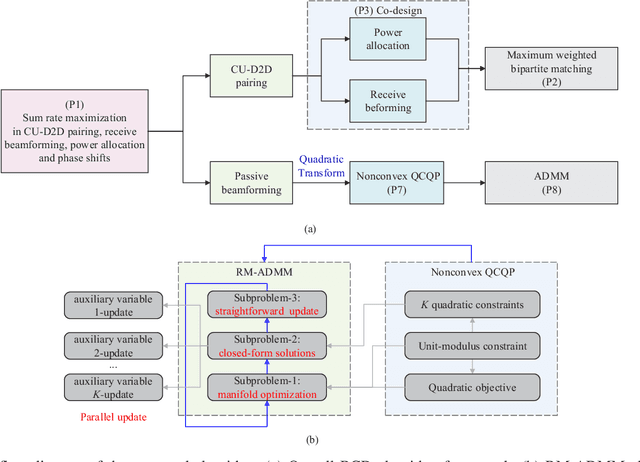

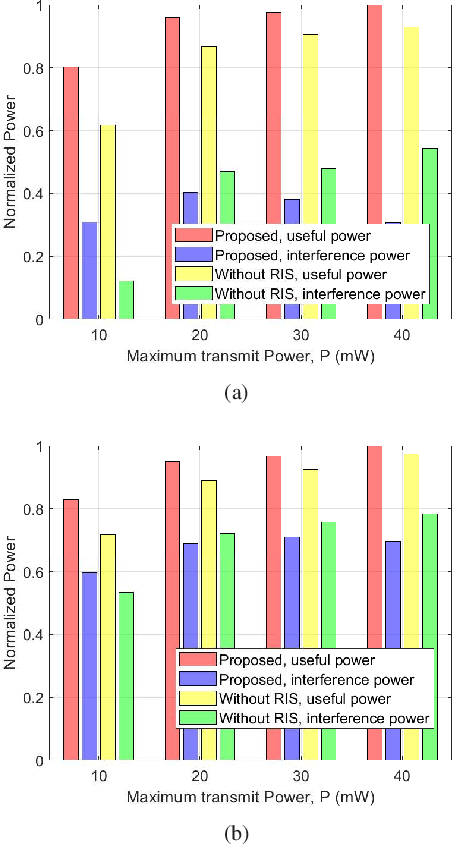

Sum-Rate Maximization for Multi-Reconfigurable Intelligent Surface-Assisted Device-to-Device Communications

Aug 16, 2021

Abstract: This paper proposes deploying multiple reconfigurable intelligent surfaces (RISs) in device-to-device (D2D)-underlaid cellular systems. The uplink sum-rate of the system is maximized by jointly optimizing the transmit powers of the users, the pairing of the cellular users (CUs) and D2D links, the receive beamforming of the base station (BS), and the configuration of the RISs, subject to the power limits and quality-of-service (QoS) requirements of the users. To address the non-convexity of this problem, we develop a new block coordinate descent (BCD) framework that decouples the D2D-CU pairing, power allocation, and receive beamforming from the configuration of the RISs. Specifically, we derive closed-form expressions for the power allocation and receive beamforming under any D2D-CU pairing, which allows the D2D-CU pairing to be interpreted as a bipartite graph matching problem solved using the Hungarian algorithm. We transform the configuration of the RISs into a quadratically constrained quadratic program (QCQP) with multiple quadratic constraints. A low-complexity algorithm, named Riemannian manifold-based alternating direction method of multipliers (RM-ADMM), is developed to decompose the QCQP into simpler QCQPs, each with a single constraint, and to solve them efficiently in a decentralized manner. Simulations show that the proposed algorithm can significantly improve the sum-rate of the D2D-underlaid system with reduced complexity compared to its alternative based on semidefinite relaxation (SDR).
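
Once closed-form power allocation and receive beamforming give an achievable rate for every candidate D2D-CU pair, the pairing step is a maximum-weight bipartite matching. A sketch, assuming the rate matrix has already been computed (the values below are made up) and using SciPy's `linear_sum_assignment`, which solves the same assignment problem as the Hungarian algorithm (recent SciPy versions use a modified Jonker-Volgenant method internally). The function name is hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def pair_d2d_with_cus(rate):
    """rate[d, c]: achievable sum-rate if D2D link d reuses cellular user c's channel.
    Returns the one-to-one pairing that maximizes the total rate, and that total."""
    rows, cols = linear_sum_assignment(rate, maximize=True)
    return list(zip(rows.tolist(), cols.tolist())), float(rate[rows, cols].sum())


# Hypothetical rate matrix: 3 D2D links (rows) x 4 cellular users (columns), bit/s/Hz.
rate = np.array([[5.1, 3.2, 4.0, 2.2],
                 [4.8, 4.9, 1.5, 3.0],
                 [2.0, 3.7, 4.4, 4.1]])
pairs, total = pair_d2d_with_cus(rate)
print(pairs, total)
```

Rectangular matrices are accepted, so when there are fewer D2D links than CUs, some CUs simply remain unpaired.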

Few-Sample Named Entity Recognition for Security Vulnerability Reports by Fine-Tuning Pre-Trained Language Models

Aug 14, 2021

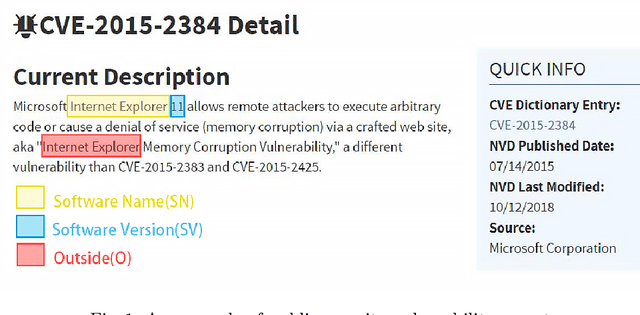

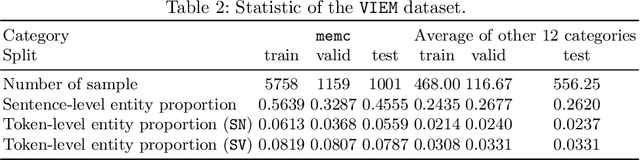

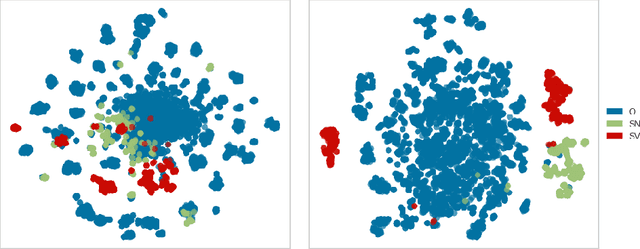

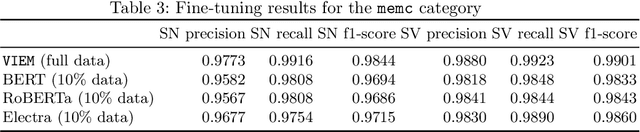

Abstract: Public security vulnerability reports (e.g., CVE reports) play an important role in the maintenance of computer and network systems. Security companies and administrators rely on information from these reports to prioritize tasks in developing and deploying patches for their customers. Since these reports are unstructured text, automatic information extraction (IE) can help scale up processing by converting the unstructured reports into structured forms, e.g., software names and versions and vulnerability types. Existing work on automated IE for security vulnerability reports often relies on a large number of labeled training samples. However, creating a massive labeled training set is both expensive and time-consuming. In this work, for the first time, we investigate this problem when only a small number of labeled training samples are available. In particular, we investigate the performance of fine-tuning several state-of-the-art pre-trained language models on our small training dataset. The results show that with pre-trained language models and carefully tuned hyperparameters, we reach or slightly outperform the state-of-the-art system on this task. Following the same two-step process as in [7], first fine-tuning on the main category and then transfer learning to the other categories, our proposed approach substantially decreases the number of required labeled samples in both stages: a 90% reduction in fine-tuning (from 5758 to 576 samples) and an 88.8% reduction in transfer learning, with 64 labeled samples per category. Our experiments thus demonstrate the effectiveness of few-sample learning for named entity recognition (NER) on security vulnerability reports. This result opens up multiple research opportunities for few-sample learning on security vulnerability reports, which are discussed in the paper. Code: https://github.com/guanqun-yang/FewVulnerability.
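
The step from token-level NER output to the structured fields mentioned above (software names and versions) can be illustrated with a BIO decoder. This is a generic sketch, not code from the linked repository; the tag names SN (software name) and SV (software version) and the example sentence are hypothetical.

```python
def bio_to_spans(tokens, tags):
    """Group BIO-tagged tokens into (entity_type, text) spans.
    An I- tag that does not continue the current entity starts a new span."""
    spans, cur_type, cur_tokens = [], None, []

    def flush():
        # Emit the entity being built, if any.
        if cur_type is not None:
            spans.append((cur_type, " ".join(cur_tokens)))

    for token, tag in zip(tokens, tags):
        if tag.startswith("B-") or (tag.startswith("I-") and tag[2:] != cur_type):
            flush()
            cur_type, cur_tokens = tag[2:], [token]
        elif tag.startswith("I-"):
            cur_tokens.append(token)
        else:  # "O": outside any entity
            flush()
            cur_type, cur_tokens = None, []
    flush()
    return spans


tokens = ["Buffer", "overflow", "in", "Foo", "Server", "before", "2.4.1", "allows", "attackers"]
tags = ["O", "O", "O", "B-SN", "I-SN", "B-SV", "I-SV", "O", "O"]
print(bio_to_spans(tokens, tags))
# [('SN', 'Foo Server'), ('SV', 'before 2.4.1')]
```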
