Zhipeng Dong

Towards Deploying VLA without Fine-Tuning: Plug-and-Play Inference-Time VLA Policy Steering via Embodied Evolutionary Diffusion

Nov 18, 2025

Abstract:Vision-Language-Action (VLA) models have demonstrated significant potential in real-world robotic manipulation. However, pre-trained VLA policies still suffer from substantial performance degradation during downstream deployment. Although fine-tuning can mitigate this issue, its reliance on costly demonstration collection and intensive computation makes it impractical in real-world settings. In this work, we introduce VLA-Pilot, a plug-and-play inference-time policy steering method for zero-shot deployment of pre-trained VLA without any additional fine-tuning or data collection. We evaluate VLA-Pilot on six real-world downstream manipulation tasks across two distinct robotic embodiments, encompassing both in-distribution and out-of-distribution scenarios. Experimental results demonstrate that VLA-Pilot substantially boosts the success rates of off-the-shelf pre-trained VLA policies, enabling robust zero-shot generalization to diverse tasks and embodiments. Experimental videos and code are available at: https://rip4kobe.github.io/vla-pilot/.

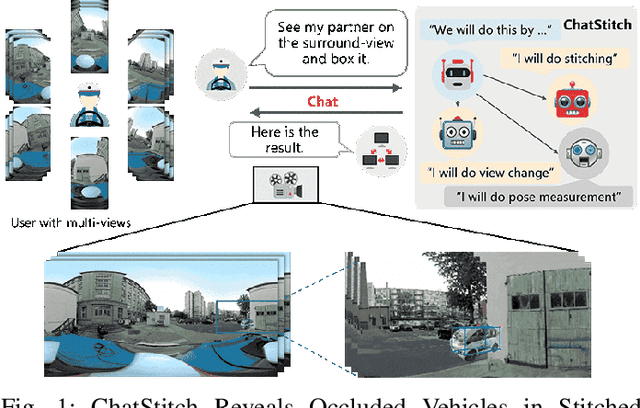

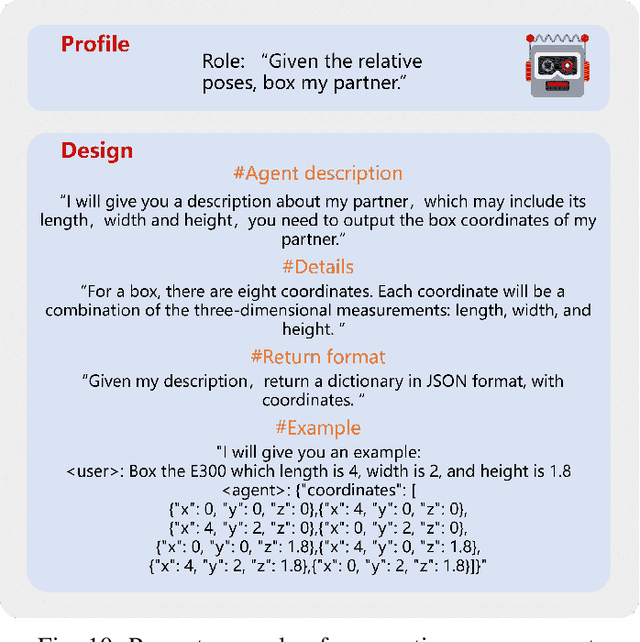

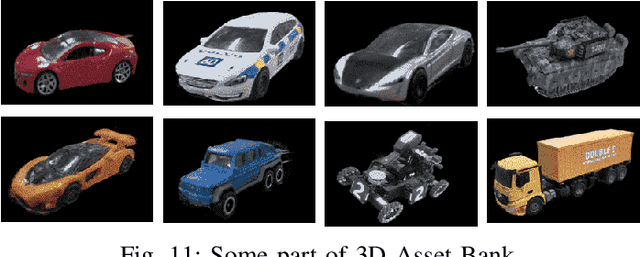

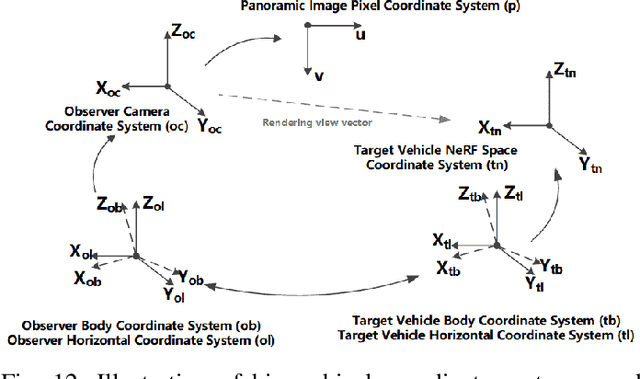

ChatStitch: Visualizing Through Structures via Surround-View Unsupervised Deep Image Stitching with Collaborative LLM-Agents

Mar 19, 2025

Abstract:Collaborative perception has garnered significant attention for its ability to enhance the perception capabilities of individual vehicles through the exchange of information with surrounding vehicle-agents. However, existing collaborative perception systems are limited by inefficiencies in user interaction and the challenge of multi-camera photorealistic visualization. To address these challenges, this paper introduces ChatStitch, the first collaborative perception system capable of unveiling obscured blind spot information through natural language commands integrated with external digital assets. To adeptly handle complex or abstract commands, ChatStitch employs a multi-agent collaborative framework based on Large Language Models. For achieving the most intuitive perception for humans, ChatStitch proposes SV-UDIS, the first surround-view unsupervised deep image stitching method under the non-global-overlapping condition. We conducted extensive experiments on the UDIS-D, MCOV-SLAM open datasets, and our real-world dataset. Specifically, our SV-UDIS method achieves state-of-the-art performance on the UDIS-D dataset for 3, 4, and 5 image stitching tasks, with PSNR improvements of 9%, 17%, and 21%, and SSIM improvements of 8%, 18%, and 26%, respectively.

Human-Humanoid Robots Cross-Embodiment Behavior-Skill Transfer Using Decomposed Adversarial Learning from Demonstration

Dec 19, 2024

Abstract:Humanoid robots are envisioned as embodied intelligent agents capable of performing a wide range of human-level loco-manipulation tasks, particularly in scenarios requiring strenuous and repetitive labor. However, learning these skills is challenging due to the high degrees of freedom of humanoid robots, and collecting sufficient training data for humanoids is a laborious process. Given the rapid introduction of new humanoid platforms, a cross-embodiment framework that allows generalizable skill transfer is becoming increasingly critical. To address this, we propose a transferable framework that reduces the data bottleneck by using a unified digital human model as a common prototype and bypassing the need for re-training on every new robot platform. The model learns behavior primitives from human demonstrations through adversarial imitation, and the complex robot structures are decomposed into functional components, each trained independently and dynamically coordinated. Task generalization is achieved through a human-object interaction graph, and skills are transferred to different robots via embodiment-specific kinematic motion retargeting and dynamic fine-tuning. Our framework is validated on five humanoid robots with diverse configurations, demonstrating stable loco-manipulation and highlighting its effectiveness in reducing data requirements and increasing the efficiency of skill transfer across platforms.

Unified Vertex Motion Estimation for Integrated Video Stabilization and Stitching in Tractor-Trailer Wheeled Robots

Dec 10, 2024

Abstract:Tractor-trailer wheeled robots need to perform comprehensive perception tasks to enhance their operations in areas such as logistics parks and long-haul transportation. The perception of these robots faces three major challenges: the relative pose change between the tractor and trailer, the asynchronous vibrations between the tractor and trailer, and the significant camera parallax caused by the large size. In this paper, we propose a novel Unified Vertex Motion Video Stabilization and Stitching framework designed for unknown environments. To establish the relationship between stabilization and stitching, the proposed Unified Vertex Motion framework comprises the Stitching Motion Field, which addresses relative positional change, and the Stabilization Motion Field, which tackles asynchronous vibrations. Then, recognizing the heterogeneity of optimization functions required for stabilization and stitching, a weighted cost function approach is proposed to address the problem of camera parallax. Furthermore, this framework has been successfully implemented in real tractor-trailer wheeled robots. The proposed Unified Vertex Motion Video Stabilization and Stitching method has been thoroughly tested in various challenging scenarios, demonstrating its accuracy and practicality in real-world robot tasks.

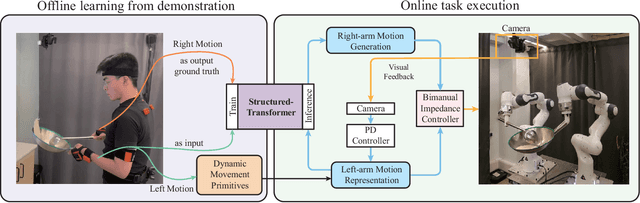

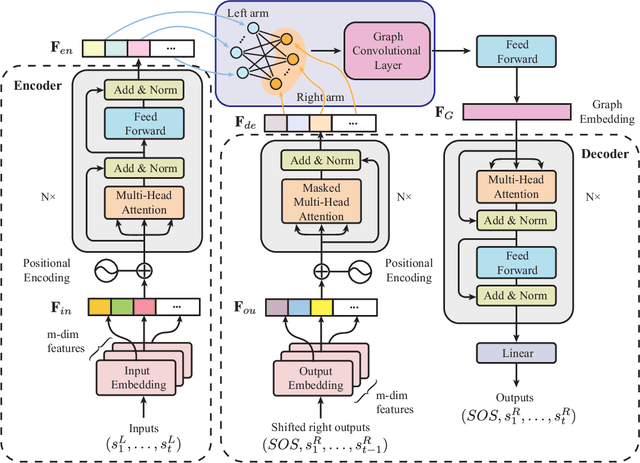

Robot Cooking with Stir-fry: Bimanual Non-prehensile Manipulation of Semi-fluid Objects

May 12, 2022

Abstract:This letter describes an approach to achieve the well-known Chinese cooking art of stir-fry on a bimanual robot system. Stir-fry requires a sequence of highly dynamic coordinated movements, which is usually difficult for a chef to learn, let alone transfer to robots. In this letter, we define a canonical stir-fry movement, and then propose a decoupled framework for learning this deformable object manipulation from human demonstration. First, the dual arms of the robot are decoupled into different roles (a leader and a follower) and learned with classical and neural network-based methods separately, then the bimanual task is transformed into a coordination problem. Second, to obtain general bimanual coordination, we propose a Graph and Transformer based model, Structured-Transformer, to capture the spatio-temporal relationship between dual-arm movements. Finally, by adding visual feedback of content deformation, our framework can adjust the movements automatically to achieve the desired stir-fry effect. We verify the framework in a simulator and deploy it on a real bimanual Panda robot system. The experimental results validate that our framework can realize the bimanual robot stir-fry motion and has the potential to extend to other deformable objects with bimanual coordination.

* 8 pages, 8 figures, published to RA-L

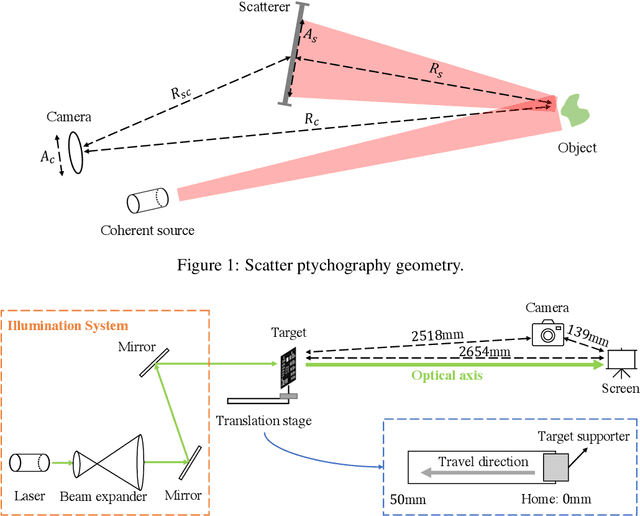

Scatter Ptychography

Mar 23, 2022

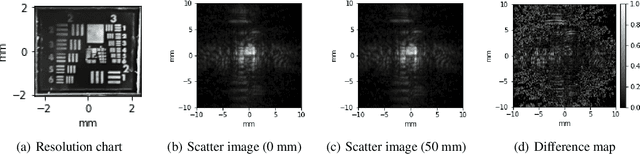

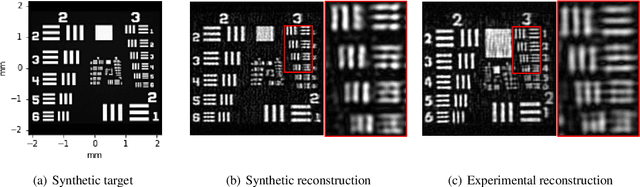

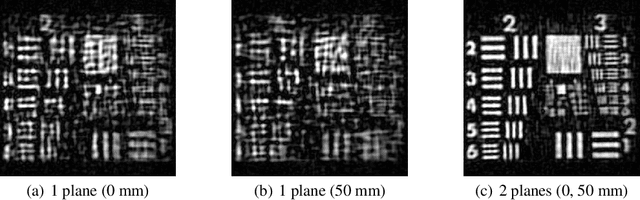

Abstract:Coherent illumination reflected by a remote target may be secondarily scattered by intermediate objects or materials. Here we show that phase retrieval on remotely observed images of such scattered fields enables imaging of the illuminated object at resolution proportional to $\lambda R_s/A_s$, where $R_s$ is the range between the scatterer and the target and $A_s$ is the diameter of the observed scatter. This resolution may exceed the resolution of directly viewing the target by the factor $R_cA_s/R_sA_c$, where $R_c$ is the range between the observer and the target and $A_c$ is the observing aperture. Here we use this technique to demonstrate $\approx 32\times$ resolution improvement relative to direct imaging.
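The improvement factor quoted in the abstract can be read as a ratio of two resolutions. A brief sketch, assuming the direct view is limited by the usual diffraction scaling $\lambda R_c/A_c$ (this expression is an assumption; the abstract states only the scatter-based resolution and the final ratio) and that both resolutions share the same proportionality constant:

$$
\frac{\delta_{\text{direct}}}{\delta_{\text{scatter}}}
= \frac{\lambda R_c / A_c}{\lambda R_s / A_s}
= \frac{R_c A_s}{R_s A_c}
$$

Here $\delta_{\text{direct}}$ and $\delta_{\text{scatter}}$ are labels introduced for this sketch. Under these assumptions, the reported $\approx 32\times$ improvement would correspond to $R_c A_s/(R_s A_c) \approx 32$ in the demonstrated setup.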

Vector Detection Network: An Application Study on Robots Reading Analog Meters in the Wild

May 30, 2021

Abstract:Analog meters equipped with one or multiple pointers are widely utilized to monitor vital devices' status in industrial sites for safety concerns. Reading these legacy meters autonomously remains an open problem since estimating pointer origin and direction under imaging damping factors imposed in the wild could be challenging. Nevertheless, high accuracy, flexibility, and real-time performance are demanded. In this work, we propose the Vector Detection Network (VDN) to detect analog meters' pointers given their images, eliminating the barriers for autonomously reading such meters using intelligent agents like robots. We treat the pointer as a two-dimensional vector, whose initial point coincides with the tip, and the direction is along tail-to-tip. The network estimates a confidence map, wherein the peak pixels are treated as vectors' initial points, along with a two-layer scalar map, whose pixel values at each peak form the scalar components in the directions of the coordinate axes. We established the Pointer-10K dataset, composed of real-world analog meter images, to evaluate our approach because no similar dataset is currently available. Experiments on the dataset demonstrated that our method generalizes well to various meters, is robust to harsh imaging factors, and runs in real time.
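The decoding step described in the abstract (peaks of a confidence map give initial points, and a two-layer scalar map gives the vector components at each peak) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `decode_pointers`, the array layouts, the 8-neighbour peak test, and the `threshold` parameter are all assumptions made for illustration.

```python
import numpy as np


def decode_pointers(confidence, components, threshold=0.5):
    """Return (x, y, dx, dy) for each local peak in a confidence map.

    Assumed layouts (hypothetical, not taken from the paper):
    confidence is an (H, W) array, components is a (2, H, W) array
    holding the x and y scalar components of the pointer vector.
    """
    confidence = np.asarray(confidence, dtype=float)
    components = np.asarray(components, dtype=float)
    h, w = confidence.shape

    # Pad with -inf so border pixels can be compared with 8 neighbours.
    padded = np.pad(confidence, 1, mode="constant", constant_values=-np.inf)

    # Collect the 8 shifted neighbour views and take their per-pixel maximum.
    neighbours = [
        padded[1 + dy : 1 + dy + h, 1 + dx : 1 + dx + w]
        for dy in (-1, 0, 1)
        for dx in (-1, 0, 1)
        if (dy, dx) != (0, 0)
    ]
    neighbour_max = np.max(neighbours, axis=0)

    # A peak is strictly greater than every neighbour and above the threshold.
    is_peak = (confidence > neighbour_max) & (confidence >= threshold)
    ys, xs = np.nonzero(is_peak)

    # Read the two scalar components at each peak location.
    return [
        (int(x), int(y), float(components[0, y, x]), float(components[1, y, x]))
        for y, x in zip(ys, xs)
    ]
```

Given the network outputs as arrays, `decode_pointers(confidence, components)` returns one tuple per detected pointer. A strict local-maximum test is only one simple choice here; a real pipeline might smooth the map or apply non-maximum suppression over a larger window.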
