Zangir Iklassov

The AI Data Scientist

Aug 25, 2025

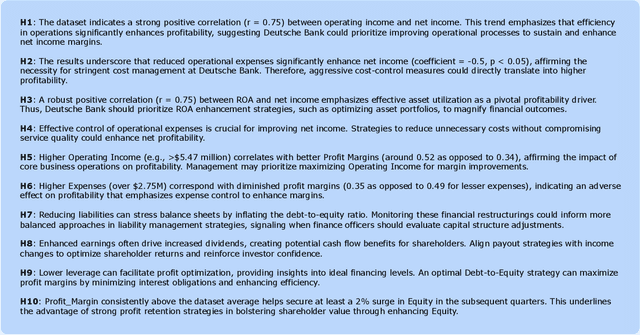

Abstract: Imagine decision-makers uploading data and, within minutes, receiving clear, actionable insights delivered straight to their fingertips. That is the promise of the AI Data Scientist, an autonomous Agent powered by large language models (LLMs) that closes the gap between evidence and action. Rather than simply writing code or responding to prompts, it reasons through questions, tests ideas, and delivers end-to-end insights at a pace far beyond traditional workflows. Guided by the scientific tenet of the hypothesis, this Agent uncovers explanatory patterns in data, evaluates their statistical significance, and uses them to inform predictive modeling. It then translates these results into recommendations that are both rigorous and accessible. At the core of the AI Data Scientist is a team of specialized LLM Subagents, each responsible for a distinct task such as data cleaning, statistical testing, validation, and plain-language communication. These Subagents write their own code, reason about causality, and identify when additional data is needed to support sound conclusions. Together, they achieve in minutes what might otherwise take days or weeks, enabling a new kind of interaction that makes deep data science both accessible and actionable.

SVRPBench: A Realistic Benchmark for Stochastic Vehicle Routing Problem

May 29, 2025

Abstract: Robust routing under uncertainty is central to real-world logistics, yet most benchmarks assume static, idealized settings. We present SVRPBench, the first open benchmark to capture high-fidelity stochastic dynamics in vehicle routing at urban scale. Spanning more than 500 instances with up to 1000 customers, it simulates realistic delivery conditions: time-dependent congestion, log-normal delays, probabilistic accidents, and empirically grounded time windows for residential and commercial clients. Our pipeline generates diverse, constraint-rich scenarios, including multi-depot and multi-vehicle setups. Benchmarking reveals that state-of-the-art RL solvers like POMO and AM degrade by over 20% under distributional shift, while classical and metaheuristic methods remain robust. To enable reproducible research, we release the dataset and evaluation suite. SVRPBench challenges the community to design solvers that generalize beyond synthetic assumptions and adapt to real-world uncertainty.
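To make the listed sources of uncertainty concrete, here is a minimal Python sketch of how a single travel time could be sampled from time-dependent congestion, a log-normal delay, and a probabilistic accident. It is not SVRPBench's actual generator: the function names, the Gaussian rush-hour shape, and every parameter value (`delay_sigma`, `accident_prob`, `accident_minutes`, and so on) are assumptions chosen purely for illustration.

```python
import math
import random


def congestion_factor(hour: float) -> float:
    """Hypothetical time-of-day multiplier with bumps around 08:00 and 17:00."""
    morning = 0.5 * math.exp(-((hour - 8.0) ** 2) / 2.0)
    evening = 0.5 * math.exp(-((hour - 17.0) ** 2) / 2.0)
    return 1.0 + morning + evening


def sample_travel_time(
    base_minutes: float,
    hour: float,
    rng: random.Random,
    delay_mu: float = 0.0,           # assumed log-normal location parameter
    delay_sigma: float = 0.5,        # assumed log-normal scale parameter
    accident_prob: float = 0.02,     # assumed per-trip accident probability
    accident_minutes: float = 30.0,  # assumed fixed penalty when an accident occurs
) -> float:
    """Sample one stochastic travel time in minutes (illustrative only)."""
    # Deterministic part: free-flow time scaled by time-of-day congestion.
    travel = base_minutes * congestion_factor(hour)
    # Random part 1: a strictly positive log-normal delay.
    travel += rng.lognormvariate(delay_mu, delay_sigma)
    # Random part 2: a rare accident that adds a fixed penalty.
    if rng.random() < accident_prob:
        travel += accident_minutes
    return travel


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs give the same samples
    for hour in (3.0, 8.0, 17.0):
        print(hour, round(sample_travel_time(10.0, hour, rng), 2))
```

Passing the `random.Random` instance explicitly keeps sampling reproducible, which matters when different solvers are compared on the same stochastic instances.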

LLM-BABYBENCH: Understanding and Evaluating Grounded Planning and Reasoning in LLMs

May 17, 2025

Abstract: Assessing the capacity of Large Language Models (LLMs) to plan and reason within the constraints of interactive environments is crucial for developing capable AI agents. We introduce LLM-BabyBench, a new benchmark suite designed specifically for this purpose. Built upon a textual adaptation of the procedurally generated BabyAI grid world, this suite evaluates LLMs on three fundamental aspects of grounded intelligence: (1) predicting the consequences of actions on the environment state (Predict task), (2) generating sequences of low-level actions to achieve specified objectives (Plan task), and (3) decomposing high-level instructions into coherent subgoal sequences (Decompose task). We detail the methodology for generating the three corresponding datasets (`LLM-BabyBench-Predict`, `-Plan`, `-Decompose`) by extracting structured information from an expert agent operating within the text-based environment. Furthermore, we provide a standardized evaluation harness and metrics, including environment interaction for validating generated plans, to facilitate reproducible assessment of diverse LLMs. Initial baseline results highlight the challenges posed by these grounded reasoning tasks. The benchmark suite, datasets, data generation code, and evaluation code are made publicly available ([GitHub](https://github.com/choukrani/llm-babybench), [HuggingFace](https://huggingface.co/datasets/salem-mbzuai/LLM-BabyBench)).

A Decade of Deep Learning: A Survey on The Magnificent Seven

Dec 13, 2024

Abstract: Deep learning has fundamentally reshaped the landscape of artificial intelligence over the past decade, enabling remarkable achievements across diverse domains. At the heart of these developments lie multi-layered neural network architectures that excel at automatic feature extraction, leading to significant improvements in machine learning tasks. To demystify these advances and offer accessible guidance, we present a comprehensive overview of the most influential deep learning algorithms selected through a broad-based survey of the field. Our discussion centers on pivotal architectures, including Residual Networks, Transformers, Generative Adversarial Networks, Variational Autoencoders, Graph Neural Networks, Contrastive Language-Image Pre-training, and Diffusion models. We detail their historical context, highlight their mathematical foundations and algorithmic principles, and examine subsequent variants, extensions, and practical considerations such as training methodologies, normalization techniques, and learning rate schedules. Beyond historical and technical insights, we also address their applications, challenges, and potential research directions. This survey aims to serve as a practical manual for both newcomers seeking an entry point into cutting-edge deep learning methods and experienced researchers transitioning into this rapidly evolving domain.

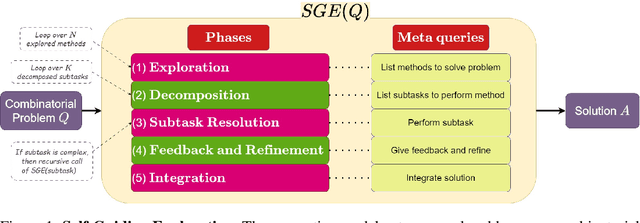

Self-Guiding Exploration for Combinatorial Problems

May 28, 2024

Abstract: Large Language Models (LLMs) have become pivotal in addressing reasoning tasks across diverse domains, including arithmetic, commonsense, and symbolic reasoning. They utilize prompting techniques such as Exploration-of-Thought, Decomposition, and Refinement to effectively navigate and solve intricate tasks. Despite these advancements, the application of LLMs to Combinatorial Problems (CPs), known for their NP-hardness and critical roles in logistics and resource management, remains underexplored. To address this gap, we introduce a novel prompting strategy: Self-Guiding Exploration (SGE), designed to enhance the performance of solving CPs. SGE operates autonomously, generating multiple thought trajectories for each CP task. It then breaks these trajectories down into actionable subtasks, executes them sequentially, and refines the results to ensure optimal outcomes. We present our research as the first to apply LLMs to a broad range of CPs and demonstrate that SGE outperforms existing prompting strategies by over 27.84% in CP optimization performance. Additionally, SGE achieves a 2.46% higher accuracy over the best existing results in other reasoning tasks (arithmetic, commonsense, and symbolic).

Reinforcement Learning for Solving Stochastic Vehicle Routing Problem with Time Windows

Feb 15, 2024

Abstract: This paper introduces a reinforcement learning approach to optimize the Stochastic Vehicle Routing Problem with Time Windows (SVRP), focusing on reducing travel costs in goods delivery. We develop a novel SVRP formulation that accounts for uncertain travel costs and demands, alongside specific customer time windows. An attention-based neural network trained through reinforcement learning is employed to minimize routing costs. Our approach addresses a gap in SVRP research, which traditionally relies on heuristic methods, by leveraging machine learning. The model outperforms the Ant-Colony Optimization algorithm, achieving a 1.73% reduction in travel costs. It uniquely integrates external information, demonstrating robustness in diverse environments, making it a valuable benchmark for future SVRP studies and industry application.

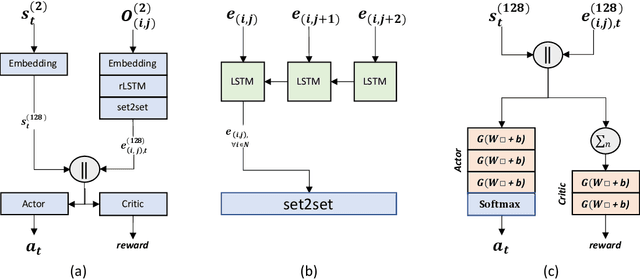

Reinforcement Learning for Solving Stochastic Vehicle Routing Problem

Nov 13, 2023

Abstract: This study addresses a gap in the utilization of Reinforcement Learning (RL) and Machine Learning (ML) techniques in solving the Stochastic Vehicle Routing Problem (SVRP), which involves the challenging task of optimizing vehicle routes under uncertain conditions. We propose a novel end-to-end framework that comprehensively addresses the key sources of stochasticity in SVRP and utilizes an RL agent with a simple yet effective architecture and a tailored training method. Through comparative analysis, our proposed model demonstrates superior performance compared to a widely adopted state-of-the-art metaheuristic, achieving a significant 3.43% reduction in travel costs. Furthermore, the model exhibits robustness across diverse SVRP settings, highlighting its adaptability and ability to learn optimal routing strategies in varying environments. The publicly available implementation of our framework serves as a valuable resource for future research endeavors aimed at advancing RL-based solutions for SVRP.

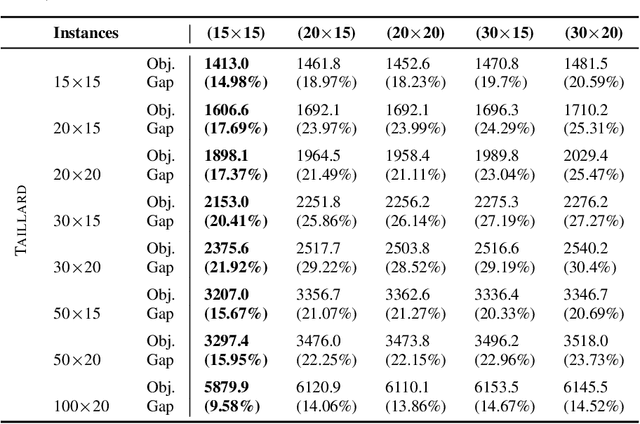

Learning to generalize Dispatching rules on the Job Shop Scheduling

Jun 09, 2022

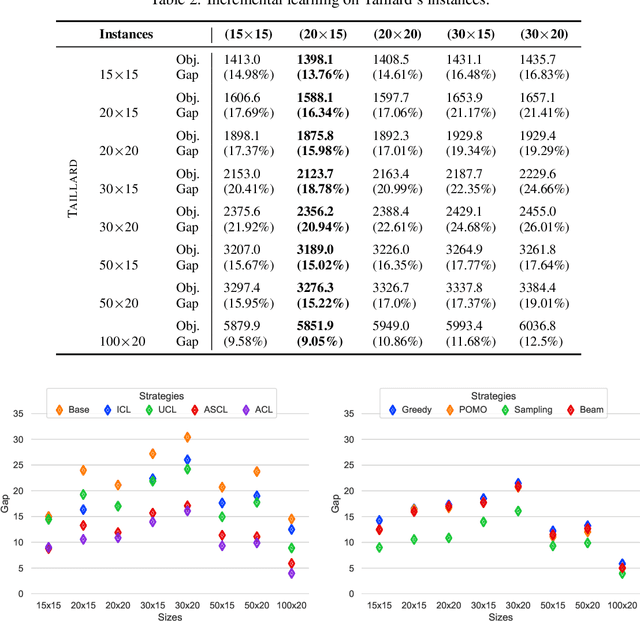

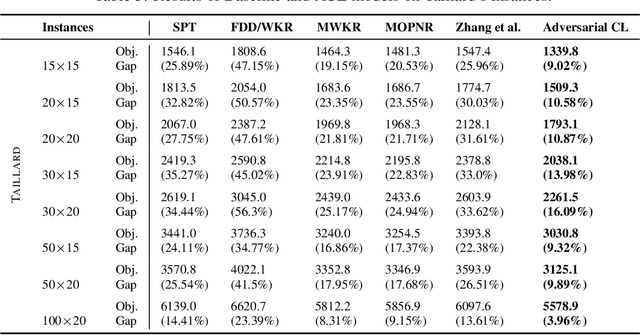

Abstract: This paper introduces a Reinforcement Learning approach to better generalize heuristic dispatching rules on the Job-shop Scheduling Problem (JSP). Current models on the JSP do not focus on generalization, although, as we show in this work, this is key to learning better heuristics on the problem. A well-known technique to improve generalization is to learn on increasingly complex instances using Curriculum Learning (CL). However, as many works in the literature indicate, this technique might suffer from catastrophic forgetting when transferring the learned skills between different problem sizes. To address this issue, we introduce a novel Adversarial Curriculum Learning (ACL) strategy, which dynamically adjusts the difficulty level during the learning process to revisit the worst-performing instances. This work also presents a deep learning model to solve the JSP, which is equivariant w.r.t. the job definition and size-agnostic. Conducted experiments on Taillard's and Demirkol's instances show that the presented approach significantly improves the current state-of-the-art models on the JSP. It reduces the average optimality gap from 19.35% to 10.46% on Taillard's instances and from 38.43% to 18.85% on Demirkol's instances. Our implementation is available online.
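For readers unfamiliar with the metric, the following Python sketch shows one common way an average optimality gap is computed: the relative excess of a schedule's makespan over a reference value (the optimum or best-known bound), expressed in percent and averaged over instances. The abstract does not spell out the exact definition used in the paper, so the formula, the function names, and the sample numbers are assumptions for illustration only.

```python
from typing import Sequence


def optimality_gap(makespan: float, reference: float) -> float:
    """Percentage by which `makespan` exceeds the reference makespan.

    `reference` is assumed to be the optimal or best-known value for the instance.
    """
    if reference <= 0:
        raise ValueError("reference makespan must be positive")
    return 100.0 * (makespan - reference) / reference


def average_gap(makespans: Sequence[float], references: Sequence[float]) -> float:
    """Mean optimality gap (in percent) over a set of benchmark instances."""
    if not makespans or len(makespans) != len(references):
        raise ValueError("need two non-empty sequences of equal length")
    gaps = [optimality_gap(m, r) for m, r in zip(makespans, references)]
    return sum(gaps) / len(gaps)


if __name__ == "__main__":
    # Made-up numbers: a schedule of length 1105 against a reference of 1000.
    print(optimality_gap(1105, 1000))             # 10.5
    print(average_gap([110, 240], [100, 200]))    # 15.0
```

Under this definition, a drop from 19.35% to 10.46% means the learned dispatching rule produces schedules that are, on average, about 10% longer than the reference instead of about 19% longer.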

AI for Porosity and Permeability Prediction from Geologic Core X-Ray Micro-Tomography

May 26, 2022

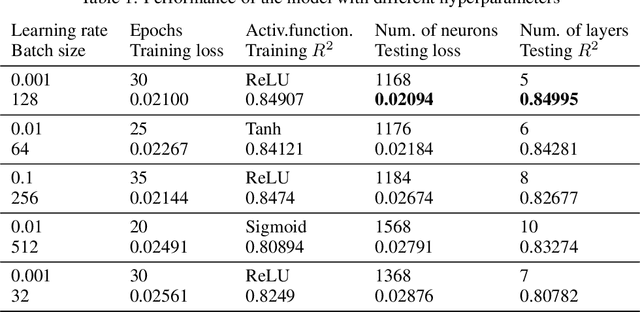

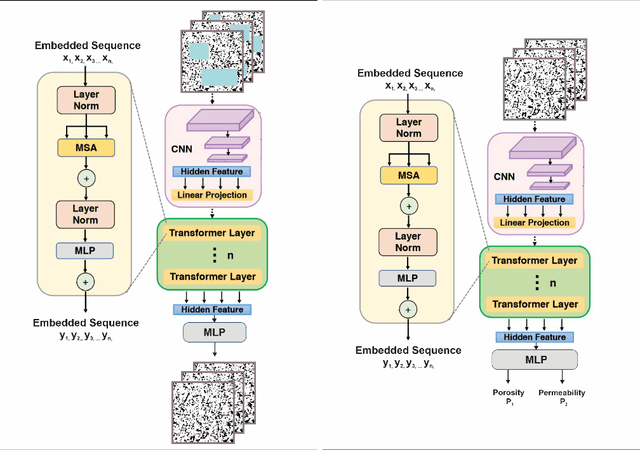

Abstract: Geologic cores are rock samples that are extracted from deep under the ground during the well drilling process. They are used to characterize the performance of petroleum reservoirs. Traditionally, physical studies of cores are carried out by means of manual, time-consuming experiments. With the development of deep learning, scientists have started developing machine-learning-based approaches to identify physical properties without any manual experiments. Several previous works used machine learning to determine the porosity and permeability of the rocks, but these methods were either inaccurate or computationally expensive. We propose self-supervised pretraining of a very small CNN-transformer-based model to predict the physical properties of the rocks with high accuracy in a time-efficient manner. We show that this technique prevents overfitting even for extremely small datasets.

Robust Reinforcement Learning on Graphs for Logistics optimization

May 25, 2022

Abstract: Logistics optimization is becoming one of the hottest areas in the AI community. In the past year, significant advancements in the domain were achieved by representing the problem in the form of a graph. Another promising area of research was to apply reinforcement learning algorithms to the above task. In our work, we take advantage of both approaches and apply reinforcement learning on a graph. To do that, we analyzed the most recent results in both fields and selected SOTA algorithms from both graph neural networks and reinforcement learning. Then, we combined the selected models on the problem of AMOD systems optimization for the transportation network of New York City. Our team compared three algorithms - GAT, Pro-CNN and PTDNet - to bring to the fore the important nodes on a graph representation. Finally, we achieved SOTA results on the AMOD systems optimization problem by employing PTDNet with a GNN and training them in a reinforcement learning fashion.

Keywords: Graph Neural Network (GNN), Logistics optimization, Reinforcement Learning
