Learning to generalize Dispatching rules on the Job Shop Scheduling


Jun 09, 2022

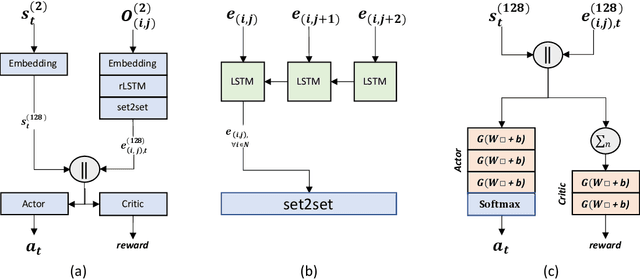

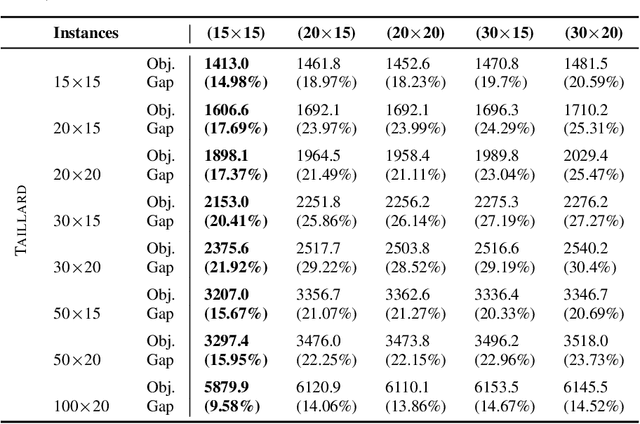

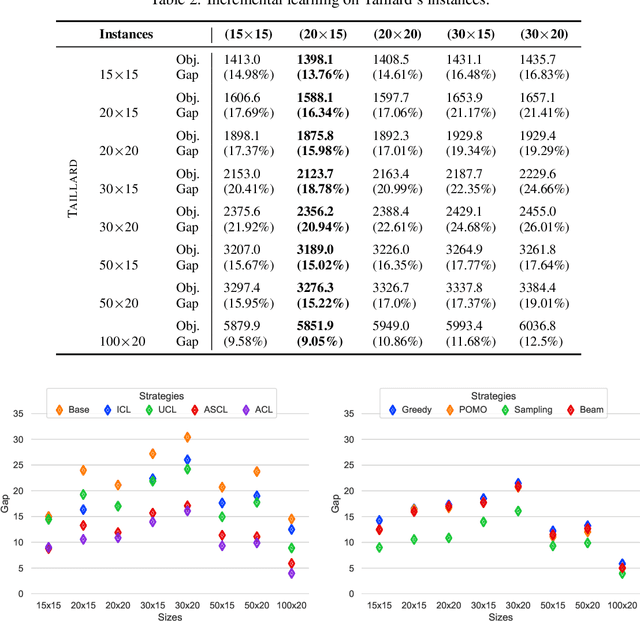

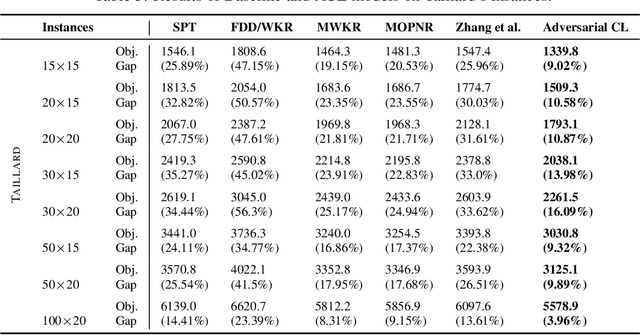

This paper introduces a Reinforcement Learning approach to better generalize heuristic dispatching rules on the Job-shop Scheduling Problem (JSP). Current models for the JSP do not focus on generalization, although, as we show in this work, generalization is key to learning better heuristics for the problem. A well-known technique to improve generalization is Curriculum Learning (CL), which trains on increasingly complex instances. However, as many works in the literature indicate, this technique can suffer from catastrophic forgetting when the learned skills are transferred between different problem sizes. To address this issue, we introduce a novel Adversarial Curriculum Learning (ACL) strategy, which dynamically adjusts the difficulty level during the learning process to revisit the worst-performing instances. This work also presents a deep learning model to solve the JSP that is size-agnostic and equivariant w.r.t. the job definition. Experiments on Taillard's and Demirkol's instances show that the presented approach significantly improves on the current state-of-the-art models for the JSP. It reduces the average optimality gap from 19.35% to 10.46% on Taillard's instances and from 38.43% to 18.85% on Demirkol's instances. Our implementation is available online.
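For readers unfamiliar with the terms, the sketch below illustrates what a dispatching rule and an optimality gap are. It is not the paper's learned model: it uses the classical shortest-processing-time (SPT) rule as a stand-in, and the names `spt_dispatch` and `optimality_gap`, the toy instance, and the gap formula (relative excess over a reference makespan, in percent) are assumptions made for illustration.

```python
from typing import List, Tuple

# A job is an ordered list of (machine, duration) operations (illustrative encoding).
Job = List[Tuple[int, int]]


def spt_dispatch(jobs: List[Job], num_machines: int) -> int:
    """Greedily schedule with the shortest-processing-time rule; return the makespan."""
    next_op = [0] * len(jobs)            # index of each job's next unscheduled operation
    job_ready = [0] * len(jobs)          # time at which each job's last scheduled operation ends
    machine_ready = [0] * num_machines   # time at which each machine becomes free
    remaining = sum(len(job) for job in jobs)

    while remaining:
        # Candidates are the next operations of all unfinished jobs.
        candidates = [j for j in range(len(jobs)) if next_op[j] < len(jobs[j])]
        # SPT: dispatch the candidate with the shortest duration (ties: lowest job index).
        j = min(candidates, key=lambda k: (jobs[k][next_op[k]][1], k))
        machine, duration = jobs[j][next_op[j]]
        # The operation starts once both its job and its machine are available.
        start = max(job_ready[j], machine_ready[machine])
        job_ready[j] = machine_ready[machine] = start + duration
        next_op[j] += 1
        remaining -= 1

    return max(job_ready)


def optimality_gap(makespan: float, reference: float) -> float:
    """Percentage by which a makespan exceeds a reference (optimal or best-known) makespan."""
    return 100.0 * (makespan - reference) / reference


if __name__ == "__main__":
    # Hypothetical 2-job, 2-machine instance.
    toy_jobs = [[(0, 3), (1, 2)], [(1, 2), (0, 4)]]
    makespan = spt_dispatch(toy_jobs, num_machines=2)
    print("makespan:", makespan)
    print("gap vs. reference 7:", optimality_gap(makespan, 7))
```

A learned dispatching rule replaces the fixed `min(...)` selection with a policy that scores the candidate operations. The average optimality gap reported above is presumably this kind of percentage, averaged over the benchmark instances.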
