Yutao Feng

ElastoGen: 4D Generative Elastodynamics

May 23, 2024

Abstract: We present ElastoGen, a knowledge-driven model that generates physically accurate and coherent 4D elastodynamics. Instead of relying on petabyte-scale data-driven learning, ElastoGen leverages the principles of physics-in-the-loop and learns from established physical knowledge, such as partial differential equations and their numerical solutions. The core idea of ElastoGen is converting the global differential operator, corresponding to the nonlinear elastodynamic equations, into iterative local convolution-like operations, which naturally fit modern neural networks. Each network module is specifically designed to support this goal rather than functioning as a black box. As a result, ElastoGen is exceptionally lightweight in terms of both training requirements and network scale. Additionally, due to its alignment with physical procedures, ElastoGen efficiently generates accurate dynamics for a wide range of hyperelastic materials and can be easily integrated with upstream and downstream deep modules to enable end-to-end 4D generation.

VR-GS: A Physical Dynamics-Aware Interactive Gaussian Splatting System in Virtual Reality

Jan 30, 2024

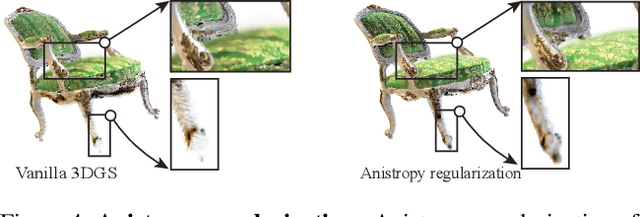

Abstract: As consumer Virtual Reality (VR) and Mixed Reality (MR) technologies gain momentum, there's a growing focus on the development of engagements with 3D virtual content. Unfortunately, traditional techniques for content creation, editing, and interaction within these virtual spaces are fraught with difficulties. They tend to be not only engineering-intensive but also require extensive expertise, which adds to the frustration and inefficiency in virtual object manipulation. Our proposed VR-GS system represents a leap forward in human-centered 3D content interaction, offering a seamless and intuitive user experience. By developing a physical dynamics-aware interactive Gaussian Splatting in a Virtual Reality setting, and constructing a highly efficient two-level embedding strategy alongside deformable body simulations, VR-GS ensures real-time execution with highly realistic dynamic responses. The components of our Virtual Reality system are designed for high efficiency and effectiveness, starting from detailed scene reconstruction and object segmentation, advancing through multi-view image in-painting, and extending to interactive physics-based editing. The system also incorporates real-time deformation embedding and dynamic shadow casting, ensuring a comprehensive and engaging virtual experience. Our project page is available at: https://yingjiang96.github.io/VR-GS/.

Gaussian Splashing: Dynamic Fluid Synthesis with Gaussian Splatting

Jan 27, 2024

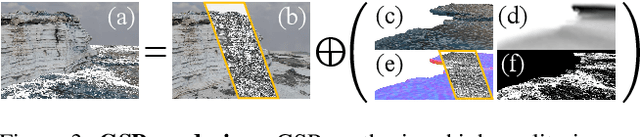

Abstract: We demonstrate the feasibility of integrating physics-based animations of solids and fluids with 3D Gaussian Splatting (3DGS) to create novel effects in virtual scenes reconstructed using 3DGS. Leveraging the coherence of the Gaussian splatting and position-based dynamics (PBD) in the underlying representation, we manage rendering, view synthesis, and the dynamics of solids and fluids in a cohesive manner. Similar to Gaussian shader, we enhance each Gaussian kernel with an added normal, aligning the kernel's orientation with the surface normal to refine the PBD simulation. This approach effectively eliminates spiky noises that arise from rotational deformation in solids. It also allows us to integrate physically based rendering to augment the dynamic surface reflections on fluids. Consequently, our framework is capable of realistically reproducing surface highlights on dynamic fluids and facilitating interactions between scene objects and fluids from new views. For more information, please visit our project page at https://amysteriouscat.github.io/GaussianSplashing/.

PhysGaussian: Physics-Integrated 3D Gaussians for Generative Dynamics

Nov 22, 2023

Abstract: We introduce PhysGaussian, a new method that seamlessly integrates physically grounded Newtonian dynamics within 3D Gaussians to achieve high-quality novel motion synthesis. Employing a custom Material Point Method (MPM), our approach enriches 3D Gaussian kernels with physically meaningful kinematic deformation and mechanical stress attributes, all evolved in line with continuum mechanics principles. A defining characteristic of our method is the seamless integration between physical simulation and visual rendering: both components utilize the same 3D Gaussian kernels as their discrete representations. This negates the necessity for triangle/tetrahedron meshing, marching cubes, "cage meshes," or any other geometry embedding, highlighting the principle of "what you see is what you simulate (WS$^2$)." Our method demonstrates exceptional versatility across a wide variety of materials--including elastic entities, metals, non-Newtonian fluids, and granular materials--showcasing its strong capabilities in creating diverse visual content with novel viewpoints and movements. Our project page is at: https://xpandora.github.io/PhysGaussian/

PIE-NeRF: Physics-based Interactive Elastodynamics with NeRF

Nov 22, 2023

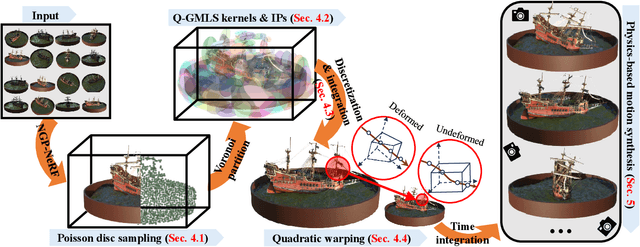

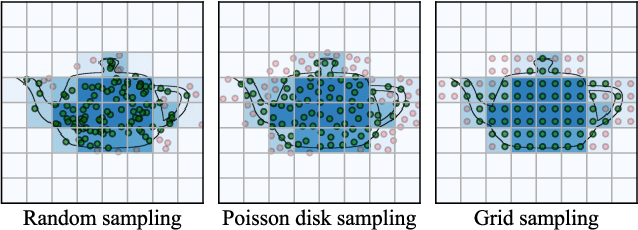

Abstract: We show that physics-based simulations can be seamlessly integrated with NeRF to generate high-quality elastodynamics of real-world objects. Unlike existing methods, we discretize nonlinear hyperelasticity in a meshless way, obviating the necessity for intermediate auxiliary shape proxies like a tetrahedral mesh or voxel grid. A quadratic generalized moving least square (Q-GMLS) is employed to capture nonlinear dynamics and large deformation on the implicit model. Such meshless integration enables versatile simulations of complex and codimensional shapes. We adaptively place the least-square kernels according to the NeRF density field to significantly reduce the complexity of the nonlinear simulation. As a result, physically realistic animations can be conveniently synthesized using our method for a wide range of hyperelastic materials at an interactive rate. For more information, please visit our project page at https://fytalon.github.io/pienerf/.

Sentence Simplification via Large Language Models

Feb 23, 2023

Abstract: Sentence simplification aims to rephrase complex sentences into simpler ones while retaining the original meaning. Large language models (LLMs) have demonstrated the ability to perform a variety of natural language processing tasks. However, it is not yet known whether LLMs can serve as a high-quality sentence simplification system. In this work, we empirically analyze the zero-/few-shot learning ability of LLMs by evaluating them on a number of benchmark test sets. Experimental results show that LLMs outperform state-of-the-art sentence simplification methods and are judged to be on a par with human annotators.
