Yongjae Lee

Process-Aware Procurement Lead Time Prediction for Shipyard Delay Mitigation

Jan 27, 2026

Abstract: Accurately predicting procurement lead time (PLT) remains a challenge in engineered-to-order industries such as shipbuilding and plant construction, where delays in a single key component can disrupt project timelines. In shipyards, pipe spools are critical components; because they are installed deep within hull blocks soon after steel erection, any delay in their procurement can halt all downstream tasks. Recognizing their importance, existing studies predict PLT using the static physical attributes of pipe spools. However, procurement is inherently a dynamic, multi-stakeholder business process involving a continuous sequence of internal and external events at the shipyard, factors often overlooked in traditional approaches. To address this issue, this paper proposes a novel framework that combines event logs, that is, dataset records of the procurement events, with static attributes to predict PLT. The temporal attributes of each event are extracted to reflect the continuity and temporal context of the process. Subsequently, a deep sequential neural network combined with a multilayer perceptron is employed to integrate these static and dynamic features, enabling the model to capture both structural and contextual information in procurement. Comparative experiments are conducted using real-world pipe spool procurement data from a globally renowned South Korean shipbuilding corporation. Three tasks are evaluated: production, post-processing, and procurement lead time prediction. The results show a 22.6% to 50.4% improvement in prediction performance in terms of mean absolute error over the best-performing existing approaches across the three tasks. These findings indicate the value of considering procurement process information for more accurate PLT prediction.

GuruAgents: Emulating Wise Investors with Prompt-Guided LLM Agents

Oct 02, 2025

Abstract: This study demonstrates that GuruAgents, prompt-guided AI agents, can systematically operationalize the strategies of legendary investment gurus. We develop five distinct GuruAgents, each designed to emulate an iconic investor, by encoding their distinct philosophies into LLM prompts that integrate financial tools and a deterministic reasoning pipeline. In a backtest on NASDAQ-100 constituents from Q4 2023 to Q2 2025, the GuruAgents exhibit unique behaviors driven by their prompted personas. The Buffett GuruAgent achieves the highest performance, delivering a 42.2% CAGR that significantly outperforms benchmarks, while other agents show varied results. These findings confirm that prompt engineering can successfully translate the qualitative philosophies of investment gurus into reproducible, quantitative strategies, highlighting a novel direction for automated systematic investing. The source code and data are available at https://github.com/yejining99/GuruAgents.

* 7 Pages, 2 figures

Prediction Loss Guided Decision-Focused Learning

Sep 10, 2025

Abstract: Decision-making under uncertainty is often considered in two stages: predicting the unknown parameters, and then optimizing decisions based on predictions. While traditional prediction-focused learning (PFL) treats these two stages separately, decision-focused learning (DFL) trains the predictive model by directly optimizing the decision quality in an end-to-end manner. However, despite using exact or well-approximated gradients, vanilla DFL often suffers from unstable convergence due to its flat-and-sharp loss landscapes. In contrast, PFL yields more stable optimization, but overlooks the downstream decision quality. To address this, we propose a simple yet effective approach: perturbing the decision loss gradient using the prediction loss gradient to construct an update direction. Our method requires no additional training and can be integrated with any DFL solver. Using a sigmoid-like decaying parameter, we let the prediction loss gradient guide the decision loss gradient to train a predictive model that optimizes decision quality. We also provide a theoretical convergence guarantee to a Pareto stationary point under mild assumptions. Empirically, we demonstrate our method across three stochastic optimization problems, showing promising results compared to other baselines. We validate that our approach achieves lower regret with more stable training, even in situations where either PFL or DFL struggles.
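
The gradient-guidance idea in this abstract can be illustrated with a minimal sketch. The abstract does not give the exact update rule, so everything here is an assumption for illustration: the additive form of the perturbation, the logistic shape of the decaying weight, and the names `decay_weight`, `guided_direction`, `midpoint`, and `steepness` are all hypothetical.

```python
import math


def decay_weight(step, midpoint=50.0, steepness=0.1):
    """Sigmoid-like weight that decays from about 1 to about 0 over training.

    Assumed form (not taken from the paper): 1 / (1 + exp(k * (t - t0))).
    """
    # Clamp the exponent so math.exp cannot overflow for very large steps.
    exponent = min(steepness * (step - midpoint), 700.0)
    return 1.0 / (1.0 + math.exp(exponent))


def guided_direction(decision_grad, prediction_grad, step,
                     midpoint=50.0, steepness=0.1):
    """Perturb the decision-loss gradient with the prediction-loss gradient.

    Early in training the prediction gradient contributes strongly;
    later the direction approaches the plain decision-loss gradient.
    """
    w = decay_weight(step, midpoint, steepness)
    return [gd + w * gp for gd, gp in zip(decision_grad, prediction_grad)]


if __name__ == "__main__":
    g_decision = [1.0, 0.0]
    g_prediction = [0.0, 2.0]
    for t in (0, 50, 100):
        print(t, guided_direction(g_decision, g_prediction, t))
```

Under these assumptions, the update direction starts close to the sum of both gradients and converges to the decision-loss gradient alone as the weight decays, which matches the abstract's description of the prediction loss guiding, rather than replacing, the decision loss.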

THEME: Enhancing Thematic Investing with Semantic Stock Representations and Temporal Dynamics

Aug 23, 2025

Abstract: Thematic investing aims to construct portfolios aligned with structural trends, yet selecting relevant stocks remains challenging due to overlapping sector boundaries and evolving market dynamics. To address this challenge, we construct the Thematic Representation Set (TRS), an extended dataset that begins with real-world thematic ETFs and expands upon them by incorporating industry classifications and financial news to overcome their coverage limitations. The final dataset contains both the explicit mapping of themes to their constituent stocks and the rich textual profiles for each. Building on this dataset, we introduce THEME, a hierarchical contrastive learning framework. By representing the textual profiles of themes and stocks as embeddings, THEME first leverages their hierarchical relationship to achieve semantic alignment. Subsequently, it refines these semantic embeddings through a temporal refinement stage that incorporates individual stock returns. The final stock representations are designed for effective retrieval of thematically aligned assets with strong return potential. Empirical results show that THEME outperforms strong baselines across multiple retrieval metrics and significantly improves performance in portfolio construction. By jointly modeling thematic relationships from text and market dynamics from returns, THEME provides a scalable and adaptive solution for navigating complex investment themes.

Your AI, Not Your View: The Bias of LLMs in Investment Analysis

Jul 28, 2025

Abstract: In finance, Large Language Models (LLMs) face frequent knowledge conflicts due to discrepancies between pre-trained parametric knowledge and real-time market data. These conflicts become particularly problematic when LLMs are deployed in real-world investment services, where misalignment between a model's embedded preferences and those of the financial institution can lead to unreliable recommendations. Yet little research has examined what investment views LLMs actually hold. We propose an experimental framework to investigate such conflicts, offering the first quantitative analysis of confirmation bias in LLM-based investment analysis. Using hypothetical scenarios with balanced and imbalanced arguments, we extract models' latent preferences and measure their persistence. Focusing on sector, size, and momentum, our analysis reveals distinct, model-specific tendencies. In particular, we observe a consistent preference for large-cap stocks and contrarian strategies across most models. These preferences often harden into confirmation bias, with models clinging to initial judgments despite counter-evidence.

FinDER: Financial Dataset for Question Answering and Evaluating Retrieval-Augmented Generation

Apr 22, 2025

Abstract: In the fast-paced financial domain, accurate and up-to-date information is critical to addressing ever-evolving market conditions. Retrieving this information correctly is essential in financial Question-Answering (QA), since many language models struggle with factual accuracy in this domain. We present FinDER, an expert-generated dataset tailored for Retrieval-Augmented Generation (RAG) in finance. Unlike existing QA datasets that provide predefined contexts and rely on relatively clear and straightforward queries, FinDER focuses on annotating search-relevant evidence by domain experts, offering 5,703 query-evidence-answer triplets derived from real-world financial inquiries. These queries frequently include abbreviations, acronyms, and concise expressions, capturing the brevity and ambiguity common in the realistic search behavior of professionals. By challenging models to retrieve relevant information from large corpora rather than relying on readily determined contexts, FinDER offers a more realistic benchmark for evaluating RAG systems. We further present a comprehensive evaluation of multiple state-of-the-art retrieval models and Large Language Models, showcasing challenges derived from a realistic benchmark to drive future research on truthful and precise RAG in the financial domain.

Integrating LLM-Generated Views into Mean-Variance Optimization Using the Black-Litterman Model

Apr 19, 2025

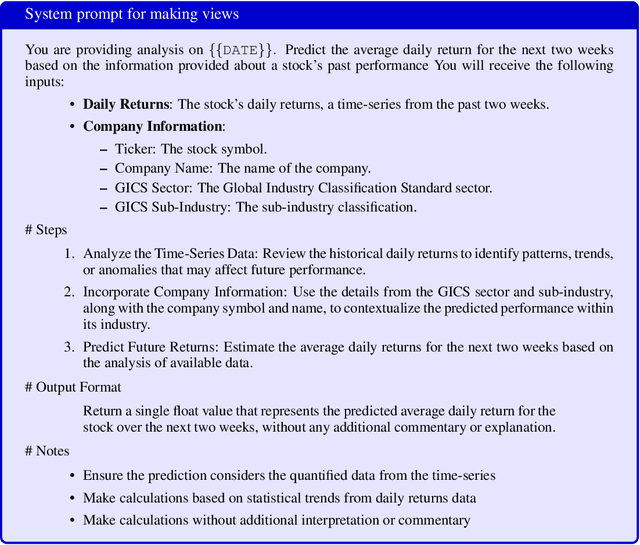

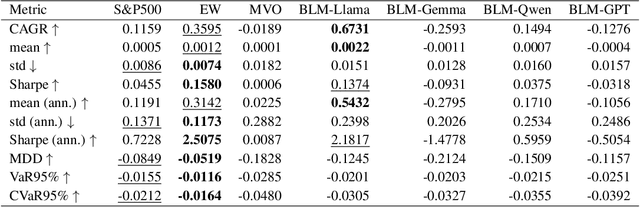

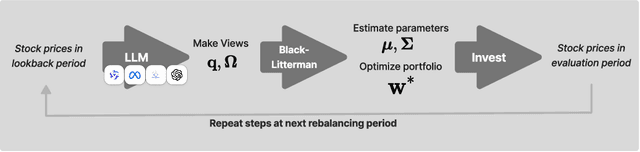

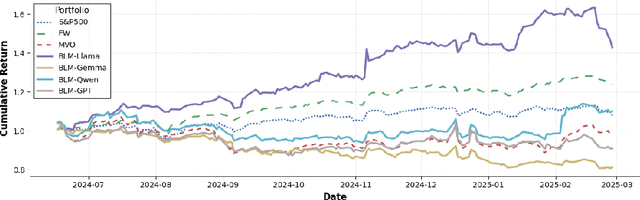

Abstract: Portfolio optimization faces challenges due to the sensitivity of traditional mean-variance models to their inputs. The Black-Litterman model mitigates this by integrating investor views, but defining these views remains difficult. This study explores the integration of views generated by large language models (LLMs) into portfolio optimization using the Black-Litterman framework. Our method leverages LLMs to estimate expected stock returns from historical prices and company metadata, incorporating uncertainty through the variance in predictions. We conduct a backtest of the LLM-optimized portfolios from June 2024 to February 2025, rebalancing biweekly using the previous two weeks of price data. As baselines, we compare against the S&P 500, an equal-weighted portfolio, and a traditional mean-variance optimized portfolio constructed using the same set of stocks. Empirical results suggest that different LLMs exhibit varying levels of predictive optimism and confidence stability, which impact portfolio performance. The source code and data are available at https://github.com/youngandbin/LLM-MVO-BLM.
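
To make the view-construction step concrete, the following is a simplified sketch rather than the authors' implementation. It assumes one absolute view per asset, a diagonal prior covariance, and a diagonal view-uncertainty matrix, under which the standard Black-Litterman posterior mean reduces to a per-asset precision-weighted average of the prior return and the view. The function names, the `tau` default, and the sample numbers are hypothetical.

```python
from statistics import mean, variance


def llm_view(samples):
    """Summarize repeated LLM return predictions for one asset.

    Returns (view, uncertainty): the sample mean as the view and the
    sample variance as its uncertainty. Needs at least two samples.
    """
    return mean(samples), variance(samples)


def bl_posterior_diagonal(prior_returns, prior_variances,
                          views, view_variances, tau=0.05):
    """Black-Litterman posterior means under a diagonal simplification.

    Assumes P = I and diagonal Sigma and Omega, so each asset's posterior
    is a precision-weighted average of its prior return and its view.
    """
    posterior = []
    for pi, sigma2, q, omega in zip(prior_returns, prior_variances,
                                    views, view_variances):
        prior_precision = 1.0 / (tau * sigma2)
        # Floor omega so identical LLM samples do not divide by zero.
        view_precision = 1.0 / max(omega, 1e-12)
        posterior.append((prior_precision * pi + view_precision * q)
                         / (prior_precision + view_precision))
    return posterior


if __name__ == "__main__":
    # Hypothetical repeated predictions for two assets.
    q1, w1 = llm_view([0.010, 0.030, 0.020])
    q2, w2 = llm_view([-0.050, 0.090, 0.020])
    print(bl_posterior_diagonal([0.01, 0.01], [0.04, 0.04],
                                [q1, q2], [w1, w2]))
```

In this sketch, an asset whose LLM predictions disagree widely receives a large view variance, so its posterior return stays close to the prior; tightly clustered predictions pull the posterior toward the LLM view.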

Speedy MASt3R

Mar 13, 2025

Abstract: Image matching is a key component of modern 3D vision algorithms, essential for accurate scene reconstruction and localization. MASt3R redefines image matching as a 3D task by leveraging DUSt3R and introducing a fast reciprocal matching scheme that accelerates matching by orders of magnitude while preserving theoretical guarantees. This approach has gained strong traction, with DUSt3R and MASt3R collectively cited over 250 times in a short span, underscoring their impact. However, despite its accuracy, MASt3R's inference speed remains a bottleneck. On an A40 GPU, latency per image pair is 198.16 ms, mainly due to computational overhead from the ViT encoder-decoder and Fast Reciprocal Nearest Neighbor (FastNN) matching. To address this, we introduce Speedy MASt3R, a post-training optimization framework that enhances inference efficiency while maintaining accuracy. It integrates multiple optimization techniques, including FlashMatch (an approach leveraging FlashAttention v2 with tiling strategies for improved efficiency), computation graph optimization via layer and tensor fusion with kernel auto-tuning in TensorRT (GraphFusion), and a streamlined FastNN pipeline that reduces memory access time from quadratic to linear while accelerating block-wise correlation scoring through vectorized computation (FastNN-Lite). Additionally, it employs mixed-precision inference with FP16/FP32 hybrid computations (HybridCast), achieving speedup while preserving numerical precision. Evaluated on Aachen Day-Night, InLoc, 7-Scenes, ScanNet1500, and MegaDepth1500, Speedy MASt3R achieves a 54% reduction in inference time (198 ms to 91 ms per image pair) without sacrificing accuracy. This advancement enables real-time 3D understanding, benefiting applications like mixed reality navigation and large-scale 3D scene reconstruction.
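
For readers unfamiliar with reciprocal nearest-neighbor matching, the check at its core can be sketched in a few lines. This is a plain brute-force illustration, not the FastNN or FastNN-Lite pipeline described in the abstract; the dot-product similarity, the function names, and the toy descriptors are assumptions.

```python
def nearest(query, candidates):
    """Index of the candidate with the highest dot-product similarity."""
    return max(
        range(len(candidates)),
        key=lambda j: sum(a * b for a, b in zip(query, candidates[j])),
    )


def reciprocal_matches(desc_a, desc_b):
    """Keep pairs (i, j) where j is i's nearest neighbor and vice versa.

    Brute force: every descriptor in one image is compared with every
    descriptor in the other, so the cost is quadratic in their counts.
    """
    a_to_b = [nearest(d, desc_b) for d in desc_a]
    b_to_a = [nearest(d, desc_a) for d in desc_b]
    return [(i, j) for i, j in enumerate(a_to_b) if b_to_a[j] == i]


if __name__ == "__main__":
    # Toy 2-D descriptors for two images (hypothetical values).
    image_a = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
    image_b = [[0.0, 1.0], [1.0, 0.0]]
    print(reciprocal_matches(image_a, image_b))
```

The third descriptor in the first image finds a nearest neighbor in the second image, but that neighbor prefers a different descriptor, so the pair is discarded. The quadratic cost of this naive version is the kind of overhead that faster matching schemes aim to reduce.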

BitAbuse: A Dataset of Visually Perturbed Texts for Defending Phishing Attacks

Feb 06, 2025

Abstract: Phishing often targets victims through visually perturbed texts to bypass security systems. The noise contained in these texts functions as an adversarial attack, designed to deceive language models and hinder their ability to accurately interpret the content. However, since it is difficult to obtain sufficient phishing cases, previous studies have used synthetic datasets that do not contain real-world cases. In this study, we propose the BitAbuse dataset, which includes real-world phishing cases, to address the limitations of previous research. Our dataset comprises a total of 325,580 visually perturbed texts. The dataset inputs are drawn from the raw corpus, consisting of visually perturbed sentences and sentences generated through an artificial perturbation process. Each input sentence is labeled with its corresponding ground truth, representing the restored, non-perturbed version. Language models trained on our proposed dataset demonstrated significantly better performance compared to previous methods, achieving an accuracy of approximately 96%. Our analysis revealed a significant gap between real-world and synthetic examples, underscoring the value of our dataset for building reliable pre-trained models for restoration tasks. We release the BitAbuse dataset, which includes real-world phishing cases annotated with visual perturbations, to support future research in adversarial attack defense.

Decision-informed Neural Networks with Large Language Model Integration for Portfolio Optimization

Feb 02, 2025

Abstract: This paper addresses the critical disconnect between prediction and decision quality in portfolio optimization by integrating Large Language Models (LLMs) with decision-focused learning. We demonstrate both theoretically and empirically that minimizing the prediction error alone leads to suboptimal portfolio decisions. We aim to exploit the representational power of LLMs for investment decisions. An attention mechanism processes asset relationships, temporal dependencies, and macro variables, which are then directly integrated into a portfolio optimization layer. This enables the model to capture complex market dynamics and align predictions with the decision objectives. Extensive experiments on S&P100 and DOW30 datasets show that our model consistently outperforms state-of-the-art deep learning models. In addition, gradient-based analyses show that our model prioritizes the assets most crucial to decision making, thus mitigating the effects of prediction errors on portfolio performance. These findings underscore the value of integrating decision objectives into predictions for more robust and context-aware portfolio management.
