Yitong Zhou

A 28nm 0.22 μJ/token memory-compute-intensity-aware CNN-Transformer accelerator with hybrid-attention-based layer-fusion and cascaded pruning for semantic segmentation

Dec 19, 2025

Abstract: This work presents a 28nm 13.93 mm² CNN-Transformer accelerator for semantic segmentation, achieving 3.86-to-10.91x energy reduction over previous designs. It features a hybrid attention unit, layer-fusion scheduler, and cascaded feature-map pruner, with peak energy efficiency of 52.90 TOPS/W (INT8).

* 3 pages, 7 pages, 2025 IEEE International Solid-State Circuits Conference (ISSCC)

BLADE: A Behavior-Level Data Augmentation Framework with Dual Fusion Modeling for Multi-Behavior Sequential Recommendation

Dec 15, 2025

Abstract: Multi-behavior sequential recommendation aims to capture users' dynamic interests by modeling diverse types of user interactions over time. Although several studies have explored this setting, the recommendation performance remains suboptimal, mainly due to two fundamental challenges: the heterogeneity of user behaviors and data sparsity. To address these challenges, we propose BLADE, a framework that enhances multi-behavior modeling while mitigating data sparsity. Specifically, to handle behavior heterogeneity, we introduce a dual item-behavior fusion architecture that incorporates behavior information at both the input and intermediate levels, enabling preference modeling from multiple perspectives. To mitigate data sparsity, we design three behavior-level data augmentation methods that operate directly on behavior sequences rather than core item sequences. These methods generate diverse augmented views while preserving the semantic consistency of item sequences. These augmented views further enhance representation learning and generalization via contrastive learning. Experiments on three real-world datasets demonstrate the effectiveness of our approach.

Towards Stable and Structured Time Series Generation with Perturbation-Aware Flow Matching

Nov 18, 2025

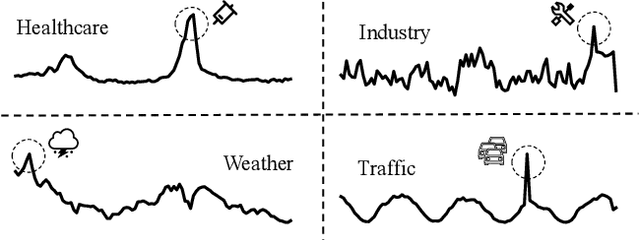

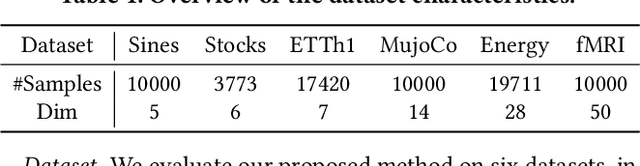

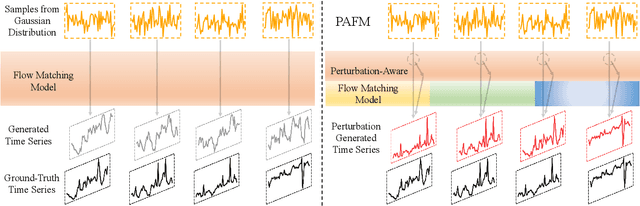

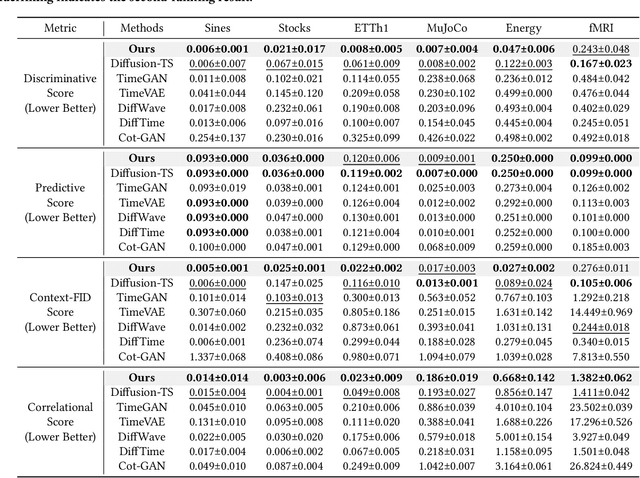

Abstract: Time series generation is critical for a wide range of applications and greatly supports downstream analytical and decision-making tasks. However, the inherent temporal heterogeneity induced by localized perturbations presents significant challenges for generating structurally consistent time series. While flow matching provides a promising paradigm by modeling temporal dynamics through trajectory-level supervision, it fails to adequately capture abrupt transitions in perturbed time series, as the use of globally shared parameters constrains the velocity field to a unified representation. To address these limitations, we introduce PAFM, a Perturbation-Aware Flow Matching framework that models perturbed trajectories to ensure stable and structurally consistent time series generation. The framework incorporates perturbation-guided training to simulate localized disturbances and leverages a dual-path velocity field to capture trajectory deviations under perturbation, enabling refined modeling of perturbed behavior to enhance structural coherence. To further improve sensitivity to trajectory perturbations while enhancing expressiveness, a mixture-of-experts decoder with flow routing dynamically allocates modeling capacity in response to different trajectory dynamics. Extensive experiments on both unconditional and conditional generation tasks demonstrate that PAFM consistently outperforms strong baselines. Code is available at https://anonymous.4open.science/r/PAFM-03B2.
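For orientation, the flow matching paradigm mentioned in the abstract is often written in the following linear-path form. This is a generic sketch, not PAFM's own objective: the abstract does not state which probability path or loss PAFM uses, and the symbols $x_0$ (noise sample), $x_1$ (data sample), $t$, and $v_\theta$ (learned velocity field) are assumptions introduced here.

```latex
\begin{aligned}
x_t &= (1 - t)\,x_0 + t\,x_1, \qquad t \sim \mathcal{U}[0, 1], \\
\mathcal{L}(\theta) &= \mathbb{E}_{t,\,x_0,\,x_1}
  \left\lVert v_\theta(x_t, t) - (x_1 - x_0) \right\rVert^2 .
\end{aligned}
```

In this form a single network $v_\theta$ with shared parameters regresses the straight-line velocity $x_1 - x_0$ at every point of the trajectory, which is the "globally shared parameters" setting the abstract identifies as a limitation for abrupt transitions.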

Benchmarking Multimodal LLMs on Recognition and Understanding over Chemical Tables

Jun 13, 2025

Abstract: Chemical tables encode complex experimental knowledge through symbolic expressions, structured variables, and embedded molecular graphics. Existing benchmarks largely overlook this multimodal and domain-specific complexity, limiting the ability of multimodal large language models to support scientific understanding in chemistry. In this work, we introduce ChemTable, a large-scale benchmark of real-world chemical tables curated from the experimental sections of literature. ChemTable includes expert-annotated cell polygons, logical layouts, and domain-specific labels, including reagents, catalysts, yields, and graphical components, and supports two core tasks: (1) Table Recognition, covering structure parsing and content extraction; and (2) Table Understanding, encompassing both descriptive and reasoning-oriented question answering grounded in table structure and domain semantics. We evaluated a range of representative multimodal models, including both open-source and closed-source models, on ChemTable and reported a series of findings with practical and conceptual insights. Although models show reasonable performance on basic layout parsing, they exhibit substantial limitations on both descriptive and inferential QA tasks compared to human performance, and we observe significant performance gaps between open-source and closed-source models across multiple dimensions. These results underscore the challenges of chemistry-aware table understanding and position ChemTable as a rigorous and realistic benchmark for advancing scientific reasoning.

Time Series Forecasting as Reasoning: A Slow-Thinking Approach with Reinforced LLMs

Jun 12, 2025

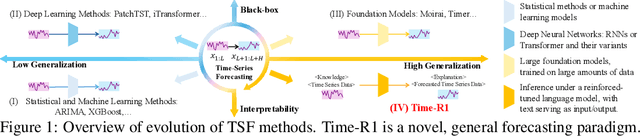

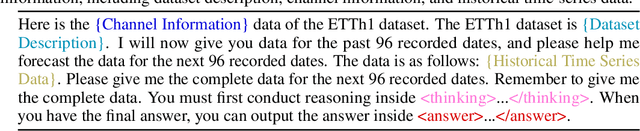

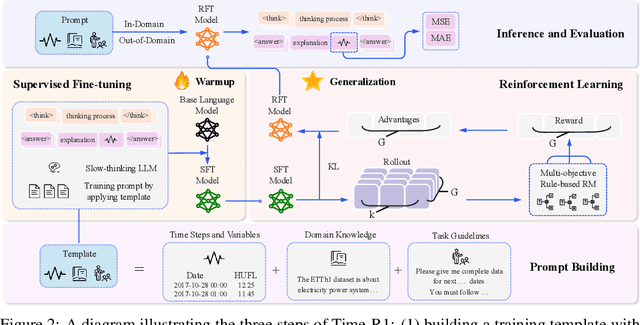

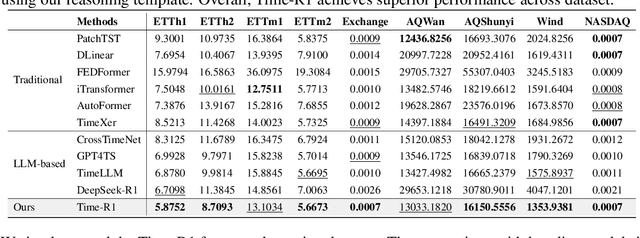

Abstract: To advance time series forecasting (TSF), various methods have been proposed to improve prediction accuracy, evolving from statistical techniques to data-driven deep learning architectures. Despite their effectiveness, most existing methods still adhere to a fast-thinking paradigm, relying on extracting historical patterns and mapping them to future values as their core modeling philosophy and lacking an explicit thinking process that incorporates intermediate time series reasoning. Meanwhile, emerging slow-thinking LLMs (e.g., OpenAI-o1) have shown remarkable multi-step reasoning capabilities, offering an alternative way to overcome these issues. However, prompt engineering alone presents several limitations, including high computational cost, privacy risks, and limited capacity for in-depth domain-specific time series reasoning. To address these limitations, a more promising approach is to train LLMs to develop slow-thinking capabilities and acquire strong time series reasoning skills. For this purpose, we propose Time-R1, a two-stage reinforcement fine-tuning framework designed to enhance the multi-step reasoning ability of LLMs for time series forecasting. Specifically, the first stage conducts supervised fine-tuning for warmup adaptation, while the second stage employs reinforcement learning to improve the model's generalization ability. In particular, we design a fine-grained multi-objective reward specifically for time series forecasting, and then introduce GRIP (group-based relative importance for policy optimization), which leverages non-uniform sampling to further encourage and optimize the model's exploration of effective reasoning paths. Experiments demonstrate that Time-R1 significantly improves forecast performance across diverse datasets.

Enhancing Table Recognition with Vision LLMs: A Benchmark and Neighbor-Guided Toolchain Reasoner

Dec 30, 2024

Abstract: Pre-trained foundation models have recently made significant progress in structured table understanding and reasoning. However, despite advancements in areas such as table semantic understanding and table question answering, recognizing the structure and content of unstructured tables using Vision Large Language Models (VLLMs) remains under-explored. In this work, we address this research gap by employing VLLMs in a training-free reasoning paradigm. First, we design a benchmark with various hierarchical dimensions relevant to table recognition. Subsequently, we conduct in-depth evaluations using pre-trained VLLMs, finding that low-quality image input is a significant bottleneck in the recognition process. Drawing inspiration from these findings, we propose the Neighbor-Guided Toolchain Reasoner (NGTR) framework, which integrates multiple lightweight models for low-level visual processing operations aimed at mitigating issues with low-quality input images. Specifically, we utilize a neighbor retrieval mechanism to guide the generation of multiple tool invocation plans, transferring tool selection experiences from similar neighbors to the given input, thereby facilitating suitable tool selection. Additionally, we introduce a reflection module to supervise the tool invocation process. Extensive experiments on public table recognition datasets demonstrate that our approach significantly enhances the recognition capabilities of the vanilla VLLMs. We believe that the designed benchmark and the proposed NGTR framework could provide an alternative solution in table recognition.

3D Hand Mesh Recovery from Monocular RGB in Camera Space

May 12, 2024

Abstract: With the rapid advancement of technologies such as virtual reality, augmented reality, and gesture control, users expect interactions with computer interfaces to be more natural and intuitive. Existing visual algorithms often struggle to accomplish advanced human-computer interaction tasks, necessitating accurate and reliable absolute spatial prediction methods. Moreover, dealing with complex scenes and occlusions in monocular images poses entirely new challenges. This study proposes a network model that performs parallel processing of root-relative grids and root recovery tasks. The model enables the recovery of 3D hand meshes in camera space from monocular RGB images. To facilitate end-to-end training, we utilize an implicit learning approach for 2D heatmaps, enhancing the compatibility of 2D cues across different subtasks. We incorporate the Inception concept into a spectral graph convolutional network to explore the root-relative mesh, and integrate it with the locally detailed and globally attentive method designed for root recovery exploration. This approach improves the model's predictive performance in complex environments and self-occluded scenes. Through evaluation on the large-scale hand dataset FreiHAND, we have demonstrated that our proposed model is comparable with state-of-the-art models. This study contributes to the advancement of techniques for accurate and reliable absolute spatial prediction in various human-computer interaction applications.

Every Preference Changes Differently: Neural Multi-Interest Preference Model with Temporal Dynamics for Recommendation

Jul 21, 2022

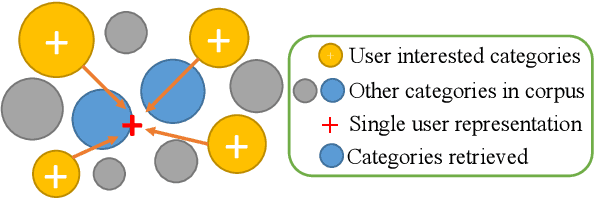

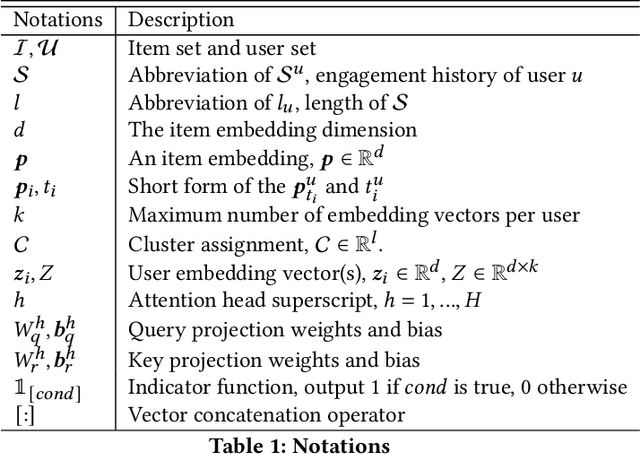

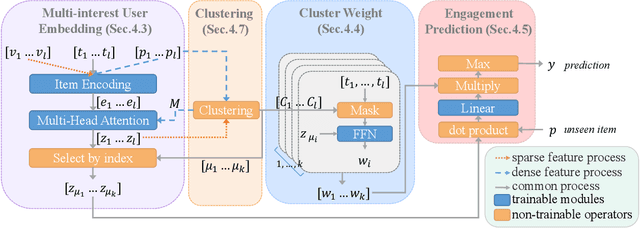

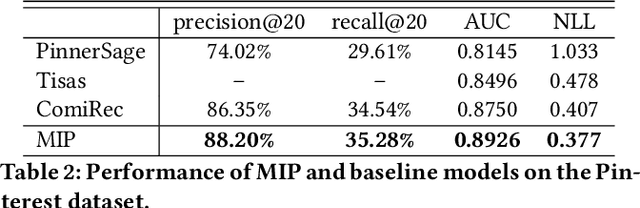

Abstract: User embeddings (vectorized representations of a user) are essential in recommendation systems. Numerous approaches have been proposed to construct a representation for the user in order to find similar items for retrieval tasks, and they have been proven effective in industrial recommendation systems as well. Recently, people have discovered the power of using multiple embeddings to represent a user, with the hope that each embedding represents the user's interest in a certain topic. With multi-interest representation, it's important to model the user's preference over the different topics and how the preference changes with time. However, existing approaches either fail to estimate the user's affinity to each interest or unreasonably assume every interest of every user fades at an equal rate with time, thus hurting the recall of candidate retrieval. In this paper, we propose the Multi-Interest Preference (MIP) model, an approach that not only produces multi-interest for users by using the user's sequential engagement more effectively but also automatically learns a set of weights to represent the preference over each embedding so that the candidates can be retrieved from each interest proportionally. Extensive experiments have been done on various industrial-scale datasets to demonstrate the effectiveness of our approach.
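The phrase "retrieved from each interest proportionally" can be illustrated with a small sketch. It assumes the per-interest preference weights are already available as non-negative numbers and splits a retrieval budget of `k` candidates across interests with largest-remainder rounding. The function name `allocate_budget` and the rounding rule are hypothetical choices made for illustration; the abstract does not describe how MIP performs this step.

```python
def allocate_budget(weights, k):
    """Split k retrieval slots across interests in proportion to their weights.

    Assumption: weights are non-negative preference scores, one per interest
    embedding. Largest-remainder rounding is an illustrative choice.
    """
    total = sum(weights)
    quotas = [k * w / total for w in weights]   # ideal fractional share
    counts = [int(q) for q in quotas]           # floor of each share
    leftover = k - sum(counts)                  # slots lost to flooring
    # Hand the leftover slots to the interests with the largest remainders.
    by_remainder = sorted(
        range(len(weights)),
        key=lambda i: quotas[i] - counts[i],
        reverse=True,
    )
    for i in by_remainder[:leftover]:
        counts[i] += 1
    return counts


if __name__ == "__main__":
    # Three interests with made-up weights and a budget of 10 candidates.
    print(allocate_budget([0.5, 0.3, 0.2], 10))
```

Each interest embedding would then be used to fetch its allotted number of nearest-neighbor candidates, so that stronger interests contribute more of the final candidate set.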

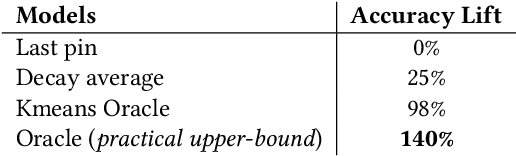

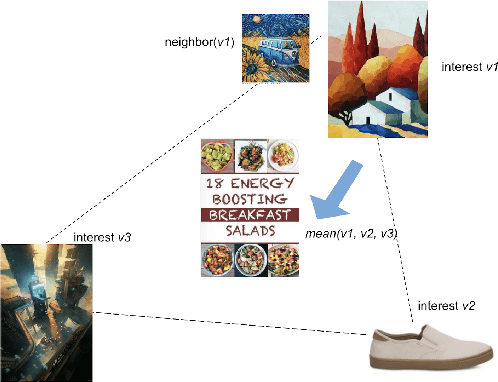

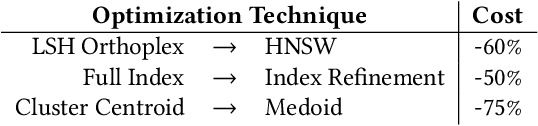

PinnerSage: Multi-Modal User Embedding Framework for Recommendations at Pinterest

Jul 07, 2020

Abstract: Latent user representations are widely adopted in the tech industry for powering personalized recommender systems. Most prior work infers a single high dimensional embedding to represent a user, which is a good starting point but falls short in delivering a full understanding of the user's interests. In this work, we introduce PinnerSage, an end-to-end recommender system that represents each user via multi-modal embeddings and leverages this rich representation of users to provide high quality personalized recommendations. PinnerSage achieves this by clustering users' actions into conceptually coherent clusters with the help of a hierarchical clustering method (Ward) and summarizes the clusters via representative pins (Medoids) for efficiency and interpretability. PinnerSage is deployed in production at Pinterest and we outline the several design decisions that make it run seamlessly at a very large scale. We conduct several offline and online A/B experiments to show that our method significantly outperforms single embedding methods.
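The two steps named in the abstract, Ward hierarchical clustering followed by medoid selection, can be sketched on toy data as follows. This is a minimal illustration, not PinnerSage's production code: the stopping threshold `max_cost`, the use of squared Euclidean distance for the medoid, and all function names are assumptions introduced here.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def centroid(points):
    """Coordinate-wise mean of a list of points."""
    n = len(points)
    return [sum(col) / n for col in zip(*points)]


def ward_cost(a, b):
    """Increase in within-cluster sum of squares if clusters a and b merge."""
    na, nb = len(a), len(b)
    return na * nb / (na + nb) * sq_dist(centroid(a), centroid(b))


def ward_clusters(points, max_cost):
    """Greedy agglomerative clustering with Ward's merge criterion.

    Merging stops once the cheapest merge would cost more than max_cost
    (a hypothetical stopping rule chosen for this sketch).
    """
    clusters = [[p] for p in points]
    while len(clusters) > 1:
        cost, i, j = min(
            (ward_cost(clusters[i], clusters[j]), i, j)
            for i in range(len(clusters))
            for j in range(i + 1, len(clusters))
        )
        if cost > max_cost:
            break
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


def medoid(cluster):
    """Cluster member with the smallest total squared distance to the others."""
    return min(cluster, key=lambda p: sum(sq_dist(p, q) for q in cluster))


if __name__ == "__main__":
    # Toy 2-D "action embeddings" forming two well-separated groups.
    actions = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    for cluster in ward_clusters(actions, max_cost=5.0):
        print(medoid(cluster), cluster)
```

Unlike a centroid, a medoid is always an actual member of the cluster, which fits the abstract's point that representative pins aid interpretability.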

* 10 pages, 7 figures
