Yingyong Qi

SEMA: a Scalable and Efficient Mamba like Attention via Token Localization and Averaging

Jun 10, 2025

Abstract:Attention is the critical component of a transformer. Yet the quadratic computational complexity of vanilla full attention in the input size and the inability of its linear attention variant to focus have been challenges for computer vision tasks. We provide a mathematical definition of generalized attention and formulate both vanilla softmax attention and linear attention within the general framework. We prove that generalized attention disperses, that is, as the number of keys tends to infinity, the query assigns equal weights to all keys. Motivated by the dispersion property and the recent development of the Mamba form of attention, we design Scalable and Efficient Mamba like Attention (SEMA), which utilizes token localization to avoid dispersion and maintain focusing, complemented by theoretically consistent arithmetic averaging to capture the global aspect of attention. We validate our approach on ImageNet-1K, where classification results show that SEMA is a scalable and effective alternative beyond linear attention, outperforming recent vision Mamba models on increasingly larger scales of images at similar model parameter sizes.
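The dispersion property stated in the abstract can be illustrated numerically for the softmax case. The sketch below is not taken from the paper: it assumes the query-key scores are bounded in `[-bound, bound]` and drawn at random, and the function names are hypothetical. Under that assumption the largest softmax weight is at most `exp(2 * bound) / n`, so it shrinks toward the uniform value `1 / n` as the number of keys `n` grows.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def max_attention_weight(num_keys, bound=1.0, seed=0):
    """Largest softmax weight for one query over num_keys keys.

    Assumption (not from the paper): scores are i.i.d. uniform
    in [-bound, bound], i.e. bounded independently of num_keys.
    """
    rng = random.Random(seed)
    scores = [rng.uniform(-bound, bound) for _ in range(num_keys)]
    return max(softmax(scores))


if __name__ == "__main__":
    for n in (10, 100, 1000, 10000):
        # The printed weight decays roughly like 1/n.
        print(n, max_attention_weight(n))
```

This only covers softmax attention with bounded scores; the abstract's claim concerns the more general definition of attention given in the paper.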

AFIDAF: Alternating Fourier and Image Domain Adaptive Filters as an Efficient Alternative to Attention in ViTs

Jul 16, 2024

Abstract:We propose and demonstrate an alternating Fourier and image domain filtering approach for feature extraction as an efficient alternative for building a vision backbone without using computationally intensive attention. Among lightweight models, the performance reaches the state-of-the-art level on ImageNet-1K classification and consistently improves the downstream tasks of object detection and segmentation as well. Our approach also serves as a new tool to compress vision transformers (ViTs).

A Proximal Algorithm for Network Slimming

Jul 02, 2023

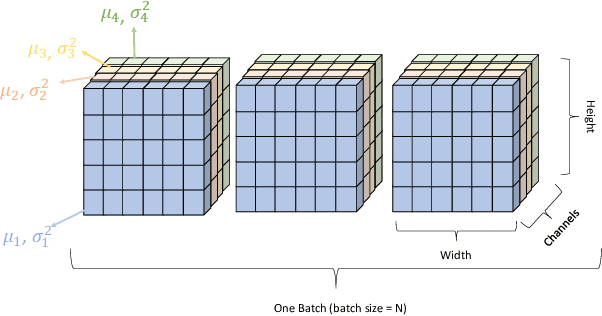

Abstract:As a popular channel pruning method for convolutional neural networks (CNNs), network slimming (NS) has a three-stage process: (1) it trains a CNN with $\ell_1$ regularization applied to the scaling factors of the batch normalization layers; (2) it removes channels whose scaling factors are below a chosen threshold; and (3) it retrains the pruned model to recover the original accuracy. This time-consuming, three-step process is a result of using subgradient descent to train CNNs. Because subgradient descent does not exactly train CNNs towards sparse, accurate structures, the latter two steps are necessary. Moreover, subgradient descent does not have any convergence guarantee. Therefore, we develop an alternative algorithm called proximal NS. Our proposed algorithm trains CNNs towards sparse, accurate structures, so identifying a scaling factor threshold is unnecessary and fine-tuning the pruned CNNs is optional. Using Kurdyka-Łojasiewicz assumptions, we establish global convergence of proximal NS. Lastly, we validate the efficacy of the proposed algorithm on VGGNet, DenseNet and ResNet on CIFAR 10/100. Our experiments demonstrate that after one round of training, proximal NS yields a CNN with competitive accuracy and compression.
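To make the contrast with subgradient descent concrete, here is a minimal sketch of the standard proximal operator of the $\ell_1$ penalty (soft thresholding) applied to a list of scaling factors. It is an illustration under assumptions, not the paper's algorithm: the names `soft_threshold` and `proximal_step`, the plain gradient step, and the parameters `lr` and `lam` are all hypothetical. The point it shows is that a proximal step sets small scaling factors exactly to zero, which a subgradient step generally does not.

```python
def soft_threshold(x, tau):
    """Proximal operator of tau * |x|: shrink x toward zero by tau."""
    if x > tau:
        return x - tau
    if x < -tau:
        return x + tau
    return 0.0


def proximal_step(gammas, grads, lr, lam):
    """One proximal gradient step on scaling factors (illustrative only).

    gammas: current scaling factors
    grads:  gradients of the smooth loss w.r.t. each factor
    lr:     step size (assumed hyperparameter)
    lam:    l1 regularization weight (assumed hyperparameter)
    """
    return [soft_threshold(g - lr * dg, lr * lam)
            for g, dg in zip(gammas, grads)]


if __name__ == "__main__":
    # The small factor 0.05 is zeroed out; the large one is only shrunk.
    print(proximal_step([0.05, -1.0], [0.0, 0.0], lr=0.1, lam=1.0))
```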

Feature Affinity Assisted Knowledge Distillation and Quantization of Deep Neural Networks on Label-Free Data

Feb 10, 2023

Abstract:In this paper, we propose a feature affinity (FA) assisted knowledge distillation (KD) method to improve quantization-aware training of deep neural networks (DNNs). The FA loss on intermediate feature maps of DNNs plays the role of teaching middle steps of a solution to a student instead of only giving final answers, as in conventional KD where the loss acts on the network logits at the output level. Combining logit loss and FA loss, we found that the quantized student network receives stronger supervision than from the labeled ground-truth data. The resulting FAQD is capable of compressing models on label-free data, which brings immediate practical benefits as pre-trained teacher models are readily available and unlabeled data are abundant. In contrast, data labeling is often laborious and expensive. Finally, we propose a fast feature affinity (FFA) loss that accurately approximates FA loss with a lower order of computational complexity, which helps speed up training for high resolution image input.

Searching Intrinsic Dimensions of Vision Transformers

Apr 16, 2022

Abstract:It has been shown by many researchers that transformers perform as well as convolutional neural networks in many computer vision tasks. Meanwhile, the large computational costs of their attention modules hinder further studies and applications on edge devices. Some pruning methods have been developed to construct efficient vision transformers, but most of them have considered image classification tasks only. Inspired by these results, we propose SiDT, a method for pruning vision transformer backbones on more complicated vision tasks like object detection, based on the search of transformer dimensions. Experiments on CIFAR-100 and COCO datasets show that the backbones with 20% or 40% dimensions/parameters pruned can have similar or even better performance than the unpruned models. Moreover, we have also provided the complexity analysis and comparisons with the previous pruning methods.

Nonconvex Regularization for Network Slimming: Compressing CNNs Even More

Oct 03, 2020

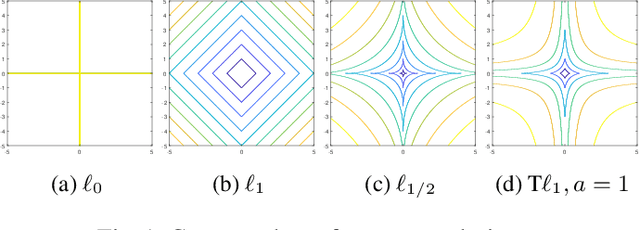

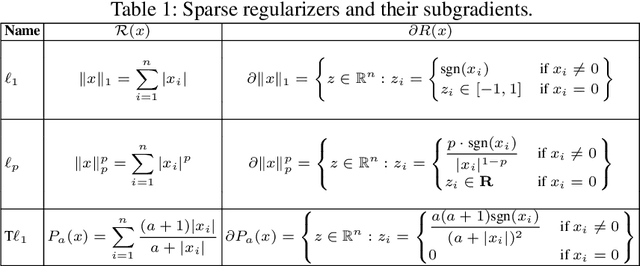

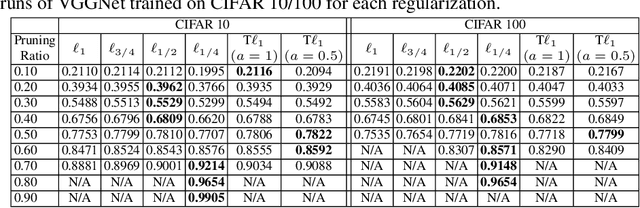

Abstract:In the last decade, convolutional neural networks (CNNs) have evolved to become the dominant models for various computer vision tasks, but they cannot be deployed on low-memory devices due to their high memory requirements and computational cost. One popular, straightforward approach to compressing CNNs is network slimming, which imposes an $\ell_1$ penalty on the channel-associated scaling factors in the batch normalization layers during training. In this way, channels with low scaling factors are identified as insignificant and are pruned from the models. In this paper, we propose replacing the $\ell_1$ penalty with the $\ell_p$ and transformed $\ell_1$ (T$\ell_1$) penalties since these nonconvex penalties outperformed $\ell_1$ in yielding sparser satisfactory solutions in various compressed sensing problems. In our numerical experiments, we demonstrate network slimming with $\ell_p$ and T$\ell_1$ penalties on VGGNet and DenseNet trained on CIFAR 10/100. The results demonstrate that the nonconvex penalties compress CNNs better than $\ell_1$. In addition, T$\ell_1$ preserves the model accuracy after channel pruning, and $\ell_{1/2, 3/4}$ yield compressed models with similar accuracies as $\ell_1$ after retraining.
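For readers unfamiliar with the penalties named above, the sketch below evaluates them on a vector of scaling factors. It uses the commonly cited forms, which are an assumption here since the abstract does not spell them out: $\sum_i |x_i|^p$ with $0 < p < 1$ for $\ell_p$, and $\sum_i (a+1)|x_i| / (a + |x_i|)$ with $a > 0$ for transformed $\ell_1$. Function names are hypothetical.

```python
def l1_penalty(x):
    """Sum of absolute values."""
    return sum(abs(v) for v in x)


def lp_penalty(x, p):
    """Sum of |x_i|^p; nonconvex for 0 < p < 1 (assumed form)."""
    return sum(abs(v) ** p for v in x)


def transformed_l1_penalty(x, a):
    """Sum of (a + 1)|x_i| / (a + |x_i|) for a > 0 (assumed form).

    Large a behaves like l1; small a approaches the count of nonzeros.
    """
    return sum((a + 1.0) * abs(v) / (a + abs(v)) for v in x)


if __name__ == "__main__":
    factors = [0.0, 0.5, -2.0]
    print(l1_penalty(factors))
    print(lp_penalty(factors, 0.5))
    print(transformed_l1_penalty(factors, 1.0))
```

The parameter `a` interpolates between the two extremes, which is one way to see why such a penalty can promote sparser solutions than $\ell_1$.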

RARTS: a Relaxed Architecture Search Method

Aug 10, 2020

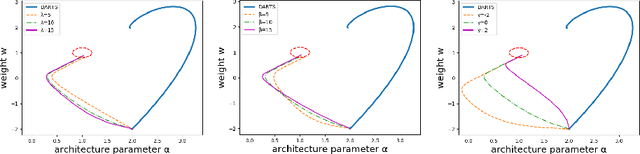

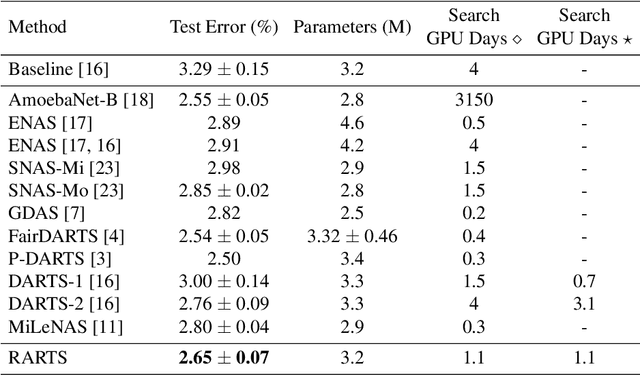

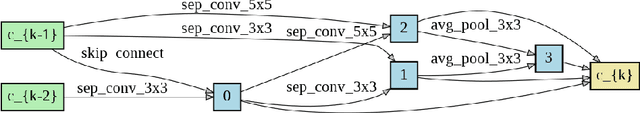

Abstract:Differentiable architecture search (DARTS) is an effective method for data-driven neural network design based on solving a bilevel optimization problem. In this paper, we formulate a single level alternative and a relaxed architecture search (RARTS) method that utilizes training and validation datasets in architecture learning without involving mixed second derivatives of the corresponding loss functions. Through weight/architecture variable splitting and Gauss-Seidel iterations, the core algorithm outperforms DARTS significantly in accuracy and search efficiency, as shown in both a solvable model and CIFAR-10 based architecture search. Our model continues to outperform DARTS upon transfer to ImageNet and is on par with recent variants of DARTS even though our innovation is purely on the training algorithm.

TRP: Trained Rank Pruning for Efficient Deep Neural Networks

Apr 30, 2020

Abstract:To enable DNNs on edge devices like mobile phones, low-rank approximation has been widely adopted because of its solid theoretical rationale and efficient implementations. Several previous works attempted to directly approximate a pretrained model by low-rank decomposition; however, small approximation errors in parameters can ripple into a large prediction loss. As a result, performance usually drops significantly and a sophisticated fine-tuning effort is required to recover accuracy. Apparently, it is not optimal to separate low-rank approximation from training. Unlike previous works, this paper integrates low-rank approximation and regularization into the training process. We propose Trained Rank Pruning (TRP), which alternates between low-rank approximation and training. TRP maintains the capacity of the original network while imposing low-rank constraints during training. A nuclear regularization optimized by stochastic sub-gradient descent is utilized to further promote low rank in TRP. The TRP trained network inherently has a low-rank structure, and is approximated with negligible performance loss, thus eliminating the fine-tuning process after low-rank decomposition. The proposed method is comprehensively evaluated on CIFAR-10 and ImageNet, outperforming previous compression methods using low-rank approximation.
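The two building blocks the abstract mentions, low-rank approximation and the nuclear norm, can be sketched with NumPy as follows. This is a generic illustration using truncated SVD on a plain 2-D weight matrix; the function names are hypothetical, and how TRP reshapes convolutional weights, picks the rank, or schedules the alternation with training is not described in the abstract and is not modeled here.

```python
import numpy as np


def low_rank_approx(weight, rank):
    """Best rank-`rank` approximation in Frobenius norm via truncated SVD."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    # Keep only the leading `rank` singular triplets.
    return (u[:, :rank] * s[:rank]) @ vt[:rank, :]


def nuclear_norm(weight):
    """Sum of singular values; a convex surrogate that promotes low rank."""
    return float(np.linalg.svd(weight, compute_uv=False).sum())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.standard_normal((6, 4))
    w2 = low_rank_approx(w, 2)
    print(np.linalg.matrix_rank(w2), nuclear_norm(w), nuclear_norm(w2))
```

In an alternating scheme of the kind the abstract describes, a projection like `low_rank_approx` would be interleaved with ordinary training steps rather than applied once to a pretrained model.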

$\ell_0$ Regularized Structured Sparsity Convolutional Neural Networks

Dec 17, 2019

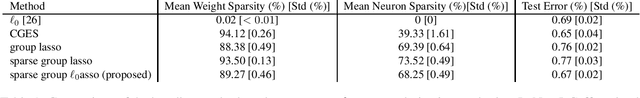

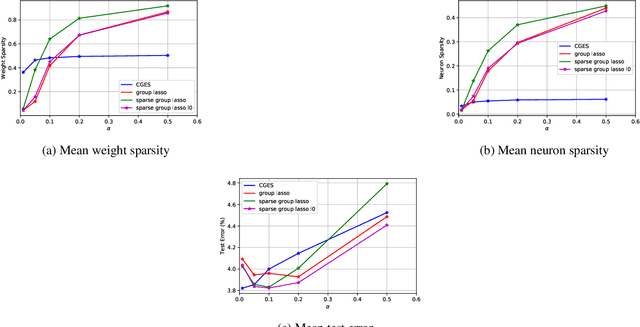

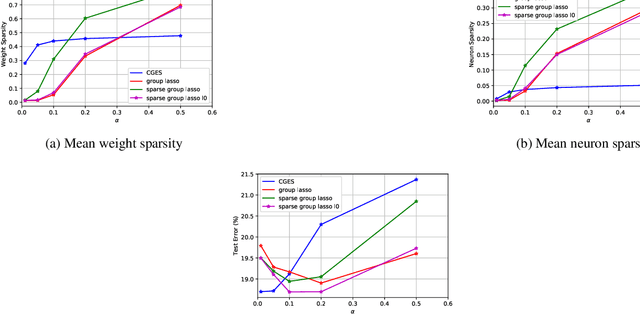

Abstract:Deepening and widening convolutional neural networks (CNNs) significantly increases the number of trainable weight parameters by adding more convolutional layers and feature maps per layer, respectively. By imposing inter- and intra-group sparsity onto the weights of the layers during the training process, a compressed network can be obtained with accuracy comparable to a dense one. In this paper, we propose a new variant of sparse group lasso that blends the $\ell_0$ norm onto the individual weight parameters and the $\ell_{2,1}$ norm onto the output channels of a layer. To address the non-differentiability of the $\ell_0$ norm, we apply variable splitting resulting in an algorithm that consists of executing stochastic gradient descent followed by hard thresholding for each iteration. Numerical experiments are demonstrated on LeNet-5 and wide-residual-networks for MNIST and CIFAR 10/100, respectively. They showcase the effectiveness of our proposed method in attaining superior test accuracy with network sparsification on par with the current state of the art.
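The hard thresholding step mentioned above can be sketched as the proximal operator of an $\ell_0$ term coupled to the weights by a quadratic penalty. This is a standard construction rather than the paper's exact update: minimizing $\lambda \cdot \mathbf{1}[u \neq 0] + \tfrac{\beta}{2}(u - w)^2$ over $u$ keeps $w$ when $|w| > \sqrt{2\lambda/\beta}$ and returns zero otherwise. The names `lam` and `beta` and the cutoff constant are assumptions for illustration.

```python
import math


def hard_threshold(weights, lam, beta):
    """Zero out entries with |w| <= sqrt(2 * lam / beta); keep the rest.

    lam:  weight of the l0 term (assumed hyperparameter)
    beta: weight of the quadratic coupling from variable splitting
          (assumed hyperparameter)
    """
    cutoff = math.sqrt(2.0 * lam / beta)
    return [w if abs(w) > cutoff else 0.0 for w in weights]


if __name__ == "__main__":
    # With lam=0.08 and beta=1.0 the cutoff is about 0.4.
    print(hard_threshold([0.1, -0.5, 2.0], lam=0.08, beta=1.0))
```

Unlike soft thresholding, surviving weights are left unshrunk, so the step sparsifies without biasing the retained values.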

Weakly-Supervised Degree of Eye-Closeness Estimation

Oct 24, 2019

Abstract:Following recent technological advances, there is a growing interest in building non-intrusive methods that help us communicate with computing devices. In this regard, accurate information from the eye is a promising input medium between a user and computing devices. In this paper, we propose a method that captures the degree of eye closeness. Although many methods exist for detection of eyelid openness, they are inherently unable to perform satisfactorily in real-world applications. For extracting meaningful information, detailed eye state estimation is more important than estimating whether eyes are open or closed. However, learning a reliable eye state estimator requires accurate annotations, which are cost prohibitive. In this work, we leverage synthetic face images, which can be generated via computer graphics rendering techniques and automatically annotated with different levels of eye openness. These synthesized training images, however, have a domain shift from real-world data. To alleviate this issue, we propose a weakly-supervised method which utilizes the accurate annotation from the synthetic data set to learn an accurate degree of eye openness, and the weakly labeled (open or closed) real-world eye data set to control the domain shift. We introduce a data set of 1.3M synthetic face images with detailed eye openness and eye gaze information, and 21k real-world images with open/closed annotation. The dataset will be released online upon acceptance. Extensive experiments validate the effectiveness of the proposed approach.
