Yi Sheng

Beyond One-Way Pruning: Bidirectional Pruning-Regrowth for Extreme Accuracy-Sparsity Tradeoff

Nov 11, 2025

Abstract: As a widely adopted model compression technique, model pruning has demonstrated strong effectiveness across various architectures. However, we observe that when sparsity exceeds a certain threshold, both iterative and one-shot pruning methods lead to a steep decline in model performance. This rapid degradation limits the achievable compression ratio and prevents models from meeting the stringent size constraints required by certain hardware platforms, rendering them inoperable. To overcome this limitation, we propose a bidirectional pruning-regrowth strategy. Starting from an extremely compressed network that satisfies hardware constraints, the method selectively regenerates critical connections to recover lost performance, effectively mitigating the sharp accuracy drop commonly observed under high sparsity conditions.
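The prune-then-regrow idea described in this abstract can be illustrated with a minimal sketch. This is not the authors' method: the abstract does not say how "critical" connections are chosen, so the importance scores, the `hardware_budget` value, and the function names `prune_to_sparsity` and `regrow` are all hypothetical, and random numbers stand in for real weights and importance estimates.

```python
import random


def prune_to_sparsity(weights, sparsity):
    """Keep only the largest-magnitude weights; return a boolean keep-mask."""
    n_keep = round(len(weights) * (1.0 - sparsity))
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(ranked[:n_keep])
    return [i in keep for i in range(len(weights))]


def regrow(mask, scores, n_regrow):
    """Re-enable the n_regrow pruned connections with the highest scores."""
    pruned = [i for i, kept in enumerate(mask) if not kept]
    pruned.sort(key=lambda i: scores[i], reverse=True)
    revived = set(pruned[:max(n_regrow, 0)])
    # Connections that survived pruning are never removed here.
    return [kept or (i in revived) for i, kept in enumerate(mask)]


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(64)]
# Assumption: a per-connection importance score (e.g. a gradient magnitude).
scores = [abs(random.gauss(0.0, 1.0)) for _ in range(64)]

# Assumption: the device tolerates at most 6 nonzero weights.
hardware_budget = 6

# Step 1: compress aggressively (95% sparsity) so the constraint is met.
mask = prune_to_sparsity(weights, sparsity=0.95)

# Step 2: regrow the highest-scoring pruned connections up to the budget.
final_mask = regrow(mask, scores, hardware_budget - sum(mask))
```

In a real setting the scores would come from training signals and the regrown weights would be fine-tuned; the sketch only shows the two-directional mask update.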

A Novel Diffusion Model for Pairwise Geoscience Data Generation with Unbalanced Training Dataset

Jan 01, 2025

Abstract: Recently, the advent of generative AI technologies has made transformational impacts on our daily lives, yet its use in scientific applications remains in its early stages. Data scarcity is a major, well-known barrier in data-driven scientific computing, so physics-guided generative AI holds significant promise. In scientific computing, most tasks study the conversion of multiple data modalities to describe physical phenomena, for example, spatial and waveform in seismic imaging, time and frequency in signal processing, and temporal and spectral in climate modeling; as such, multi-modal pairwise data generation is needed instead of the single-modal data generation usually used for natural images (e.g., faces, scenery). Moreover, in real-world applications, the available data are commonly unbalanced across modalities; for example, the spatial data (i.e., velocity maps) in seismic imaging can be easily simulated, but real-world seismic waveform data are largely lacking. While the most recent efforts enable the powerful diffusion model to generate multi-modal data, how to leverage the unbalanced available data is still unclear. In this work, we use seismic imaging in subsurface geophysics as a vehicle to present "UB-Diff", a novel diffusion model for multi-modal paired scientific data generation. One major innovation is a one-in-two-out encoder-decoder network structure, which can ensure pairwise data is obtained from a co-latent representation. Then, the co-latent representation will be used by the diffusion process for pairwise data generation. Experimental results on the OpenFWI dataset show that UB-Diff significantly outperforms existing techniques in terms of Fréchet Inception Distance (FID) score and pairwise evaluation, indicating the generation of reliable and useful multi-modal pairwise data.

Data-Algorithm-Architecture Co-Optimization for Fair Neural Networks on Skin Lesion Dataset

Jul 18, 2024

Abstract: As Artificial Intelligence (AI) increasingly integrates into our daily lives, fairness has emerged as a critical concern, particularly in medical AI, where datasets often reflect inherent biases due to social factors like the underrepresentation of marginalized communities and socioeconomic barriers to data collection. Traditional approaches to mitigating these biases have focused on data augmentation and the development of fairness-aware training algorithms. However, this paper argues that the architecture of neural networks, a core component of Machine Learning (ML), plays a crucial role in ensuring fairness. We demonstrate that addressing fairness effectively requires a holistic approach that simultaneously considers data, algorithms, and architecture. Utilizing Automated ML (AutoML) technology, specifically Neural Architecture Search (NAS), we introduce a novel framework, BiaslessNAS, designed to achieve fair outcomes in analyzing skin lesion datasets. BiaslessNAS incorporates fairness considerations at every stage of the NAS process, leading to the identification of neural networks that are not only more accurate but also significantly fairer. Our experiments show that BiaslessNAS achieves a 2.55% increase in accuracy and a 65.50% improvement in fairness compared to traditional NAS methods, underscoring the importance of integrating fairness into neural network architecture for better outcomes in medical AI applications.

APS-USCT: Ultrasound Computed Tomography on Sparse Data via AI-Physic Synergy

Jul 18, 2024

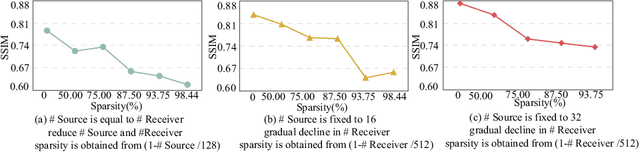

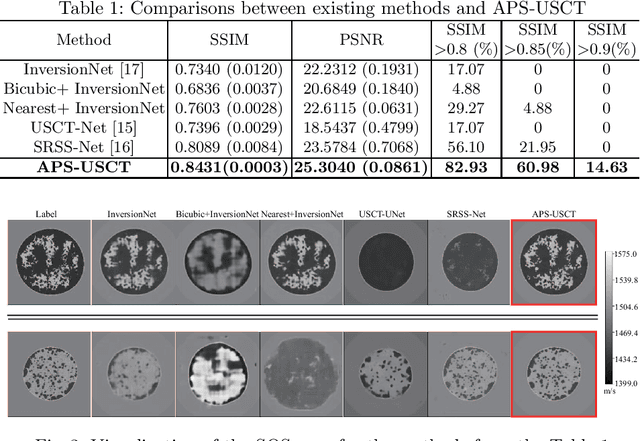

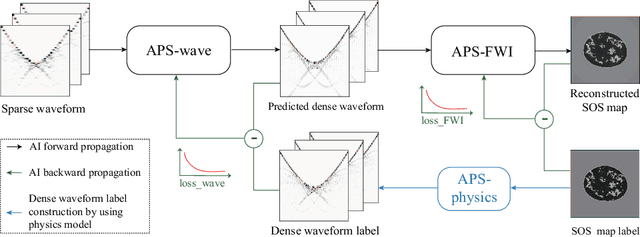

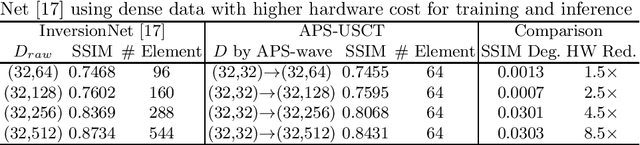

Abstract: Ultrasound computed tomography (USCT) is a promising technique that achieves superior medical imaging reconstruction resolution by fully leveraging waveform information, outperforming conventional ultrasound methods. Despite its advantages, high-quality USCT reconstruction relies on extensive data acquisition by a large number of transducers, leading to increased costs, computational demands, extended patient scanning times, and manufacturing complexities. To mitigate these issues, we propose a new USCT method called APS-USCT, which facilitates imaging with sparse data, substantially reducing dependence on high-cost dense data acquisition. Our APS-USCT method consists of two primary components: APS-wave and APS-FWI. The APS-wave component, an encoder-decoder system, preprocesses the waveform data, converting sparse data into dense waveforms to augment sample density prior to reconstruction. The APS-FWI component, utilizing the InversionNet, directly reconstructs the speed of sound (SOS) from the ultrasound waveform data. We further improve the model's performance by incorporating Squeeze-and-Excitation (SE) Blocks and source encoding techniques. Testing our method on a breast cancer dataset yielded promising results. It demonstrated outstanding performance with an average Structural Similarity Index (SSIM) of 0.8431. Notably, over 82% of samples achieved an SSIM above 0.8, with nearly 61% exceeding 0.85, highlighting the significant potential of our approach in improving USCT image reconstruction by efficiently utilizing sparse data.

PristiQ: A Co-Design Framework for Preserving Data Security of Quantum Learning in the Cloud

Apr 20, 2024

Abstract: Benefiting from cloud computing, today's early-stage quantum computers can be remotely accessed via cloud services, known as Quantum-as-a-Service (QaaS). However, this poses a high risk of data leakage in quantum machine learning (QML). To run a QML model with QaaS, users need to first locally compile their quantum circuits, including the subcircuit for data encoding, and then send the compiled circuit to the QaaS provider for execution. If the QaaS provider is untrustworthy, the subcircuit that encodes the raw data can be easily stolen. Therefore, we propose a co-design framework for preserving the data security of QML with the QaaS paradigm, namely PristiQ. By introducing an encryption subcircuit with extra secure qubits associated with a user-defined security key, the security of data can be greatly enhanced. An automatic search algorithm is also proposed to optimize the model to maintain its performance on the encrypted quantum data. Experimental results on simulation and the actual IBM quantum computer both demonstrate the ability of PristiQ to provide high security for the quantum data while maintaining the model performance in QML.

A Physics-guided Generative AI Toolkit for Geophysical Monitoring

Jan 06, 2024

Abstract: Full-waveform inversion (FWI) plays a vital role in geoscience to explore the subsurface. It utilizes the seismic wave to image the subsurface velocity map. As the machine learning (ML) technique evolves, the data-driven approaches using ML for FWI tasks have emerged, offering enhanced accuracy and reduced computational cost compared to traditional physics-based methods. However, a common challenge in geoscience, the unprivileged data, severely limits ML effectiveness. The issue becomes even worse during model pruning, a step essential in geoscience due to environmental complexities. To tackle this, we introduce the EdGeo toolkit, which employs a diffusion-based model guided by physics principles to generate high-fidelity velocity maps. The toolkit uses the acoustic wave equation to generate corresponding seismic waveform data, facilitating the fine-tuning of pruned ML models. Our results demonstrate significant improvements in SSIM scores and reduction in both MAE and MSE across various pruning ratios. Notably, the ML model fine-tuned using data generated by EdGeo yields superior quality of velocity maps, especially in representing unprivileged features, outperforming other existing methods.

TrojFair: Trojan Fairness Attacks

Dec 16, 2023

Abstract: Deep learning models have been incorporated into high-stakes sectors, including healthcare diagnosis, loan approvals, and candidate recruitment, among others. Consequently, any bias or unfairness in these models can harm those who depend on such models. In response, many algorithms have emerged to ensure fairness in deep learning. However, while the potential for harm is substantial, the resilience of these fair deep learning models against malicious attacks has never been thoroughly explored, especially in the context of emerging Trojan attacks. Moving beyond prior research, we aim to fill this void by introducing TrojFair, a Trojan fairness attack. Unlike existing attacks, TrojFair is model-agnostic and crafts a Trojaned model that functions accurately and equitably for clean inputs. However, it displays discriminatory behaviors (producing both incorrect and unfair results) for specific groups with tainted inputs containing a trigger. TrojFair is a stealthy fairness attack that is resilient to existing model fairness audition detectors since the model for clean inputs is fair. TrojFair achieves a target group attack success rate exceeding 88.77%, with an average accuracy loss less than 0.44%. It also maintains a high discriminative score between the target and non-target groups across various datasets and models.

Muffin: A Framework Toward Multi-Dimension AI Fairness by Uniting Off-the-Shelf Models

Aug 26, 2023

Abstract: Model fairness (a.k.a., bias) has become one of the most critical problems in a wide range of AI applications. An unfair model in autonomous driving may cause a traffic accident if corner cases (e.g., extreme weather) cannot be fairly regarded; or it will incur healthcare disparities if the AI model misdiagnoses a certain group of people (e.g., brown and black skin). In recent years, there have been emerging research works on addressing unfairness, and they mainly focus on a single unfair attribute, like skin tone; however, real-world data commonly have multiple attributes, among which unfairness can exist in more than one attribute, called 'multi-dimensional fairness'. In this paper, we first reveal a strong correlation between the different unfair attributes, i.e., optimizing fairness on one attribute will lead to the collapse of others. Then, we propose a novel Multi-Dimension Fairness framework, namely Muffin, which includes an automatic tool to unite off-the-shelf models to improve the fairness on multiple attributes simultaneously. Case studies on dermatology datasets with two unfair attributes show that the existing approach can achieve 21.05% fairness improvement on the first attribute while it makes the second attribute unfair by 1.85%. On the other hand, the proposed Muffin can unite multiple models to simultaneously achieve 26.32% and 20.37% fairness improvements on the two attributes; meanwhile, it obtains a 5.58% accuracy gain.

Federated Self-Supervised Contrastive Learning and Masked Autoencoder for Dermatological Disease Diagnosis

Aug 24, 2022

Abstract: In dermatological disease diagnosis, the private data collected by mobile dermatology assistants exist on distributed mobile devices of patients. Federated learning (FL) can use decentralized data to train models while keeping data local. Existing FL methods assume all the data have labels. However, medical data often comes without full labels due to high labeling costs. Self-supervised learning (SSL) methods, such as contrastive learning (CL) and masked autoencoders (MAE), can leverage the unlabeled data to pre-train models, followed by fine-tuning with limited labels. However, combining SSL and FL has unique challenges. For example, CL requires diverse data but each device only has limited data. For MAE, while Vision Transformer (ViT) based MAE has higher accuracy than CNNs in centralized learning, MAE's performance in FL with unlabeled data has not been investigated. Besides, the ViT synchronization between the server and clients is different from traditional CNNs. Therefore, special synchronization methods need to be designed. In this work, we propose two federated self-supervised learning frameworks for dermatological disease diagnosis with limited labels. The first one features lower computation costs, suitable for mobile devices. The second one features high accuracy and fits high-performance servers. Based on CL, we propose federated contrastive learning with feature sharing (FedCLF). Features are shared for diverse contrastive information without sharing raw data for privacy. Based on MAE, we propose FedMAE. Knowledge split separates the global and local knowledge learned from each client. Only global knowledge is aggregated for higher generalization performance. Experiments on dermatological disease datasets show superior accuracy of the proposed frameworks over state-of-the-art methods.

The Larger The Fairer? Small Neural Networks Can Achieve Fairness for Edge Devices

Feb 23, 2022

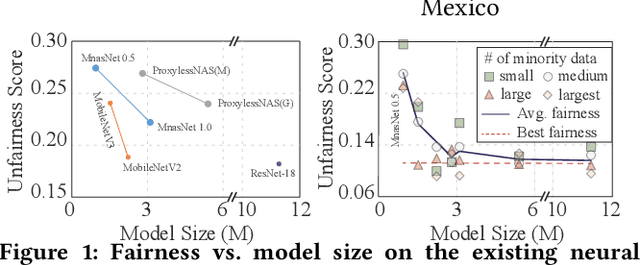

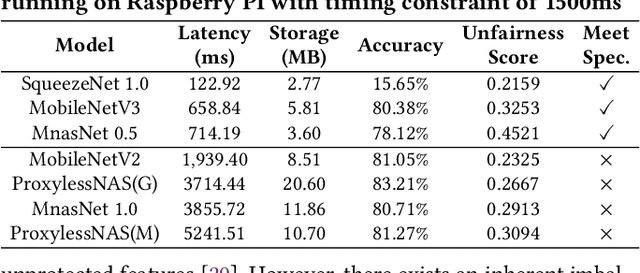

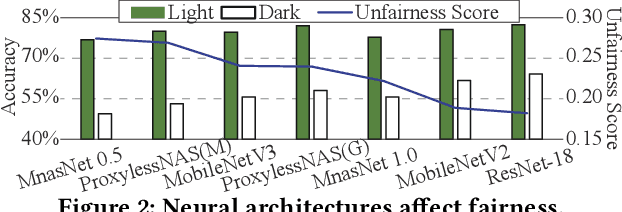

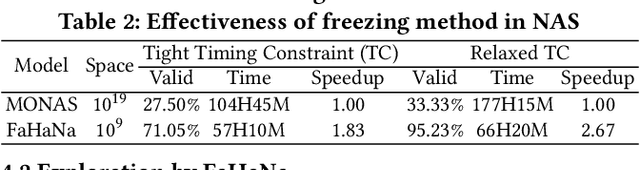

Abstract: Along with the progress of AI democratization, neural networks are being deployed more frequently in edge devices for a wide range of applications. Fairness concerns gradually emerge in many applications, such as face recognition and mobile healthcare. One fundamental question arises: what will be the fairest neural architecture for edge devices? By examining the existing neural networks, we observe that larger networks typically are fairer. However, edge devices call for smaller neural architectures to meet hardware specifications. To address this challenge, this work proposes a novel Fairness- and Hardware-aware Neural architecture search framework, namely FaHaNa. Coupled with a model freezing approach, FaHaNa can efficiently search for neural networks with balanced fairness and accuracy, while guaranteeing that they meet hardware specifications. Results show that FaHaNa can identify a series of neural networks with higher fairness and accuracy on a dermatology dataset. Targeting edge devices, FaHaNa finds a neural architecture with slightly higher accuracy, 5.28x smaller size, and a 15.14% higher fairness score compared with MobileNetV2; meanwhile, on Raspberry Pi and Odroid XU-4, it achieves 5.75x and 5.79x speedup.
