Alaina J. James

Federated Self-Supervised Contrastive Learning and Masked Autoencoder for Dermatological Disease Diagnosis

Aug 24, 2022

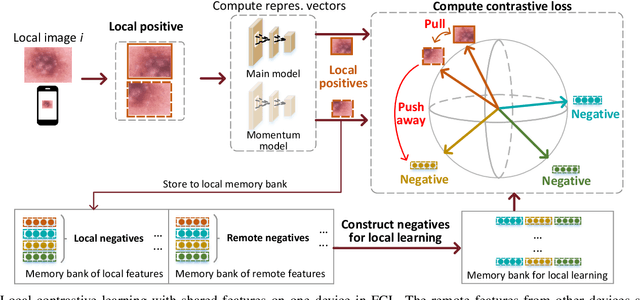

Abstract: In dermatological disease diagnosis, the private data collected by mobile dermatology assistants exist on distributed mobile devices of patients. Federated learning (FL) can use decentralized data to train models while keeping data local. Existing FL methods assume all the data have labels. However, medical data often come without full labels due to high labeling costs. Self-supervised learning (SSL) methods, such as contrastive learning (CL) and masked autoencoders (MAE), can leverage the unlabeled data to pre-train models, followed by fine-tuning with limited labels. However, combining SSL and FL has unique challenges. For example, CL requires diverse data, but each device only has limited data. For MAE, while Vision Transformer (ViT) based MAE has higher accuracy than CNNs in centralized learning, MAE's performance in FL with unlabeled data has not been investigated. Besides, the ViT synchronization between the server and clients is different from that of traditional CNNs. Therefore, special synchronization methods need to be designed. In this work, we propose two federated self-supervised learning frameworks for dermatological disease diagnosis with limited labels. The first one features lower computation costs and is suitable for mobile devices. The second one features high accuracy and fits high-performance servers. Based on CL, we propose federated contrastive learning with feature sharing (FedCLF). Features are shared to provide diverse contrastive information, while raw data are not shared, to preserve privacy. Based on MAE, we propose FedMAE. Knowledge split separates the global and local knowledge learned from each client. Only global knowledge is aggregated for higher generalization performance. Experiments on dermatological disease datasets show superior accuracy of the proposed frameworks over state-of-the-art methods.

Federated Contrastive Learning for Dermatological Disease Diagnosis via On-device Learning

Feb 14, 2022

Abstract: Deep learning models have been deployed in an increasing number of edge and mobile devices to provide healthcare. These models rely on training with a tremendous amount of labeled data to achieve high accuracy. However, for medical applications such as dermatological disease diagnosis, the private data collected by mobile dermatology assistants exist on distributed mobile devices of patients, and each device only has a limited amount of data. Directly learning from limited data greatly deteriorates the performance of learned models. Federated learning (FL) can train models by using data distributed on devices while keeping the data local for privacy. Existing works on FL assume all the data have ground-truth labels. However, medical data often come without any accompanying labels, since labeling requires expertise and results in prohibitively high labor costs. The recently developed self-supervised learning approach, contrastive learning (CL), can leverage the unlabeled data to pre-train a model, after which the model is fine-tuned on limited labeled data for dermatological disease diagnosis. However, simply combining CL with FL as federated contrastive learning (FCL) results in ineffective learning, since CL requires diverse data for learning but each device only has limited data. In this work, we propose an on-device FCL framework for dermatological disease diagnosis with limited labels. Features are shared in the FCL pre-training process to provide diverse and accurate contrastive information. After that, the pre-trained model is fine-tuned with local labeled data independently on each device or collaboratively with supervised federated learning on all devices. Experiments on dermatological disease datasets show that the proposed framework effectively improves the recall and precision of dermatological disease diagnosis compared with state-of-the-art methods.
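
To illustrate how shared features can enlarge the pool of contrastive information available to a single device, here is a minimal sketch. It assumes an InfoNCE-style contrastive loss, which the abstract does not specify; the function names, the temperature value, and the toy feature vectors are all hypothetical and are not taken from the paper.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def info_nce(anchor, positive, negatives, temperature=0.5):
    """InfoNCE-style loss (an assumed choice, not confirmed by the abstract).

    The loss is low when the anchor is close to its positive and far from
    every negative feature.
    """
    a = normalize(anchor)
    pos = math.exp(dot(a, normalize(positive)) / temperature)
    neg = sum(math.exp(dot(a, normalize(n)) / temperature) for n in negatives)
    return -math.log(pos / (pos + neg))


# Hypothetical toy features: two views of one local image.
anchor = [1.0, 0.0]
positive = [0.9, 0.1]

# Negatives computed from the device's own (limited) data.
local_negatives = [[0.0, 1.0], [-1.0, 0.0]]

# Hypothetical features received from other devices; only features are
# exchanged, never raw images.
shared_features = [[0.6, 0.8], [-0.6, 0.8]]

loss_local = info_nce(anchor, positive, local_negatives)
loss_shared = info_nce(anchor, positive, local_negatives + shared_features)
```

With the extra negatives, the anchor is contrasted against a more diverse set of features, so the loss for the same anchor and positive pair is larger and provides a stronger training signal than the local negatives alone.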
