Yeping Hu

Towards Generalizable and Interpretable Motion Prediction: A Deep Variational Bayes Approach

Mar 10, 2024

Abstract:Estimating the potential behavior of the surrounding human-driven vehicles is crucial for the safety of autonomous vehicles in a mixed traffic flow. Recent state-of-the-art methods have achieved accurate prediction using deep neural networks. However, these end-to-end models are usually black boxes with weak interpretability and generalizability. This paper proposes the Goal-based Neural Variational Agent (GNeVA), an interpretable generative model for motion prediction with robust generalizability to out-of-distribution cases. For interpretability, the model achieves target-driven motion prediction by estimating the spatial distribution of long-term destinations with a variational mixture of Gaussians. We identify a causal structure among maps and agents' histories and derive a variational posterior to enhance generalizability. Experiments on motion prediction datasets validate that the fitted model is interpretable and generalizable and achieves performance comparable to state-of-the-art results.

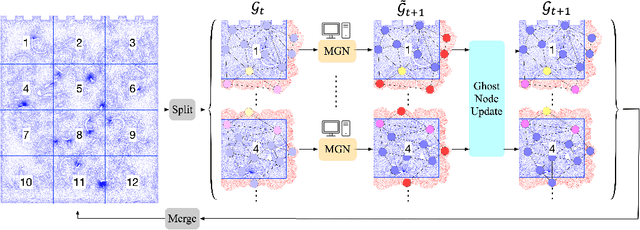

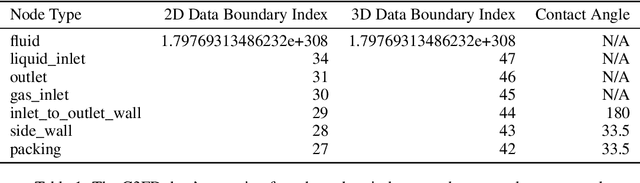

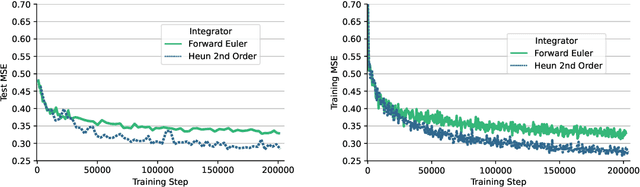

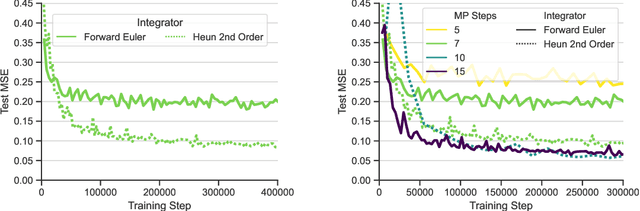

Scientific Computing Algorithms to Learn Enhanced Scalable Surrogates for Mesh Physics

Apr 01, 2023

Abstract:Data-driven modeling approaches can produce fast surrogates to study large-scale physics problems. Among them, graph neural networks (GNNs) that operate on mesh-based data are desirable because they possess inductive biases that promote physical faithfulness, but hardware limitations have precluded their application to large computational domains. We show that it is *possible* to train a class of GNN surrogates on 3D meshes. We scale MeshGraphNets (MGN), a subclass of GNNs for mesh-based physics modeling, via our domain decomposition approach to facilitate training that is mathematically equivalent to training on the whole domain under certain conditions. With this, we were able to train MGN on meshes with *millions* of nodes to generate computational fluid dynamics (CFD) simulations. Furthermore, we show how to enhance MGN via higher-order numerical integration, which can reduce MGN's error and training time. We validated our methods on an accompanying dataset of 3D $\text{CO}_2$-capture CFD simulations on a 3.1M-node mesh. This work presents a practical path to scaling MGN for real-world applications.

Editing Driver Character: Socially-Controllable Behavior Generation for Interactive Traffic Simulation

Mar 24, 2023

Abstract:Traffic simulation plays a crucial role in evaluating and improving autonomous driving planning systems. After being deployed on public roads, autonomous vehicles need to interact with human road participants with different social preferences (e.g., selfish or courteous human drivers). To ensure that autonomous vehicles take safe and efficient maneuvers in different interactive traffic scenarios, we should be able to evaluate autonomous vehicles against reactive agents with different social characteristics in the simulation environment. We propose a socially-controllable behavior generation (SCBG) model for this purpose, which allows the users to specify the level of courtesy of the generated trajectory while ensuring realistic and human-like trajectory generation through learning from real-world driving data. Specifically, we define a novel and differentiable measure to quantify the level of courtesy of driving behavior, leveraging marginal and conditional behavior prediction models trained from real-world driving data. The proposed courtesy measure allows us to auto-label the courtesy levels of trajectories from real-world driving data and conveniently train an SCBG model generating trajectories based on the input courtesy values. We examined the SCBG model on the Waymo Open Motion Dataset (WOMD) and showed that we were able to control the SCBG model to generate realistic driving behaviors with desired courtesy levels. Interestingly, we found that the SCBG model was able to identify different motion patterns of courteous behaviors according to the scenarios.

Analyzing and Enhancing Closed-loop Stability in Reactive Simulation

Aug 09, 2022

Abstract:Simulation has played an important role in efficiently evaluating self-driving vehicles in terms of scalability. Existing methods mostly rely on heuristic-based simulation, where traffic participants follow certain human-encoded rules that fail to generate complex human behaviors. Therefore, the reactive simulation concept is proposed to bridge the human behavior gap between simulation and real-world traffic scenarios by leveraging real-world data. However, these reactive models can easily generate unreasonable behaviors after a few steps of simulation, where we regard the model as losing its stability. To the best of our knowledge, no work has explicitly discussed and analyzed the stability of the reactive simulation framework. In this paper, we aim to provide a thorough stability analysis of the reactive simulation and propose a solution to enhance the stability. Specifically, we first propose a new reactive simulation framework, where we discover that the smoothness and consistency of the simulated state sequences are crucial factors to stability. We then incorporate the kinematic vehicle model into the framework to improve the closed-loop stability of the reactive simulation. Furthermore, along with commonly-used metrics, several novel metrics are proposed in this paper to better analyze the simulation performance.

Generalizability Analysis of Graph-based Trajectory Predictor with Vectorized Representation

Aug 06, 2022

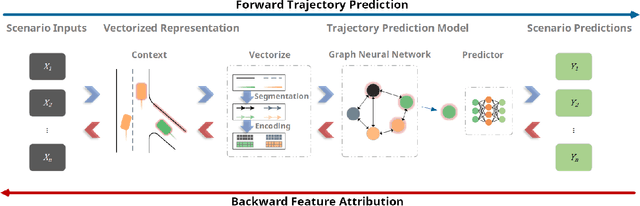

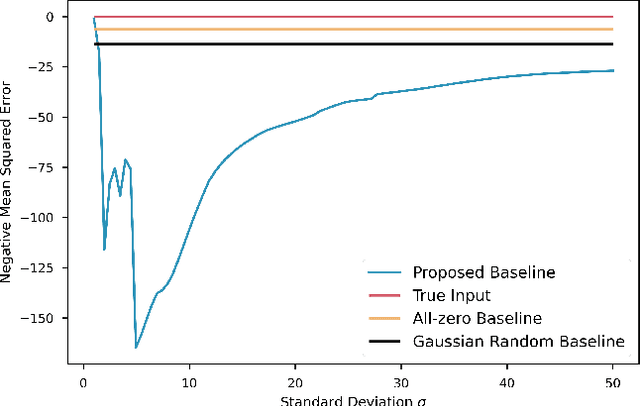

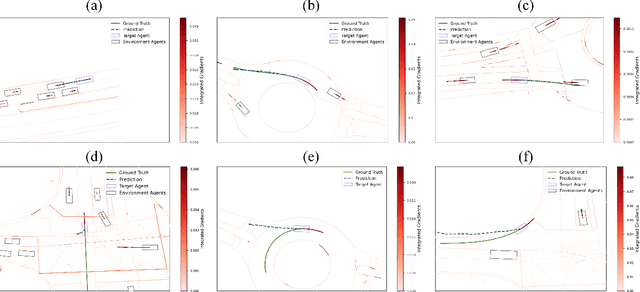

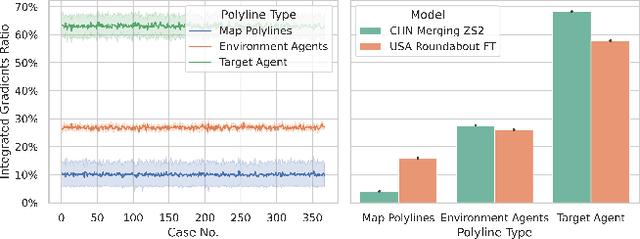

Abstract:Trajectory prediction is one of the essential tasks for autonomous vehicles. Recent progress in machine learning gave birth to a series of advanced trajectory prediction algorithms. Lately, the effectiveness of using graph neural networks (GNNs) with vectorized representations for trajectory prediction has been demonstrated by many researchers. Nonetheless, these algorithms either pay little attention to models' generalizability across various scenarios or simply assume training and test data follow similar statistics. In fact, when test scenarios are unseen or Out-of-Distribution (OOD), the resulting train-test domain shift usually leads to significant degradation in prediction performance, which will impact downstream modules and eventually lead to severe accidents. Therefore, it is of great importance to thoroughly investigate the prediction models in terms of their generalizability, which can not only help identify their weaknesses but also provide insights on how to improve these models. This paper proposes a generalizability analysis framework using feature attribution methods to help interpret black-box models. For the case study, we provide an in-depth generalizability analysis of one of the state-of-the-art graph-based trajectory predictors that utilize vectorized representation. Results show significant performance degradation due to domain shift, and feature attribution provides insights to identify potential causes of these problems. Finally, we summarize the common prediction challenges and show how weighting biases induced by the training process can degrade accuracy.

Transferable and Adaptable Driving Behavior Prediction

Feb 13, 2022

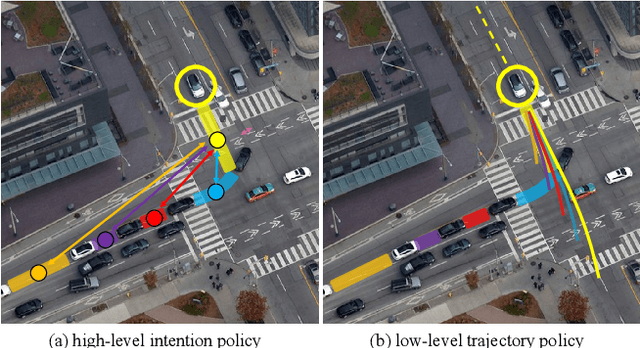

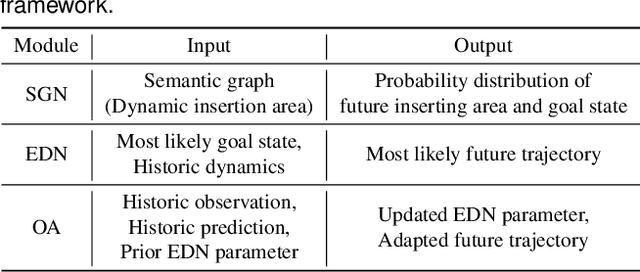

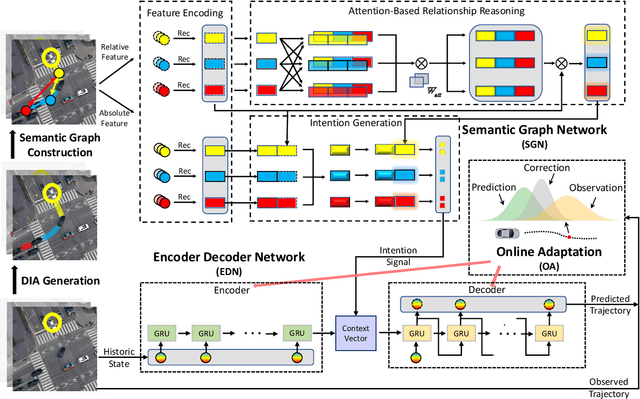

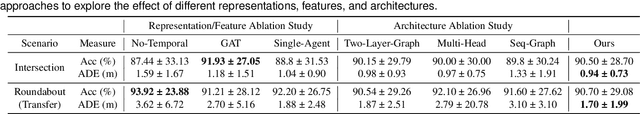

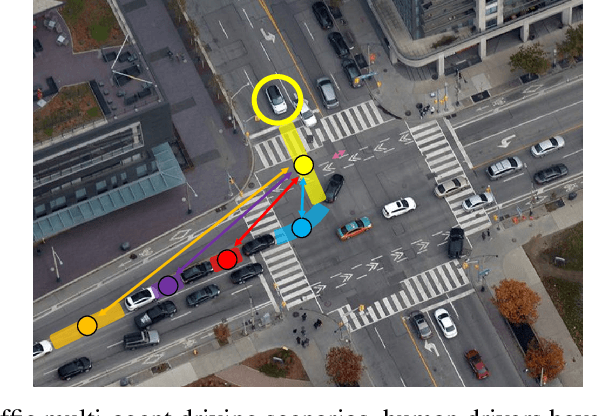

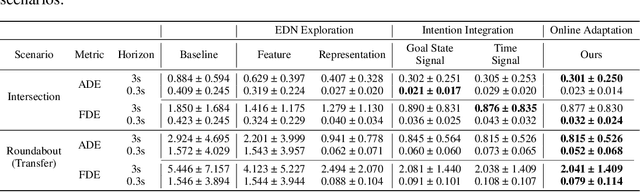

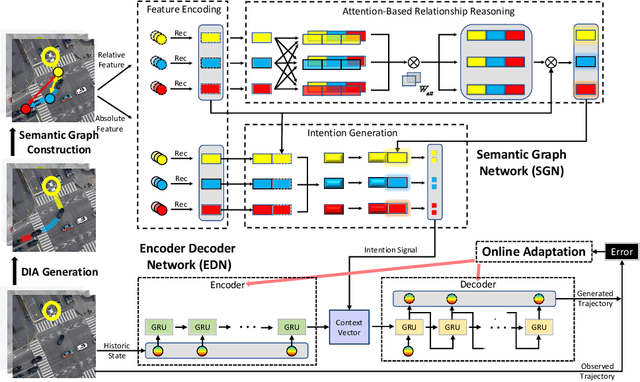

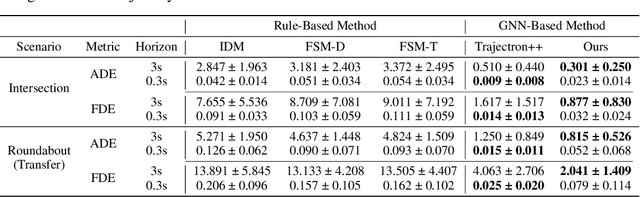

Abstract:While autonomous vehicles still struggle to solve challenging situations during on-road driving, humans have long mastered the essence of driving with efficient, transferable, and adaptable driving capability. By mimicking humans' cognition model and semantic understanding during driving, we propose HATN, a hierarchical framework to generate high-quality, transferable, and adaptable predictions for driving behaviors in multi-agent dense-traffic environments. Our hierarchical method consists of a high-level intention identification policy and a low-level trajectory generation policy. We introduce a novel semantic sub-task definition and generic state representation for each sub-task. With these techniques, the hierarchical framework is transferable across different driving scenarios. Besides, our model is able to capture variations of driving behaviors among individuals and scenarios by an online adaptation module. We demonstrate our algorithms in the task of trajectory prediction for real traffic data at intersections and roundabouts from the INTERACTION dataset. Through extensive numerical studies, it is evident that our method significantly outperformed other methods in terms of prediction accuracy, transferability, and adaptability. Pushing the state-of-the-art performance by a considerable margin, we also provide a cognitive view of understanding the driving behavior behind such improvement. We highlight that transferability and adaptability deserve more research attention and effort in the future, not only because they promise performance gains for prediction and planning algorithms but, more fundamentally, because they are crucial for the scalable and general deployment of autonomous vehicles.

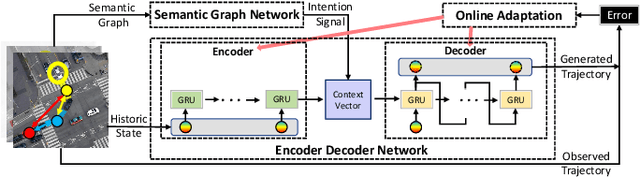

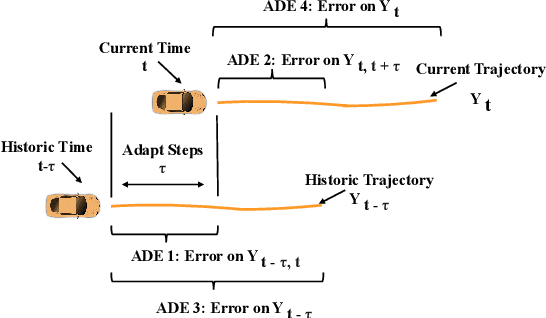

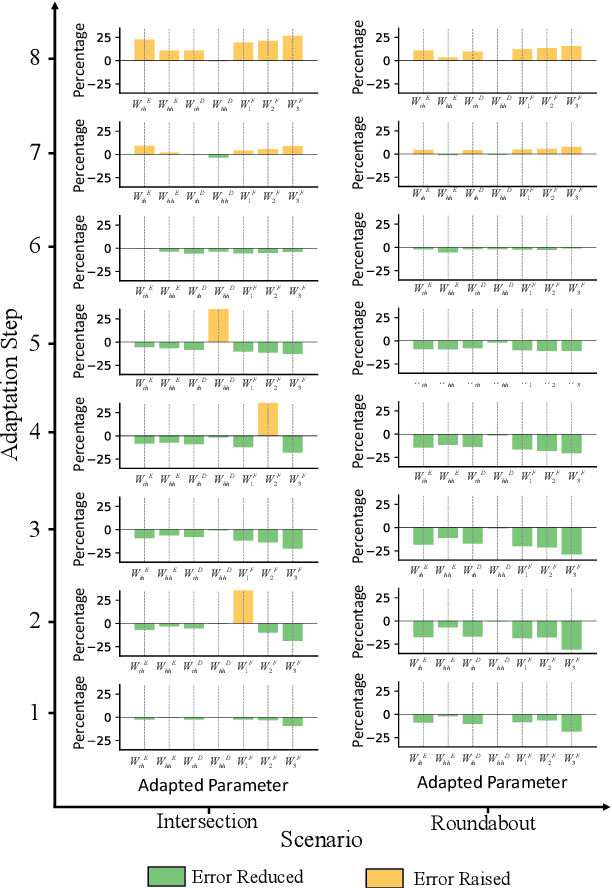

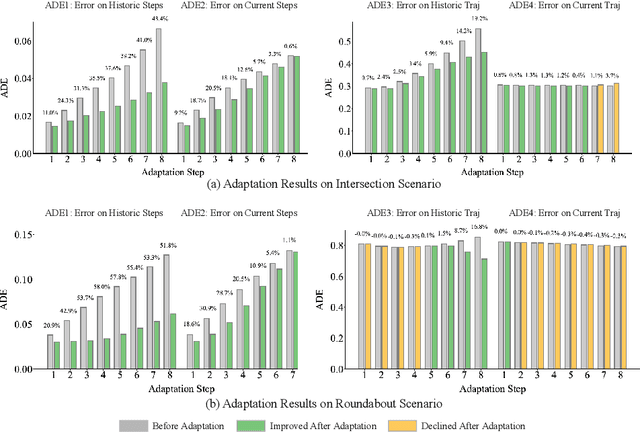

Online Adaptation of Neural Network Models by Modified Extended Kalman Filter for Customizable and Transferable Driving Behavior Prediction

Dec 09, 2021

Abstract:High-fidelity behavior prediction of human drivers is crucial for efficient and safe deployment of autonomous vehicles, which is challenging due to the stochasticity, heterogeneity, and time-varying nature of human behaviors. On one hand, the trained prediction model can only capture the motion pattern in an average sense, while the nuances among individuals can hardly be reflected. On the other hand, the prediction model trained on the training set may not generalize to the testing set, which may come from a different scenario or data distribution, resulting in low transferability and generalizability. In this paper, we applied a $\tau$-step modified Extended Kalman Filter parameter adaptation algorithm (MEKF$_\lambda$) to the driving behavior prediction task, which has not been studied before in the literature. With the feedback of the observed trajectory, the algorithm is applied to neural-network-based models to improve the performance of driving behavior predictions across different human subjects and scenarios. A new set of metrics is proposed for systematic evaluation of online adaptation performance in reducing the prediction error for different individuals and scenarios. Empirical studies on the best layer in the model and steps of observation to adapt are also provided.

Causal-based Time Series Domain Generalization for Vehicle Intention Prediction

Dec 03, 2021

Abstract:Accurately predicting possible behaviors of traffic participants is an essential capability for autonomous vehicles. Since autonomous vehicles need to navigate in dynamically changing environments, they are expected to make accurate predictions regardless of where they are and what driving circumstances they encounter. Therefore, generalization capability to unseen domains is crucial for prediction models when autonomous vehicles are deployed in the real world. In this paper, we aim to address the domain generalization problem for vehicle intention prediction tasks and propose a causal-based time series domain generalization (CTSDG) model. We construct a structural causal model for vehicle intention prediction tasks to learn an invariant representation of input driving data for domain generalization. We further integrate a recurrent latent variable model into our structural causal model to better capture temporal latent dependencies from time-series input data. The effectiveness of our approach is evaluated via real-world driving data. We demonstrate that our proposed method consistently improves prediction accuracy compared to other state-of-the-art domain generalization and behavior prediction methods.

Hierarchical Adaptable and Transferable Networks (HATN) for Driving Behavior Prediction

Nov 01, 2021

Abstract:While autonomous vehicles still struggle to solve challenging situations during on-road driving, humans have long mastered the essence of driving with efficient, transferable, and adaptable driving capability. By mimicking humans' cognition model and semantic understanding during driving, we present HATN, a hierarchical framework to generate high-quality driving behaviors in multi-agent dense-traffic environments. Our hierarchical method consists of a high-level intention identification policy and a low-level action generation policy. With the semantic sub-task definition and generic state representation, the hierarchical framework is transferable across different driving scenarios. Besides, our model is also able to capture variations of driving behaviors among individuals and scenarios by an online adaptation module. We demonstrate our algorithms in the task of trajectory prediction for real traffic data at intersections and roundabouts, where we conducted extensive studies of the proposed method and demonstrated how our method outperformed other methods in terms of prediction accuracy and transferability.

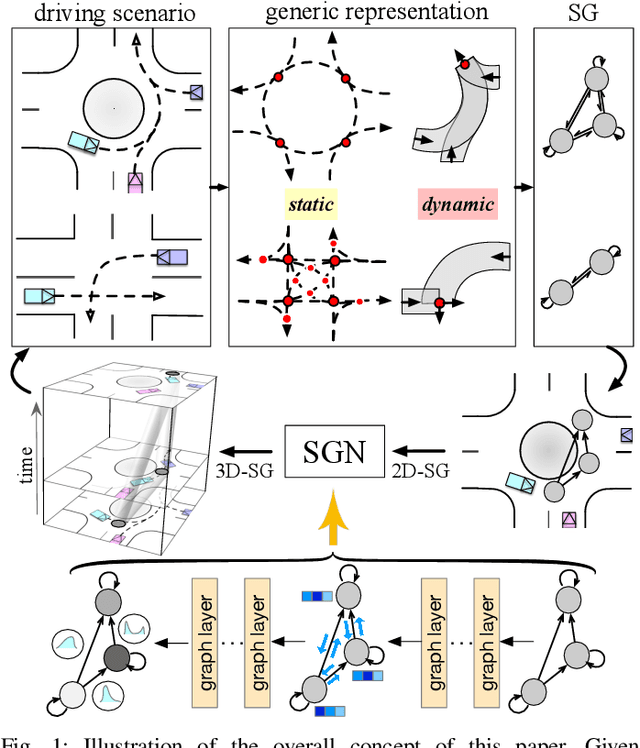

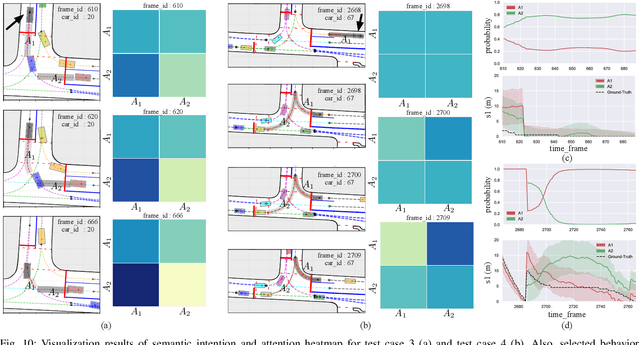

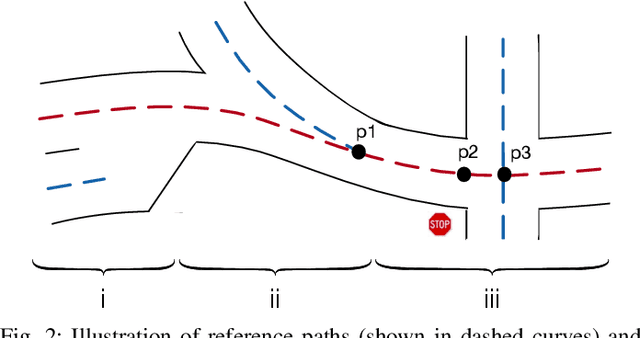

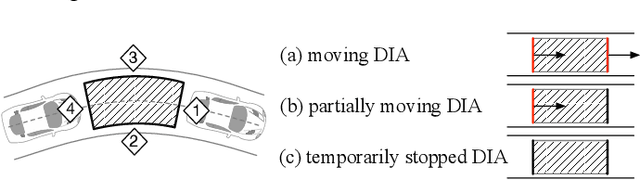

Scenario-Transferable Semantic Graph Reasoning for Interaction-Aware Probabilistic Prediction

Apr 07, 2020

Abstract:Accurately predicting the possible behaviors of traffic participants is an essential capability for autonomous vehicles. Since autonomous vehicles need to navigate in dynamically changing environments, they are expected to make accurate predictions regardless of where they are and what driving circumstances they encounter. A number of methodologies have been proposed to solve prediction problems under different traffic situations. However, these works either focus on one particular driving scenario (e.g., highway, intersection, or roundabout) or do not take sufficient environment information (e.g., road topology, traffic rules, and surrounding agents) into account. In fact, the limitation to a certain scenario is mainly due to the lack of generic representations of the environment. The insufficiency of environment information further limits the flexibility and transferability of the predictor. In this paper, we propose a scenario-transferable and interaction-aware probabilistic prediction algorithm based on semantic graph reasoning, which predicts behaviors of selected agents. We put forward generic representations for various environment information and utilize them as building blocks to construct their spatio-temporal structural relations. We then take advantage of these structured representations to develop a flexible and transferable prediction algorithm, where the predictor can be directly used under unforeseen driving circumstances that are completely different from training scenarios. The proposed algorithm is thoroughly examined under several complicated real-world driving scenarios to demonstrate its flexibility and transferability with the generic representation for autonomous driving systems.
