Yang Shang

Fusion-Restoration Image Processing Algorithm to Improve the High-Temperature Deformation Measurement

Jan 19, 2026

Abstract: In the deformation measurement of high-temperature structures, image degradation caused by thermal radiation and random errors introduced by heat haze restrict the accuracy and effectiveness of deformation measurement. This work aims to suppress thermal radiation and heat haze using fusion-restoration image processing methods, thereby improving the accuracy and effectiveness of digital image correlation (DIC) in high-temperature deformation measurement. For image degradation caused by thermal radiation, the image is decomposed, based on a layered image representation, into positive and negative channels for parallel processing, and its quality is then optimized by multi-exposure image fusion. To counteract the high-frequency, random errors introduced by heat haze, we adopt the feature similarity index (FSIM) as the objective function to guide the iterative optimization of model parameters, and the grayscale average algorithm is applied to equalize anomalous gray values, thereby reducing measurement error. The proposed multi-exposure image fusion algorithm effectively suppresses image degradation caused by complex illumination conditions, boosting the effective computation area from 26% to 50% for under-exposed images and from 32% to 40% for over-exposed images without degrading measurement accuracy in the experiment. Meanwhile, image restoration combined with the grayscale average algorithm reduces static thermal deformation measurement errors: the error in ε_xx is reduced by 85.3%, while the errors in ε_yy and γ_xy are reduced by 36.0% and 36.4%, respectively. We present image processing methods to suppress the interference of thermal radiation and heat haze in high-temperature deformation measurement using DIC. The experimental results verify that the proposed method effectively improves image quality, reduces deformation measurement errors, and has potential application value in thermal deformation measurement.

Robust Subpixel Localization of Diagonal Markers in Large-Scale Navigation via Multi-Layer Screening and Adaptive Matching

Jan 13, 2026

Abstract: This paper proposes a robust, high-precision positioning methodology to address localization failures arising from complex background interference in large-scale flight navigation and the computational inefficiency inherent in conventional sliding window matching techniques. The proposed methodology employs a three-tiered framework incorporating multi-layer corner screening and adaptive template matching. First, dimensionality is reduced through illumination equalization and structural information extraction. Second, a coarse-to-fine candidate selection strategy minimizes sliding window computational costs, enabling rapid estimation of the marker's position. Finally, adaptive templates are generated for candidate points, achieving subpixel precision through improved template matching with correlation coefficient extremum fitting. Experimental results demonstrate the method's effectiveness in extracting and localizing diagonal markers in complex, large-scale environments, making it well suited to field-of-view measurement in navigation tasks.
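The abstract's final step, extremum fitting of the correlation coefficient, can be illustrated with a generic sketch. The code below is not the authors' algorithm: it assumes the simplest common variant, a one-dimensional parabola fitted through the integer peak and its two neighbours along each axis, and the function name `subpixel_peak` and the synthetic correlation surface are hypothetical.

```python
import numpy as np

def subpixel_peak(corr):
    """Refine the integer argmax of a 2D correlation map with 1D parabola fits."""
    corr = np.asarray(corr, dtype=float)
    r, c = np.unravel_index(np.argmax(corr), corr.shape)

    def offset(left, centre, right):
        # Vertex of the parabola through (-1, left), (0, centre), (1, right).
        denom = left - 2.0 * centre + right
        return 0.0 if denom == 0 else 0.5 * (left - right) / denom

    dr = dc = 0.0
    # Only refine when both neighbours exist (peak not on the border).
    if 0 < r < corr.shape[0] - 1:
        dr = offset(corr[r - 1, c], corr[r, c], corr[r + 1, c])
    if 0 < c < corr.shape[1] - 1:
        dc = offset(corr[r, c - 1], corr[r, c], corr[r, c + 1])
    return r + dr, c + dc

# Hypothetical correlation surface: a paraboloid whose true peak lies
# between pixel centres, at row 4.3 and column 5.6.
rows, cols = np.mgrid[0:9, 0:11]
corr = 1.0 - 0.01 * ((rows - 4.3) ** 2 + (cols - 5.6) ** 2)
peak_row, peak_col = subpixel_peak(corr)
```

On a real correlation map the surface is only approximately quadratic near the peak, so the recovered offset is an estimate rather than an exact value; fitting a 2D surface over a larger neighbourhood is a common alternative.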

A Hardware-Algorithm Co-Designed Framework for HDR Imaging and Dehazing in Extreme Rocket Launch Environments

Jan 13, 2026

Abstract: Quantitative optical measurement of critical mechanical parameters -- such as plume flow fields, shock wave structures, and nozzle oscillations -- during rocket launch faces severe challenges due to extreme imaging conditions. Intense combustion creates dense particulate haze and luminance variations exceeding 120 dB, degrading image data and undermining subsequent photogrammetric and velocimetric analyses. To address these issues, we propose a hardware-algorithm co-design framework that combines a custom Spatially Varying Exposure (SVE) sensor with a physics-aware dehazing algorithm. The SVE sensor acquires multi-exposure data in a single shot, enabling robust haze assessment without relying on idealized atmospheric models. Our approach dynamically estimates haze density, performs region-adaptive illumination optimization, and applies multi-scale entropy-constrained fusion to effectively separate haze from scene radiance. Validated on real launch imagery and controlled experiments, the framework demonstrates superior performance in recovering physically accurate visual information of the plume and engine region. This offers a reliable image basis for extracting key mechanical parameters, including particle velocity, flow instability frequency, and structural vibration, thereby supporting precise quantitative analysis in extreme aerospace environments.

Event-based high temporal resolution measurement of shock wave motion field

Dec 27, 2025

Abstract: Accurate measurement of shock wave motion parameters with high spatiotemporal resolution is essential for applications such as power field testing and damage assessment. However, significant challenges are posed by the fast, uneven propagation of shock waves and unstable testing conditions. To address these challenges, a novel framework is proposed that utilizes multiple event cameras to estimate the asymmetry of shock waves, leveraging their high-speed and high-dynamic-range capabilities. Initially, a polar coordinate system is established, which encodes events to reveal shock wave propagation patterns, with adaptive region-of-interest (ROI) extraction through event offset calculations. Subsequently, shock wave front events are extracted using iterative slope analysis, exploiting the continuity of velocity changes. Finally, the geometric model relating events to shock wave motion parameters is derived from the event-based optical imaging model, along with the 3D reconstruction model. Through the above process, multi-angle shock wave measurement, motion field reconstruction, and explosive equivalence inversion are achieved. The results of the speed measurement are compared with those of the pressure sensors and the empirical formula, revealing a maximum error of 5.20% and a minimum error of 0.06%. The experimental results demonstrate that our method achieves high-precision measurement of the shock wave motion field with both high spatial and temporal resolution.

Optical Flow-Guided 6DoF Object Pose Tracking with an Event Camera

Dec 24, 2025

Abstract: Object pose tracking is one of the pivotal technologies in multimedia, attracting ever-growing attention in recent years. Existing methods employing traditional cameras encounter numerous challenges such as motion blur, sensor noise, partial occlusion, and changing lighting conditions. Emerging bio-inspired sensors, particularly event cameras, offer advantages such as high dynamic range and low latency, which hold the potential to address these challenges. In this work, we present an optical flow-guided 6DoF object pose tracking method with an event camera. A 2D-3D hybrid feature extraction strategy is first used to detect corners and edges from events and object models, which characterizes object motion precisely. Then, we search for the optical flow of corners by maximizing the event-associated probability within a spatio-temporal window, and establish the correlation between corners and edges guided by optical flow. Furthermore, by minimizing the distances between corners and edges, the 6DoF object pose is iteratively optimized to achieve continuous pose tracking. Experimental results on both simulated and real events demonstrate that our method outperforms event-based state-of-the-art methods in terms of both accuracy and robustness.

Globally Optimal Solution to the Generalized Relative Pose Estimation Problem using Affine Correspondences

Dec 19, 2025

Abstract: Mobile platforms equipped with a multi-camera system and an inertial measurement unit (IMU), such as self-driving cars, are now widely used. The task of relative pose estimation using visual and inertial information has important applications in various fields. To improve the accuracy of relative pose estimation of multi-camera systems, we propose a globally optimal solver using affine correspondences to estimate the generalized relative pose with a known vertical direction. First, a cost function of the relative rotation angle is established after decoupling the rotation matrix and translation vector, which minimizes the algebraic error of geometric constraints from affine correspondences. Then, the global optimization problem is converted into two polynomials with two unknowns based on the characteristic equation and the condition that its first derivative is zero. Finally, the relative rotation angle can be solved using the polynomial eigenvalue solver, and the translation vector can be obtained from the eigenvector. In addition, a new linear solution is proposed for the case in which the relative rotation is small. The proposed solver is evaluated on synthetic data and real-world datasets. The experimental results demonstrate that our method outperforms comparable state-of-the-art methods in accuracy.

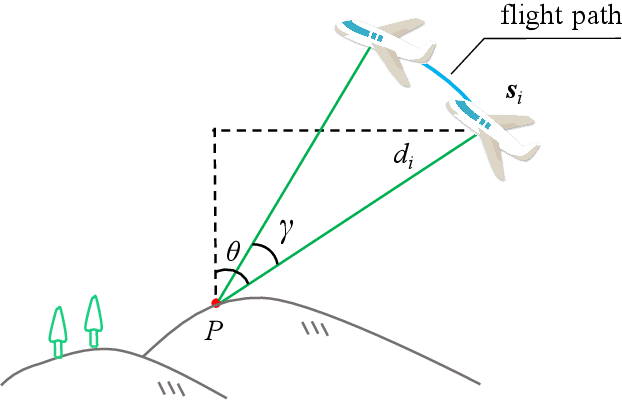

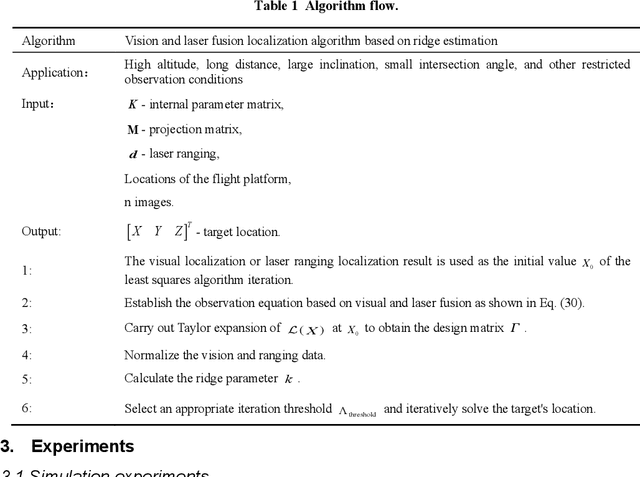

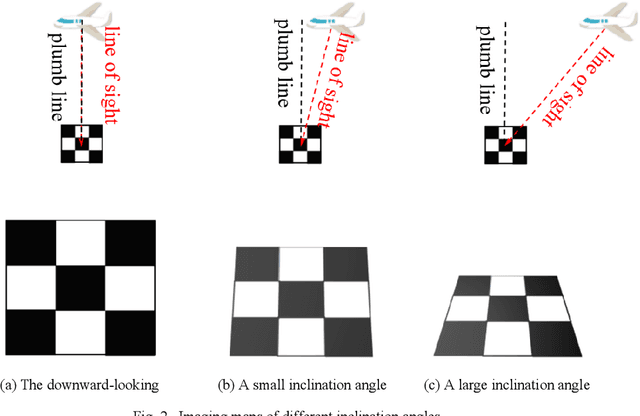

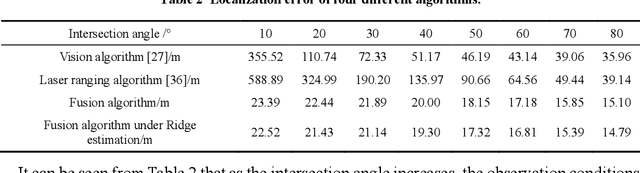

Ridge Estimation-Based Vision and Laser Ranging Fusion Localization Method for UAVs

Dec 18, 2025

Abstract: Tracking and measuring targets using a variety of sensors mounted on UAVs is an effective means of locating a target quickly and accurately. This paper proposes a fusion localization method based on ridge estimation, combining the rich scene information of sequential imagery with the high precision of laser ranging to enhance localization accuracy. Under limited observation conditions such as long distances, small intersection angles, and large inclination angles, the column vectors of the design matrix exhibit serious multicollinearity when the least squares estimation algorithm is used. This multicollinearity makes the problem ill-conditioned, resulting in significant instability and low robustness. Ridge estimation is introduced to mitigate the serious multicollinearity under limited observation conditions. Experimental results demonstrate that our method achieves higher localization accuracy than ground localization algorithms based on a single information source. Moreover, the introduction of ridge estimation effectively enhances robustness, particularly under limited observation conditions.
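To make the role of ridge estimation concrete, the following sketch contrasts ordinary least squares with the textbook ridge estimator, x = (AᵀA + kI)⁻¹Aᵀb, on a deliberately ill-conditioned design matrix. It is a generic illustration, not the paper's localization model: the synthetic matrix `A`, the ridge parameter `k = 1e-2`, and the function names are all assumptions chosen for the example.

```python
import numpy as np

def least_squares(A, b):
    """Ordinary least squares solution of A x ~= b."""
    return np.linalg.lstsq(A, b, rcond=None)[0]

def ridge(A, b, k):
    """Ridge estimate: solve (A^T A + k I) x = A^T b with k > 0."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + k * np.eye(n), A.T @ b)

rng = np.random.default_rng(0)

# Hypothetical design matrix with two nearly collinear columns, standing in
# for the multicollinearity described in the abstract.
t = rng.normal(size=50)
A = np.column_stack([t, t + 1e-4 * rng.normal(size=50)])
x_true = np.array([1.0, 1.0])
b = A @ x_true + 0.01 * rng.normal(size=50)  # noisy observations

x_ls = least_squares(A, b)
x_ridge = ridge(A, b, k=1e-2)

# Adding k to the diagonal lowers the condition number of the normal matrix.
cond_ls = np.linalg.cond(A.T @ A)
cond_ridge = np.linalg.cond(A.T @ A + 1e-2 * np.eye(2))
```

The ridge term trades a small bias for a large reduction in variance along the poorly observed direction. In practice `k` has to be selected, for example with a ridge trace or the L-curve, and the abstract does not say which rule the authors use.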

Flexible Camera Calibration using a Collimator System

Dec 18, 2025

Abstract: Camera calibration is a crucial step in photogrammetry and 3D vision applications. This paper introduces a novel camera calibration method using a designed collimator system. Our collimator system provides a reliable and controllable calibration environment for the camera. Exploiting the unique optical geometry of our collimator system, we introduce an angle invariance constraint and further prove that the relative motion between the calibration target and camera conforms to a spherical motion model. This constraint reduces the original 6DOF relative motion between target and camera to a 3DOF pure rotation. Using the spherical motion constraint, a closed-form linear solver for multiple images and a minimal solver for two images are proposed for camera calibration. Furthermore, we propose a single collimator image calibration algorithm based on the angle invariance constraint. This algorithm eliminates the requirement for camera motion, providing a novel solution for flexible and fast calibration. The performance of our method is evaluated in both synthetic and real-world experiments, which verify the feasibility of calibration using the collimator system and demonstrate that our method is superior to existing baseline methods. Demo code is available at https://github.com/LiangSK98/CollimatorCalibration

Collimator-assisted high-precision calibration method for event cameras

Dec 18, 2025

Abstract: Event cameras are a new type of brain-inspired visual sensor with advantages such as high dynamic range and high temporal resolution. The geometric calibration of event cameras, which involves determining their intrinsic and extrinsic parameters, particularly in long-range measurement scenarios, remains a significant challenge. To address the dual requirements of long-distance and high-precision measurement, we propose an event camera calibration method utilizing a collimator with flickering star-based patterns. The proposed method first linearly solves camera parameters using the sphere motion model of the collimator, followed by nonlinear optimization to refine these parameters with high precision. Through comprehensive real-world experiments across varying conditions, we demonstrate that the proposed method consistently outperforms existing event camera calibration methods in terms of accuracy and reliability.

* 4 pages, 3 figures

Asynchronous Event Stream Noise Filtering for High-frequency Structure Deformation Measurement

Dec 17, 2025

Abstract: Large-scale structures suffer high-frequency deformations due to complex loads. However, harsh lighting conditions and high equipment costs limit measurement methods based on traditional high-speed cameras. This paper proposes a method to measure high-frequency deformations by exploiting an event camera and LED markers. Firstly, observation noise is filtered based on the characteristics of the event stream generated by LED markers blinking and spatiotemporal correlation. Then, LED markers are extracted from the event stream after differentiating between motion-induced events and events from LED blinking, which enables the extraction of high-speed moving LED markers. Ultimately, high-frequency planar deformations are measured by a monocular event camera. Experimental results confirm the accuracy of our method in measuring high-frequency planar deformations.
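The spatiotemporal-correlation part of the noise filtering step can be sketched with a generic nearest-neighbour filter of the kind often applied to event streams. This is an assumption-laden illustration, not the paper's filter: it ignores the LED blinking characteristics entirely, and the `(t, x, y)` event layout, the function name `filter_events`, and the 5 ms window are hypothetical.

```python
import numpy as np

def filter_events(events, width, height, dt):
    """Keep an event only if one of its 8 neighbouring pixels fired within dt before it.

    events: iterable of (t, x, y) tuples sorted by timestamp t (seconds).
    """
    # Timestamp of the latest event per pixel, padded by one pixel on each
    # side so that border pixels need no special handling.
    last = np.full((height + 2, width + 2), -np.inf)
    kept = []
    for t, x, y in events:
        px, py = x + 1, y + 1
        window = last[py - 1:py + 2, px - 1:px + 2].copy()
        window[1, 1] = -np.inf  # ignore the pixel's own history
        if t - window.max() <= dt:
            kept.append((t, x, y))
        last[py, px] = t  # record the event even if it was rejected
    return kept

# Hypothetical stream: a small cluster of correlated events, one isolated
# event, and one event whose neighbours fired too long ago.
events = [
    (0.000, 10, 10),
    (0.001, 11, 10),
    (0.002, 10, 11),
    (0.500, 40, 5),
    (0.900, 11, 11),
]
kept = filter_events(events, width=64, height=48, dt=0.005)
```

A filter of this form always rejects the first event of a burst, since it has no earlier neighbour to support it. That loss is usually acceptable for dense streams such as those produced by a blinking marker.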

* 13 pages, 12 figures
