Xianwei Zhu

Step-GUI Technical Report

Dec 19, 2025

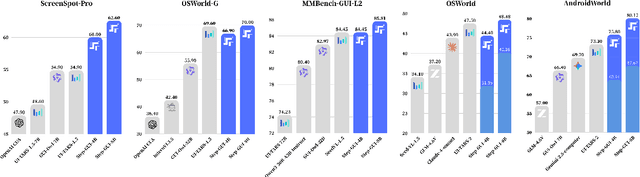

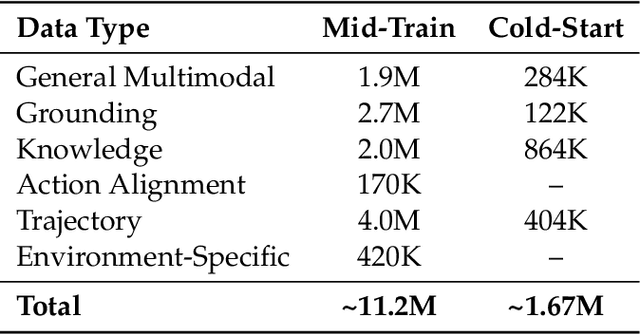

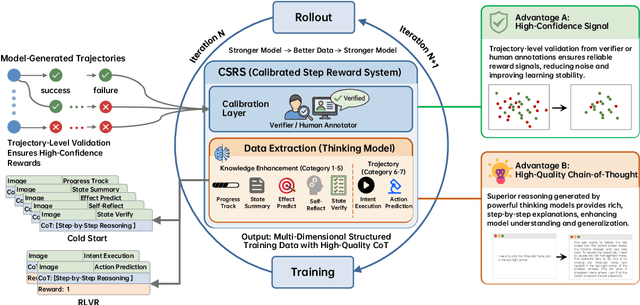

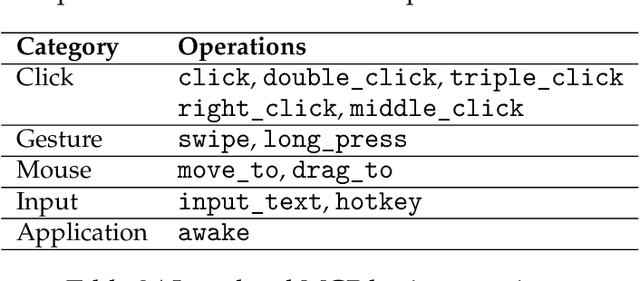

Abstract: Recent advances in multimodal large language models unlock unprecedented opportunities for GUI automation. However, a fundamental challenge remains: how to efficiently acquire high-quality training data while maintaining annotation reliability? We introduce a self-evolving training pipeline powered by the Calibrated Step Reward System, which converts model-generated trajectories into reliable training signals through trajectory-level calibration, achieving >90% annotation accuracy with 10-100x lower cost. Leveraging this pipeline, we introduce Step-GUI, a family of models (4B/8B) that achieves state-of-the-art GUI performance (8B: 80.2% AndroidWorld, 48.5% OSWorld, 62.6% ScreenShot-Pro) while maintaining robust general capabilities. As GUI agent capabilities improve, practical deployment demands standardized interfaces across heterogeneous devices while protecting user privacy. To this end, we propose GUI-MCP, the first Model Context Protocol for GUI automation, with a hierarchical architecture that combines low-level atomic operations and high-level task delegation to local specialist models, enabling high-privacy execution where sensitive data stays on-device. Finally, to assess whether agents can handle authentic everyday usage, we introduce AndroidDaily, a benchmark grounded in real-world mobile usage patterns with 3146 static actions and 235 end-to-end tasks across high-frequency daily scenarios (8B: static 89.91%, end-to-end 52.50%). Our work advances the development of practical GUI agents and demonstrates strong potential for real-world deployment in everyday digital interactions.

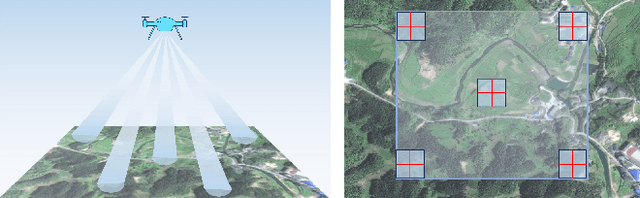

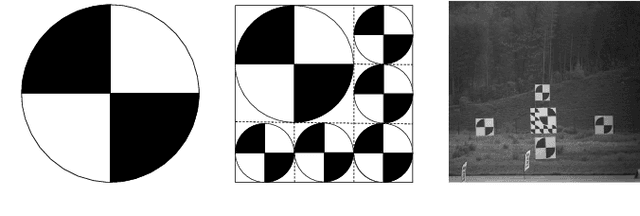

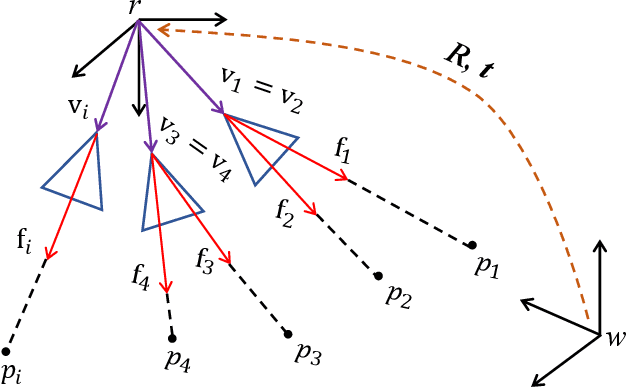

Accurate Pose Estimation for Flight Platforms based on Divergent Multi-Aperture Imaging System

Feb 27, 2025

Abstract: Vision-based pose estimation plays a crucial role in the autonomous navigation of flight platforms. However, the field of view and spatial resolution of the camera limit pose estimation accuracy. This paper designs a divergent multi-aperture imaging system (DMAIS), equivalent to a single imaging system, to achieve simultaneous observation of a large field of view and high spatial resolution. The DMAIS overcomes traditional observation limitations, allowing accurate pose estimation for the flight platform. Before conducting pose estimation, the DMAIS must be calibrated. To this end, we propose a calibration method for the DMAIS based on a 3D calibration field. The calibration process determines the imaging parameters of the DMAIS, which allows us to model the DMAIS as a generalized camera. Subsequently, a new algorithm for accurately determining the pose of the flight platform is introduced. We transform the absolute pose estimation problem into a nonlinear minimization problem. New optimality conditions are established for solving this problem based on Lagrange multipliers. Finally, real calibration experiments show the effectiveness and accuracy of the proposed method. Results from real flight experiments validate the system's ability to achieve centimeter-level positioning accuracy and arc-minute-level orientation accuracy.

CoreGuard: Safeguarding Foundational Capabilities of LLMs Against Model Stealing in Edge Deployment

Oct 16, 2024

Abstract: Proprietary large language models (LLMs) demonstrate exceptional generalization ability across various tasks. Additionally, deploying LLMs on edge devices is trending for efficiency and privacy reasons. However, edge deployment of proprietary LLMs introduces new security threats: attackers who obtain an edge-deployed LLM can easily use it as a base model for various tasks due to its high generalization ability, which we call foundational capability stealing. Unfortunately, existing model protection mechanisms are often task-specific and fail to protect general-purpose LLMs, as they mainly focus on protecting task-related parameters using trusted execution environments (TEEs). Although some recent TEE-based methods are able to protect the overall model parameters in a computation-efficient way, they still suffer from prohibitive communication costs between the TEE and the CPU/GPU, making them impractical to deploy for edge LLMs. To protect the foundational capabilities of edge LLMs, we propose CoreGuard, a computation- and communication-efficient model protection approach against model stealing on edge devices. The core component of CoreGuard is a lightweight and propagative authorization module residing in the TEE. Extensive experiments show that CoreGuard achieves the same security protection as black-box security guarantees with negligible overhead.